Projects

Research projects at the Empathic Computing Lab exploring systems that create understanding.

- 2023 - Now

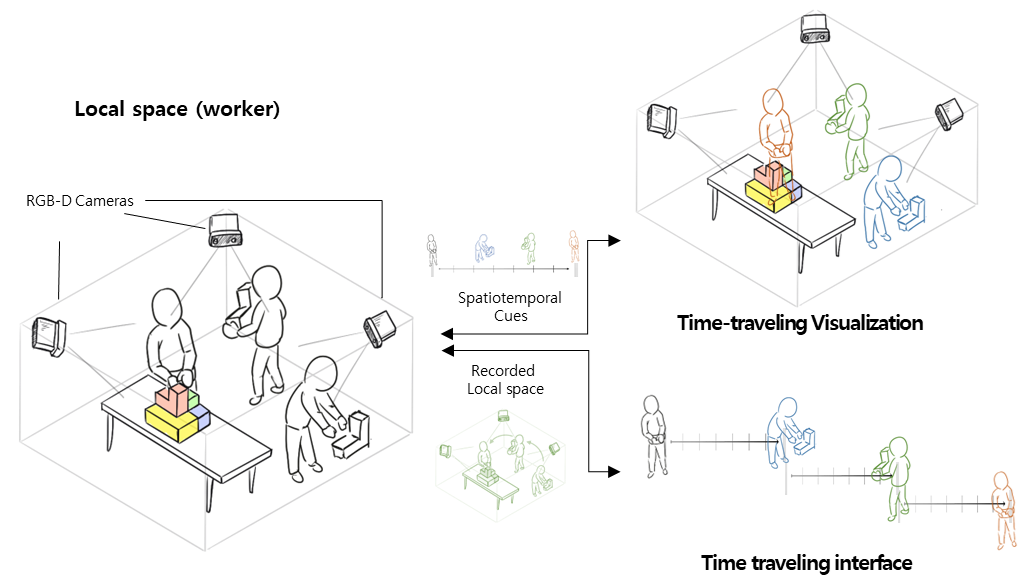

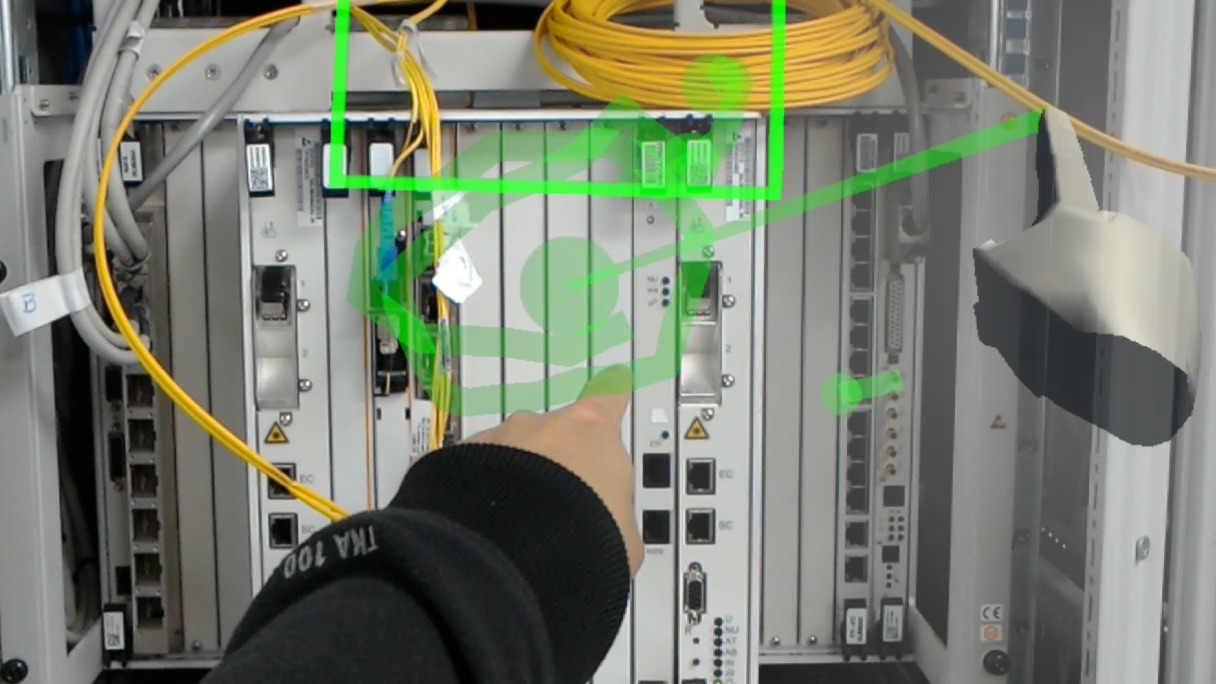

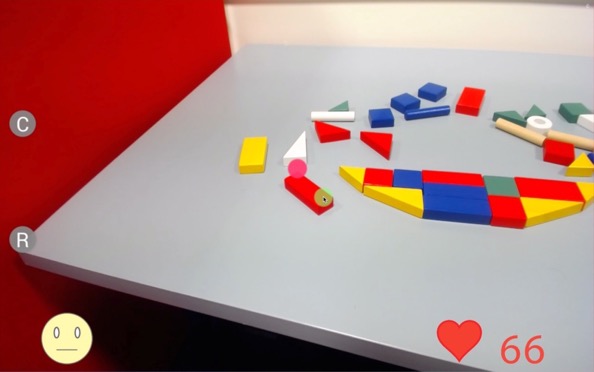

AR-based spatiotemporal interface and visualization for the physical task

This study aims to help people complete physical tasks, such as mechanical assembly or collaborative design, more efficiently by using augmented reality-based space-time visualization techniques. In particular, when disassembly and reassembly are required, 3D recording of past actions and playback visualization help the user recall the exact assembly order and position of objects in the task. The study proposes a novel method that combines 3D spatial information recording with augmented reality-based playback to support these types of physical tasks.

- 2017-2023

MPConnect: A Mixed Presence Mixed Reality System

This project explores how a Mixed Presence Mixed Reality System can enhance remote collaboration. Collaborative Mixed Reality (MR) is a popular area of research, but most work has focused on one-to-one systems where either both collaborators are co-located or the collaborators are remote from one another. For example, remote users might collaborate in a shared Virtual Reality (VR) system, or a local worker might use an Augmented Reality (AR) display to connect with a remote expert to help them complete a task.

- 2017-2020

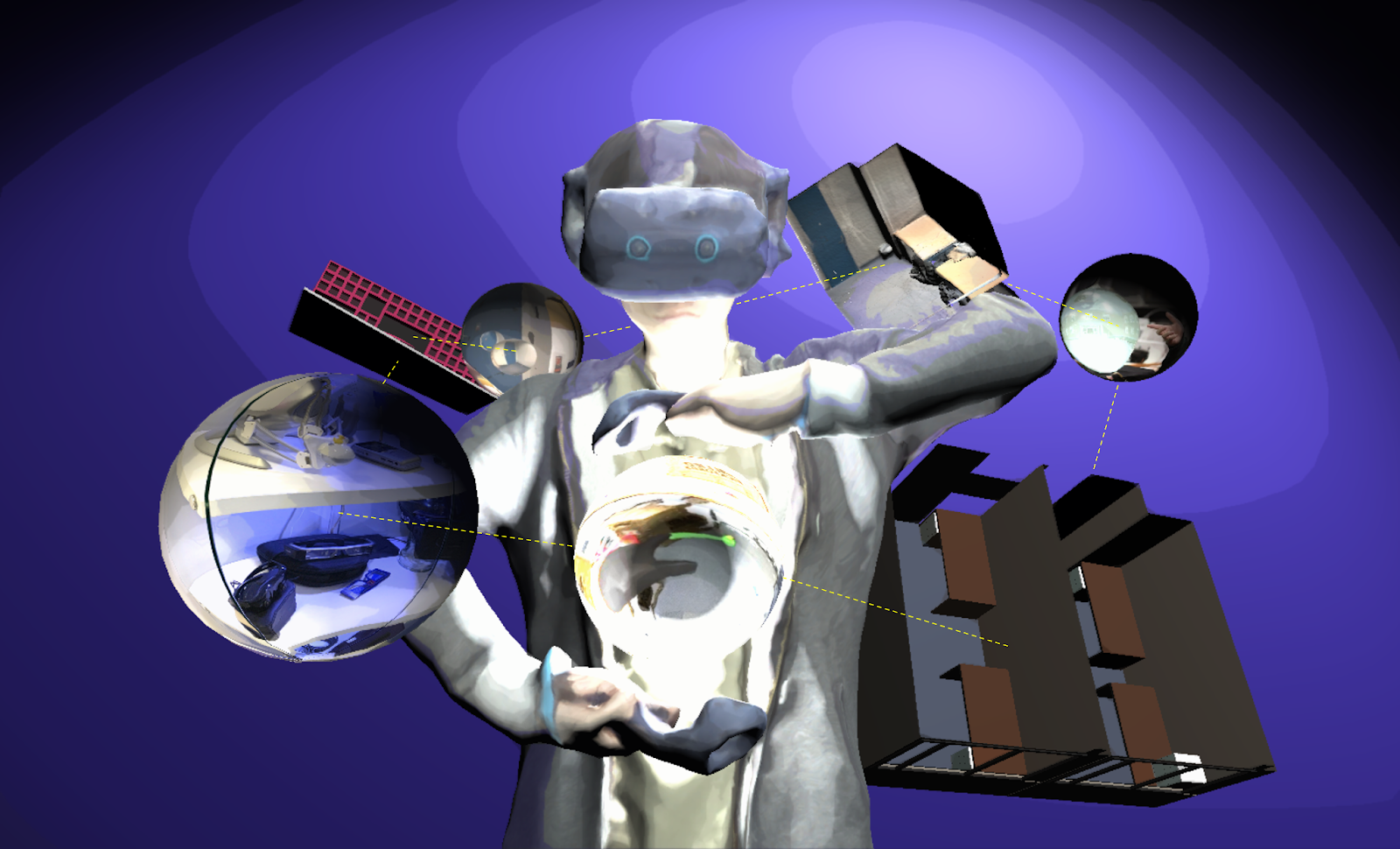

Using 3D Spaces and 360 Video Content for Collaboration

This project explores techniques to enhance the collaborative experience in Mixed Reality environments using 3D reconstructions, 360 videos and 2D images. Previous research has shown that 360 video can provide a high-resolution immersive visual space for collaboration, but little spatial information. Conversely, 3D scanned environments can provide high-quality spatial cues, but with poor visual resolution. This project combines both approaches, enabling users to switch between a 3D view and a 360 video of a collaborative space. In this hybrid interface, users can pick the representation of space best suited to the needs of the collaborative task. The project seeks to provide design guidelines for collaboration systems to enable empathic collaboration by sharing visual cues and environments across time and space.

- 2019-2023

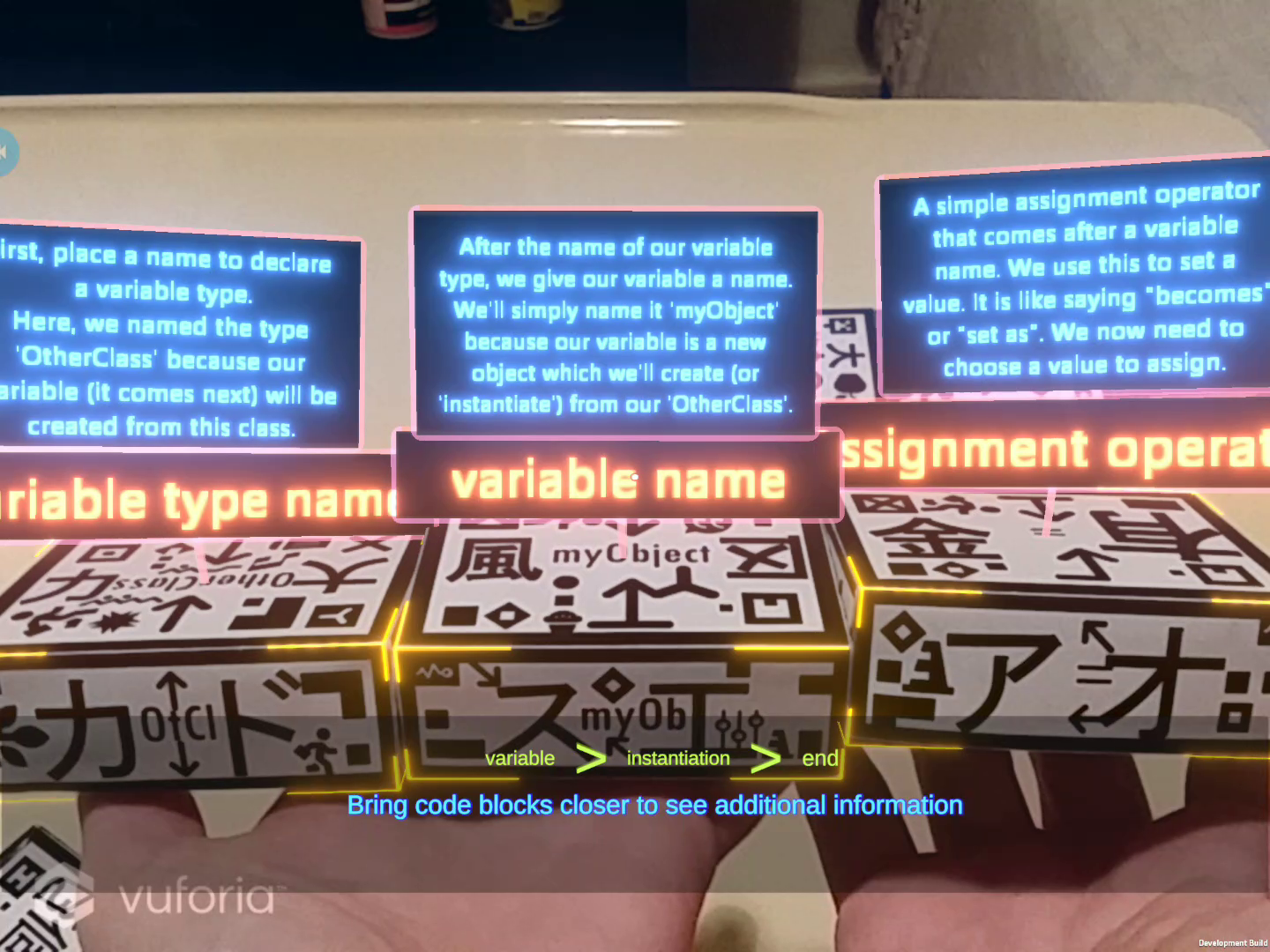

Tangible Augmented Reality for Programming Learning

This project explores how tangible Augmented Reality (AR) can be used to teach computer programming. We have developed TARPLE, a Tangible Augmented Reality Programming Learning Environment, and are studying its efficacy for teaching text-based programming languages to novice learners. TARPLE uses physical blocks to represent programming functions and overlays virtual imagery on the blocks to show the programming code. Users can arrange the blocks by moving them with their hands, and see the AR content either through the Microsoft HoloLens 2 AR display or on a handheld tablet.

This research project expands upon the broader question of educational AR as well as on the questions of tangible programming languages and tangible learning mediums. When supported by the embodied learning and natural interaction affordances of AR, physical objects may hold the key to developing fundamental knowledge of abstract, complex subjects for younger learners in particular. It may also serve as a powerful future tool in advancing early computational thinking skills in novices. Evaluation of such learning environments addresses the hypothesis that hybrid tangible AR mediums are able to support an extended learning taxonomy both within the classroom and without.

- 2023 - Now

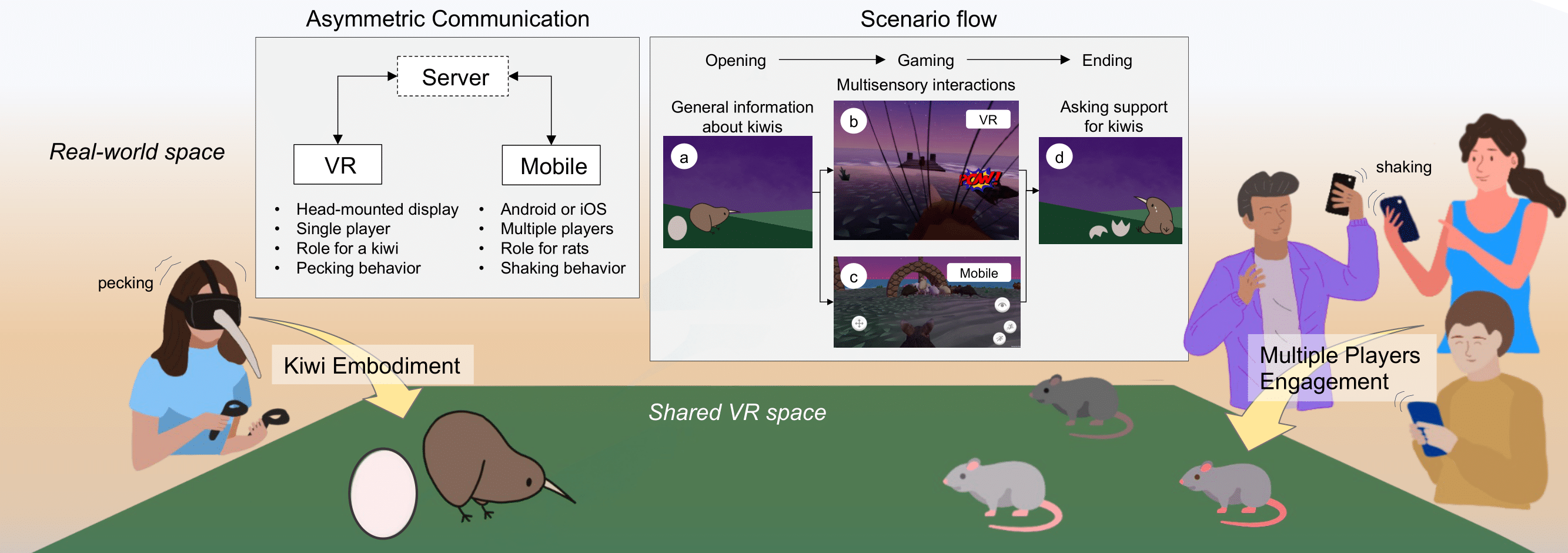

KiwiRescuer: A new interactive exhibition using an asymmetric interaction

This research demo aims to address the problem of passive and dull museum exhibition experiences that many audiences still encounter. The current approaches to exhibitions are typically less interactive and mostly provide single sensory information (e.g., visual, auditory, or haptic) in a one-to-one experience.

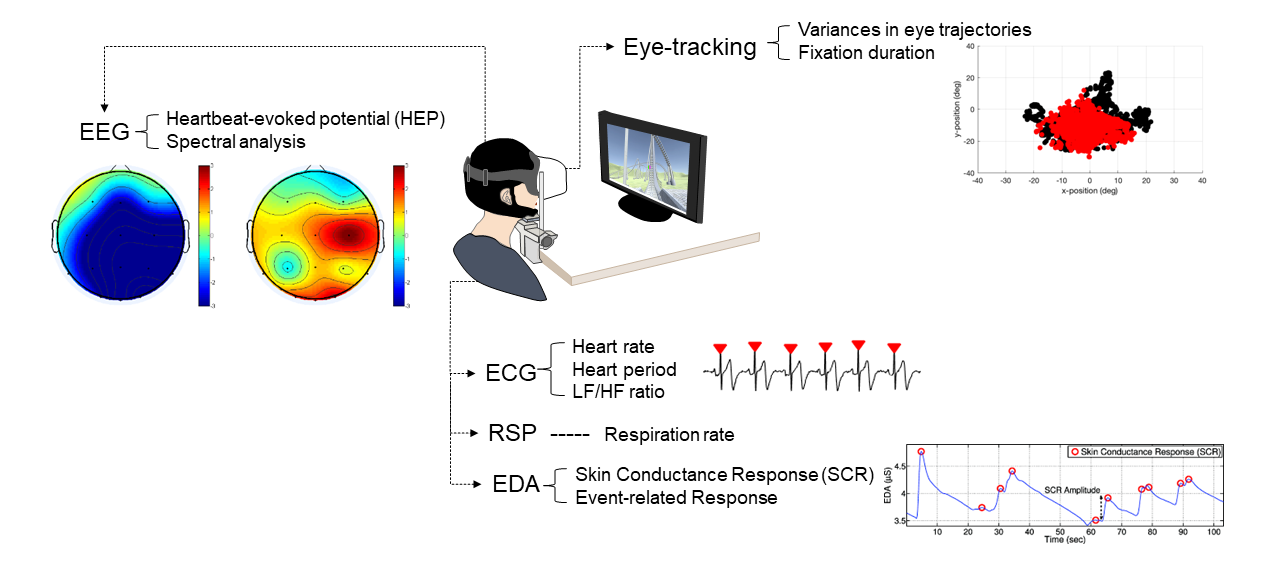

Detecting the Onset of Cybersickness using Physiological Cues

In this project we explore whether the onset of cybersickness can be detected by considering multiple physiological signals simultaneously from users in VR. We are particularly interested in physiological cues that can be collected from the current generation of VR HMDs, such as eye gaze and heart rate. We are also interested in exploring other physiological cues that could be available in VR HMDs in the near future, such as galvanic skin response (GSR) and electroencephalography (EEG).

- 2021-2024

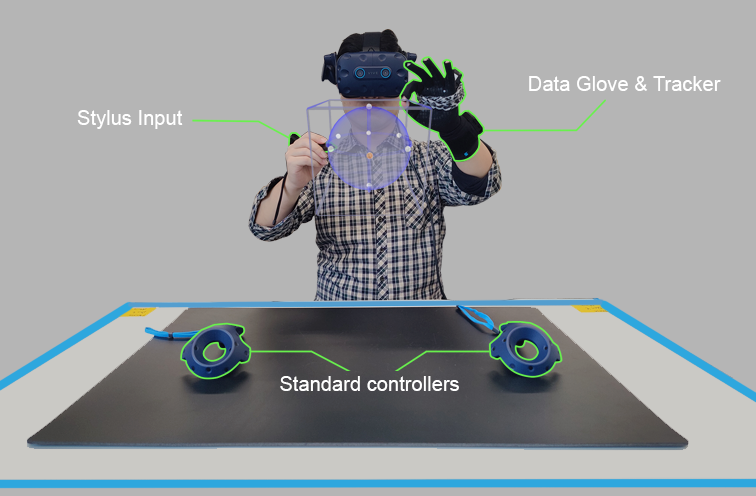

Asymmetric Interaction for VR sketching

This project explores how tool-based asymmetric VR interfaces can be used by artists to create immersive artwork more effectively. Most VR interfaces use two input methods of the same type, such as two handheld controllers or two bare-hand gestures. However, it is common for artists to use different tools in each hand, such as a pencil and sketch pad.

The research involves developing interaction methods that use a different input method in each hand, such as a stylus and a gesture. Using this interface, artists can rapidly sketch their designs in VR. User studies are being conducted to compare asymmetric and symmetric interfaces to see which provides the best performance and which users prefer.

- 2021-2023

RadarHand

RadarHand is a wrist-worn wearable system that uses radar sensing to detect on-skin proprioceptive hand gestures, making it easy to interact with simple finger motions. Radar has the advantages of being robust, private, and small, of penetrating materials, and of requiring little computation.

In this project, we first evaluated the proprioceptive nature of the back of the hand and found that the thumb is the most proprioceptive of all the finger joints, followed by the index finger, middle finger, ring finger and pinky finger. This helped determine the types of gestures most suitable for the system.

Next, we trained deep-learning models for gesture classification. Out of 27 gesture group possibilities, we achieved 92% accuracy for a generic set of seven gestures and 93% accuracy for the proprioceptive set of eight gestures. We also evaluated RadarHand's performance in real time and achieved an accuracy of between 74% and 91%, depending on whether the system or the user initiates the gesture first.

This research could contribute to a new generation of radar-based interfaces that allow people to interact with computers in a more natural way.

- 2023

Mind Reader

This project explores how brain activity can be used for computer input. The innovative MindReader game uses EEG (electroencephalogram) based Brain-Computer Interface (BCI) technology to showcase the player’s real-time brain waves. It uses colourful and creative visuals to show the raw brain activity from a number of EEG electrodes worn on the head. The player can also play a version of the Angry Birds game where their concentration level determines how far the birds can be shot. In this cheerful and engaging demo, friends and family can challenge each other to see who has the strongest neural connections!

- 2019-2023

Haptic Hongi

This project explores whether XR technologies can help overcome intercultural discomfort by using Augmented Reality (AR) and haptic feedback to present a traditional Māori greeting.

Using a HoloLens 2 AR headset, guests see a pre-recorded volumetric virtual video of Tania, a Māori woman, who greets them in a re-imagined, contemporary first encounter between indigenous Māori and newcomers. The visitors, manuhiri, consider their response in the absence of the usual social pressures.

After a brief introduction, the virtual Tania slowly leans forward, inviting the visitor to ‘hongi’, a pressing together of noses and foreheads in a gesture symbolising “...peace and oneness of thought, purpose, desire, and hope”. This is felt as a haptic response delivered via a custom-made actuator built into the visitor's AR headset.

- 2023-2025

TBI Cafe

Over 36,000 Kiwis experience Traumatic Brain Injury (TBI) per year. TBI patients often experience severe cognitive fatigue, which impairs their ability to cope well in public/social settings. Rehabilitation can involve taking people into social settings with a therapist so that they can relearn how to interact in these environments. However, this is a time-consuming, expensive and difficult process.

To address this, we've created the TBI Cafe, a VR tool designed to help TBI patients cope with their injury and practice interacting in a cafe. In this application, people in VR practice ordering food and drink while interacting with virtual characters. Different types of distractions are introduced, such as a crying baby and loud conversations, which are designed to make the experience more stressful, and let the user practice managing stressful situations. Clinical trials with the software are currently underway.

- 2020-2022

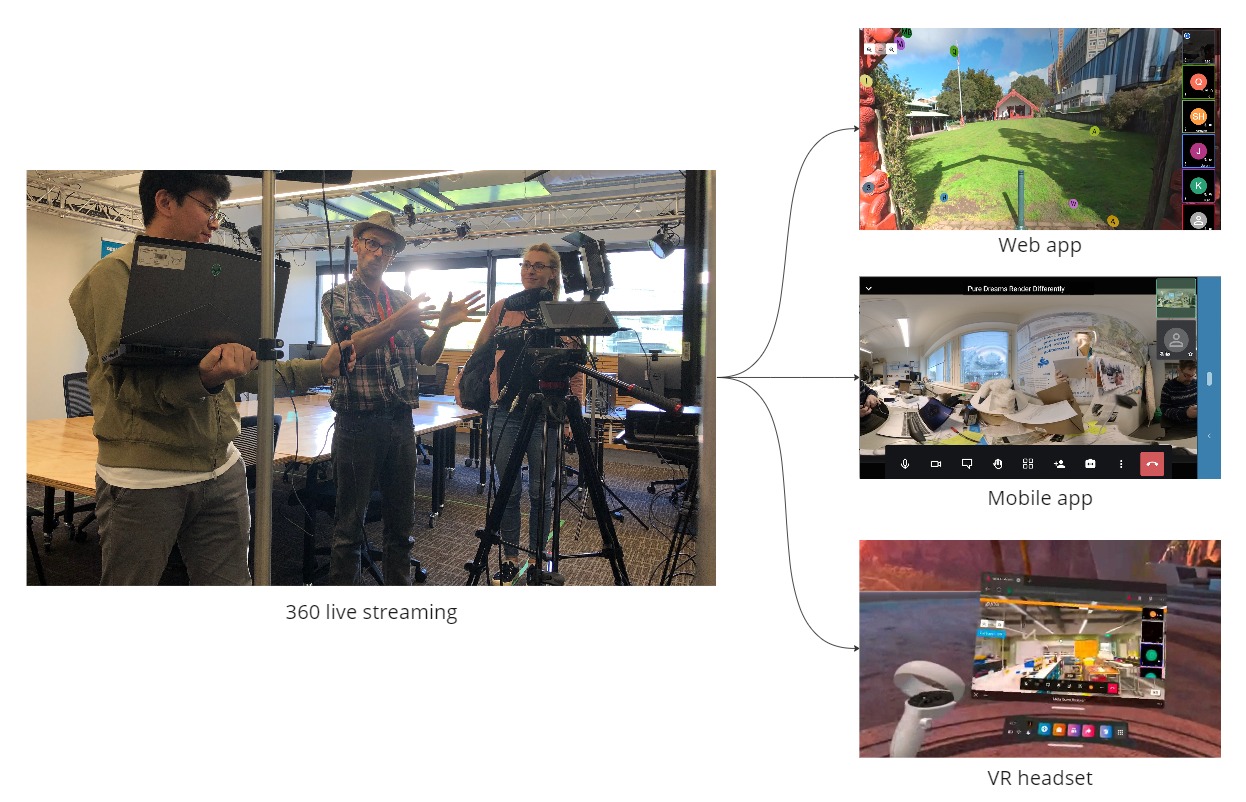

Show Me Around

This project introduces an immersive way to experience a conference call by using a 360° camera to live stream a person’s surroundings to remote viewers. Viewers can freely look around the host's video and get a better understanding of the host's surroundings.

Viewers can also observe where the other participants are looking, allowing them to better understand the conversation and what people are paying attention to. In a user study of the system, people found it much more immersive than a traditional video conferencing call and reported feeling as if they had been transported to a remote location. Possible applications of this system include virtual tourism, education, industrial monitoring, entertainment, and more.

- 2020-2021

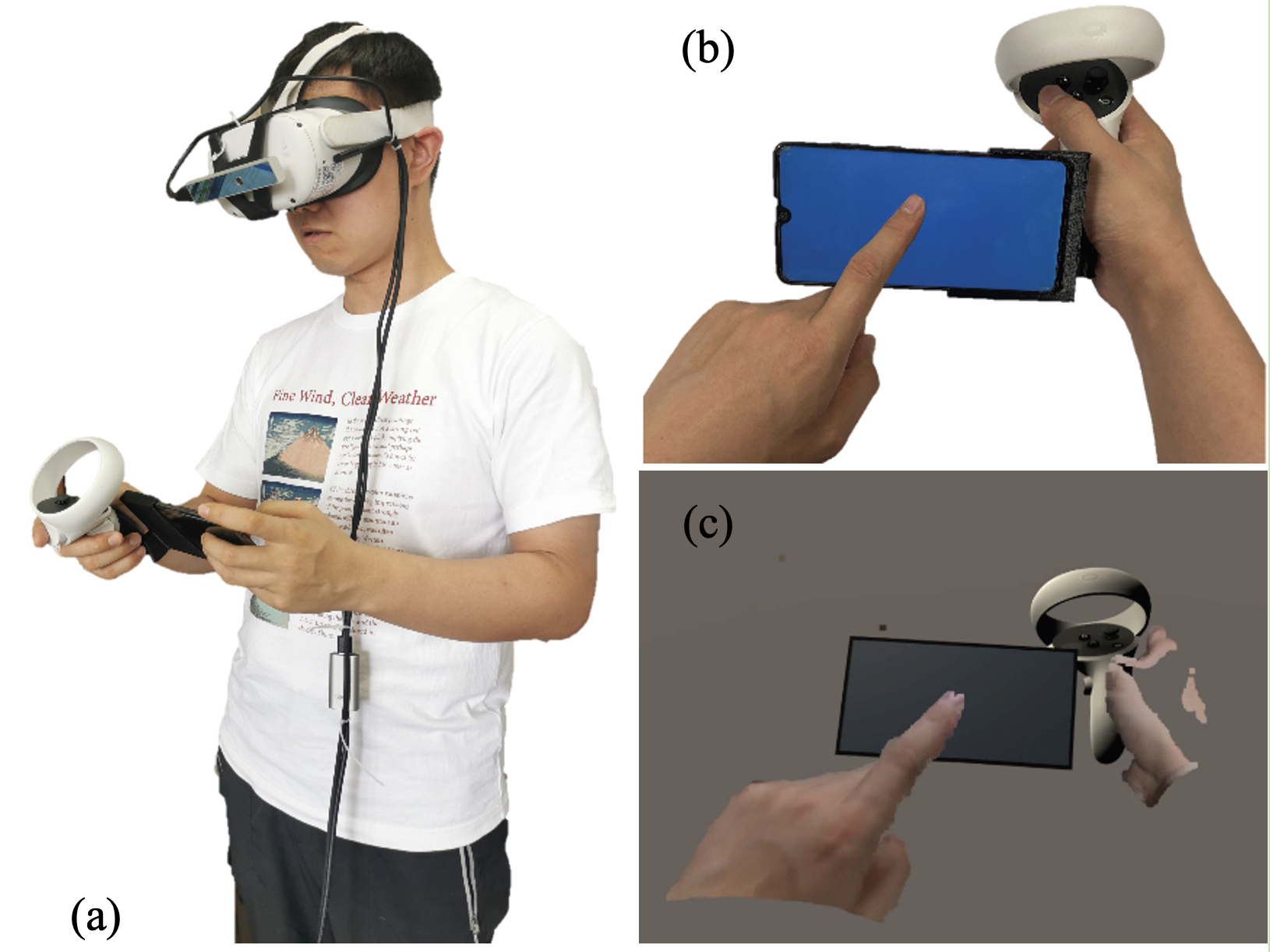

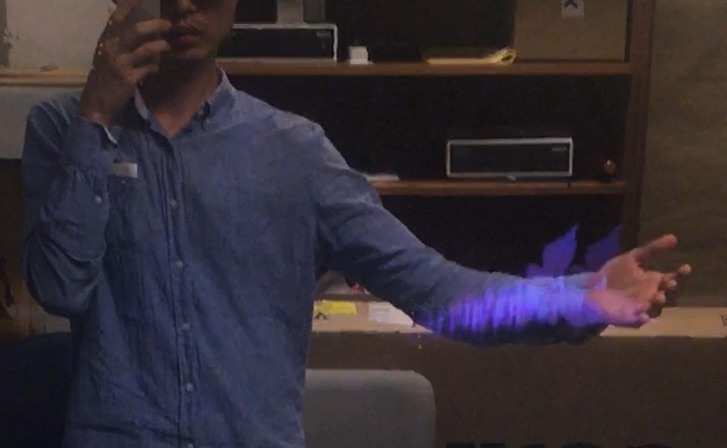

Using a Mobile Phone in VR

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer-generated virtual environment. However, a VR HMD also blocks the user’s view of their physical surroundings, and so prevents them from using their mobile phone in a natural manner. In this project, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing their 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone.

- 2018 - 2022

Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

This research focuses on visualizing shared gaze cues, designing interfaces for collaborative experience, and incorporating multimodal interaction techniques and physiological cues to support empathic Mixed Reality (MR) remote collaboration using HoloLens 2, Vive Pro Eye, Meta Pro, HP Omnicept, Theta V 360 camera, Windows Speech Recognition, Leap Motion hand tracking, and Zephyr/Shimmer Sensing technologies.

- 2019 - ongoing

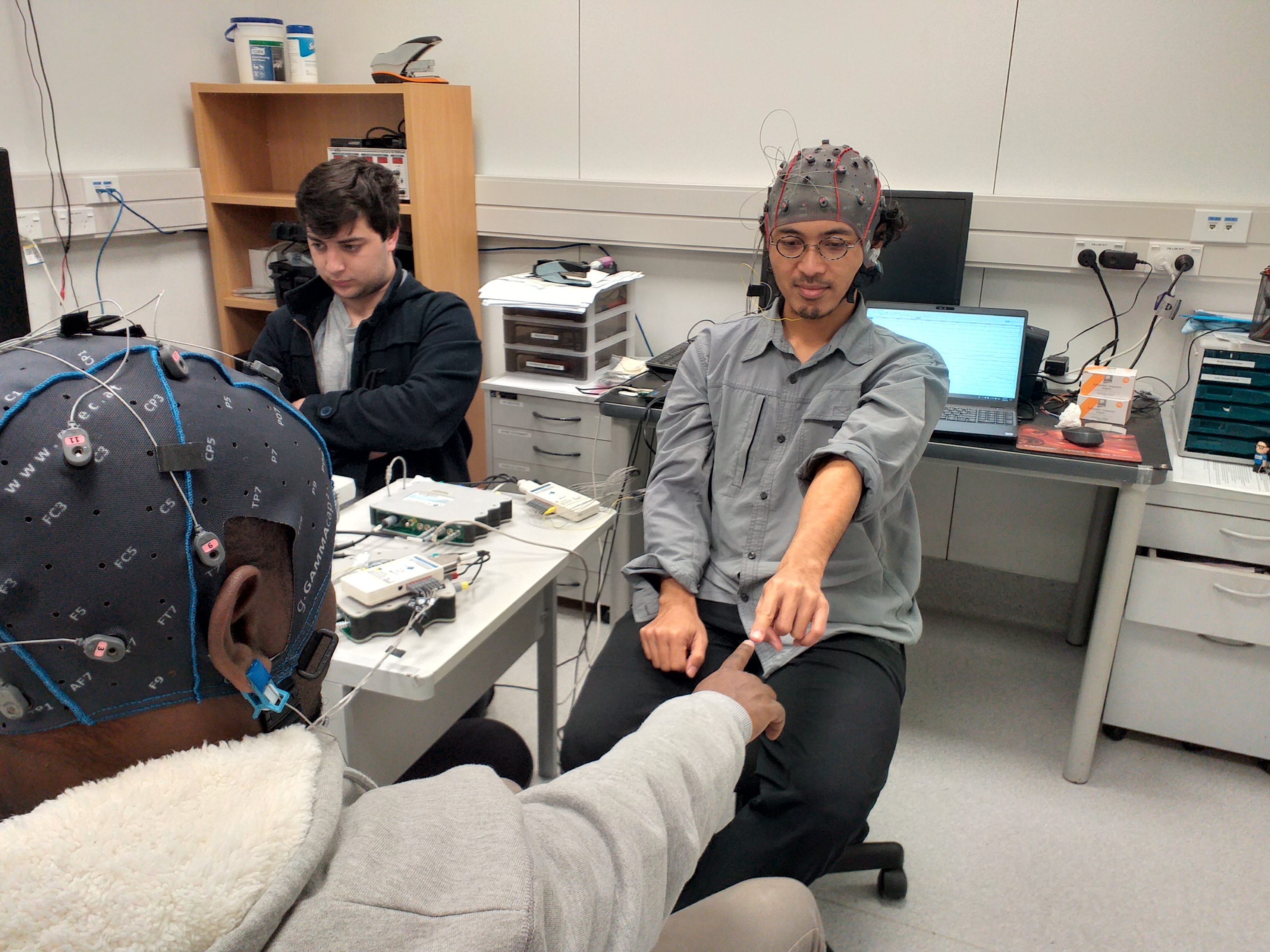

Brain Synchronisation in VR

Collaborative Virtual Reality has been the subject of research for nearly three decades now. This has led to a deep understanding of how individuals interact in such environments and of some of the factors that impede these interactions. However, despite this knowledge, we still do not fully understand how interpersonal interactions in virtual environments are reflected in the physiological domain. This project seeks to answer that question by monitoring the neural activity of participants in collaborative virtual environments. We do this by using a technique known as Hyperscanning, which refers to the simultaneous acquisition of neural activity from two or more people. In this project we use Hyperscanning to determine whether individuals interacting in a virtual environment exhibit inter-brain synchrony. The goal of this project is to first study the phenomenon of inter-brain synchrony, and then find means of inducing and expediting it by making changes in the virtual environment. This project feeds into the overarching goal of the Empathic Computing Laboratory, which seeks to bring individuals closer together using technology as a vehicle to evoke empathy.

- 2016-2018

Empathy in Virtual Reality

Virtual reality (VR) interfaces are an influential medium for triggering emotional changes in humans. However, there is little research on making users of VR interfaces aware of their own emotional state and, in collaborative interfaces, one another's.

In this project, through a series of system development and user evaluations, we are investigating how physiological data such as heart rate, galvanic skin response, pupil dilation, and EEG can be used as a medium to communicate emotional states either to self (single user interfaces) or the collaborator (collaborative interfaces). The overarching goal is to make VR environments more empathetic and collaborators more aware of each other's emotional state.

- 2017

SharedSphere

SharedSphere is a Mixed Reality-based remote collaboration system that not only allows sharing a live-captured immersive 360 panorama, but also supports enriched two-way communication and collaboration through sharing non-verbal communication cues, such as view awareness cues, drawn annotations, and hand gestures.

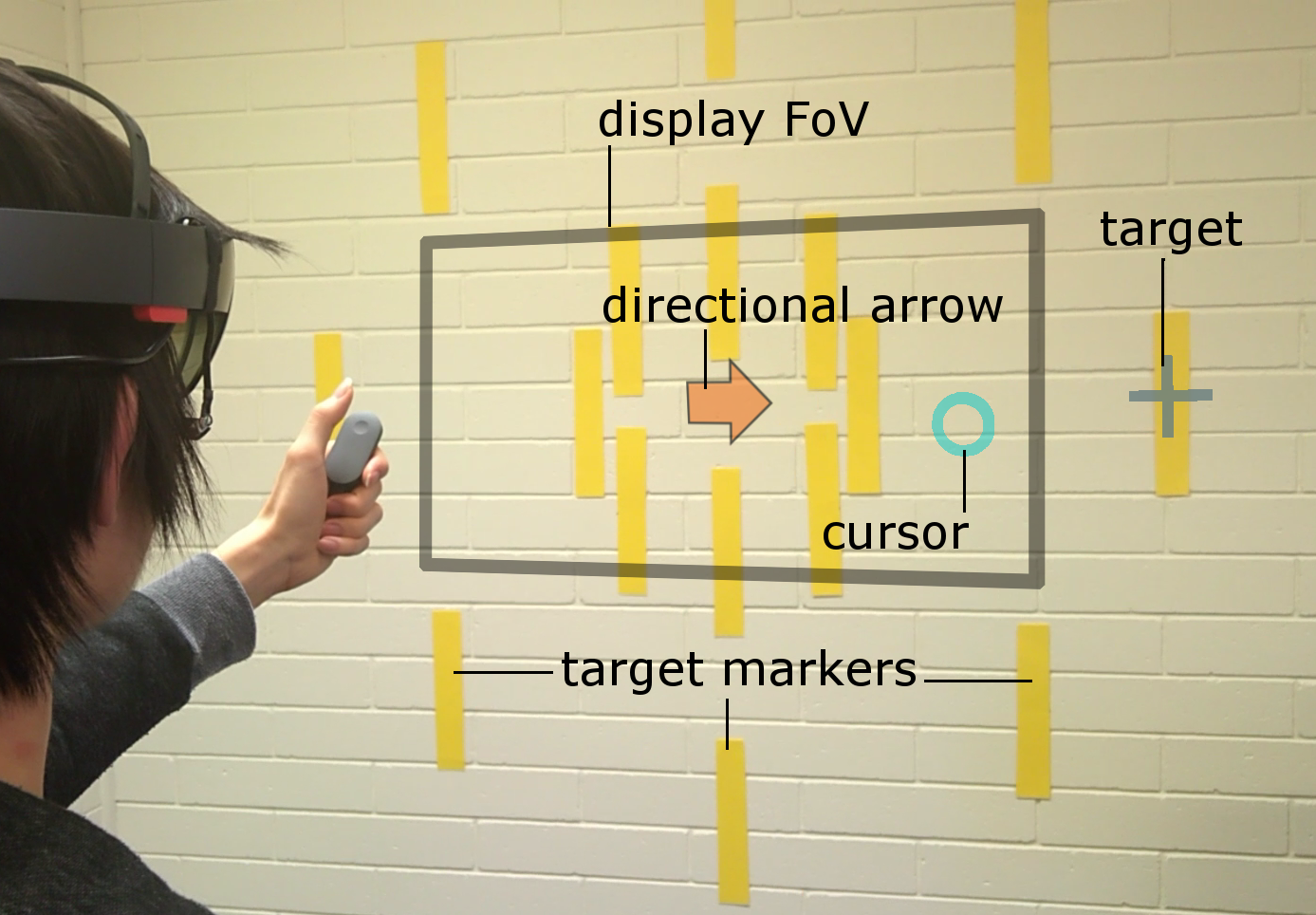

Pinpointing

Head and eye movement can be leveraged to improve the user’s interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

Mini-Me

Mini-Me is an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user’s gaze direction and body gestures while it transforms in size and orientation to stay within the AR user’s field of view. We tested Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

Augmented Mirrors

Mirrors are physical displays that show our real world in reflection. While physical mirrors simply show what is in the real-world scene, with the help of digital technology we can also alter the reality reflected in the mirror. The Augmented Mirrors project aims to explore visualisation and interaction techniques for exploiting mirrors as Augmented Reality (AR) displays. The project especially focuses on using user interface agents to guide user interaction with Augmented Mirrors.

- 2016

Empathy Glasses

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.