RadarHand

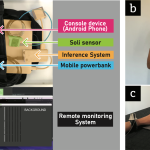

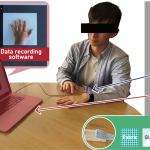

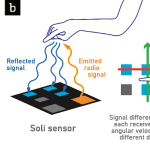

RadarHand is a wrist-worn wearable system that uses radar sensing to detect on-skin proprioceptive hand gestures, so users can interact through simple finger motions. Radar sensing is robust, preserves privacy, fits in a small form factor, can penetrate materials, and requires little computation.

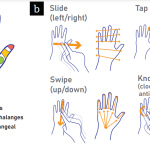

In this project, we first evaluated the proprioceptive nature of the back of the hand and found that the thumb is the most proprioceptive of all the finger joints, followed by the index, middle, ring, and pinky fingers. These findings guided our choice of the gestures best suited to the system.

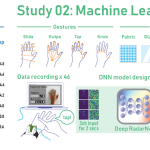

Next, we trained deep-learning models for gesture classification. Out of 27 possible gesture groups, we achieved 92% accuracy for a generic set of seven gestures and 93% accuracy for the proprioceptive set of eight gestures. We also evaluated RadarHand’s performance in real time and achieved accuracies between 74% and 91%, depending on whether the system or the user initiates the gesture first.
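The write-up does not describe how the real-time pipeline turns per-window predictions into gesture events. One common approach, assumed here rather than taken from RadarHand, is to classify a sliding window of radar frames and smooth the outputs with a majority vote, emitting a gesture only once per occurrence. Everything in this sketch is hypothetical: the class `RealTimeGestureRecognizer`, the window and vote sizes, the `"none"` idle label, and `toy_classify`, which stands in for the trained deep-learning model.

```python
from collections import Counter, deque
from typing import Callable, Deque, List, Optional, Sequence

Frame = Sequence[float]  # hypothetical: one radar frame flattened to features


class RealTimeGestureRecognizer:
    """Hypothetical sliding-window recognizer with majority-vote smoothing."""

    def __init__(self, classify: Callable[[List[Frame]], str],
                 window_size: int = 30, vote_size: int = 5,
                 idle_label: str = "none") -> None:
        self.classify = classify  # stand-in for a trained gesture model
        self.window_size = window_size
        self.vote_size = vote_size
        self.frames: Deque[Frame] = deque(maxlen=window_size)
        self.votes: Deque[str] = deque(maxlen=vote_size)
        self.idle_label = idle_label
        self.last_emitted: Optional[str] = None

    def push(self, frame: Frame) -> Optional[str]:
        """Add one frame; return a gesture label when a new gesture is confirmed."""
        self.frames.append(frame)
        if len(self.frames) < self.window_size:
            return None  # not enough history to classify yet
        self.votes.append(self.classify(list(self.frames)))
        if len(self.votes) < self.vote_size:
            return None  # not enough predictions to vote on yet
        label, count = Counter(self.votes).most_common(1)[0]
        if count * 2 <= len(self.votes):
            return None  # no strict majority among recent predictions
        if label == self.idle_label:
            self.last_emitted = None  # hand at rest: allow the next gesture
            return None
        if label == self.last_emitted:
            return None  # debounce: report each gesture occurrence once
        self.last_emitted = label
        return label


def toy_classify(window: List[Frame]) -> str:
    # Hypothetical placeholder model: thresholds the mean of the first feature.
    energy = sum(f[0] for f in window) / len(window)
    return "thumb_tap" if energy > 0.5 else "none"


rec = RealTimeGestureRecognizer(toy_classify, window_size=4, vote_size=3)
stream = [[0.0]] * 6 + [[1.0]] * 8 + [[0.0]] * 8 + [[1.0]] * 8
events = []
for i, frame in enumerate(stream):
    gesture = rec.push(frame)
    if gesture is not None:
        events.append((i, gesture))
print(events)  # [(9, 'thumb_tap'), (25, 'thumb_tap')]
```

Larger windows and vote buffers suppress spurious detections but add latency, which is one reason real-time accuracy can differ from offline accuracy.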

This research could contribute to a new generation of radar-based interfaces that allow people to interact with computers in a more natural way.

Project Video(s): https://www.youtube.com/watch?v=BxbbxNes9vI