Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

This project explores how gaze and gestures could be used to enhance collaboration in Mixed Reality environments. Gaze and gesture provide important cues for face-to-face collaboration, but it can be difficult to convey those same cues in current teleconferencing systems. The latest Augmented Reality and Virtual Reality displays incorporate eye-tracking, so an interesting research question is how gaze cues can be used to enhance collaborative MR experiences. For example, a remote user in VR could have their hands tracked and shared with a local user in AR, who can see virtual hands appearing over their workspace showing them what to do. In a similar way, eye-tracking technology can be used to share the gaze of a remote helper with a local worker to help them perform better on a real-world task.

Most AR and VR gaze interfaces represent gaze as a simple virtual cue, such as a gaze line or crosshair. However, people exhibit many different gaze behaviours, such as rapid browsing, saccades, focusing on locations of interest, and shared gaze. So in this project we explored how representing gaze behaviours using different cues could improve collaboration. We also explored how speech could be used in conjunction with gaze cues to enhance collaboration, and how physiological cues could be shared between users.
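To make the idea of distinguishing gaze behaviours concrete, the sketch below labels the intervals between consecutive eye-tracker samples as fixations or saccades using a simple angular-velocity threshold. This is a generic, textbook-style technique and not necessarily the method used in the systems described here; the function names, the sample format, and the 100 deg/s threshold are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

# Assumed threshold for illustration: angular speeds above this are saccades.
SACCADE_THRESHOLD_DEG_PER_S = 100.0

# A sample is (timestamp in seconds, 3D gaze direction vector).
GazeSample = Tuple[float, Sequence[float]]


def angle_between(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the angle in degrees between two non-zero 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def classify_gaze(samples: List[GazeSample]) -> List[str]:
    """Label each interval between consecutive samples as 'fixation' or 'saccade'."""
    labels = []
    for (t0, d0), (t1, d1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        speed = angle_between(d0, d1) / dt  # degrees per second
        labels.append("saccade" if speed > SACCADE_THRESHOLD_DEG_PER_S else "fixation")
    return labels


# Hypothetical samples: the eye rests, then jumps 90 degrees within 10 ms.
example = [
    (0.00, (0.0, 0.0, 1.0)),
    (0.01, (0.0, 0.0, 1.0)),
    (0.02, (1.0, 0.0, 0.0)),
]
labels = classify_gaze(example)
```

An interface could then choose a different visual cue for each label, for example a steady marker for fixations and a lighter trail for saccades; that mapping is also only an illustration.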

Our research has shown that sharing a wide range of different virtual gaze and gesture cues can significantly enhance remote collaboration in Mixed Reality systems. We tested this research in a number of different MR interfaces, typically having one person in the real world wearing an AR display and collaborating with a remote person in a VR display. We found that gaze visualisations amplify meaningful joint attention and improve co-presence compared to a no gaze condition. Using gaze cues means that users don’t need to speak to each other as much, lowering their cognitive load while improving mutual understanding. The results from this research could help the next generation of interface designers create significantly improved MR interfaces for remote collaboration.

Project Video(s): https://www.youtube.com/watch?v=rq3Q0Duc7g4

https://www.youtube.com/watch?v=OEScbb3LajQ

Publications


CoVAR: Mixed-Platform Remote Collaborative Augmented and Virtual Realities System with Shared Collaboration Cues

Piumsomboon, T., Dey, A., Ens, B., Lee, G., and Billinghurst, M.

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M. (2017, October). [POSTER] CoVAR: Mixed-Platform Remote Collaborative Augmented and Virtual Realities System with Shared Collaboration Cues. In 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct) (pp. 218-219). IEEE.

@inproceedings{piumsomboon2017poster,

title={[POSTER] CoVAR: Mixed-Platform Remote Collaborative Augmented and Virtual Realities System with Shared Collaboration Cues},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Gun and Billinghurst, Mark},

booktitle={2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct)},

pages={218--219},

year={2017},

organization={IEEE}

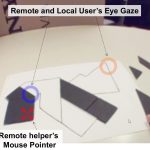

}We present CoVAR, a novel Virtual Reality (VR) and Augmented Reality (AR) system for remote collaboration. It supports collaboration between AR and VR users by sharing a 3D reconstruction of the AR user's environment. To enhance this mixed platform collaboration, it provides natural inputs such as eye-gaze and hand gestures, remote embodiment through avatar's head and hands, and awareness cues of field-of-view and gaze cue. In this paper, we describe the system architecture, setup and calibration procedures, input methods and interaction, and collaboration enhancement features. -

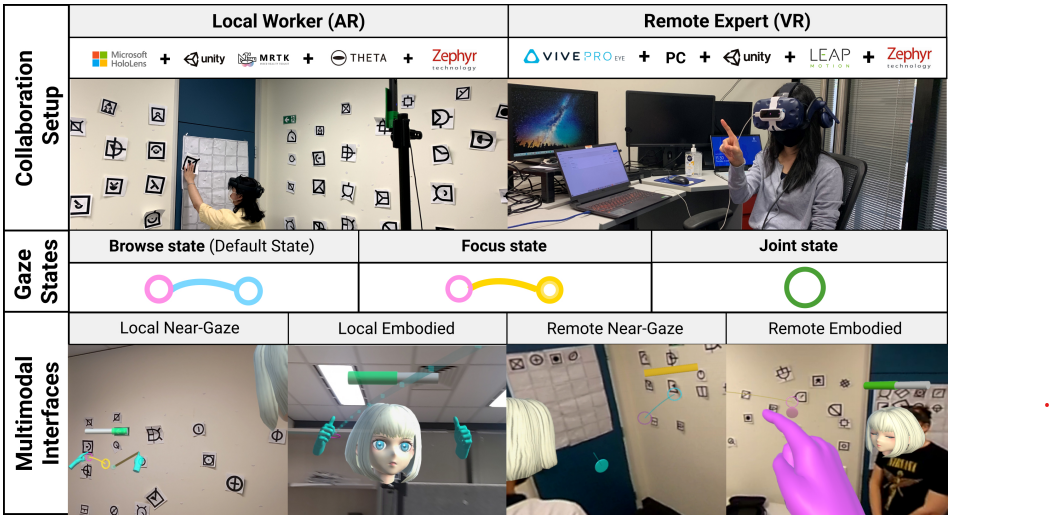

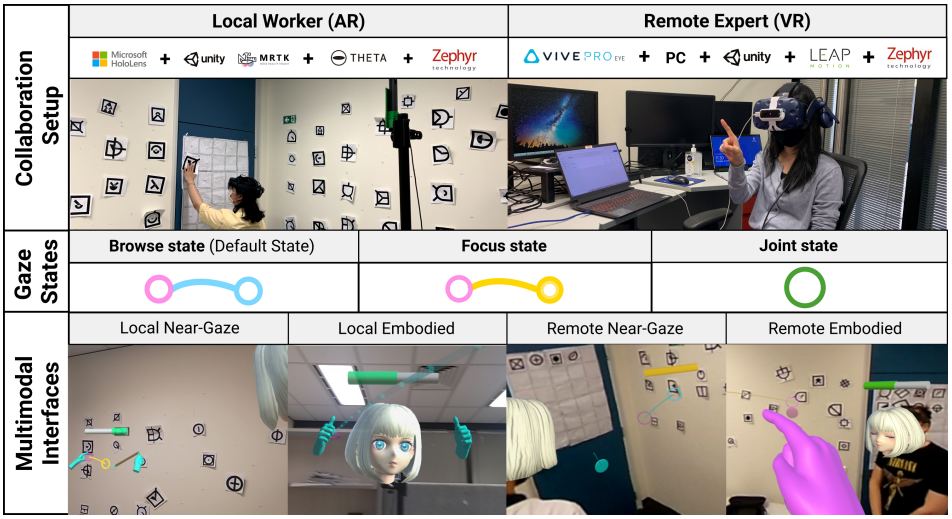

A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing

Huidong Bai, Prasanth Sasikumar, Jing Yang, Mark Billinghurst

Huidong Bai, Prasanth Sasikumar, Jing Yang, and Mark Billinghurst. 2020. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI: https://doi.org/10.1145/3313831.3376550

@inproceedings{bai2020user,

title={A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing},

author={Bai, Huidong and Sasikumar, Prasanth and Yang, Jing and Billinghurst, Mark},

booktitle={Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--13},

year={2020}

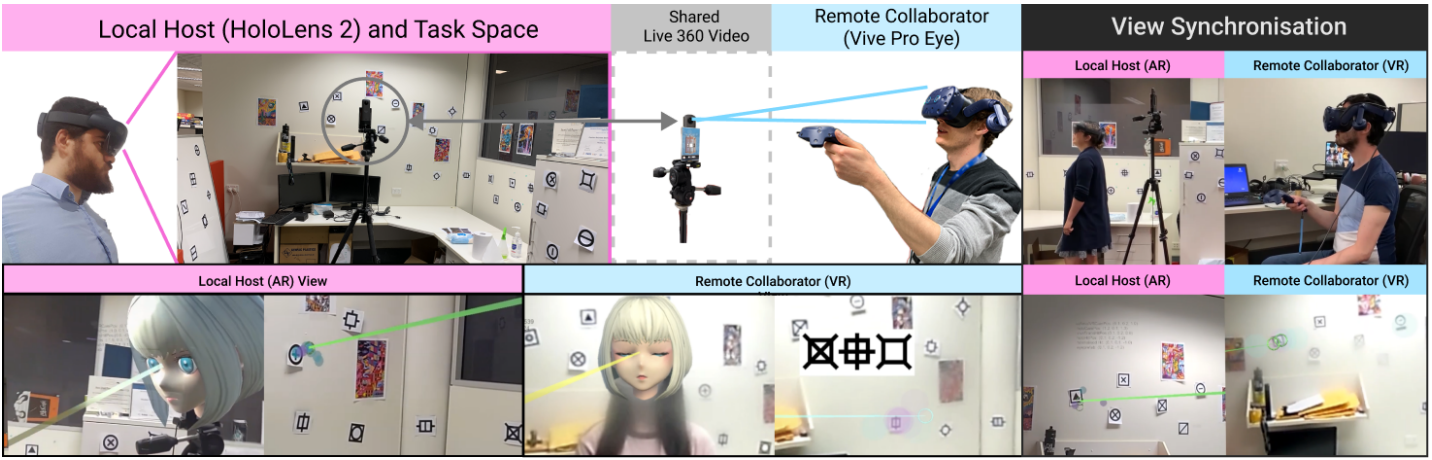

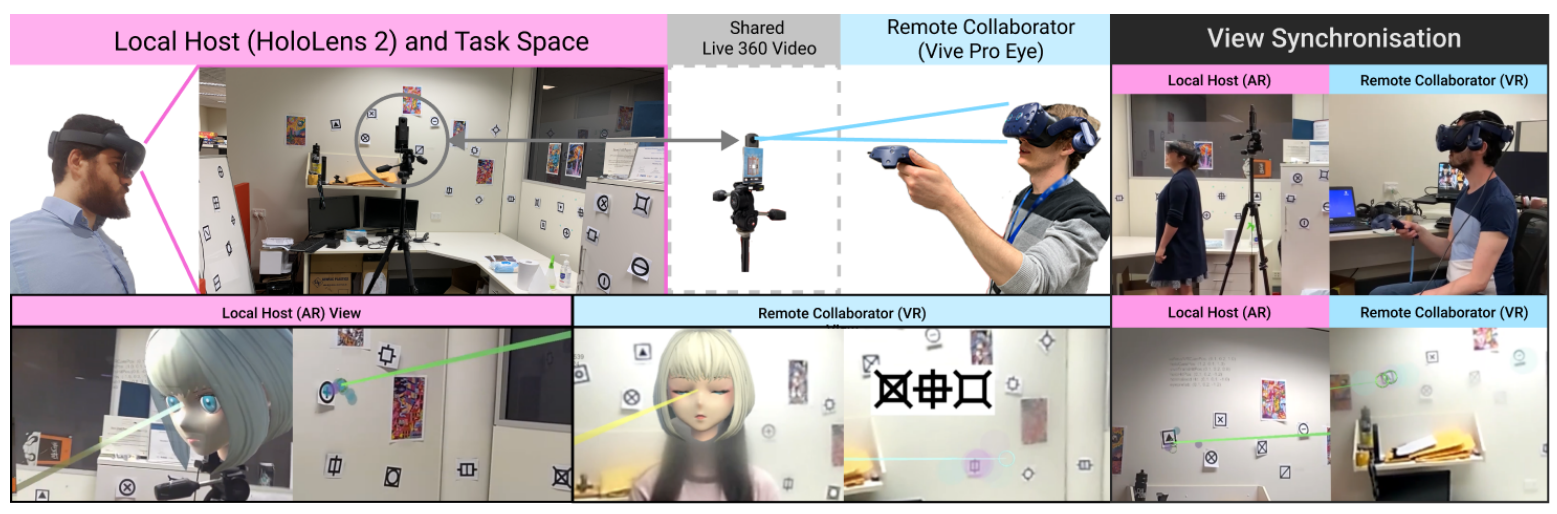

}Supporting natural communication cues is critical for people to work together remotely and face-to-face. In this paper we present a Mixed Reality (MR) remote collaboration system that enables a local worker to share a live 3D panorama of his/her surroundings with a remote expert. The remote expert can also share task instructions back to the local worker using visual cues in addition to verbal communication. We conducted a user study to investigate how sharing augmented gaze and gesture cues from the remote expert to the local worker could affect the overall collaboration performance and user experience. We found that by combing gaze and gesture cues, our remote collaboration system could provide a significantly stronger sense of co-presence for both the local and remote users than using the gaze cue alone. The combined cues were also rated significantly higher than the gaze in terms of ease of conveying spatial actions. -

Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

Allison Jing, Kieran May, Gun Lee, Mark Billinghurst

Jing, A., May, K., Lee, G., & Billinghurst, M. (2021). Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration. Frontiers in Virtual Reality, 2, 79.

@article{jing2021eye,

title={Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration},

author={Jing, Allison and May, Kieran and Lee, Gun and Billinghurst, Mark},

journal={Frontiers in Virtual Reality},

volume={2},

pages={79},

year={2021},

publisher={Frontiers}

}

Gaze is one of the predominant communication cues and can provide valuable implicit information such as intention or focus when performing collaborative tasks. However, little research has been done on how virtual gaze cues combining spatial and temporal characteristics impact real-life physical tasks during face-to-face collaboration. In this study, we explore the effect of showing joint gaze interaction in an Augmented Reality (AR) interface by evaluating three bi-directional collaborative (BDC) gaze visualisations with three levels of gaze behaviours. Using three independent tasks, we found that all BDC visualisations are rated significantly better at representing joint attention and user intention compared to a non-collaborative (NC) condition, and hence are considered more engaging. The Laser Eye condition, spatially embodied with gaze direction, is perceived as significantly more effective as it encourages mutual gaze awareness with a relatively low mental effort in a less constrained workspace. In addition, by offering additional virtual representation that compensates for verbal descriptions and hand pointing, BDC gaze visualisations can encourage more conscious use of gaze cues coupled with deictic references during co-located symmetric collaboration. We provide a summary of the lessons learned, limitations of the study, and directions for future research.

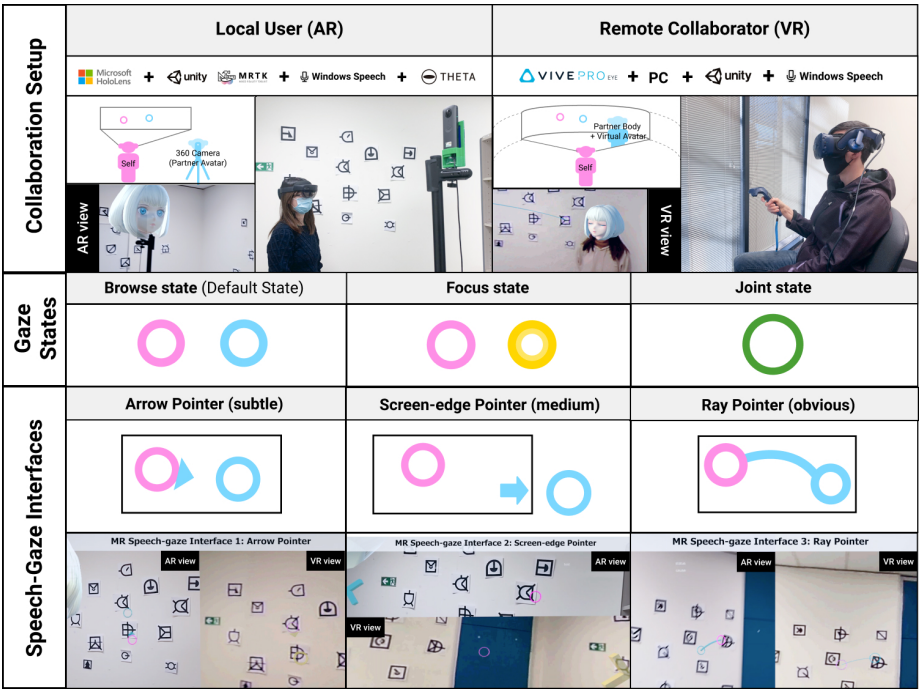

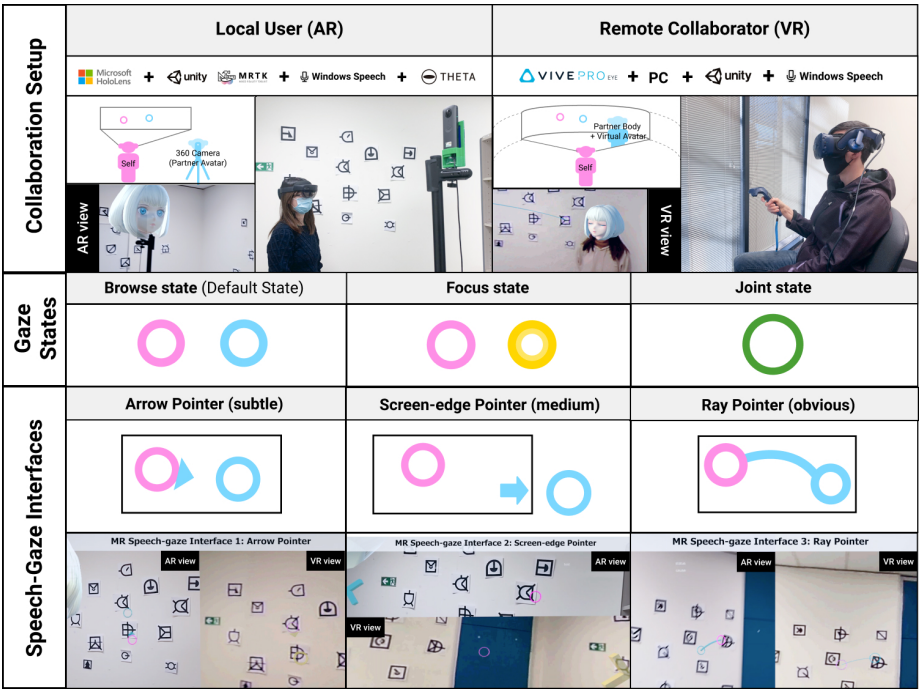

Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration

Allison Jing; Gun Lee; Mark Billinghurst

Jing, A., Lee, G., & Billinghurst, M. (2022, March). Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 250-259). IEEE.

@inproceedings{jing2022using,

title={Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration},

author={Jing, Allison and Lee, Gun and Billinghurst, Mark},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={250--259},

year={2022},

organization={IEEE}

}

In this paper, we present a 360° panoramic Mixed Reality (MR) system that visualises shared gaze cues using contextual speech input to improve task coordination. We conducted two studies to evaluate the design of the MR gaze-speech interface exploring the combinations of visualisation style and context control level. Findings from the first study suggest that an explicit visual form that directly connects the collaborators’ shared gaze to the contextual conversation is preferred. The second study indicates that the gaze-speech modality shortens the coordination time to attend to the shared interest, making the communication more natural and the collaboration more effective. Qualitative feedback also suggests that having a constant joint gaze indicator provides a consistent bi-directional view while establishing a sense of co-presence during task collaboration. We discuss the implications for the design of collaborative MR systems and directions for future research.

The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality

Allison Jing, Kieran May, Brandon Matthews, Gun Lee, Mark Billinghurst

Allison Jing, Kieran May, Brandon Matthews, Gun Lee, and Mark Billinghurst. 2022. The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality. Proc. ACM Hum.-Comput. Interact. 6, CSCW2, Article 463 (November 2022), 27 pages. https://doi.org/10.1145/3555564

@article{10.1145/3555564,

author = {Jing, Allison and May, Kieran and Matthews, Brandon and Lee, Gun and Billinghurst, Mark},

title = {The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality},

year = {2022},

issue_date = {November 2022},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {6},

number = {CSCW2},

url = {https://doi.org/10.1145/3555564},

doi = {10.1145/3555564},

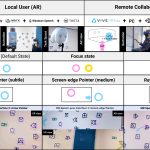

abstract = {In a remote collaboration involving a physical task, visualising gaze behaviours may compensate for other unavailable communication channels. In this paper, we report on a 360° panoramic Mixed Reality (MR) remote collaboration system that shares gaze behaviour visualisations between a local user in Augmented Reality and a remote collaborator in Virtual Reality. We conducted two user studies to evaluate the design of MR gaze interfaces and the effect of gaze behaviour (on/off) and gaze style (bi-/uni-directional). The results indicate that gaze visualisations amplify meaningful joint attention and improve co-presence compared to a no gaze condition. Gaze behaviour visualisations enable communication to be less verbally complex therefore lowering collaborators' cognitive load while improving mutual understanding. Users felt that bi-directional behaviour visualisation, showing both collaborator's gaze state, was the preferred condition since it enabled easy identification of shared interests and task progress.},

journal = {Proc. ACM Hum.-Comput. Interact.},

month = {nov},

articleno = {463},

numpages = {27},

keywords = {gaze visualization, mixed reality remote collaboration, human-computer interaction}

}In a remote collaboration involving a physical task, visualising gaze behaviours may compensate for other unavailable communication channels. In this paper, we report on a 360° panoramic Mixed Reality (MR) remote collaboration system that shares gaze behaviour visualisations between a local user in Augmented Reality and a remote collaborator in Virtual Reality. We conducted two user studies to evaluate the design of MR gaze interfaces and the effect of gaze behaviour (on/off) and gaze style (bi-/uni-directional). The results indicate that gaze visualisations amplify meaningful joint attention and improve co-presence compared to a no gaze condition. Gaze behaviour visualisations enable communication to be less verbally complex therefore lowering collaborators' cognitive load while improving mutual understanding. Users felt that bi-directional behaviour visualisation, showing both collaborator's gaze state, was the preferred condition since it enabled easy identification of shared interests and task progress. -

Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration

Allison Jing; Kunal Gupta; Jeremy McDade; Gun A. Lee; Mark Billinghurst

A. Jing, K. Gupta, J. McDade, G. A. Lee and M. Billinghurst, "Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration," 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, Singapore, 2022, pp. 837-846, doi: 10.1109/ISMAR55827.2022.00102.

@INPROCEEDINGS{9995367,

author={Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun A. and Billinghurst, Mark},

booktitle={2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

title={Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration},

year={2022},

volume={},

number={},

pages={837-846},

doi={10.1109/ISMAR55827.2022.00102}}

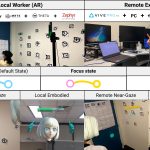

In this paper, we share real-time collaborative gaze behaviours, hand pointing, gesturing, and heart rate visualisations between remote collaborators using a live 360° panoramic-video based Mixed Reality (MR) system. We first ran a pilot study to explore visual designs to combine communication cues with biofeedback (heart rate), aiming to understand user perceptions of empathic collaboration. We then conducted a formal study to investigate the effect of modality (Gaze+Hand, Hand-only) and interface (Near-Gaze, Embodied). The results show that the Gaze+Hand modality in a Near-Gaze interface is significantly better at reducing task load, improving co-presence, enhancing understanding and tightening collaborative behaviours compared to the conventional Embodied hand-only experience. Ranked as the most preferred condition, the Gaze+Hand in Near-Gaze condition is perceived to reduce the need for dividing attention to the collaborator’s physical location, although it feels slightly less natural compared to the embodied visualisations. In addition, the Gaze+Hand conditions also led to more joint attention and less hand pointing to align mutual understanding. Lastly, we provide a design guideline to summarize what we have learned from the studies on the representation between modality, interface, and biofeedback.

Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration

Allison Jing; Kunal Gupta; Jeremy McDade; Gun A. Lee; Mark Billinghurst

Allison Jing, Kunal Gupta, Jeremy McDade, Gun Lee, and Mark Billinghurst. 2022. Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration. In ACM SIGGRAPH 2022 Posters (SIGGRAPH '22). Association for Computing Machinery, New York, NY, USA, Article 29, 1–2. https://doi.org/10.1145/3532719.3543213

@inproceedings{10.1145/3532719.3543213,

author = {Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun and Billinghurst, Mark},

title = {Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration},

year = {2022},

isbn = {9781450393614},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3532719.3543213},

doi = {10.1145/3532719.3543213},

abstract = {In this poster, we present a live 360° panoramic-video based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues including gaze, hand pointing, gesturing, and heart rate visualisations in real-time. The preliminary results indicate that the interface with the partner’s communication cues visualised close to the gaze point allows users to focus without dividing attention to the collaborator’s physical body movements yet still effectively communicate. Shared gaze visualisations coupled with deictic languages are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing are used as secondary. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities which covers different task properties and spatial setups.},

booktitle = {ACM SIGGRAPH 2022 Posters},

articleno = {29},

numpages = {2},

location = {Vancouver, BC, Canada},

series = {SIGGRAPH '22}

}

In this poster, we present a live 360° panoramic-video based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues including gaze, hand pointing, gesturing, and heart rate visualisations in real-time. The preliminary results indicate that the interface with the partner’s communication cues visualised close to the gaze point allows users to focus without dividing attention to the collaborator’s physical body movements yet still effectively communicate. Shared gaze visualisations coupled with deictic languages are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing are used as secondary. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities which covers different task properties and spatial setups.

eyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues

Allison Jing, Brandon Matthews, Kieran May, Thomas Clarke, Gun Lee, Mark Billinghurst

Allison Jing, Brandon Matthews, Kieran May, Thomas Clarke, Gun Lee, and Mark Billinghurst. 2021. EyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues. In SIGGRAPH Asia 2021 Posters (SA '21 Posters). Association for Computing Machinery, New York, NY, USA, Article 16, 1–2. https://doi.org/10.1145/3476124.3488618

@inproceedings{10.1145/3476124.3488618,

author = {Jing, Allison and Matthews, Brandon and May, Kieran and Clarke, Thomas and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues},

year = {2021},

isbn = {9781450386876},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3476124.3488618},

doi = {10.1145/3476124.3488618},

abstract = {In this poster we present eyemR-Talk, a Mixed Reality (MR) collaboration system that uses speech input to trigger shared gaze visualisations between remote users. The system uses 360° panoramic video to support collaboration between a local user in the real world in an Augmented Reality (AR) view and a remote collaborator in Virtual Reality (VR). Using specific speech phrases to turn on virtual gaze visualisations, the system enables contextual speech-gaze interaction between collaborators. The overall benefit is to achieve more natural gaze awareness, leading to better communication and more effective collaboration.},

booktitle = {SIGGRAPH Asia 2021 Posters},

articleno = {16},

numpages = {2},

keywords = {Mixed Reality remote collaboration, gaze visualization, speech input},

location = {Tokyo, Japan},

series = {SA '21 Posters}

}

In this poster we present eyemR-Talk, a Mixed Reality (MR) collaboration system that uses speech input to trigger shared gaze visualisations between remote users. The system uses 360° panoramic video to support collaboration between a local user in the real world in an Augmented Reality (AR) view and a remote collaborator in Virtual Reality (VR). Using specific speech phrases to turn on virtual gaze visualisations, the system enables contextual speech-gaze interaction between collaborators. The overall benefit is to achieve more natural gaze awareness, leading to better communication and more effective collaboration.

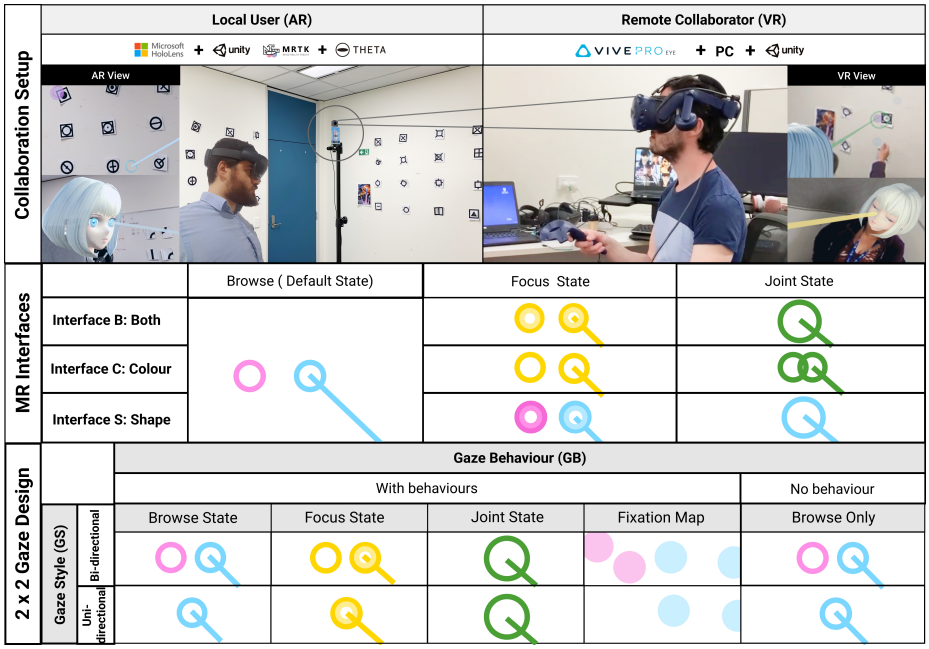

eyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 283, 1–7. https://doi.org/10.1145/3411763.3451844

@inproceedings{10.1145/3411763.3451844,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451844},

doi = {10.1145/3411763.3451844},

abstract = {Gaze is one of the most important communication cues in face-to-face collaboration. However, in remote collaboration, sharing dynamic gaze information is more difficult. In this research, we investigate how sharing gaze behavioural cues can improve remote collaboration in a Mixed Reality (MR) environment. To do this, we developed eyemR-Vis, a 360 panoramic Mixed Reality remote collaboration system that shows gaze behavioural cues as bi-directional spatial virtual visualisations shared between a local host and a remote collaborator. Preliminary results from an exploratory study indicate that using virtual cues to visualise gaze behaviour has the potential to increase co-presence, improve gaze awareness, encourage collaboration, and is inclined to be less physically demanding or mentally distracting.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {283},

numpages = {7},

keywords = {Human-Computer Interaction, Gaze Visualisation, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}

Gaze is one of the most important communication cues in face-to-face collaboration. However, in remote collaboration, sharing dynamic gaze information is more difficult. In this research, we investigate how sharing gaze behavioural cues can improve remote collaboration in a Mixed Reality (MR) environment. To do this, we developed eyemR-Vis, a 360 panoramic Mixed Reality remote collaboration system that shows gaze behavioural cues as bi-directional spatial virtual visualisations shared between a local host and a remote collaborator. Preliminary results from an exploratory study indicate that using virtual cues to visualise gaze behaviour has the potential to increase co-presence, improve gaze awareness, encourage collaboration, and is inclined to be less physically demanding or mentally distracting.

eyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 188, 1–4. https://doi.org/10.1145/3411763.3451545

@inproceedings{10.1145/3411763.3451545,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451545},

doi = {10.1145/3411763.3451545},

abstract = {This demonstration shows eyemR-Vis, a 360 panoramic Mixed Reality collaboration system that translates gaze behavioural cues to bi-directional visualisations between a local host (AR) and a remote collaborator (VR). The system is designed to share dynamic gaze behavioural cues as bi-directional spatial virtual visualisations between a local host and a remote collaborator. This enables richer communication of gaze through four visualisation techniques: browse, focus, mutual-gaze, and fixated circle-map. Additionally, our system supports simple bi-directional avatar interaction as well as panoramic video zoom. This makes interaction in the normally constrained remote task space more flexible and relatively natural. By showing visual communication cues that are physically inaccessible in the remote task space through reallocating and visualising the existing ones, our system aims to provide a more engaging and effective remote collaboration experience.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {188},

numpages = {4},

keywords = {Gaze Visualisation, Human-Computer Interaction, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}

This demonstration shows eyemR-Vis, a 360 panoramic Mixed Reality collaboration system that translates gaze behavioural cues to bi-directional visualisations between a local host (AR) and a remote collaborator (VR). The system is designed to share dynamic gaze behavioural cues as bi-directional spatial virtual visualisations between a local host and a remote collaborator. This enables richer communication of gaze through four visualisation techniques: browse, focus, mutual-gaze, and fixated circle-map. Additionally, our system supports simple bi-directional avatar interaction as well as panoramic video zoom. This makes interaction in the normally constrained remote task space more flexible and relatively natural. By showing visual communication cues that are physically inaccessible in the remote task space through reallocating and visualising the existing ones, our system aims to provide a more engaging and effective remote collaboration experience.