Publications

- 2023

Brain activity during cybersickness: a scoping review

Eunhee Chang, Mark Billinghurst, Byounghyun Yoo

Chang, E., Billinghurst, M., & Yoo, B. (2023). Brain activity during cybersickness: a scoping review. Virtual Reality, 1-25.

@article{chang2023brain,

title={Brain activity during cybersickness: a scoping review},

author={Chang, Eunhee and Billinghurst, Mark and Yoo, Byounghyun},

journal={Virtual Reality},

pages={1--25},

year={2023},

publisher={Springer}

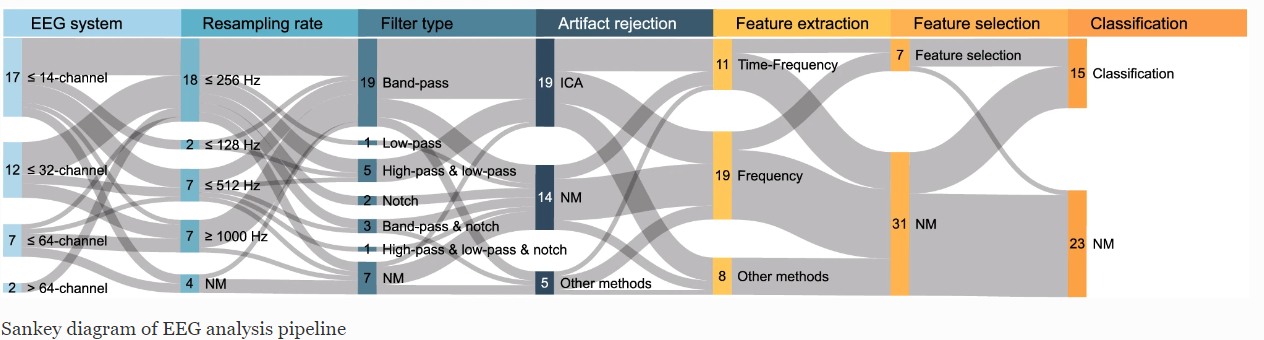

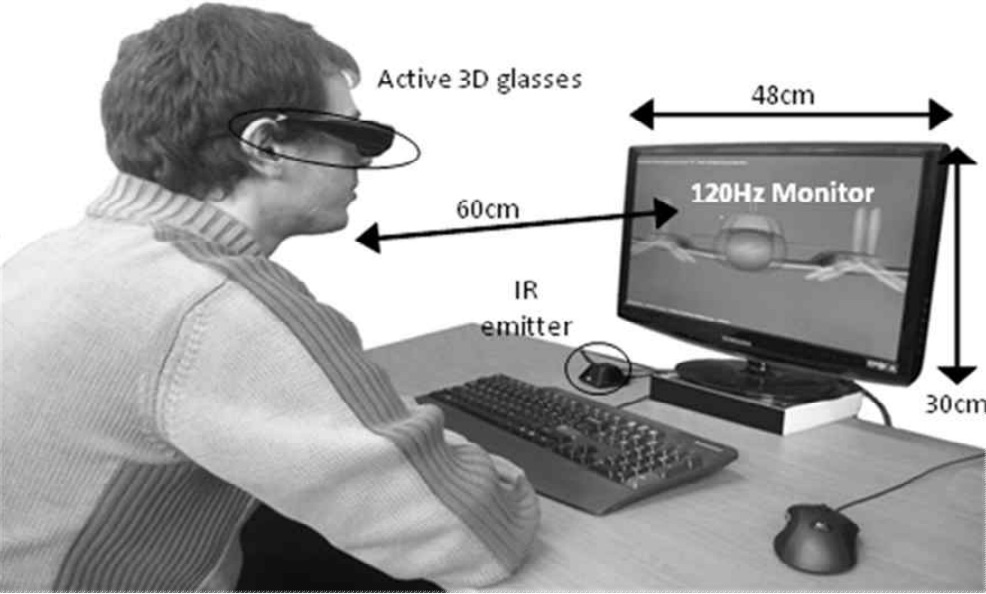

}Virtual reality (VR) experiences can cause a range of negative symptoms such as nausea, disorientation, and oculomotor discomfort, which is collectively called cybersickness. Previous studies have attempted to develop a reliable measure for detecting cybersickness instead of using questionnaires, and electroencephalogram (EEG) has been regarded as one of the possible alternatives. However, despite the increasing interest, little is known about which brain activities are consistently associated with cybersickness and what types of methods should be adopted for measuring discomfort through brain activity. We conducted a scoping review of 33 experimental studies in cybersickness and EEG found through database searches and screening. To understand these studies, we organized the pipeline of EEG analysis into four steps (preprocessing, feature extraction, feature selection, classification) and surveyed the characteristics of each step. The results showed that most studies performed frequency or time-frequency analysis for EEG feature extraction. A part of the studies applied a classification model to predict cybersickness indicating an accuracy between 79 and 100%. These studies tended to use HMD-based VR with a portable EEG headset for measuring brain activity. Most VR content shown was scenic views such as driving or navigating a road, and the age of participants was limited to people in their 20 s. This scoping review contributes to presenting an overview of cybersickness-related EEG research and establishing directions for future work.- 2022

NapWell: An EOG-based Sleep Assistant Exploring the Effects of Virtual Reality on Sleep Onset

Yun Suen Pai, Marsel L. Bait, Juyoung Lee, Jingjing Xu, Roshan L. Peiris, Woontack Woo, Mark Billinghurst & Kai Kunze

Pai, Y. S., Bait, M. L., Lee, J., Xu, J., Peiris, R. L., Woo, W., ... & Kunze, K. (2022). NapWell: an EOG-based sleep assistant exploring the effects of virtual reality on sleep onset. Virtual Reality, 26(2), 437-451.

@article{pai2022napwell,

title={NapWell: an EOG-based sleep assistant exploring the effects of virtual reality on sleep onset},

author={Pai, Yun Suen and Bait, Marsel L and Lee, Juyoung and Xu, Jingjing and Peiris, Roshan L and Woo, Woontack and Billinghurst, Mark and Kunze, Kai},

journal={Virtual Reality},

volume={26},

number={2},

pages={437--451},

year={2022},

publisher={Springer}

}

We present NapWell, a sleep assistant that uses virtual reality (VR) to decrease sleep onset latency by providing realistic imagery as a distraction prior to sleep onset. Our prototype was built from commercial hardware at relatively low cost, making it replicable for future work and paving the way for more low-cost EOG-VR devices for sleep assistance. We conducted a user study (n=20) comparing four sleep conditions: no device, a sleeping mask, a VR environment of the study room, and a VR environment preferred by the participant. During each condition, we recorded the electrooculography (EOG) signal and the sleep onset time using a finger tapping task (FTT). We found that VR significantly decreased sleep onset latency. We also developed a machine learning model based on EOG signals that can predict sleep onset with a cross-validated accuracy of 70.03%. This study demonstrates the feasibility of using VR as a tool to decrease sleep onset latency, as well as of using EOG sensors embedded in VR for automatic sleep detection.
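The abstract says sleep onset time was recorded with a finger tapping task but does not say how onset was derived from the taps. A common idea is that tapping stops once the participant falls asleep. The sketch below assumes exactly that: onset is taken as the last tap before the first inter-tap gap longer than a threshold. The function name and the 30 s threshold (`max_gap_s`) are hypothetical and not taken from the paper.

```python
from typing import Optional, Sequence


def sleep_onset_latency(
    tap_times_s: Sequence[float],
    lights_off_s: float,
    max_gap_s: float = 30.0,  # hypothetical threshold, not from the paper
) -> Optional[float]:
    """Seconds from lights-off to the last tap before tapping stops.

    Tapping is treated as 'stopped' at the first inter-tap gap longer than
    max_gap_s; if no such gap occurs, the final tap is used.
    Returns None when there are no taps at or after lights-off.
    """
    taps = sorted(t for t in tap_times_s if t >= lights_off_s)
    if not taps:
        return None
    last_tap = taps[0]
    for t in taps[1:]:
        if t - last_tap > max_gap_s:
            break  # long silence: assume the participant has fallen asleep
        last_tap = t
    return last_tap - lights_off_s
```

For example, `sleep_onset_latency([2, 5, 8, 11, 60, 62], lights_off_s=0.0)` returns `11.0`, because the 49 s gap after the tap at 11 s exceeds the threshold.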

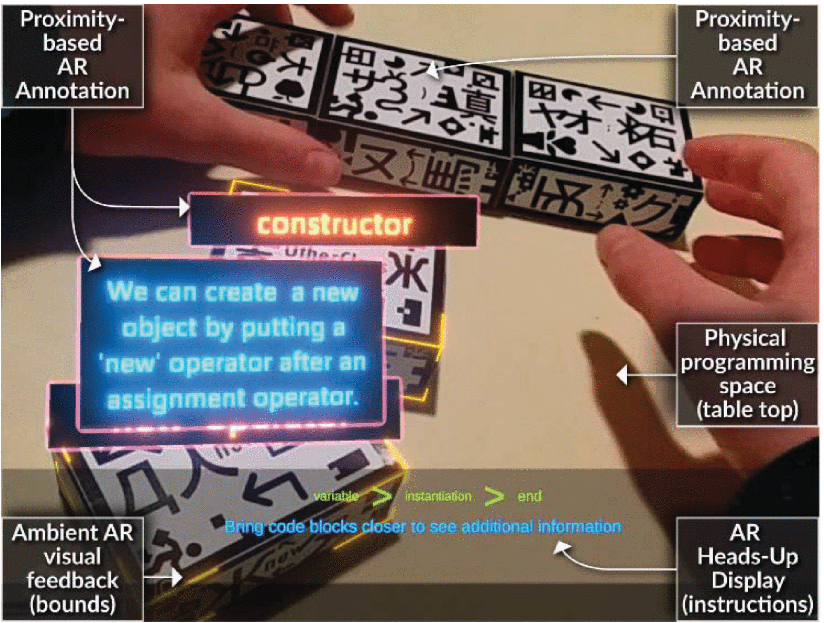

RaITIn: Radar-Based Identification for Tangible Interactions

Tamil Selvan Gunasekaran, Ryo Hajika, Yun Suen Pai, Eiji Hayashi, Mark Billinghurst

Gunasekaran, T. S., Hajika, R., Pai, Y. S., Hayashi, E., & Billinghurst, M. (2022, April). RaITIn: Radar-Based Identification for Tangible Interactions. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gunasekaran2022raitin,

title={RaITIn: Radar-Based Identification for Tangible Interactions},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Pai, Yun Suen and Hayashi, Eiji and Billinghurst, Mark},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

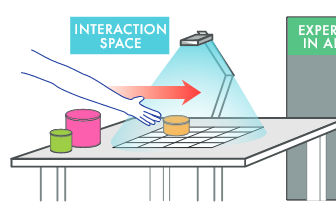

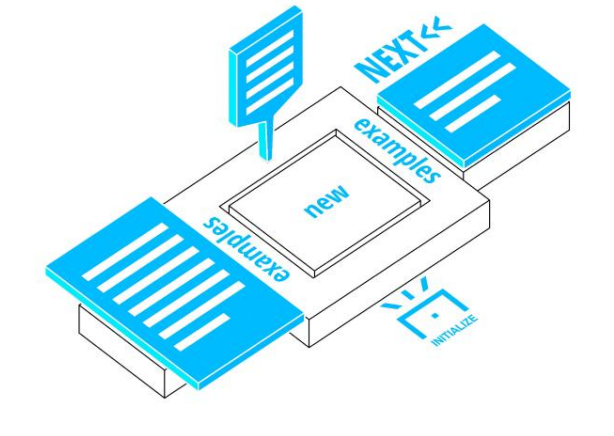

}Radar is primarily used for applications like tracking and large-scale ranging, and its use for object identification has been rarely explored. This paper introduces RaITIn, a radar-based identification (ID) method for tangible interactions. Unlike conventional radar solutions, RaITIn can track and identify objects on a tabletop scale. We use frequency modulated continuous wave (FMCW) radar sensors to classify different objects embedded with low-cost radar reflectors of varying sizes on a tabletop setup. We also introduce Stackable IDs, where different objects can be stacked and combined to produce unique IDs. The result allows RaITIn to accurately identify visually identical objects embedded with different low-cost reflector configurations. When combined with a radar’s ability for tracking, it creates novel tabletop interaction modalities. We discuss possible applications and areas for future work.
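As background on the FMCW sensing mentioned above: a linear chirp of bandwidth B and duration T_c has slope S = B / T_c, and a reflector at range R returns an echo delayed by 2R / c, which mixes down to a beat frequency f_b = 2SR / c. The sketch below inverts that relation and computes the standard range resolution c / (2B). The 4 GHz bandwidth and 100 µs chirp used in the example are illustrative assumptions, not RaITIn's actual radar configuration, and RaITIn's identification of reflector sizes involves more than ranging alone.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def fmcw_range_m(beat_freq_hz: float, bandwidth_hz: float, chirp_duration_s: float) -> float:
    """Distance to a reflector from the beat frequency of a linear FMCW chirp.

    slope S = B / T_c; beat frequency f_b = 2 * S * R / c, so R = c * f_b / (2 * S).
    """
    slope_hz_per_s = bandwidth_hz / chirp_duration_s
    return SPEED_OF_LIGHT_M_S * beat_freq_hz / (2.0 * slope_hz_per_s)


def range_resolution_m(bandwidth_hz: float) -> float:
    """Smallest range separation at which two reflectors fall in distinct bins: c / (2B)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)
```

With the assumed parameters, an 80 kHz beat frequency corresponds to roughly 0.3 m, and the range resolution is about 3.75 cm, which gives a sense of why reflector size and signal characteristics, not range alone, matter at tabletop scale.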

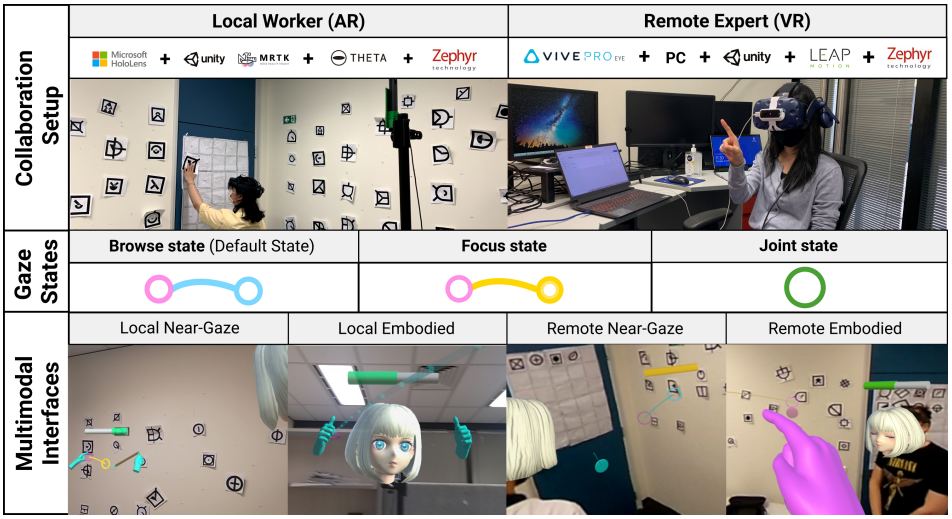

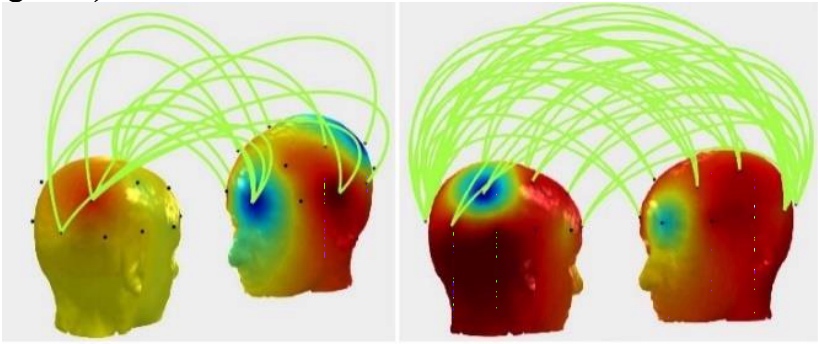

Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR

Ihshan Gumilar, Amit Barde, Prasanth Sasikumar, Mark Billinghurst, Ashkan F. Hayati, Gun Lee, Yuda Munarko, Sanjit Singh, Abdul Momin

Gumilar, I., Barde, A., Sasikumar, P., Billinghurst, M., Hayati, A. F., Lee, G., ... & Momin, A. (2022, April). Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gumilar2022inter,

title={Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR},

author={Gumilar, Ihshan and Barde, Amit and Sasikumar, Prasanth and Billinghurst, Mark and Hayati, Ashkan F and Lee, Gun and Munarko, Yuda and Singh, Sanjit and Momin, Abdul},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

}

Brain activity sometimes synchronises when people collaborate on real-world tasks. Understanding this process could lead to improvements in face-to-face and remote collaboration. In this paper, we report on an experiment exploring the relationship between eye gaze and inter-brain synchrony in Virtual Reality (VR). The experiment recruited pairs who were asked to perform finger-tracking exercises in VR under three gaze conditions (averted, direct, and natural) while their brain activity was recorded. We found that gaze direction has a significant effect on inter-brain synchrony during collaboration for this task in VR. This shows that representing natural gaze could influence inter-brain synchrony in VR, which may have implications for avatar design in social VR. We discuss the implications of our research and possible directions for future work.
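The abstract does not name the synchrony measure used. One widely used measure of inter-brain synchrony is the phase locking value (PLV), PLV = |mean over t of exp(i(φ_a(t) − φ_b(t)))|, which is 1 when the phase difference between two signals stays constant and near 0 when it is spread uniformly. The sketch below assumes the instantaneous phases have already been extracted (for example, from band-pass filtered EEG via a Hilbert transform); it is an illustration of PLV, not the paper's analysis.

```python
import cmath
from typing import Sequence


def phase_locking_value(phase_a: Sequence[float], phase_b: Sequence[float]) -> float:
    """PLV = |mean_t exp(i * (phi_a(t) - phi_b(t)))|, a value in [0, 1].

    Inputs are instantaneous phases in radians, one per time sample,
    for the same channel/band in each participant of a pair.
    """
    if len(phase_a) != len(phase_b) or not phase_a:
        raise ValueError("phase series must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phase_a, phase_b))
    return abs(total) / len(phase_a)
```

A constant phase offset (for example, one signal lagging the other by 0.5 rad throughout) still yields a PLV close to 1, because PLV measures the consistency of the phase difference rather than its size.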

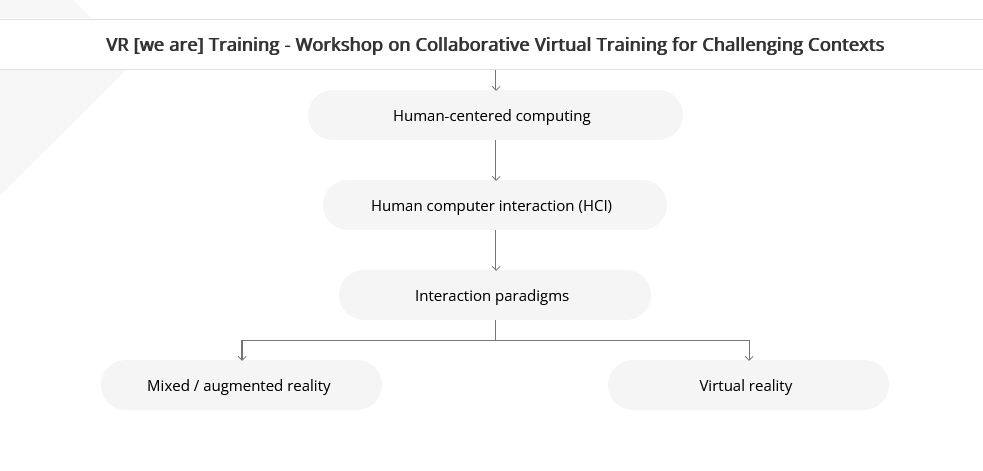

VR [we are] Training – Workshop on Collaborative Virtual Training for Challenging Contexts

Georg Regal, Helmut Schrom-Feiertag, Quynh Nguyen, Marco Aust, Markus Murtinger, Dorothé Smit, Manfred Tscheligi, Mark Billinghurst

Regal, G., Schrom-Feiertag, H., Nguyen, Q., Aust, M., Murtinger, M., Smit, D., ... & Billinghurst, M. (2022, April). VR [we are] Training-Workshop on Collaborative Virtual Training for Challenging Contexts. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-6).

@inproceedings{regal2022vr,

title={VR [we are] Training-Workshop on Collaborative Virtual Training for Challenging Contexts},

author={Regal, Georg and Schrom-Feiertag, Helmut and Nguyen, Quynh and Aust, Marco and Murtinger, Markus and Smit, Doroth{\'e} and Tscheligi, Manfred and Billinghurst, Mark},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--6},

year={2022}

}

Virtual reality provides great opportunities to simulate various environments and situations as reproducible and controllable training environments. Training is an inherently collaborative effort, with trainees and trainers working together to achieve specific goals. Recently, there has been considerable effort to use virtual training environments (VTEs) in many demanding training contexts, e.g., police, medical first responder, and firefighter training. In such contexts, trainers and trainees must take on various roles, such as supervisors, adaptors, role players, and observers, making collaboration complex but essential for training success. These social and multi-user aspects of collaborative VTEs have received little investigation so far. Therefore, we propose this workshop to discuss the potential and perspectives of VTEs for challenging training settings...

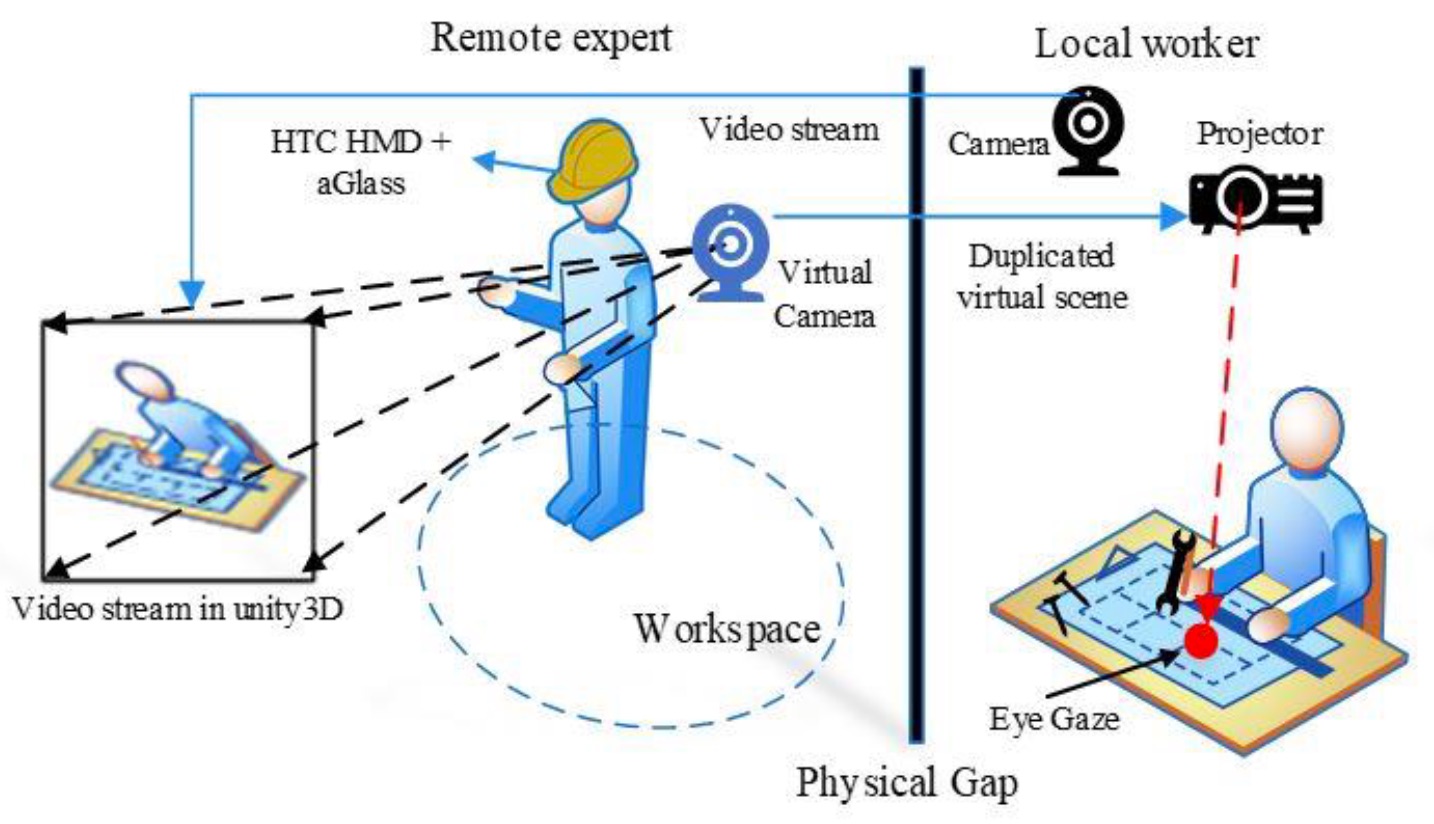

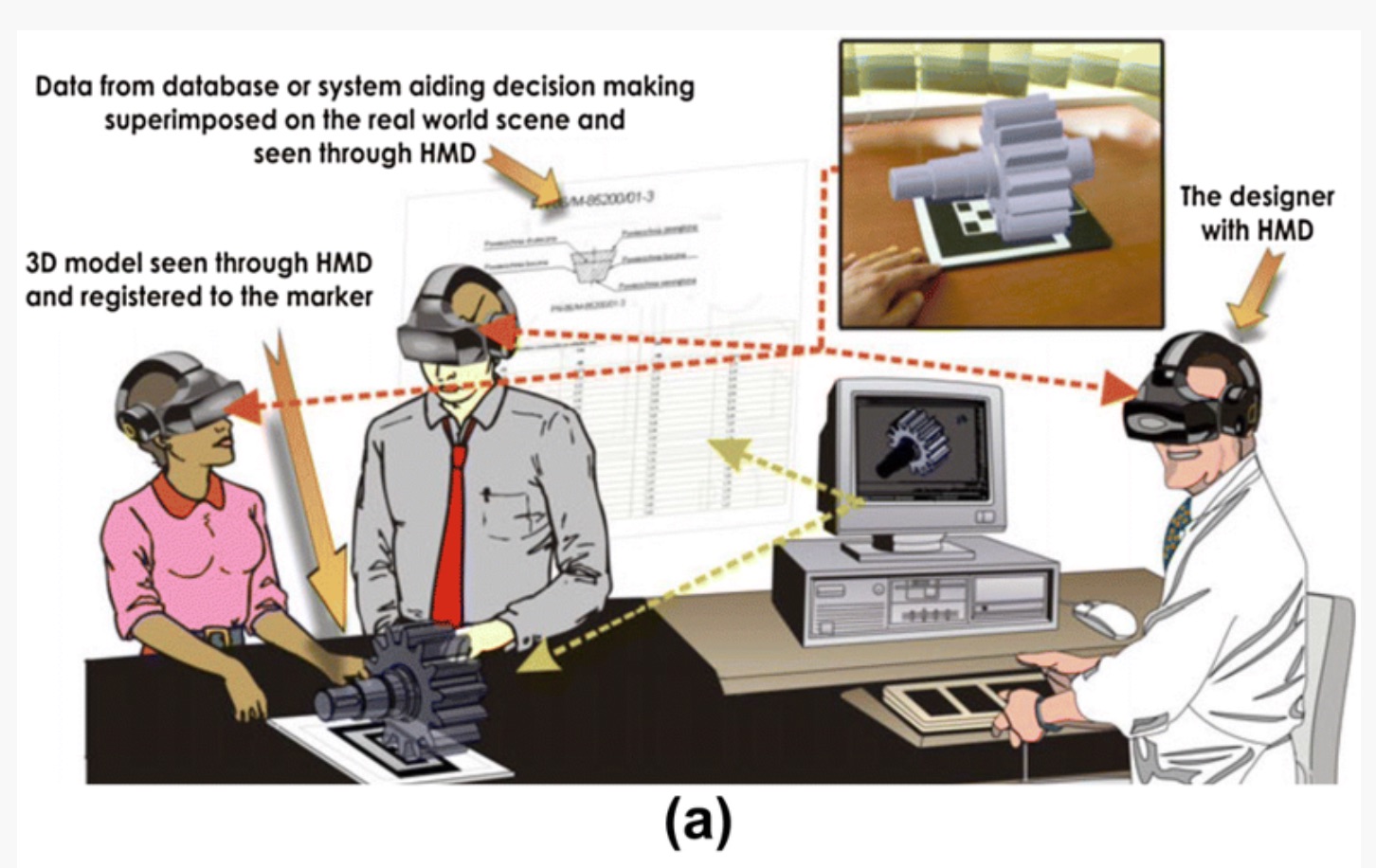

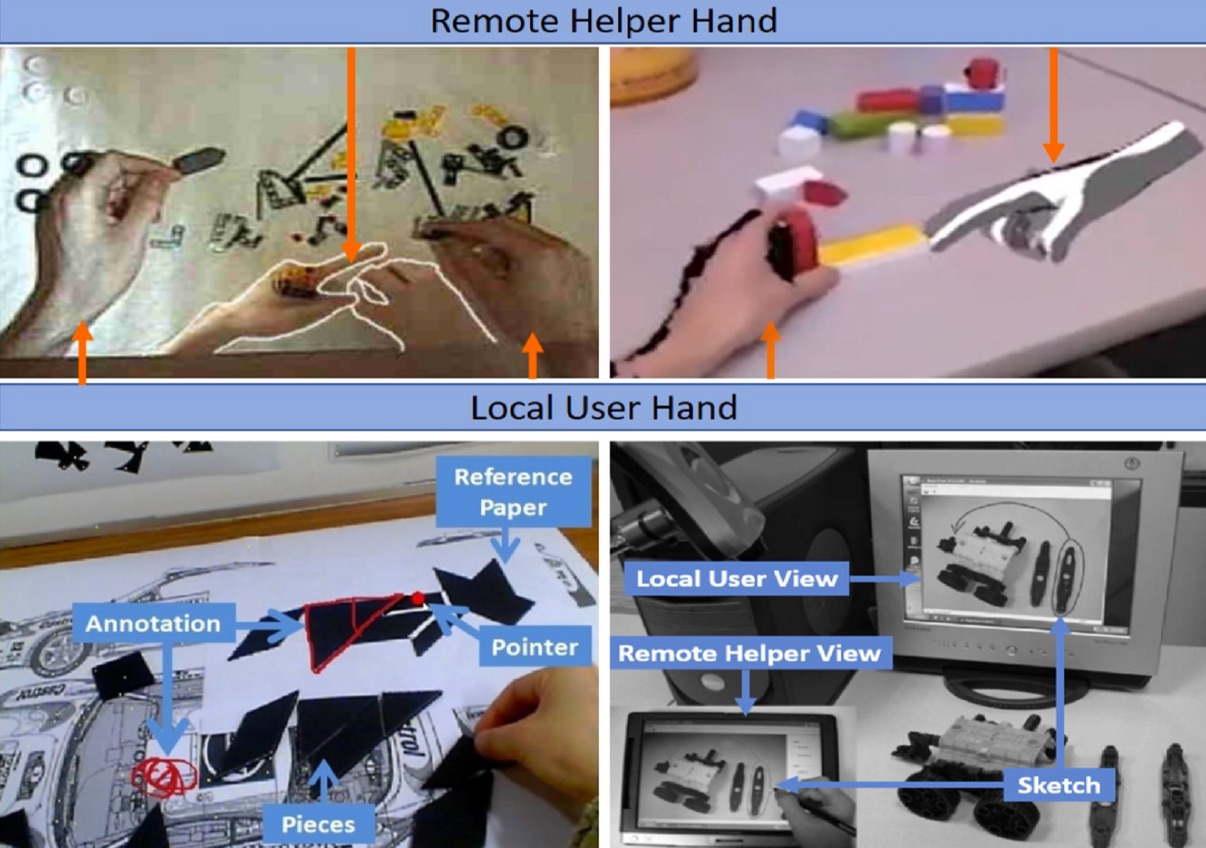

A review on communication cues for augmented reality based remote guidance

Weidong Huang, Mathew Wakefield, Troels Ammitsbøl Rasmussen, Seungwon Kim & Mark Billinghurst

Huang, W., Wakefield, M., Rasmussen, T. A., Kim, S., & Billinghurst, M. (2022). A review on communication cues for augmented reality based remote guidance. Journal on Multimodal User Interfaces, 1-18.

@article{huang2022review,

title={A review on communication cues for augmented reality based remote guidance},

author={Huang, Weidong and Wakefield, Mathew and Rasmussen, Troels Ammitsb{\o}l and Kim, Seungwon and Billinghurst, Mark},

journal={Journal on Multimodal User Interfaces},

pages={1--18},

year={2022},

publisher={Springer}

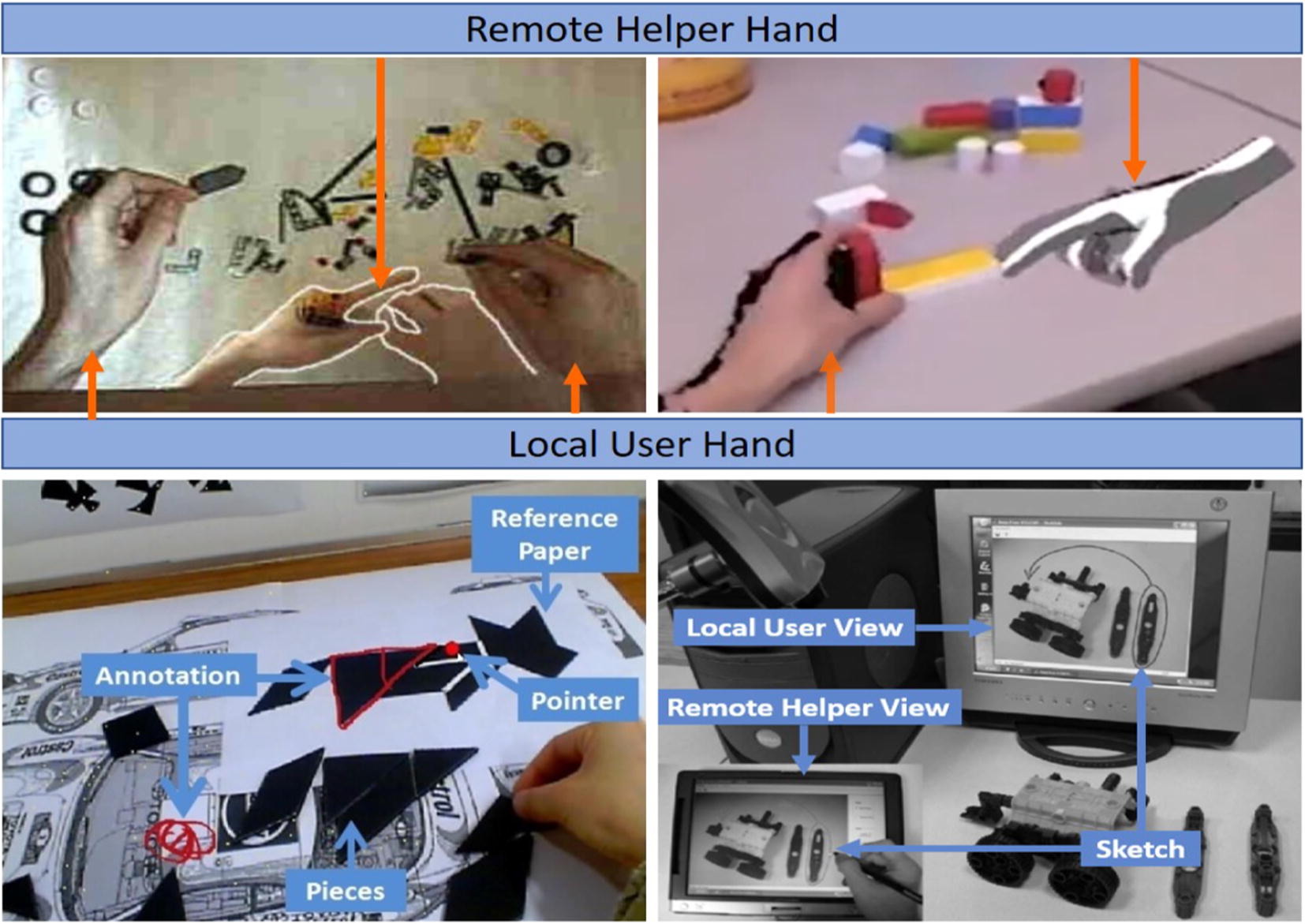

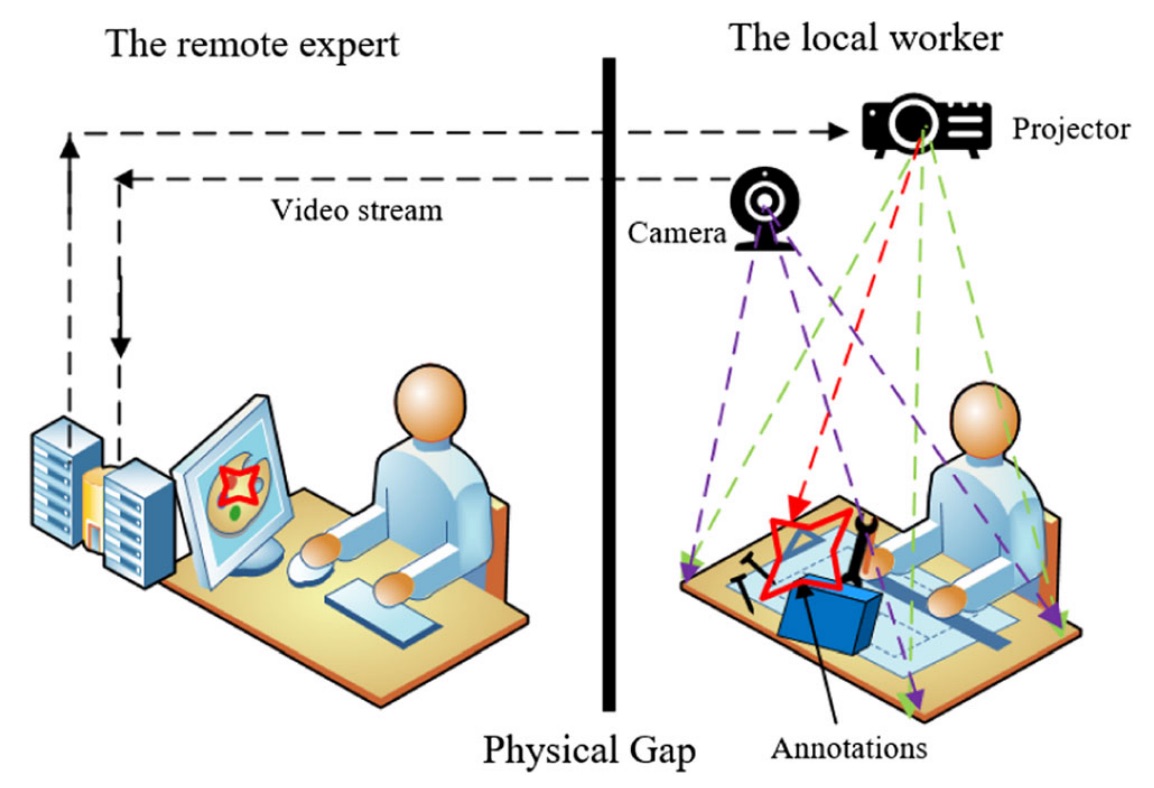

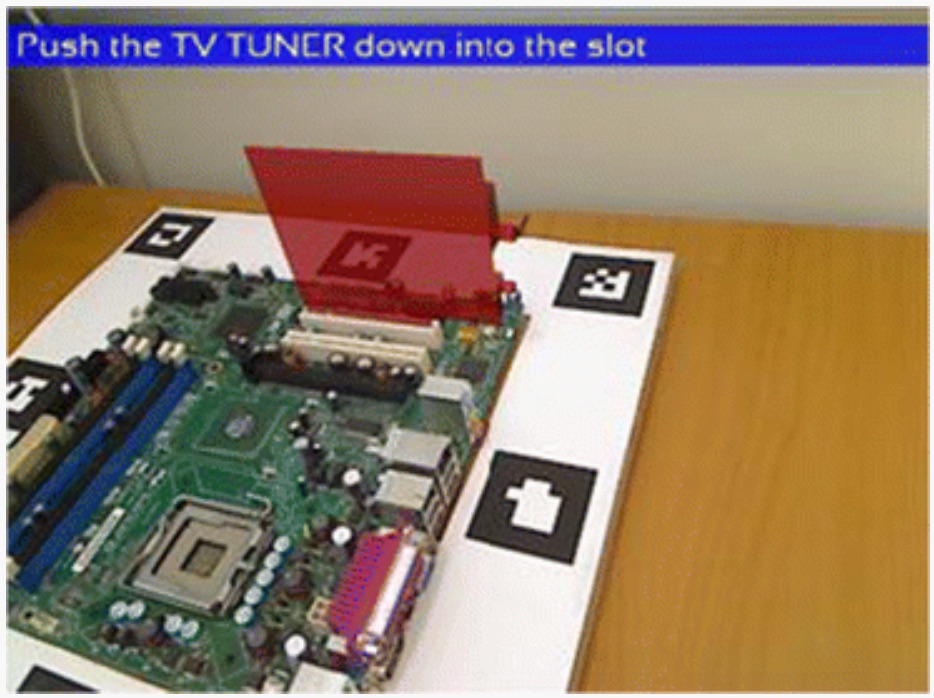

}Remote guidance on physical tasks is a type of collaboration in which a local worker is guided by a remote helper to operate on a set of physical objects. It has many applications in industrial sections such as remote maintenance and how to support this type of remote collaboration has been researched for almost three decades. Although a range of different modern computing tools and systems have been proposed, developed and used to support remote guidance in different application scenarios, it is essential to provide communication cues in a shared visual space to achieve common ground for effective communication and collaboration. In this paper, we conduct a selective review to summarize communication cues, approaches that implement the cues and their effects on augmented reality based remote guidance. We also discuss challenges and propose possible future research and development directions.

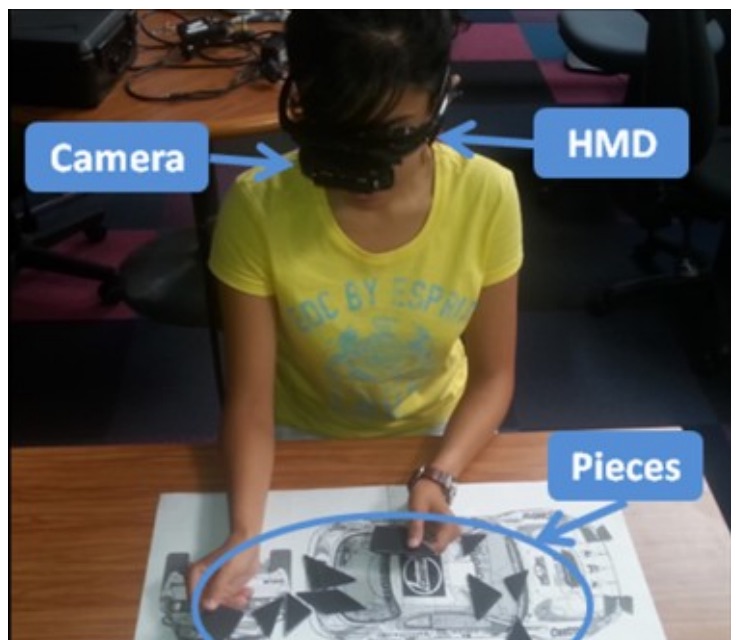

Seeing is believing: AR-assisted blind area assembly to support hand–eye coordination

Shuo Feng, Weiping He, Shaohua Zhang & Mark Billinghurst

Feng, S., He, W., Zhang, S., & Billinghurst, M. (2022). Seeing is believing: AR-assisted blind area assembly to support hand–eye coordination. The International Journal of Advanced Manufacturing Technology, 119(11), 8149-8158.

@article{feng2022seeing,

title={Seeing is believing: AR-assisted blind area assembly to support hand--eye coordination},

author={Feng, Shuo and He, Weiping and Zhang, Shaohua and Billinghurst, Mark},

journal={The International Journal of Advanced Manufacturing Technology},

volume={119},

number={11},

pages={8149--8158},

year={2022},

publisher={Springer}

}

The assembly stage is a vital phase in the production process, and many assembly operations are still performed manually. One challenge of manual assembly is blind area assembly, where visual obstruction by the hands or a part can lead to more errors and lower assembly efficiency. In this study, we developed an AR-assisted assembly system that solves this occlusion problem, allowing assembly workers to achieve comprehensive and precise hand–eye coordination (HEC). We also designed and conducted a user evaluation experiment to measure the learnability, usability, and mental effort of the system under different HEC modes. Results indicate that hand position is the first visual information that should be considered in blind areas. In addition, the Intact HEC mode can effectively reduce learning difficulty and mental burden during operation while also improving efficiency.

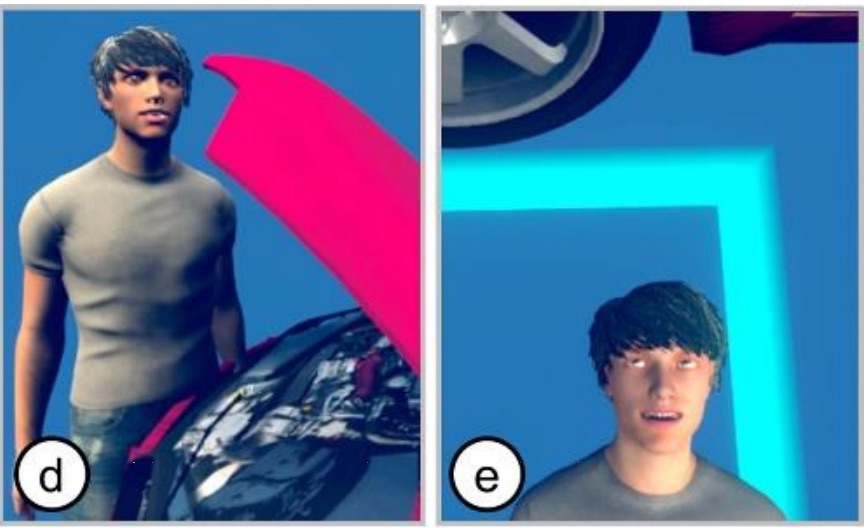

Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals

Arindam Dey, Amit Barde, Bowen Yuan, Ekansh Sareen, Chelsea Dobbins, Aaron Goh, Gaurav Gupta, Anubha Gupta, Mark Billinghurst

Dey, A., Barde, A., Yuan, B., Sareen, E., Dobbins, C., Goh, A., ... & Billinghurst, M. (2022). Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals. International Journal of Human-Computer Studies, 160, 102762.

@article{dey2022effects,

title={Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals},

author={Dey, Arindam and Barde, Amit and Yuan, Bowen and Sareen, Ekansh and Dobbins, Chelsea and Goh, Aaron and Gupta, Gaurav and Gupta, Anubha and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

volume={160},

pages={102762},

year={2022},

publisher={Elsevier}

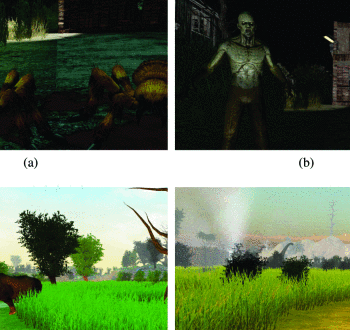

}Virtual Reality (VR) interfaces provide an immersive medium to interact with the digital world. Most VR interfaces require physical interactions using handheld controllers, but there are other alternative interaction methods that can support different use cases and users. Interaction methods in VR are primarily evaluated based on their usability, however, their differences in neurological and physiological effects remains less investigated. In this paper—along with other traditional qualitative matrices such as presence, affect, and system usability—we explore the neurophysiological effects—brain signals and electrodermal activity—of using an alternative facial expression interaction method to interact with VR interfaces. This form of interaction was also compared with traditional handheld controllers. Three different environments, with different experiences to interact with were used—happy (butterfly catching), neutral (object picking), and scary (zombie shooting). Overall, we noticed an effect of interaction methods on the gamma activities in the brain and on skin conductance. For some aspects of presence, facial expression outperformed controllers but controllers were found to be better than facial expressions in terms of usability.
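The abstract reports an effect on gamma activity without describing how it was quantified. A common way to express activity in an EEG frequency band is band power: the average spectral power of a signal epoch within the band. The sketch below computes a periodogram with a direct DFT so it needs only the standard library; the 30 to 45 Hz gamma range is an assumption (band limits vary between studies), and real pipelines would typically use an FFT or Welch's method instead.

```python
import math
from typing import Sequence, Tuple


def band_power(signal: Sequence[float], fs_hz: float, band_hz: Tuple[float, float]) -> float:
    """Mean periodogram power of `signal` for frequencies in [low, high) Hz.

    Uses a direct DFT, O(N * bins_in_band); fine for short epochs only.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    low, high = band_hz
    powers = []
    for k in range(n // 2 + 1):  # one-sided spectrum
        freq = k * fs_hz / n
        if low <= freq < high:
            re = sum(v * math.cos(2 * math.pi * k * t / n) for t, v in enumerate(x))
            im = -sum(v * math.sin(2 * math.pi * k * t / n) for t, v in enumerate(x))
            powers.append((re * re + im * im) / n)
    return sum(powers) / len(powers) if powers else 0.0
```

For a 1 s epoch sampled at 256 Hz containing a pure 40 Hz sine, the power in an assumed 30 to 45 Hz gamma band is clearly nonzero while the 8 to 13 Hz alpha band is essentially zero.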

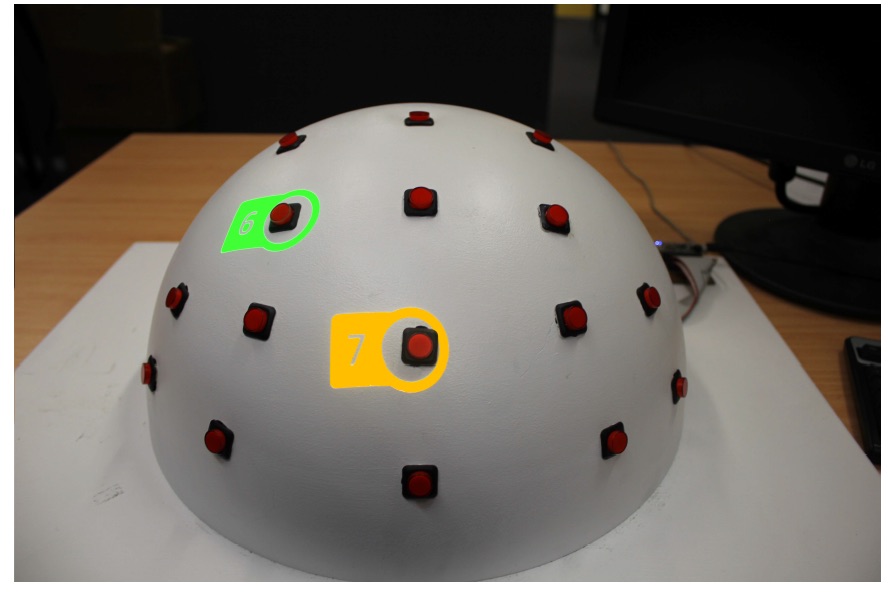

HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality

Li Zhang, Weiping He, Zhiwei Cao, Shuxia Wang, Huidong Bai, Mark Billinghurst

Zhang, L., He, W., Cao, Z., Wang, S., Bai, H., & Billinghurst, M. (2022). HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality. International Journal of Human–Computer Interaction, 1-15.

@article{zhang2022hapticproxy,

title={HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality},

author={Zhang, Li and He, Weiping and Cao, Zhiwei and Wang, Shuxia and Bai, Huidong and Billinghurst, Mark},

journal={International Journal of Human--Computer Interaction},

pages={1--15},

year={2022},

publisher={Taylor \& Francis}

}

Consistent visual and haptic feedback is an important way to improve the user experience when interacting with virtual objects. However, perception in Augmented Reality (AR) mainly comes from visual cues and amorphous tactile feedback. This work explores how to simulate positional vibrotactile feedback (PVF) with multiple vibration motors when colliding with virtual objects in AR. By attaching spatially distributed vibration motors to a physical haptic proxy, users can obtain an augmented collision experience with positional vibration sensations from the point of contact with virtual objects. We first developed a prototype system and conducted a user study to optimize the design parameters. We then investigated the effect of PVF on user performance and experience in a virtual-real object alignment task in the AR environment. We found that this approach could significantly reduce the alignment offset between virtual and physical objects with a tolerable increase in task completion time. With the PVF cue, participants obtained a more comprehensive perception of the offset direction, more useful information, and a more authentic AR experience.
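The abstract does not specify how vibration intensity is distributed across the motors to convey the contact position. One simple, hypothetical scheme is inverse-distance weighting: motors closer to the contact point vibrate more strongly. The sketch below illustrates that idea only; the function name, the exponent `power`, and the normalisation to a sum of 1 are assumptions, not HapticProxy's design.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # motor or contact position in metres


def motor_amplitudes(
    motors: Sequence[Point],
    contact: Point,
    power: float = 2.0,  # hypothetical fall-off exponent
    eps: float = 1e-9,   # avoids division by zero when contact is on a motor
) -> List[float]:
    """Hypothetical inverse-distance weighting of vibration intensity.

    Motors nearer the virtual contact point get larger amplitudes;
    the returned amplitudes are normalised to sum to 1.
    """
    weights = [1.0 / (math.dist(m, contact) ** power + eps) for m in motors]
    total = sum(weights)
    return [w / total for w in weights]
```

A larger `power` concentrates vibration on the nearest motor, which would make the perceived contact point sharper at the cost of smoother transitions as the contact point moves.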

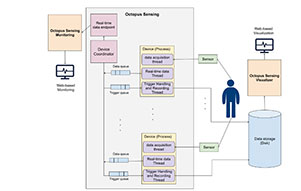

Octopus Sensing: A Python library for human behavior studies

Nastaran Saffaryazdi, Aidin Gharibnavaz, Mark Billinghurst

Saffaryazdi, N., Gharibnavaz, A., & Billinghurst, M. (2022). Octopus Sensing: A Python library for human behavior studies. Journal of Open Source Software, 7(71), 4045.

@article{saffaryazdi2022octopus,

title={Octopus Sensing: A Python library for human behavior studies},

author={Saffaryazdi, Nastaran and Gharibnavaz, Aidin and Billinghurst, Mark},

journal={Journal of Open Source Software},

volume={7},

number={71},

pages={4045},

year={2022}

}

Designing user studies and collecting data is critical to exploring and automatically recognizing human behavior. A range of sensors can currently be used to capture heart rate, brain activity, skin conductance, and a variety of other physiological cues. These data can be combined to provide information about a user’s emotional state, cognitive load, or other factors. However, even when data are collected correctly, synchronizing data from multiple sensors is time-consuming and error-prone. Failure to record and synchronize data correctly is likely to result in errors in analysis and results, as well as the need to repeat time-consuming experiments several times. To overcome these challenges, Octopus Sensing facilitates synchronous data acquisition from various sources and provides utilities for designing user studies, real-time monitoring, and offline data visualization.

The primary aim of Octopus Sensing is to provide a simple scripting interface so that people with basic or no software development skills can define sensor-based experiment scenarios with less effort.
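To illustrate the synchronization problem described above, the sketch below aligns samples from one sensor stream to a set of reference timestamps by picking the nearest sample in time and rejecting matches that are too far apart. This is a generic, hypothetical example; it is not Octopus Sensing's API and does not describe how the library implements synchronization. It also assumes all timestamps already share a common clock, which in practice is the harder part of the problem.

```python
import bisect
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def align_nearest(
    reference_times: Sequence[float],
    stream: Sequence[Sample],
    max_skew_s: float = 0.05,  # hypothetical tolerance
) -> List[Tuple[float, Optional[float]]]:
    """For each reference timestamp, take the stream sample closest in time.

    Returns (reference_time, value) pairs; value is None when the nearest
    sample is more than max_skew_s away or the stream is empty.
    """
    samples = sorted(stream)
    times = [t for t, _ in samples]
    aligned: List[Tuple[float, Optional[float]]] = []
    for ref in reference_times:
        i = bisect.bisect_left(times, ref)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        best = min(candidates, key=lambda j: abs(times[j] - ref), default=None)
        if best is not None and abs(times[best] - ref) <= max_skew_s:
            aligned.append((ref, samples[best][1]))
        else:
            aligned.append((ref, None))
    return aligned
```

Marking unmatched timestamps as `None` rather than silently reusing a distant sample makes gaps in a recording visible during analysis.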

Emotion Recognition in Conversations Using Brain and Physiological Signals

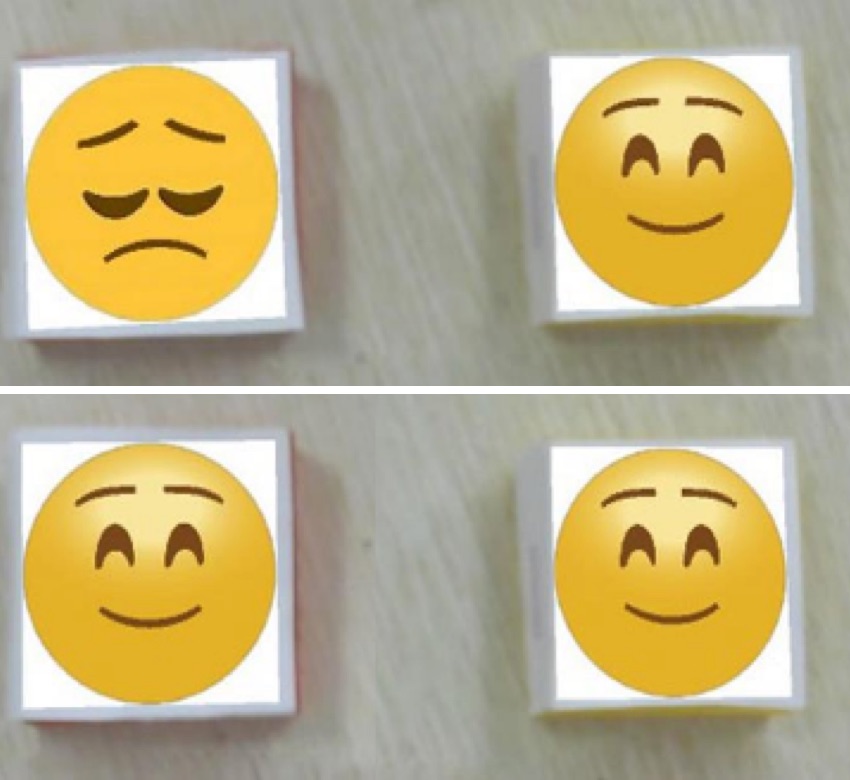

Nastaran Saffaryazdi, Yenushka Goonesekera, Nafiseh Saffaryazdi, Nebiyou Daniel Hailemariam, Ebasa Girma Temesgen, Suranga Nanayakkara, Elizabeth Broadbent, Mark Billinghurst

Saffaryazdi, N., Goonesekera, Y., Saffaryazdi, N., Hailemariam, N. D., Temesgen, E. G., Nanayakkara, S., ... & Billinghurst, M. (2022, March). Emotion Recognition in Conversations Using Brain and Physiological Signals. In 27th International Conference on Intelligent User Interfaces (pp. 229-242).

@inproceedings{saffaryazdi2022emotion,

title={Emotion recognition in conversations using brain and physiological signals},

author={Saffaryazdi, Nastaran and Goonesekera, Yenushka and Saffaryazdi, Nafiseh and Hailemariam, Nebiyou Daniel and Temesgen, Ebasa Girma and Nanayakkara, Suranga and Broadbent, Elizabeth and Billinghurst, Mark},

booktitle={27th International Conference on Intelligent User Interfaces},

pages={229--242},

year={2022}

}

Emotions are complex psycho-physiological processes related to numerous external and internal changes in the body. They play an essential role in human-human interaction and can be important for human-machine interfaces. Automatically recognizing emotions in conversation could be applied in many domains, such as healthcare, education, social interaction, and entertainment. Facial expressions, speech, and body gestures are the primary cues that have been widely used for recognizing emotions in conversation. However, these cues can be ineffective because they cannot reveal underlying emotions when people involuntarily or deliberately conceal them. Researchers have shown that analyzing brain activity and physiological signals can lead to more reliable emotion recognition, since these signals generally cannot be controlled. However, these bodily responses in emotional situations have rarely been explored in interactive tasks such as conversations. This paper explores and discusses the performance and challenges of using brain activity and other physiological signals to recognize emotions in a face-to-face conversation. We present an experimental setup for stimulating spontaneous emotions through face-to-face conversation and for creating a dataset of brain and physiological activity. We then describe our analysis strategies for recognizing emotions using Electroencephalography (EEG), Photoplethysmography (PPG), and Galvanic Skin Response (GSR) signals in subject-dependent and subject-independent approaches. Finally, we describe new directions for future research in conversational emotion recognition and the limitations and challenges of our approach.
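The abstract contrasts subject-dependent and subject-independent approaches. In a subject-independent evaluation, the model is always tested on participants it never saw during training; leave-one-subject-out (LOSO) cross-validation is one common way to do this. The sketch below generates LOSO folds from per-sample subject IDs. It is a generic illustration; the paper may use a different evaluation protocol.

```python
from typing import Hashable, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject.

    Each sample index appears in the test set of exactly one fold, and a
    subject's samples never appear in both train and test of the same fold.
    """
    for held_out in dict.fromkeys(subject_ids):  # unique IDs, first-seen order
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test
```

A subject-dependent evaluation, by contrast, would split each participant's own samples into training and test sets, which usually gives higher accuracy but says less about generalization to new people.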

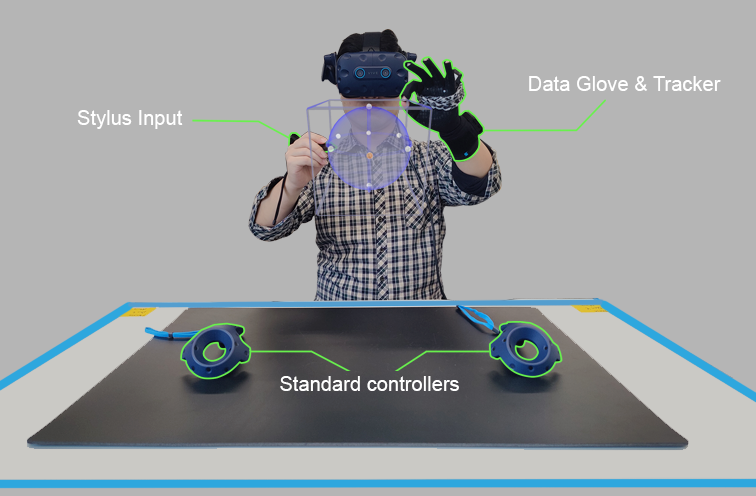

Asymmetric interfaces with stylus and gesture for VR sketching

Qianyuan Zou, Huidong Bai, Lei Gao, Allan Fowler, Mark Billinghurst

Zou, Q., Bai, H., Gao, L., Fowler, A., & Billinghurst, M. (2022, March). Asymmetric interfaces with stylus and gesture for VR sketching. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 968-969). IEEE.

@inproceedings{zou2022asymmetric,

title={Asymmetric interfaces with stylus and gesture for VR sketching},

author={Zou, Qianyuan and Bai, Huidong and Gao, Lei and Fowler, Allan and Billinghurst, Mark},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={968--969},

year={2022},

organization={IEEE}

}

Virtual Reality (VR) can be used for design and artistic applications. However, traditional symmetric input devices are not specifically designed as creative tools and may not fully meet artists' needs. In this demonstration, we present a variety of tool-based asymmetric VR interfaces that help artists create artwork with better performance and less effort. These interaction methods allow artists to hold different tools in each hand, such as wearing a data glove on the left hand and holding a stylus in the right hand. We demonstrate this with a stylus- and glove-based sketching interface. A pilot study showed that most users preferred to create art with different tools in each hand.

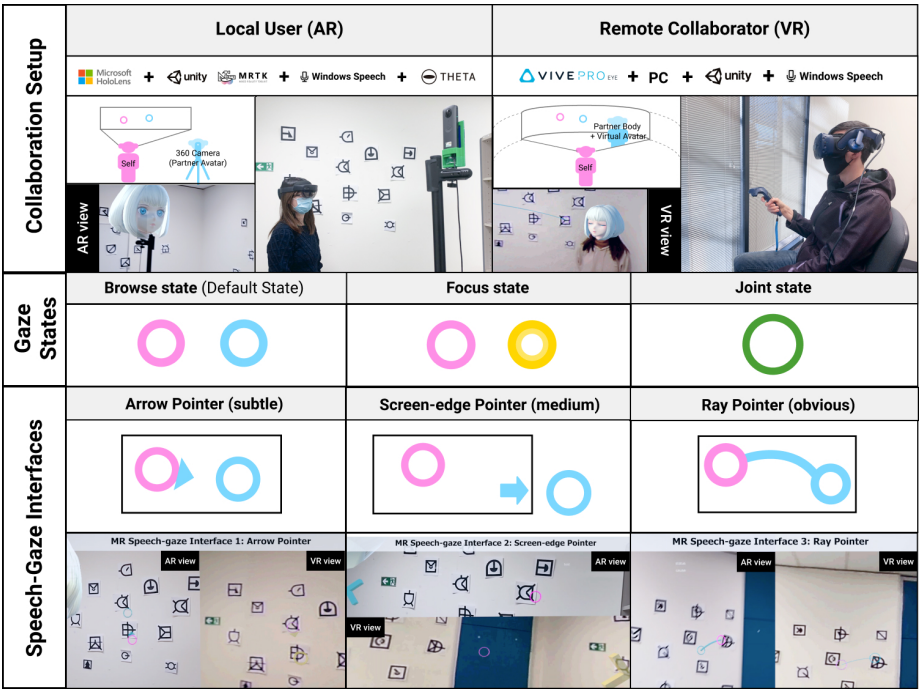

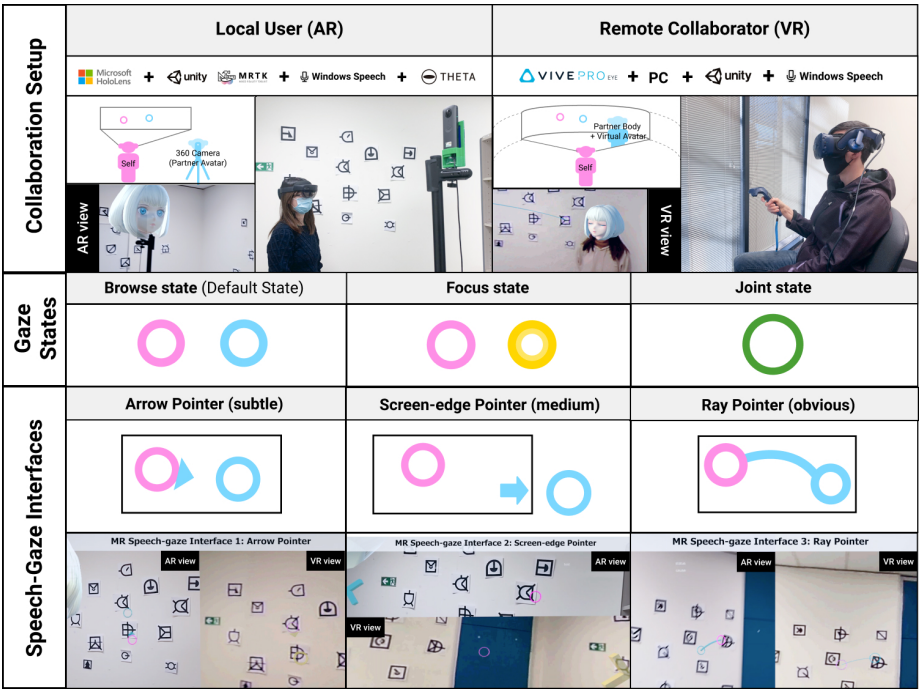

Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration

Allison Jing, Gun Lee, Mark Billinghurst

Jing, A., Lee, G., & Billinghurst, M. (2022, March). Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 250-259). IEEE.

@inproceedings{jing2022using,

title={Using Speech to Visualise Shared Gaze Cues in MR Remote Collaboration},

author={Jing, Allison and Lee, Gun and Billinghurst, Mark},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={250--259},

year={2022},

organization={IEEE}

}

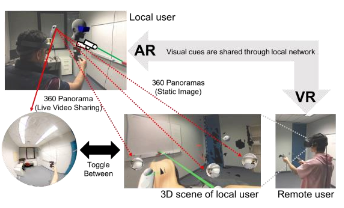

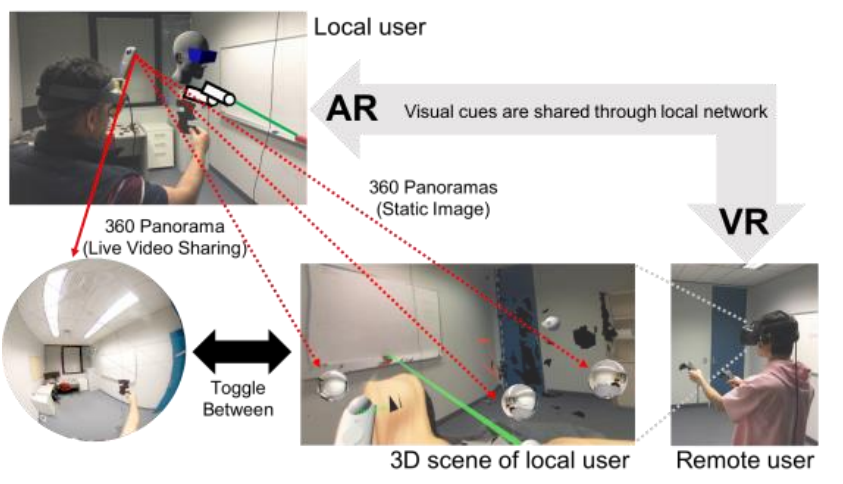

In this paper, we present a 360° panoramic Mixed Reality (MR) system that visualises shared gaze cues using contextual speech input to improve task coordination. We conducted two studies to evaluate the design of the MR gaze-speech interface, exploring combinations of visualisation style and context control level. Findings from the first study suggest that an explicit visual form that directly connects the collaborators’ shared gaze to the contextual conversation is preferred. The second study indicates that the gaze-speech modality shortens the time needed to coordinate attention on a shared interest, making communication more natural and collaboration more effective. Qualitative feedback also suggests that a constant joint gaze indicator provides a consistent bi-directional view while establishing a sense of co-presence during task collaboration. We discuss the implications for the design of collaborative MR systems and directions for future research.

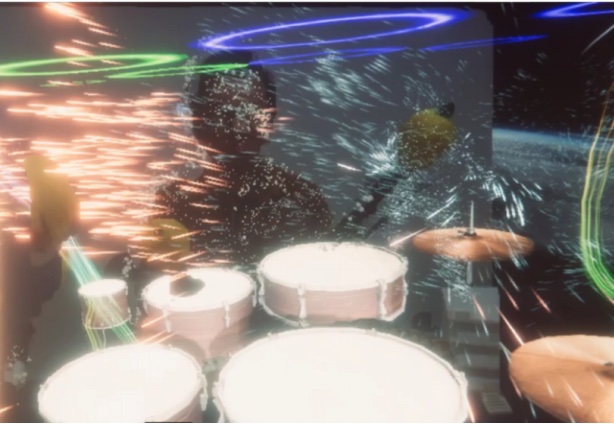

Jamming in MR: Towards Real-Time Music Collaboration in Mixed Reality

Ruben Schlagowski, Kunal Gupta, Silvan Mertes, Mark Billinghurst, Susanne Metzner, Elisabeth André

Schlagowski, R., Gupta, K., Mertes, S., Billinghurst, M., Metzner, S., & André, E. (2022, March). Jamming in MR: Towards Real-Time Music Collaboration in Mixed Reality. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 854-855). IEEE.

@inproceedings{schlagowski2022jamming,

title={Jamming in MR: towards real-time music collaboration in mixed reality},

author={Schlagowski, Ruben and Gupta, Kunal and Mertes, Silvan and Billinghurst, Mark and Metzner, Susanne and Andr{\'e}, Elisabeth},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={854--855},

year={2022},

organization={IEEE}

}

Recent pandemic-related contact restrictions have made it difficult for musicians to meet in person to make music. As a result, there has been increased demand for applications that enable remote, real-time music collaboration. One desirable goal is to give musicians a sense of social presence, making them feel that they are “on site” with their musical partners. We conducted a focus group study to investigate the impact of remote jamming on users' affect. We also gathered user requirements for a Mixed Reality system that enables real-time jamming and developed a prototype based on these findings.

Supporting Jury Understanding of Expert Evidence in a Virtual Environment

Carolin Reichherzer, Andrew Cunningham, Jason Barr, Tracey Coleman, Kurt McManus, Dion Sheppard, Scott Coussens, Mark Kohler, Mark Billinghurst, Bruce H. Thomas

Reichherzer, C., Cunningham, A., Barr, J., Coleman, T., McManus, K., Sheppard, D., ... & Thomas, B. H. (2022, March). Supporting Jury Understanding of Expert Evidence in a Virtual Environment. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 615-624). IEEE.

@inproceedings{reichherzer2022supporting,

title={Supporting Jury Understanding of Expert Evidence in a Virtual Environment},

author={Reichherzer, Carolin and Cunningham, Andrew and Barr, Jason and Coleman, Tracey and McManus, Kurt and Sheppard, Dion and Coussens, Scott and Kohler, Mark and Billinghurst, Mark and Thomas, Bruce H},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={615--624},

year={2022},

organization={IEEE}

}

This work investigates the use of Virtual Reality (VR) to present forensic evidence to the jury in a courtroom trial. We present the findings of a between-participants user study on comprehension of an expert statement, examining the benefits and issues of using VR compared to traditional courtroom presentation (still images). Participants listened to a forensic scientist explain bloodstain spatter patterns while viewing a mock crime scene either in VR or as still images in video format. Under these conditions, we compared understanding of the expert domain, mental effort, and content recall. We found that VR significantly improves the understanding of spatial information and knowledge acquisition. We also identify different patterns of user behaviour depending on the display method. We conclude with suggestions on how best to adapt evidence presentation to VR.

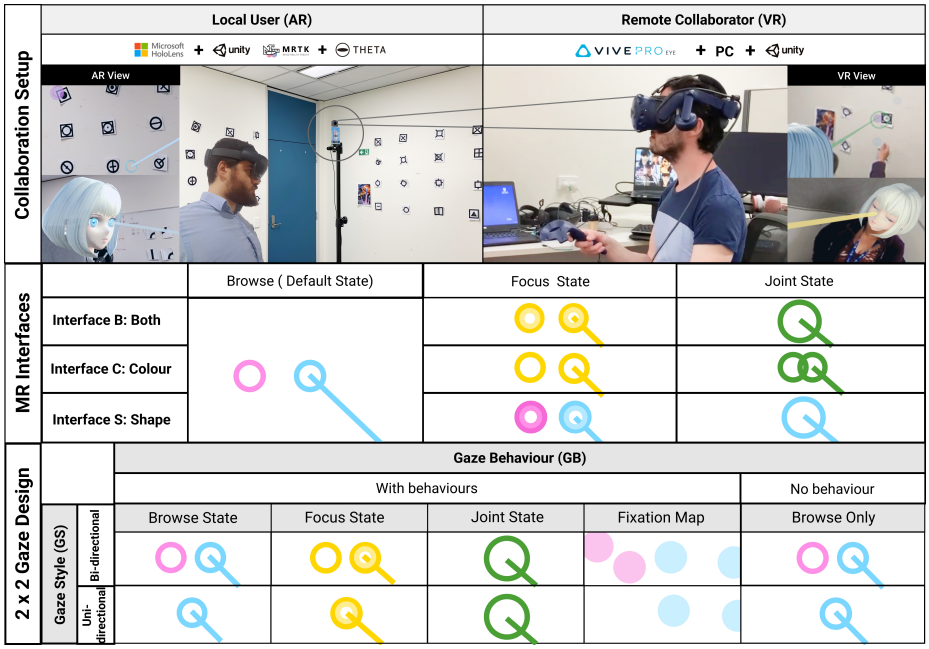

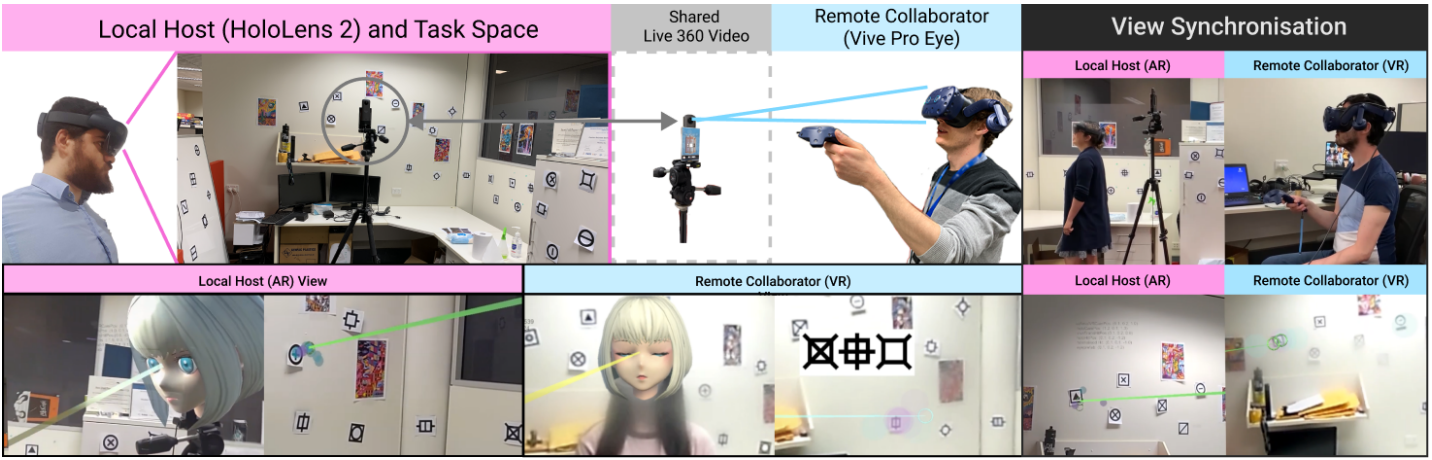

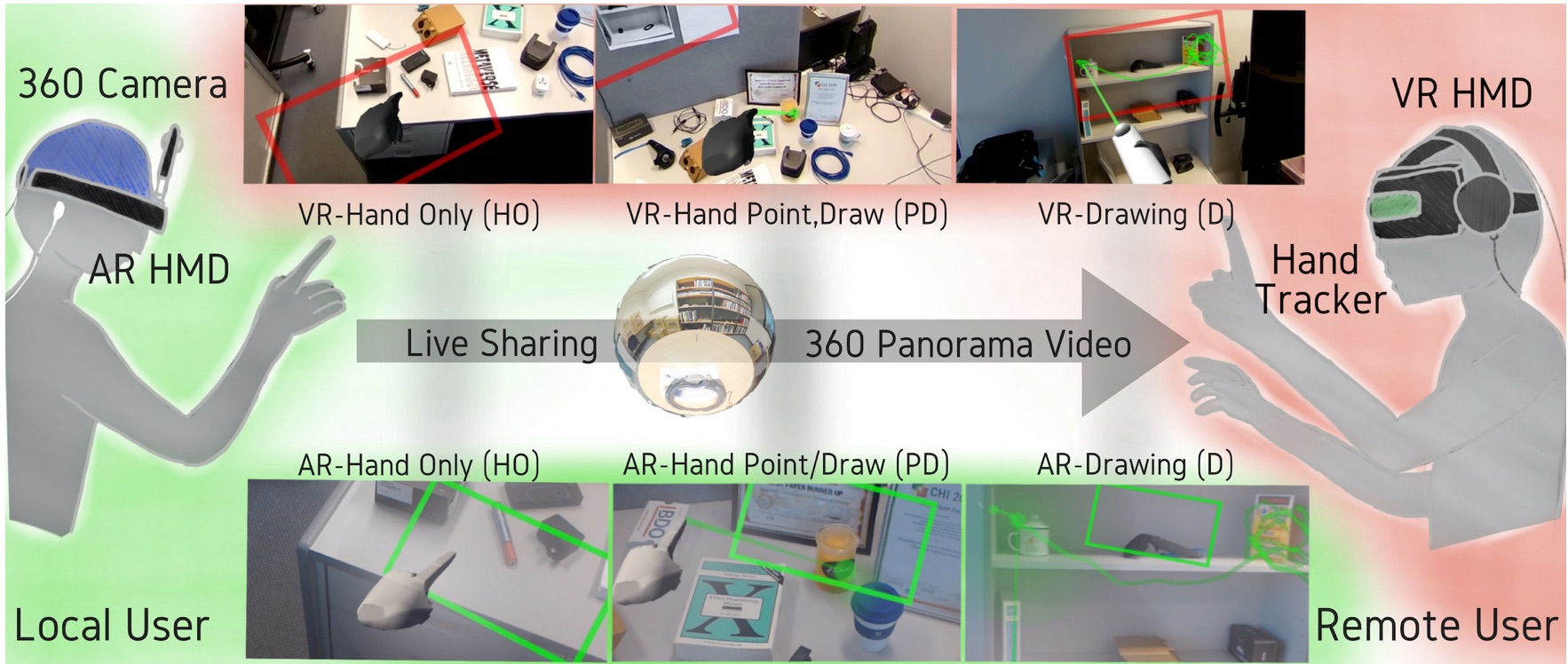

The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality

Allison Jing, Kieran May, Brandon Matthews, Gun Lee, Mark Billinghurst

Allison Jing, Kieran May, Brandon Matthews, Gun Lee, and Mark Billinghurst. 2022. The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality. Proc. ACM Hum.-Comput. Interact. 6, CSCW2, Article 463 (November 2022), 27 pages. https://doi.org/10.1145/3555564

@article{10.1145/3555564,

author = {Jing, Allison and May, Kieran and Matthews, Brandon and Lee, Gun and Billinghurst, Mark},

title = {The Impact of Sharing Gaze Behaviours in Collaborative Mixed Reality},

year = {2022},

issue_date = {November 2022},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {6},

number = {CSCW2},

url = {https://doi.org/10.1145/3555564},

doi = {10.1145/3555564},

abstract = {In a remote collaboration involving a physical task, visualising gaze behaviours may compensate for other unavailable communication channels. In this paper, we report on a 360° panoramic Mixed Reality (MR) remote collaboration system that shares gaze behaviour visualisations between a local user in Augmented Reality and a remote collaborator in Virtual Reality. We conducted two user studies to evaluate the design of MR gaze interfaces and the effect of gaze behaviour (on/off) and gaze style (bi-/uni-directional). The results indicate that gaze visualisations amplify meaningful joint attention and improve co-presence compared to a no-gaze condition. Gaze behaviour visualisations make communication less verbally complex, thereby lowering collaborators' cognitive load while improving mutual understanding. Users felt that bi-directional behaviour visualisation, showing both collaborators' gaze states, was the preferred condition since it enabled easy identification of shared interests and task progress.},

journal = {Proc. ACM Hum.-Comput. Interact.},

month = {nov},

articleno = {463},

numpages = {27},

keywords = {gaze visualization, mixed reality remote collaboration, human-computer interaction}

}

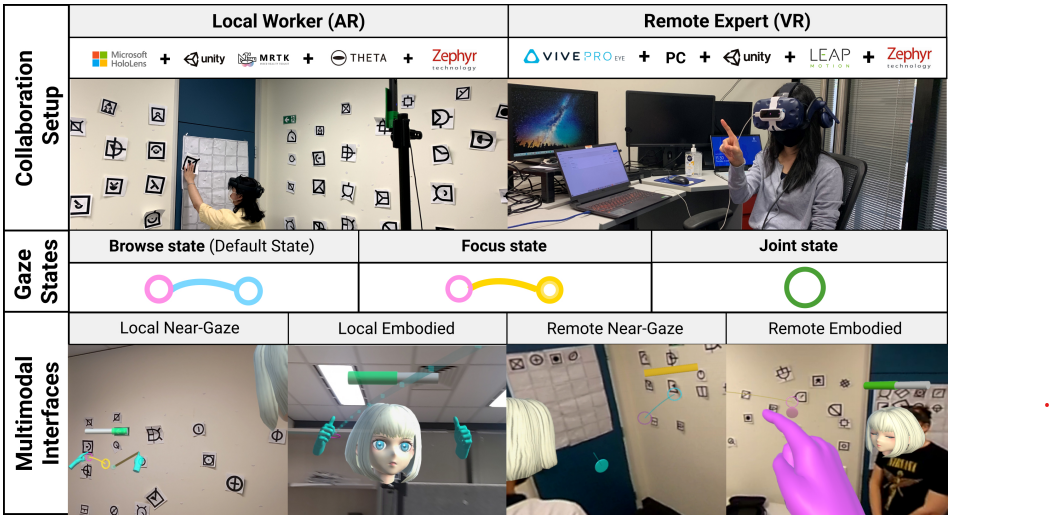

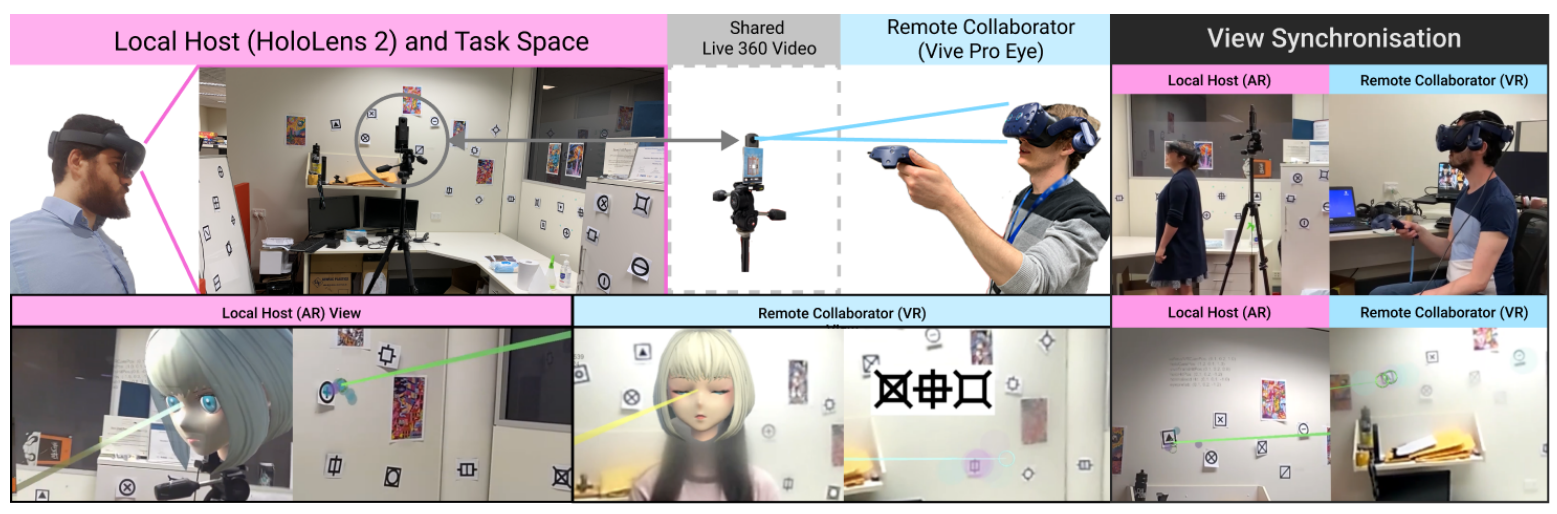

Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration

Allison Jing, Kunal Gupta, Jeremy McDade, Gun A. Lee, Mark Billinghurst

A. Jing, K. Gupta, J. McDade, G. A. Lee and M. Billinghurst, "Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration," 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, Singapore, 2022, pp. 837-846, doi: 10.1109/ISMAR55827.2022.00102.

@INPROCEEDINGS{9995367,

author={Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun A. and Billinghurst, Mark},

booktitle={2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

title={Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration},

year={2022},

volume={},

number={},

pages={837-846},

doi={10.1109/ISMAR55827.2022.00102}}

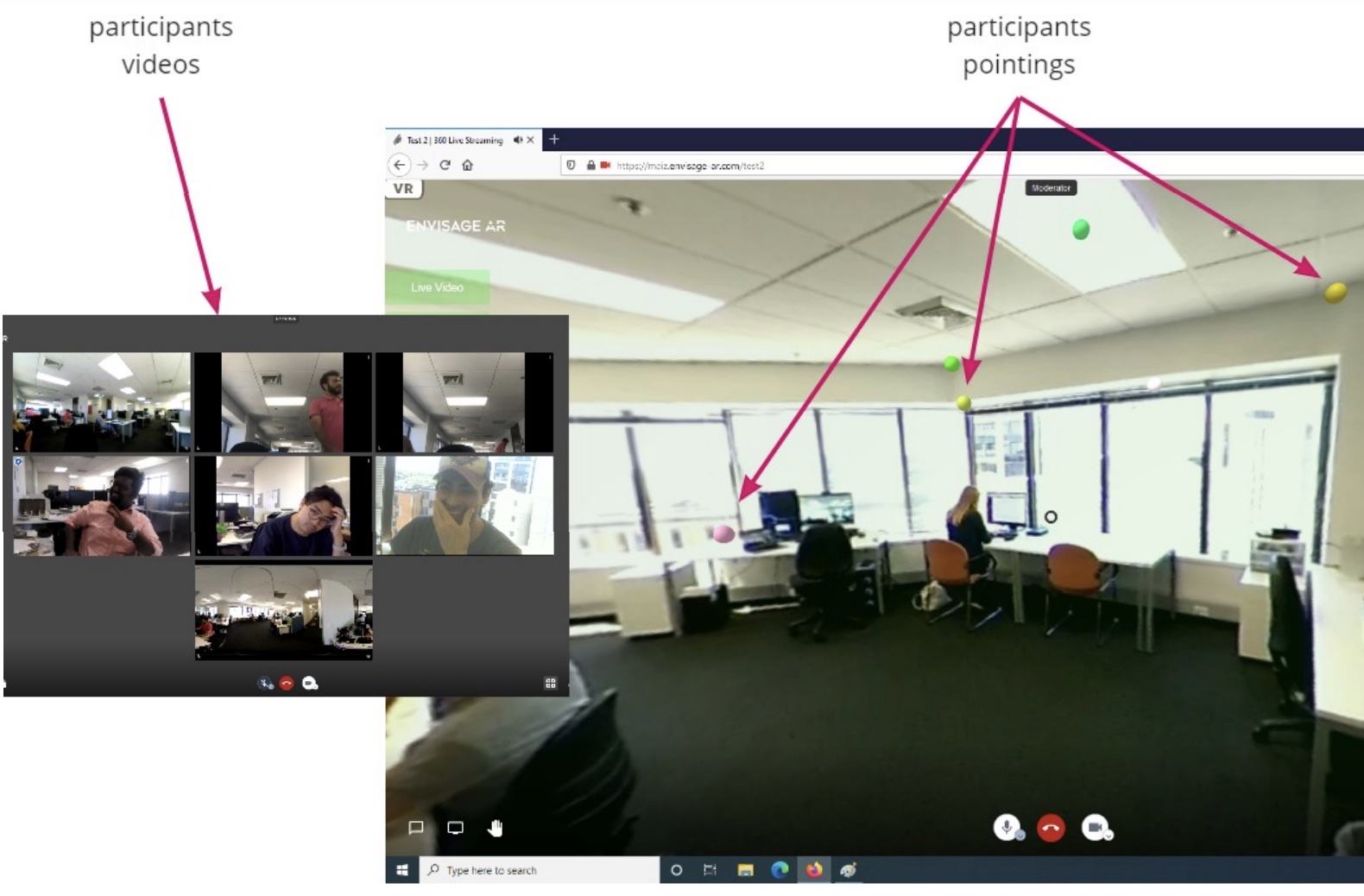

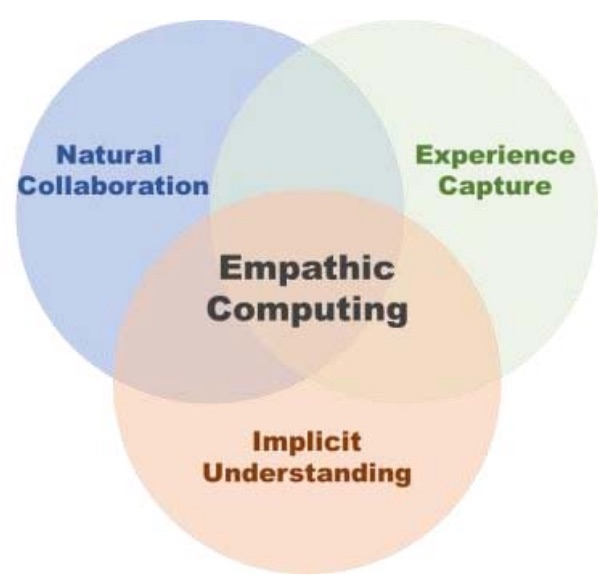

In this paper, we share real-time collaborative gaze behaviours, hand pointing, gesturing, and heart rate visualisations between remote collaborators using a live 360° panoramic-video-based Mixed Reality (MR) system. We first ran a pilot study to explore visual designs that combine communication cues with biofeedback (heart rate), aiming to understand user perceptions of empathic collaboration. We then conducted a formal study to investigate the effect of modality (Gaze+Hand, Hand-only) and interface (Near-Gaze, Embodied). The results show that the Gaze+Hand modality in a Near-Gaze interface is significantly better at reducing task load, improving co-presence, enhancing understanding and tightening collaborative behaviours compared to the conventional Embodied hand-only experience. Ranked as the most preferred condition, Gaze+Hand in Near-Gaze is perceived to reduce the need to divide attention to the collaborator’s physical location, although it feels slightly less natural than the embodied visualisations. In addition, the Gaze+Hand conditions also led to more joint attention and less hand pointing to align mutual understanding. Lastly, we provide design guidelines summarising what we learned from the studies about representing modality, interface, and biofeedback.

Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration

Allison Jing; Kunal Gupta; Jeremy McDade; Gun A. Lee; Mark Billinghurst

Allison Jing, Kunal Gupta, Jeremy McDade, Gun Lee, and Mark Billinghurst. 2022. Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration. In ACM SIGGRAPH 2022 Posters (SIGGRAPH '22). Association for Computing Machinery, New York, NY, USA, Article 29, 1–2. https://doi.org/10.1145/3532719.3543213

@inproceedings{10.1145/3532719.3543213,

author = {Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun and Billinghurst, Mark},

title = {Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration},

year = {2022},

isbn = {9781450393614},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3532719.3543213},

doi = {10.1145/3532719.3543213},

abstract = {In this poster, we present a live 360° panoramic-video based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues including gaze, hand pointing, gesturing, and heart rate visualisations in real-time. The preliminary results indicate that the interface with the partner’s communication cues visualised close to the gaze point allows users to focus without dividing attention to the collaborator’s physical body movements yet still effectively communicate. Shared gaze visualisations coupled with deictic languages are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing are used as secondary. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities which covers different task properties and spatial setups.},

booktitle = {ACM SIGGRAPH 2022 Posters},

articleno = {29},

numpages = {2},

location = {Vancouver, BC, Canada},

series = {SIGGRAPH '22}

}

In this poster, we present a live 360° panoramic-video-based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues, including gaze, hand pointing, gesturing, and heart rate visualisations, in real time. The preliminary results indicate that an interface visualising the partner’s communication cues close to the gaze point allows users to focus without dividing their attention to the collaborator’s physical body movements, while still communicating effectively. Shared gaze visualisations coupled with deictic language are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing serve as secondary cues. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities that cover different task properties and spatial setups.

- 2021

A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning

Ihshan Gumilar, Ekansh Sareen, Reed Bell, Augustus Stone, Ashkan Hayati, Jingwen Mao, Amit Barde, Anubha Gupta, Arindam Dey, Gun Lee, Mark Billinghurst

Gumilar, I., Sareen, E., Bell, R., Stone, A., Hayati, A., Mao, J., ... & Billinghurst, M. (2021). A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning. Computers & Graphics, 94, 62-75.

@article{gumilar2021comparative,

title={A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning},

author={Gumilar, Ihshan and Sareen, Ekansh and Bell, Reed and Stone, Augustus and Hayati, Ashkan and Mao, Jingwen and Barde, Amit and Gupta, Anubha and Dey, Arindam and Lee, Gun and others},

journal={Computers \& Graphics},

volume={94},

pages={62--75},

year={2021},

publisher={Elsevier}

}

Researchers have employed hyperscanning, a technique used to simultaneously record neural activity from multiple participants, in real-world collaborations. However, to the best of our knowledge, no study has used hyperscanning in Virtual Reality (VR). This study had two aims: first, to replicate results of inter-brain synchrony reported in the existing literature for a real-world task, and second, to explore whether inter-brain synchrony could be elicited in a Virtual Environment (VE). This paper reports on three pilot studies in two different settings (real world and VR). Paired participants performed two sessions of a finger-pointing exercise separated by a finger-tracking exercise, during which their neural activity was simultaneously recorded with electroencephalography (EEG) hardware. Using Phase Locking Value (PLV) analysis, we found that VR induced inter-brain synchrony similar to that seen in the real world. Further, the finger-pointing exercise activated the same neural area in both the real world and VR. Based on these results, we infer that VR can be used to enhance inter-brain synchrony in collaborative tasks carried out in a VE. In particular, we have been able to demonstrate that changing visual perspective in VR is capable of eliciting inter-brain synchrony. This demonstrates that VR could be an exciting platform for further exploring inter-brain synchrony and gaining a deeper understanding of the neuroscience of human communication.

Grand Challenges for Augmented Reality

Mark Billinghurst

Billinghurst, M. (2021). Grand Challenges for Augmented Reality. Frontiers in Virtual Reality, 2, 12.

@article{billinghurst2021grand,

title={Grand Challenges for Augmented Reality},

author={Billinghurst, Mark},

journal={Frontiers in Virtual Reality},

volume={2},

pages={12},

year={2021},

publisher={Frontiers}

}

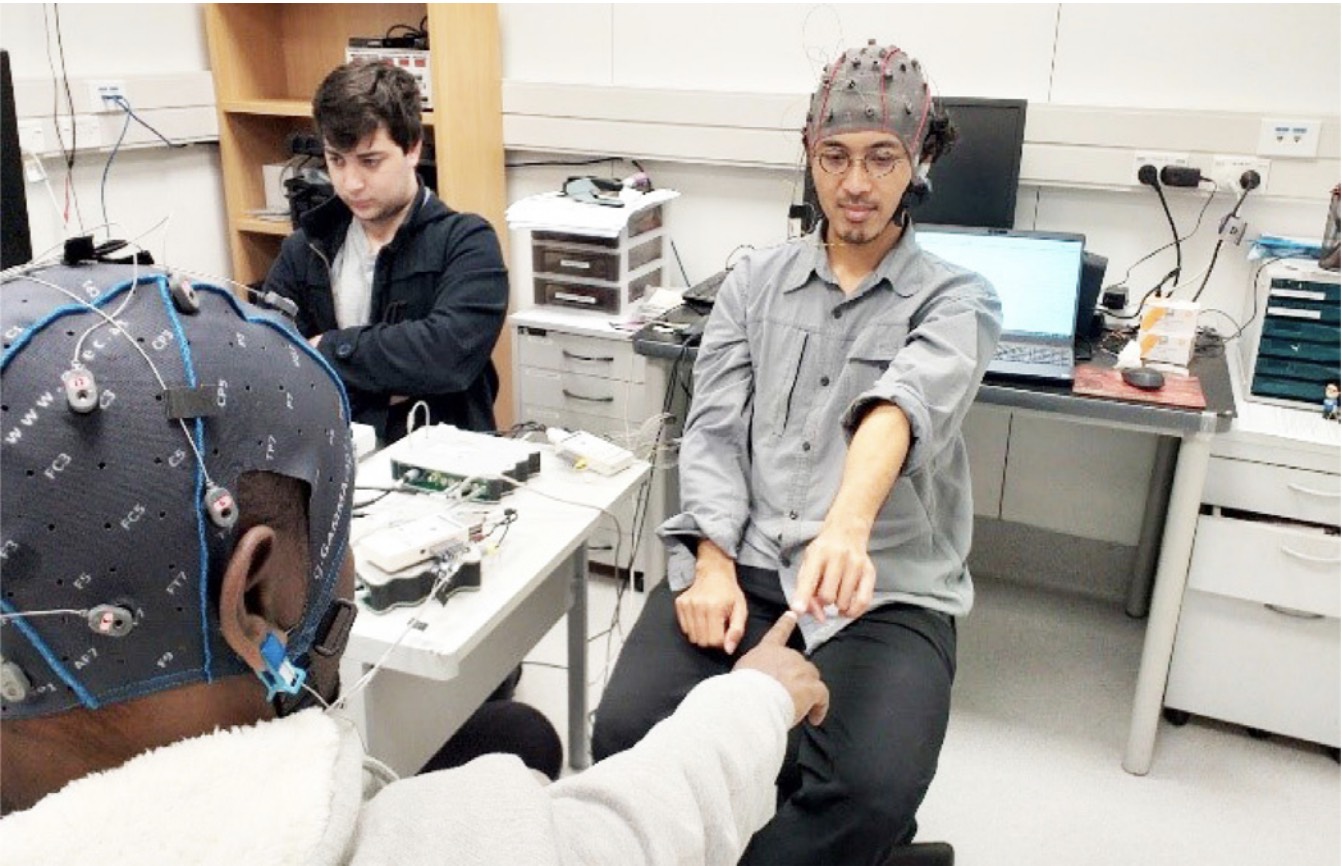

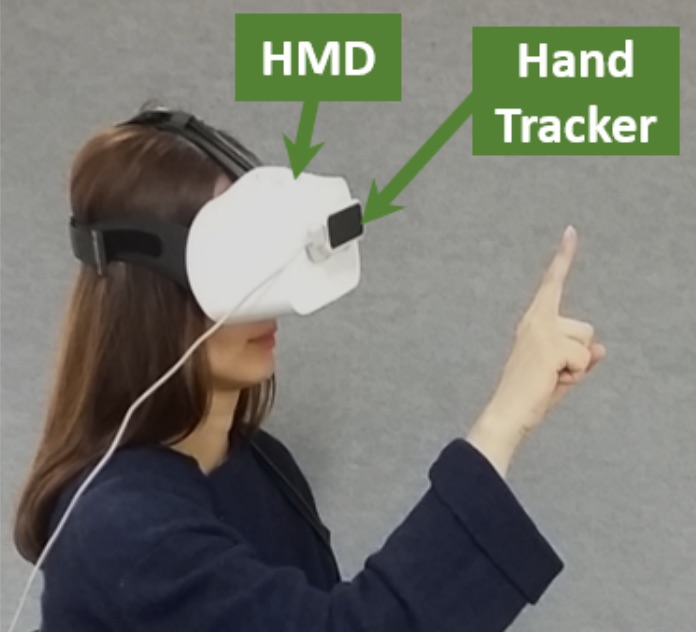

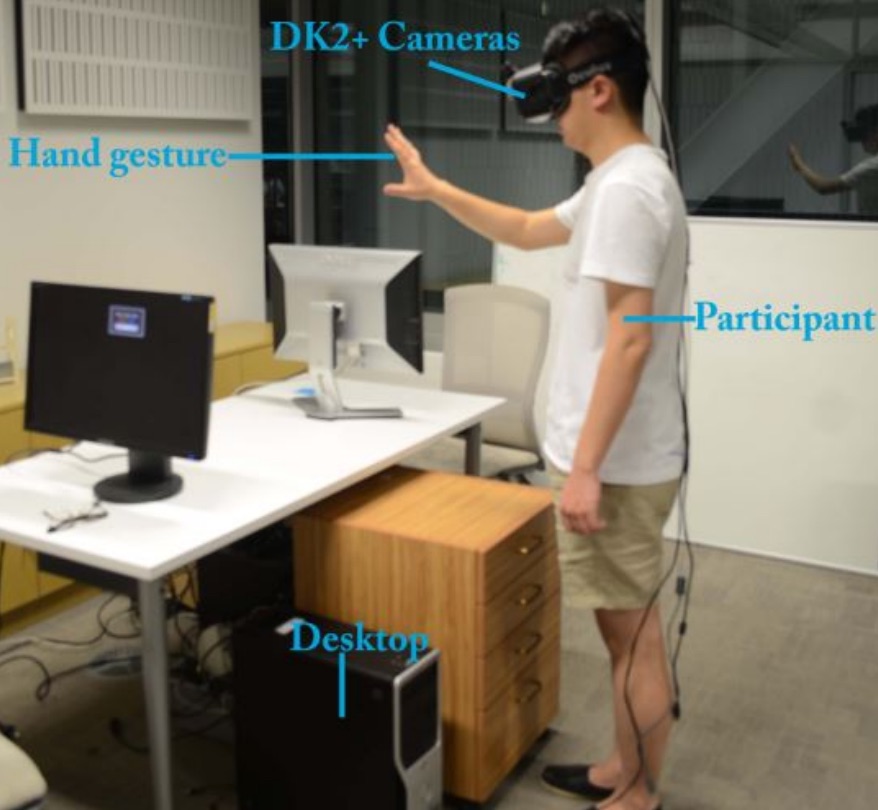

Bringing full-featured mobile phone interaction into virtual reality

H Bai, L Zhang, J Yang, M Billinghurst

Bai, H., Zhang, L., Yang, J., & Billinghurst, M. (2021). Bringing full-featured mobile phone interaction into virtual reality. Computers & Graphics, 97, 42-53.

@article{bai2021bringing,

title={Bringing full-featured mobile phone interaction into virtual reality},

author={Bai, Huidong and Zhang, Li and Yang, Jing and Billinghurst, Mark},

journal={Computers \& Graphics},

volume={97},

pages={42--53},

year={2021},

publisher={Elsevier}

}

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer-generated virtual environment. However, a VR HMD also blocks the user’s view of their physical surroundings, and so prevents them from using their mobile phone in a natural manner. In this paper, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing their 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone. We conducted a formal user study comparing the AV interface with physical touch interaction in terms of user experience across five mobile applications. Participants reported that our system brought the real mobile phone into the virtual world. However, the experimental results indicated that using a phone with our AV interface in VR was more difficult than regular smartphone touch interaction, with increased workload and lower system usability, especially for a typing task. We ran a follow-up study to compare different hand visualizations for text typing using the AV interface. Participants felt that a skin-colored hand visualization provided better usability and immersiveness than other hand rendering styles.

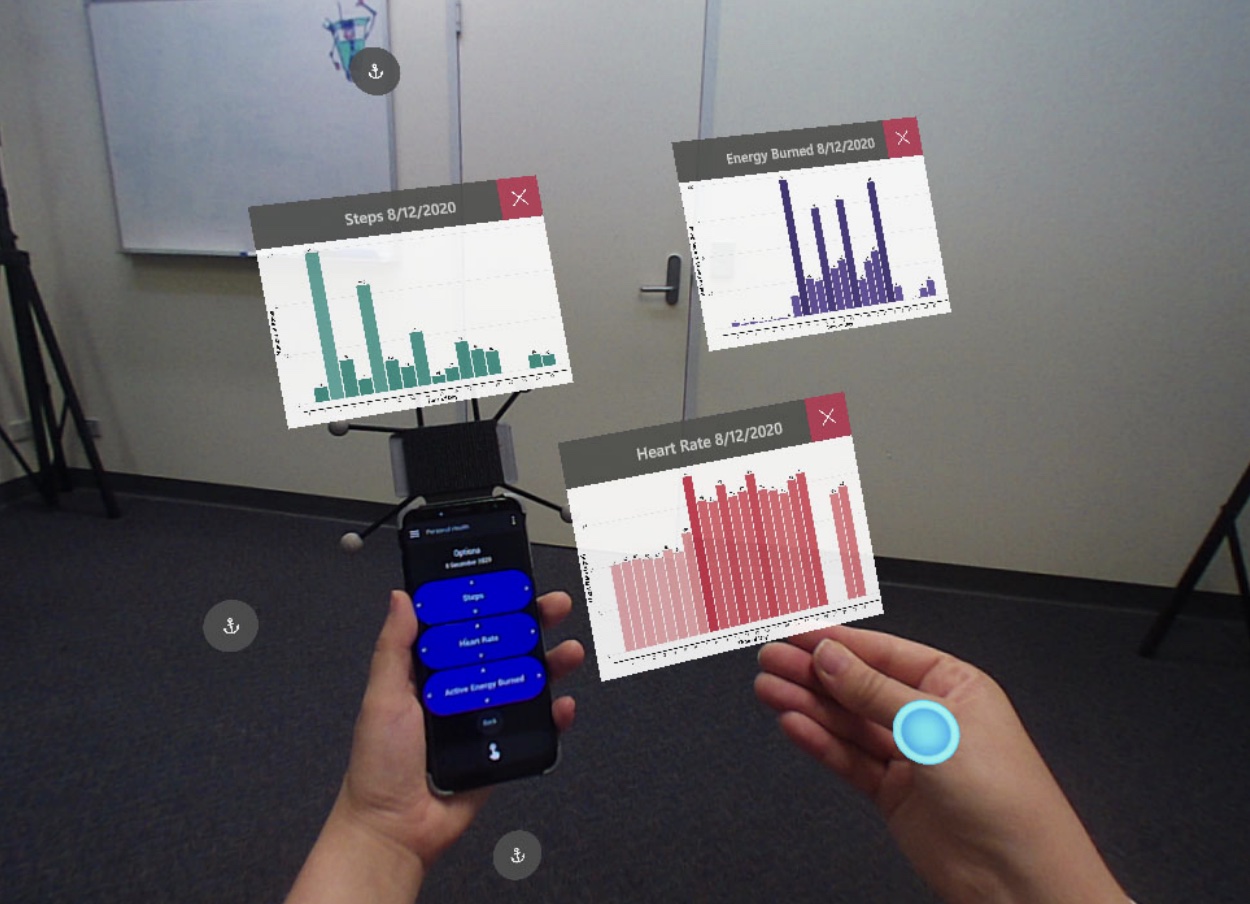

SecondSight: A Framework for Cross-Device Augmented Reality Interfaces

Carolin Reichherzer, Jack Fraser, Damien Constantine Rompapas, Mark Billinghurst

Reichherzer, C., Fraser, J., Rompapas, D. C., & Billinghurst, M. (2021, May). SecondSight: A Framework for Cross-Device Augmented Reality Interfaces. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-6).

@inproceedings{reichherzer2021secondsight,

title={SecondSight: A Framework for Cross-Device Augmented Reality Interfaces},

author={Reichherzer, Carolin and Fraser, Jack and Rompapas, Damien Constantine and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--6},

year={2021}

}

This paper describes a modular framework developed to facilitate design space exploration of cross-device Augmented Reality (AR) interfaces that combine an AR head-mounted display (HMD) with a smartphone. There is currently growing interest in how AR HMDs can be used with smartphones to improve the user’s AR experience. In this work, we describe a framework that enables rapid prototyping and evaluation of an interface. Our system supports different modes of interaction, content placement, and simulated AR HMD field of view, to assess which combination is best suited and so inform design recommendations for future researchers. We provide examples of how the framework could be used to create sample applications, the types of studies it could support, and example results from a simple pilot study.

Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

Allison Jing, Kieran May, Gun Lee, Mark Billinghurst

Jing, A., May, K., Lee, G., & Billinghurst, M. (2021). Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration. Frontiers in Virtual Reality, 2, 79.

@article{jing2021eye,

title={Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration},

author={Jing, Allison and May, Kieran and Lee, Gun and Billinghurst, Mark},

journal={Frontiers in Virtual Reality},

volume={2},

pages={79},

year={2021},

publisher={Frontiers}

}

Gaze is one of the predominant communication cues and can provide valuable implicit information, such as intention or focus, when performing collaborative tasks. However, little research has been done on how virtual gaze cues combining spatial and temporal characteristics impact real-life physical tasks during face-to-face collaboration. In this study, we explore the effect of showing joint gaze interaction in an Augmented Reality (AR) interface by evaluating three bi-directional collaborative (BDC) gaze visualisations with three levels of gaze behaviours. Using three independent tasks, we found that all BDC visualisations were rated significantly better at representing joint attention and user intention than a non-collaborative (NC) condition, and hence were considered more engaging. The Laser Eye condition, spatially embodied with gaze direction, was perceived as significantly more effective, as it encourages mutual gaze awareness with relatively low mental effort in a less constrained workspace. In addition, by offering additional virtual representations that compensate for verbal descriptions and hand pointing, BDC gaze visualisations can encourage more conscious use of gaze cues coupled with deictic references during co-located symmetric collaboration. We provide a summary of the lessons learned, limitations of the study, and directions for future research.

First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand

Mairi Gunn, Mark Billinghurst, Huidong Bai, Prasanth Sasikumar

Gunn, M., Billinghurst, M., Bai, H., & Sasikumar, P. (2021). First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand. Virtual Creativity, 11(1), 67-90.

@article{gunn2021first,

title={First Contact-Take 2: Using XR technology as a bridge between M{\=a}ori, P{\=a}keh{\=a} and people from other cultures in Aotearoa, New Zealand},

author={Gunn, Mairi and Billinghurst, Mark and Bai, Huidong and Sasikumar, Prasanth},

journal={Virtual Creativity},

volume={11},

number={1},

pages={67--90},

year={2021},

publisher={Intellect}

}

The art installation common/room explores human‐digital‐human encounter across cultural differences. It comprises a suite of extended reality (XR) experiences that use technology as a bridge to help support human connections with a view to overcoming intercultural discomfort (racism). The installations are exhibited as an informal dining room, where each table hosts a distinct experience designed to bring people together in a playful yet meaningful way. Each experience uses different technologies, including 360° 3D virtual reality (VR) in a headset (common/place), 180° 3D projection (Common Sense) and augmented reality (AR) (Come to the Table! and First Contact ‐ Take 2). This article focuses on the latter, First Contact ‐ Take 2, in which visitors are invited to sit at a dining table, wear an AR head-mounted display and encounter a recorded volumetric representation of an Indigenous Māori woman seated opposite them. She speaks directly to the visitor out of a culture that has refined collective endeavour and relational psychology over millennia. The contextual and methodological framework for this research is international commons scholarship and practice that sits within a set of relationships outlined by the Mātike Mai Report on constitutional transformation for Aotearoa, New Zealand. The goal is to practise and build new relationships between Māori and Tauiwi, including Pākehā.

ShowMeAround: Giving Virtual Tours Using Live 360 Video

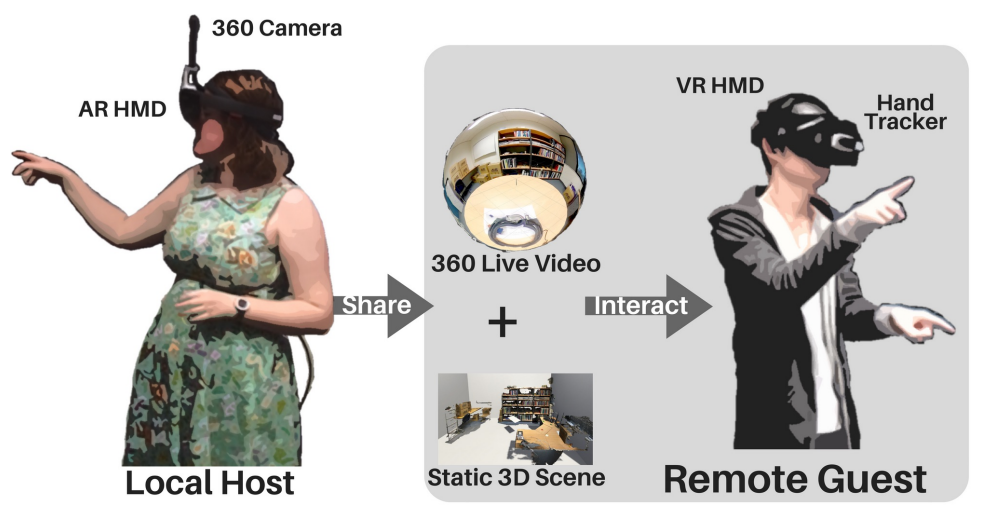

Alaeddin Nassani, Li Zhang, Huidong Bai, Mark Billinghurst

Nassani, A., Zhang, L., Bai, H., & Billinghurst, M. (2021, May). ShowMeAround: Giving Virtual Tours Using Live 360 Video. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{nassani2021showmearound,

title={ShowMeAround: Giving Virtual Tours Using Live 360 Video},

author={Nassani, Alaeddin and Zhang, Li and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

}

This demonstration presents ShowMeAround, a video conferencing system designed to allow people to give virtual tours over live 360 video. Using ShowMeAround, a host presenter walks through a real space and can live stream a 360 video view to a small group of remote viewers. The ShowMeAround interface has features such as remote pointing and viewpoint awareness to support natural collaboration between the viewers and the host presenter. The system also enables sharing of pre-recorded high-resolution 360 video and still images to further enhance the virtual tour experience.

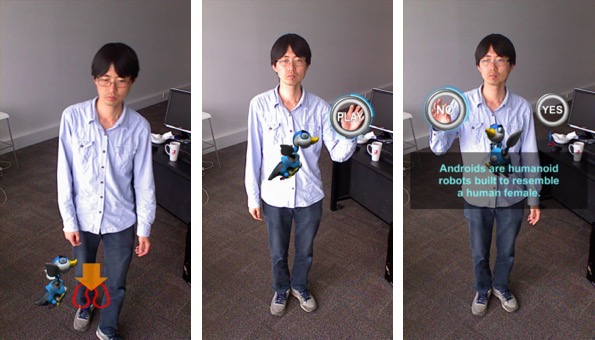

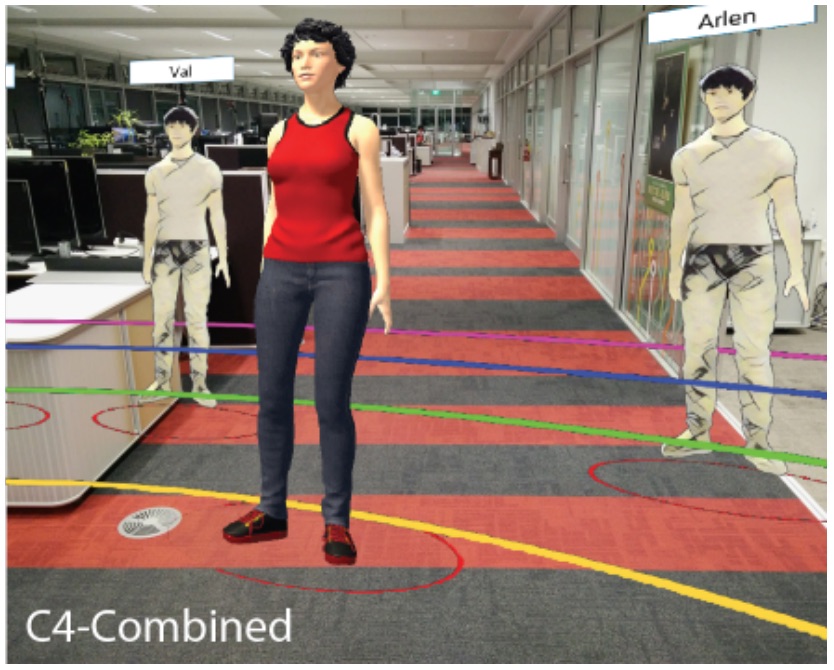

Manipulating Avatars for Enhanced Communication in Extended Reality

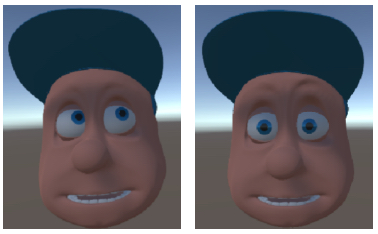

Jonathon Hart, Thammathip Piumsomboon, Gun A. Lee, Ross T. Smith, Mark Billinghurst

Hart, J. D., Piumsomboon, T., Lee, G. A., Smith, R. T., & Billinghurst, M. (2021, May). Manipulating Avatars for Enhanced Communication in Extended Reality. In 2021 IEEE International Conference on Intelligent Reality (ICIR) (pp. 9-16). IEEE.

@inproceedings{hart2021manipulating,

title={Manipulating Avatars for Enhanced Communication in Extended Reality},

author={Hart, Jonathon Derek and Piumsomboon, Thammathip and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={2021 IEEE International Conference on Intelligent Reality (ICIR)},

pages={9--16},

year={2021},

organization={IEEE}

}

Avatars are common virtual representations used in Extended Reality (XR) to support interaction and communication between remote collaborators. Recent advancements in wearable displays provide features such as eye and face tracking, which enable avatars to express non-verbal cues in XR. The research in this paper investigates the impact of avatar visualization on Social Presence and users’ preferences by simulating face tracking in an asymmetric XR remote collaboration between a desktop user and a Virtual Reality (VR) user. Our study was conducted with pairs of participants, one on a laptop computer supporting face tracking and the other immersed in VR, experiencing different visualization conditions. They worked together to complete an island survival task. We found that users preferred 3D avatars with facial expressions placed in the scene over 2D screen-attached avatars without facial expressions. Participants felt that the presence of the collaborator’s avatar improved overall communication, yet Social Presence was not significantly different between conditions, as they mainly relied on audio for communication.

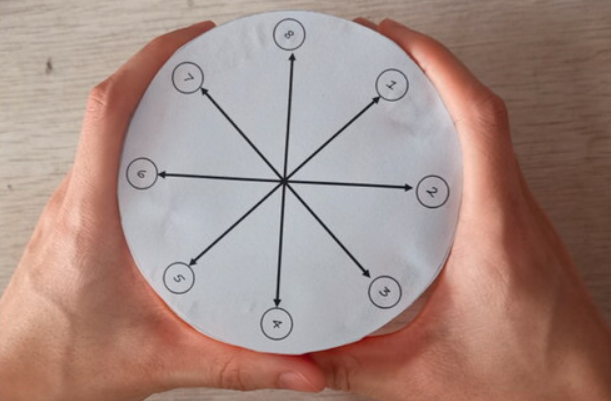

Adapting Fitts’ Law and N-Back to Assess Hand Proprioception

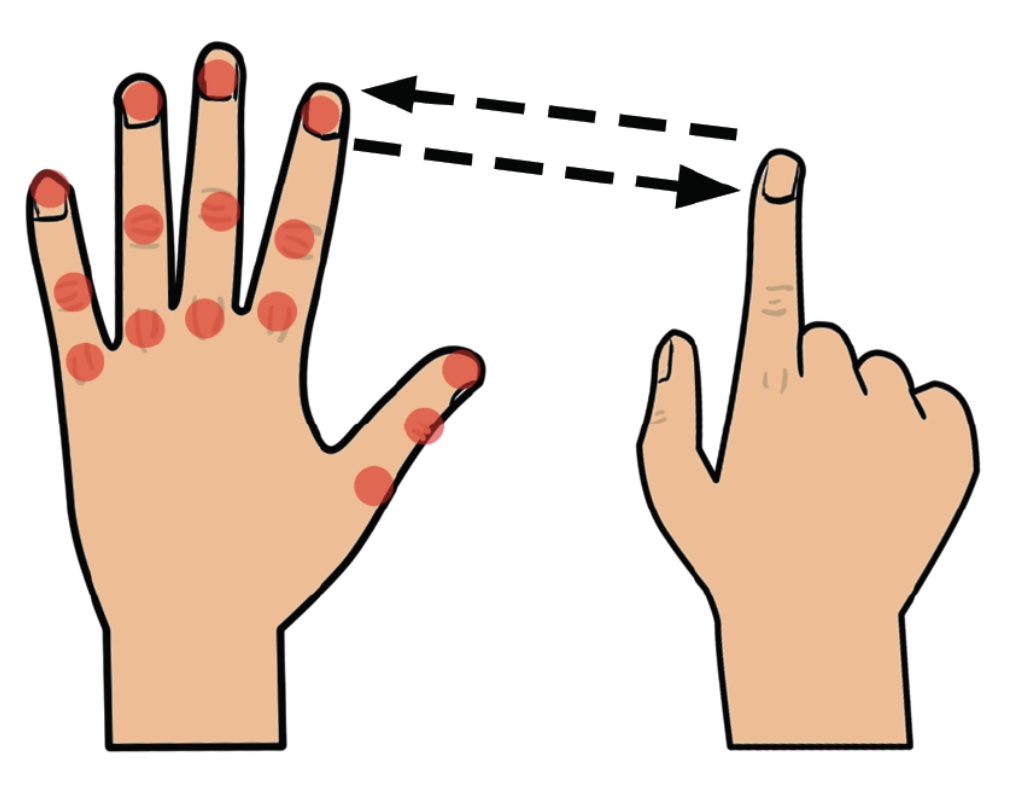

Tamil Gunasekaran, Ryo Hajika, Chloe Dolma Si Ying Haigh, Yun Suen Pai, Danielle Lottridge, Mark Billinghurst

Gunasekaran, T. S., Hajika, R., Haigh, C. D. S. Y., Pai, Y. S., Lottridge, D., & Billinghurst, M. (2021, May). Adapting Fitts’ Law and N-Back to Assess Hand Proprioception. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gunasekaran2021adapting,

title={Adapting Fitts’ Law and N-Back to Assess Hand Proprioception},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Haigh, Chloe Dolma Si Ying and Pai, Yun Suen and Lottridge, Danielle and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Proprioception is the body’s ability to sense the position and movement of each limb, as well as the amount of effort exerted onto or by them. Methods to assess proprioception have been introduced before, yet there is little to no work on assessing the degree of proprioception of individual body parts for use cases such as gesture recognition in wearable computing. We propose the use of Fitts’ law coupled with the N-Back task to evaluate proprioception of the hand. We evaluate 15 distinct points on the back of the hand and assess them using an extended 3D Fitts’ law. Our results show that the index of difficulty of the tapping points increases gradually from thumb to pinky, with a linear regression factor of 0.1144. Additionally, participants perform the tap before performing the N-Back task. From these results, we discuss the fundamental limitations and suggest how Fitts’ law can be further extended to assess proprioception.

XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing

Prasanth Sasikumar, Max Collins, Huidong Bai, Mark Billinghurst

Sasikumar, P., Collins, M., Bai, H., & Billinghurst, M. (2021, May). XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{sasikumar2021xrtb,

title={XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing},

author={Sasikumar, Prasanth and Collins, Max and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

}

We present XRTeleBridge (XRTB), an application that integrates a Mixed Reality (MR) interface into existing teleconferencing solutions like Zoom. Unlike a conventional webcam, XRTB provides a window into the virtual world to demonstrate and visualize content. Participants can join via webcam or via a head-mounted display (HMD) in a Virtual Reality (VR) environment. It enables users to embody 3D avatars with natural gestures and eye gaze. A camera in the virtual environment operates as a video feed to the teleconferencing software. An interface resembling a tablet mirrors the teleconferencing window inside the virtual environment, thus enabling the participant in the VR environment to see the webcam participants in real time. This allows the presenter to view and interact with other participants seamlessly. To demonstrate the system’s functionalities, we created a virtual chemistry lab environment and presented an example lesson using the virtual space, objects, and effects.

Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR

Ihshan Gumilar, Amit Barde, Ashkan Hayati, Mark Billinghurst, Gun Lee, Abdul Momin, Charles Averill, Arindam Dey

Gumilar, I., Barde, A., Hayati, A. F., Billinghurst, M., Lee, G., Momin, A., ... & Dey, A. (2021, May). Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gumilar2021connecting,

title={Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR},

author={Gumilar, Ihshan and Barde, Amit and Hayati, Ashkan F and Billinghurst, Mark and Lee, Gun and Momin, Abdul and Averill, Charles and Dey, Arindam},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Hyperscanning is an emerging method that allows researchers to record neural activity from two or more people simultaneously. While this method has been used extensively over the last five years to study inter-brain synchrony in the real world, little work has explored the use of hyperscanning in virtual environments. Preliminary research in the area demonstrates that inter-brain synchrony in virtual environments can be achieved in a manner similar to that seen in the real world. The study described in this paper extends research in the area by examining how non-verbal communication cues in social interactions in virtual environments can affect inter-brain synchrony. In particular, we concentrate on the role eye gaze plays in inter-brain synchrony. The aim of this research is to explore how eye gaze affects inter-brain synchrony between users in a collaborative virtual environment.

Tool-based asymmetric interaction for selection in VR

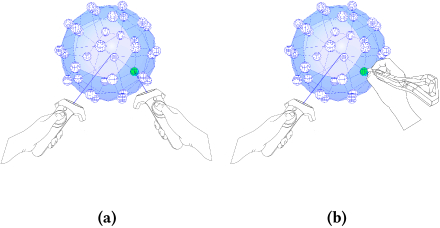

Qianyuan Zou; Huidong Bai; Gun Lee; Allan Fowler; Mark Billinghurst

Zou, Q., Bai, H., Zhang, Y., Lee, G., Fowler, A., & Billinghurst, M. (2021). Tool-based asymmetric interaction for selection in VR. In SIGGRAPH Asia 2021 Technical Communications (pp. 1-4).

@incollection{zou2021tool,

title={Tool-based asymmetric interaction for selection in {VR}},

author={Zou, Qianyuan and Bai, Huidong and Zhang, Yuewei and Lee, Gun and Fowler, Allan and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2021 Technical Communications},

pages={1--4},

year={2021}

}

View: https://dl.acm.org/doi/abs/10.1145/3478512.3488615

Video: https://www.youtube.com/watch?v=FVk5lWtntGk

Most mainstream Virtual Reality (VR) devices on the market today use symmetric interaction design for input, yet common practice among artists suggests that asymmetric interaction, using a different input tool in each hand, could be a better alternative for 3D modeling tasks in VR. In this paper, we explore the performance and usability of a tool-based asymmetric interaction method for a 3D object selection task in VR and compare it with a symmetric interface. The symmetric VR interface uses two identical handheld controllers to select points on a sphere, while the asymmetric interface uses a handheld controller and a stylus. We conducted a user study to compare these two interfaces and found that the asymmetric system was faster, required less workload, and was rated with better usability. We also discuss the opportunities for tool-based asymmetric input to optimize VR art workflows and future research directions.

eyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues

Allison Jing, Brandon Matthews, Kieran May, Thomas Clarke, Gun Lee, Mark Billinghurst

Allison Jing, Brandon Matthews, Kieran May, Thomas Clarke, Gun Lee, and Mark Billinghurst. 2021. EyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues. In SIGGRAPH Asia 2021 Posters (SA '21 Posters). Association for Computing Machinery, New York, NY, USA, Article 16, 1–2. https://doi.org/10.1145/3476124.3488618

@inproceedings{10.1145/3476124.3488618,

author = {Jing, Allison and Matthews, Brandon and May, Kieran and Clarke, Thomas and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Talk: Using Speech to Visualise Shared MR Gaze Cues},

year = {2021},

isbn = {9781450386876},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3476124.3488618},

doi = {10.1145/3476124.3488618},

abstract = {In this poster we present eyemR-Talk, a Mixed Reality (MR) collaboration system that uses speech input to trigger shared gaze visualisations between remote users. The system uses 360° panoramic video to support collaboration between a local user in the real world in an Augmented Reality (AR) view and a remote collaborator in Virtual Reality (VR). Using specific speech phrases to turn on virtual gaze visualisations, the system enables contextual speech-gaze interaction between collaborators. The overall benefit is to achieve more natural gaze awareness, leading to better communication and more effective collaboration.},

booktitle = {SIGGRAPH Asia 2021 Posters},

articleno = {16},

numpages = {2},

keywords = {Mixed Reality remote collaboration, gaze visualization, speech input},

location = {Tokyo, Japan},

series = {SA '21 Posters}

}

In this poster, we present eyemR-Talk, a Mixed Reality (MR) collaboration system that uses speech input to trigger shared gaze visualisations between remote users. The system uses 360° panoramic video to support collaboration between a local user in the real world in an Augmented Reality (AR) view and a remote collaborator in Virtual Reality (VR). Using specific speech phrases to turn on virtual gaze visualisations, the system enables contextual speech-gaze interaction between collaborators. The overall benefit is to achieve more natural gaze awareness, leading to better communication and more effective collaboration.

eyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 283, 1–7. https://doi.org/10.1145/3411763.3451844

@inproceedings{10.1145/3411763.3451844,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451844},

doi = {10.1145/3411763.3451844},

abstract = {Gaze is one of the most important communication cues in face-to-face collaboration. However, in remote collaboration, sharing dynamic gaze information is more difficult. In this research, we investigate how sharing gaze behavioural cues can improve remote collaboration in a Mixed Reality (MR) environment. To do this, we developed eyemR-Vis, a 360 panoramic Mixed Reality remote collaboration system that shows gaze behavioural cues as bi-directional spatial virtual visualisations shared between a local host and a remote collaborator. Preliminary results from an exploratory study indicate that using virtual cues to visualise gaze behaviour has the potential to increase co-presence, improve gaze awareness, encourage collaboration, and is inclined to be less physically demanding or mentally distracting.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {283},

numpages = {7},

keywords = {Human-Computer Interaction, Gaze Visualisation, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}

Gaze is one of the most important communication cues in face-to-face collaboration. However, in remote collaboration, sharing dynamic gaze information is more difficult. In this research, we investigate how sharing gaze behavioural cues can improve remote collaboration in a Mixed Reality (MR) environment. To do this, we developed eyemR-Vis, a 360° panoramic Mixed Reality remote collaboration system that shows gaze behavioural cues as bi-directional spatial virtual visualisations shared between a local host and a remote collaborator. Preliminary results from an exploratory study indicate that using virtual cues to visualise gaze behaviour has the potential to increase co-presence, improve gaze awareness and encourage collaboration, and tends to be less physically demanding or mentally distracting.

eyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 188, 1–4. https://doi.org/10.1145/3411763.3451545

@inproceedings{10.1145/3411763.3451545,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451545},

doi = {10.1145/3411763.3451545},

abstract = {This demonstration shows eyemR-Vis, a 360 panoramic Mixed Reality collaboration system that translates gaze behavioural cues to bi-directional visualisations between a local host (AR) and a remote collaborator (VR). The system is designed to share dynamic gaze behavioural cues as bi-directional spatial virtual visualisations between a local host and a remote collaborator. This enables richer communication of gaze through four visualisation techniques: browse, focus, mutual-gaze, and fixated circle-map. Additionally, our system supports simple bi-directional avatar interaction as well as panoramic video zoom. This makes interaction in the normally constrained remote task space more flexible and relatively natural. By showing visual communication cues that are physically inaccessible in the remote task space through reallocating and visualising the existing ones, our system aims to provide a more engaging and effective remote collaboration experience.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {188},

numpages = {4},

keywords = {Gaze Visualisation, Human-Computer Interaction, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}

This demonstration shows eyemR-Vis, a 360 panoramic Mixed Reality collaboration system that translates gaze behavioural cues to bi-directional visualisations between a local host (AR) and a remote collaborator (VR). The system is designed to share dynamic gaze behavioural cues as bi-directional spatial virtual visualisations between a local host and a remote collaborator. This enables richer communication of gaze through four visualisation techniques: browse, focus, mutual-gaze, and fixated circle-map. Additionally, our system supports simple bi-directional avatar interaction as well as panoramic video zoom. This makes interaction in the normally constrained remote task space more flexible and relatively natural. By showing visual communication cues that are physically inaccessible in the remote task space through reallocating and visualising the existing ones, our system aims to provide a more engaging and effective remote collaboration experience.

- 2020

Time to Get Personal: Individualised Virtual Reality for Mental Health

Nilufar Baghaei, Lehan Stemmet, Andrej Hlasnik, Konstantin Emanov, Sylvia Hach, John A. Naslund, Mark Billinghurst, Imran Khaliq, Hai-Ning Liang

Nilufar Baghaei, Lehan Stemmet, Andrej Hlasnik, Konstantin Emanov, Sylvia Hach, John A. Naslund, Mark Billinghurst, Imran Khaliq, and Hai-Ning Liang. 2020. Time to Get Personal: Individualised Virtual Reality for Mental Health. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–9. DOI:https://doi.org/10.1145/3334480.3382932

@inproceedings{baghaei2020time,

title={Time to Get Personal: Individualised Virtual Reality for Mental Health},

author={Baghaei, Nilufar and Stemmet, Lehan and Hlasnik, Andrej and Emanov, Konstantin and Hach, Sylvia and Naslund, John A and Billinghurst, Mark and Khaliq, Imran and Liang, Hai-Ning},

booktitle={Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--9},

year={2020}

}

Mental health conditions pose a major challenge to healthcare providers and society at large. Early intervention can have a significant positive impact on a person's prognosis and is particularly important for improving mental health outcomes and functioning in young people. Virtual Reality (VR) in mental health is an emerging and innovative field. Recent studies support the use of VR technology in the treatment of anxiety, phobia, eating disorders, addiction, and pain management. However, there is little research on using VR to support the treatment and prevention of depression, a field that is still very much emerging. There is also very little work on offering individualised VR experiences to users with mental health issues. This paper proposes iVR, a novel individualised VR system for improving users' self-compassion and, in the long run, their positive mental health. We describe the concept, design, architecture and implementation of iVR and outline future work. We believe this contribution will pave the way for large-scale efficacy testing, clinical use, and potentially cost-effective delivery of VR technology for mental health therapy in the future.

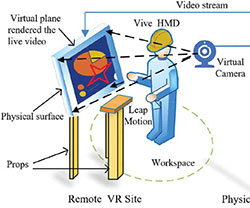

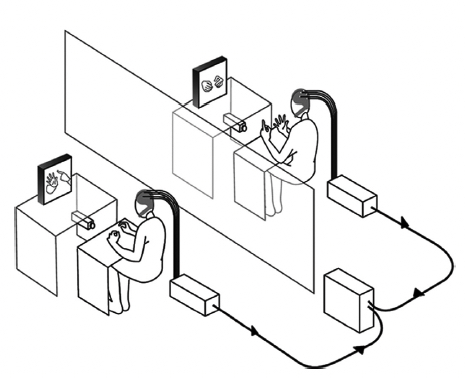

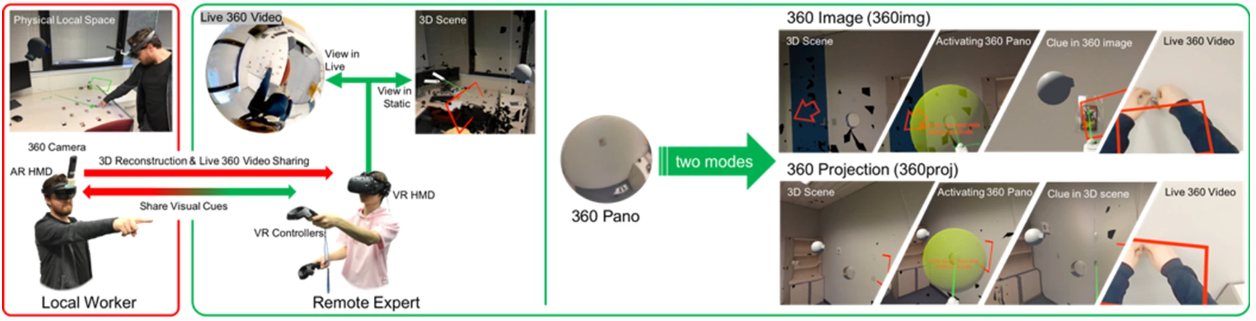

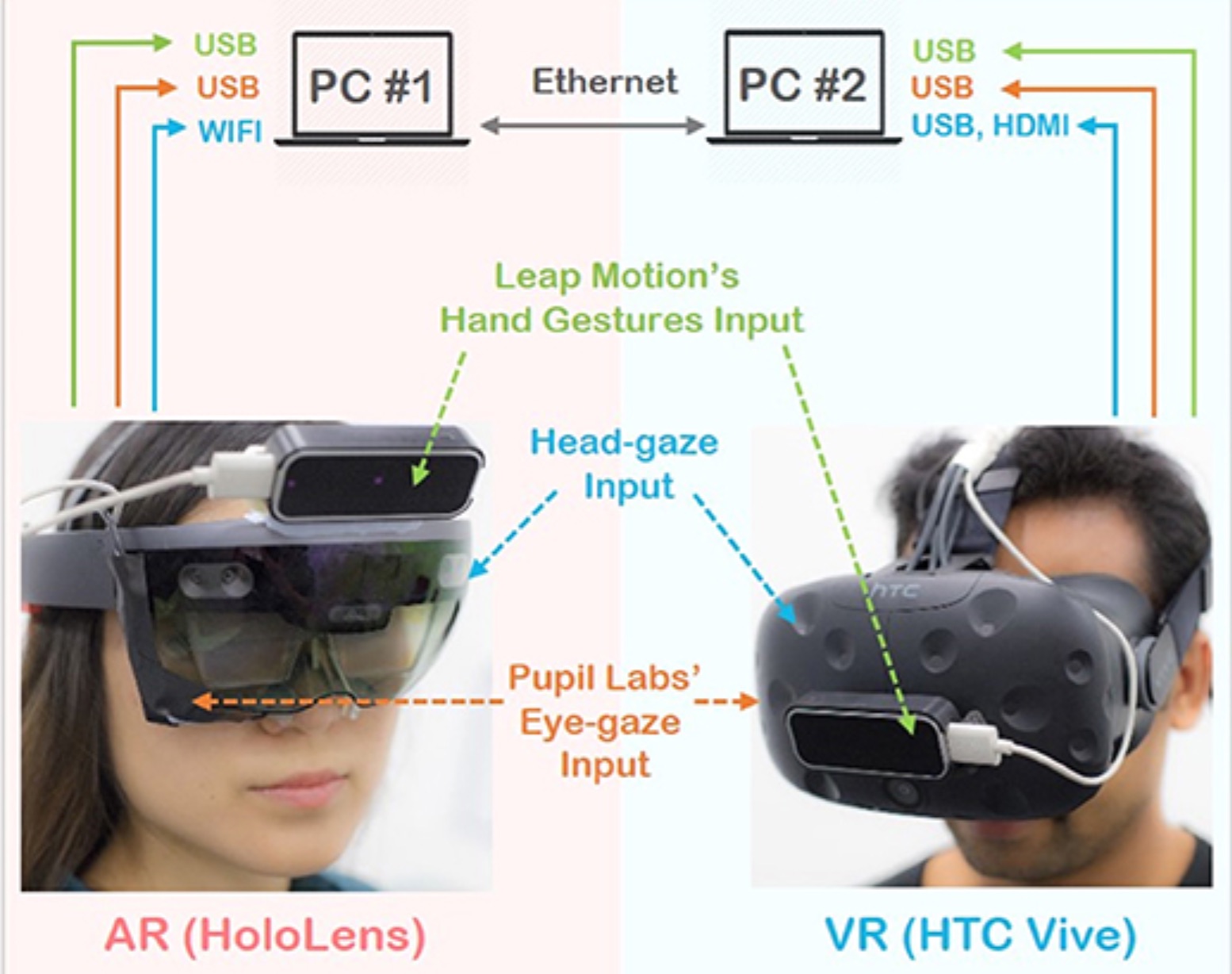

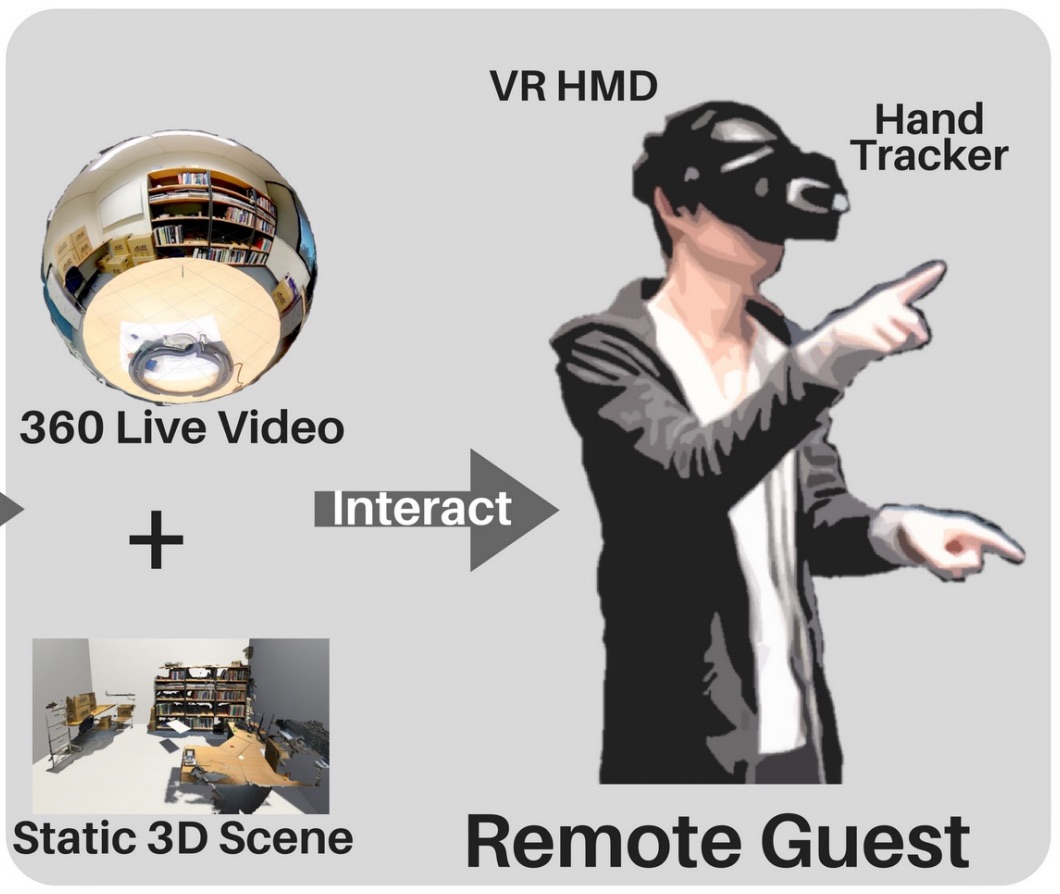

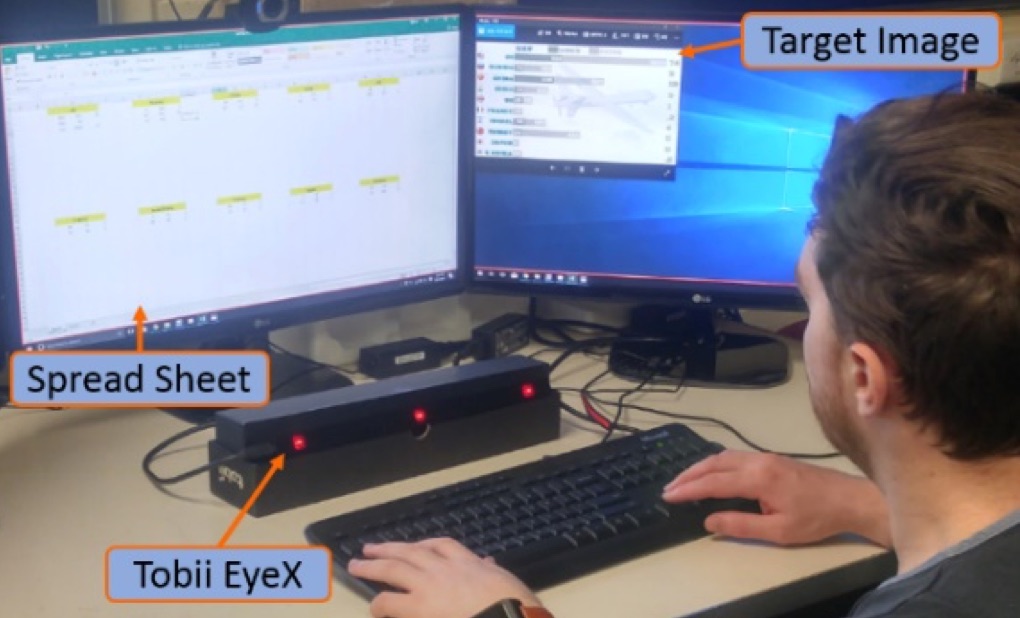

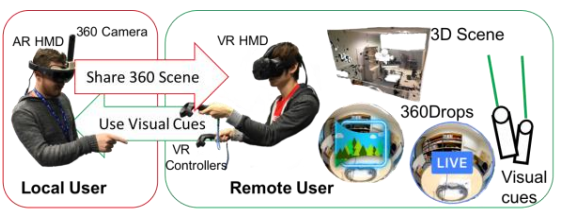

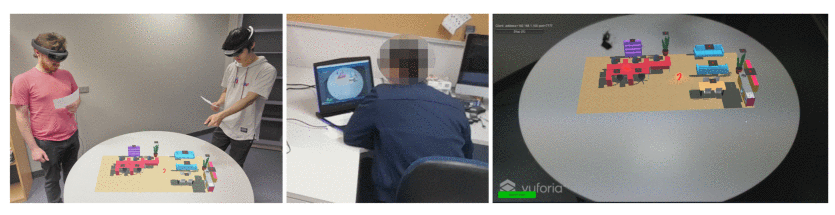

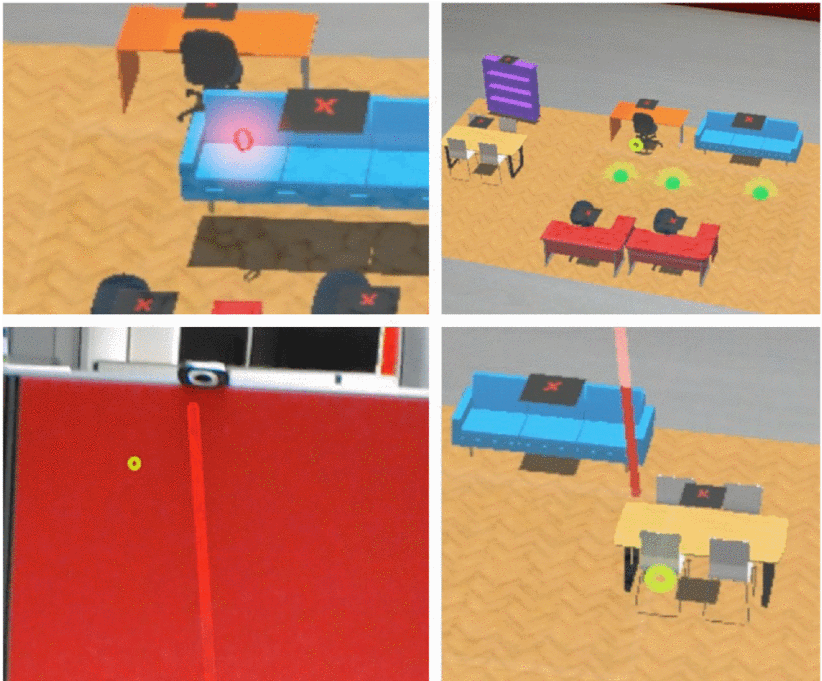

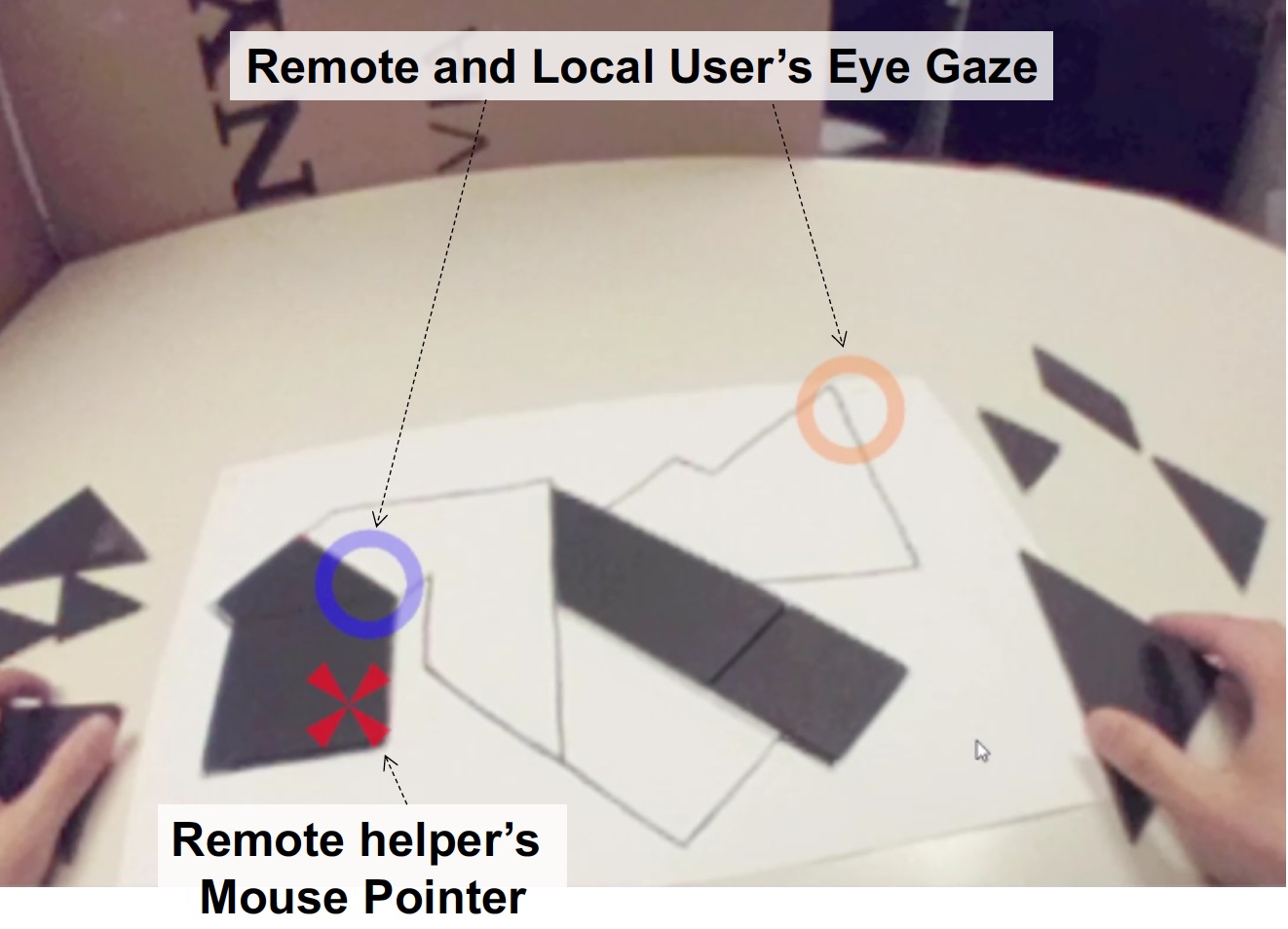

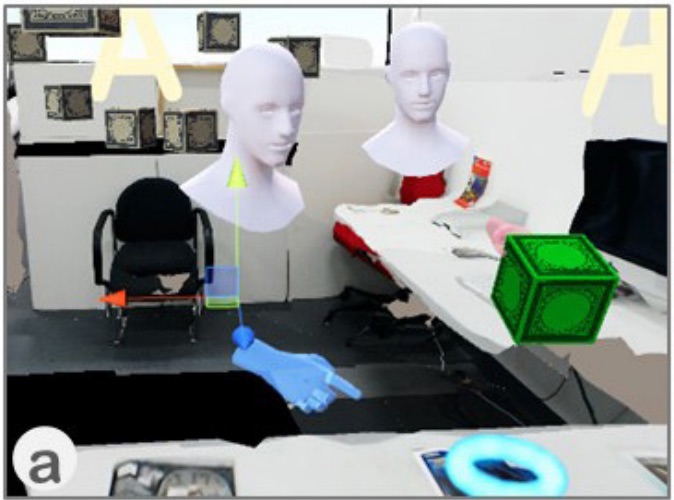

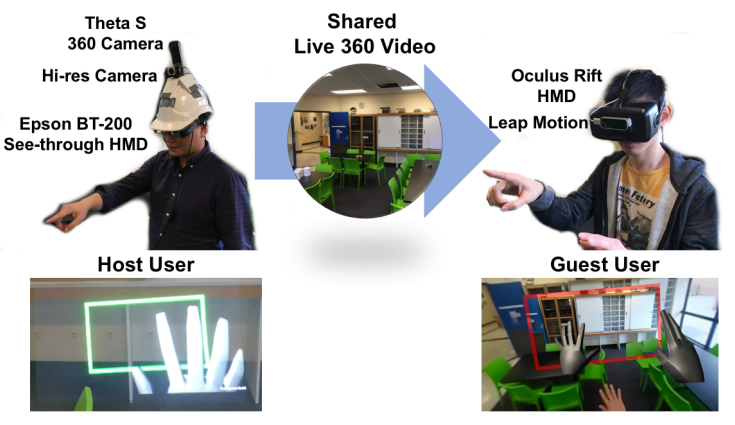

A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing

Huidong Bai, Prasanth Sasikumar, Jing Yang, Mark Billinghurst

Huidong Bai, Prasanth Sasikumar, Jing Yang, and Mark Billinghurst. 2020. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI:https://doi.org/10.1145/3313831.3376550

@inproceedings{bai2020user,

title={A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing},

author={Bai, Huidong and Sasikumar, Prasanth and Yang, Jing and Billinghurst, Mark},

booktitle={Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--13},

year={2020}

}

Supporting natural communication cues is critical for people to work together, both remotely and face-to-face. In this paper we present a Mixed Reality (MR) remote collaboration system that enables a local worker to share a live 3D panorama of his/her surroundings with a remote expert. The remote expert can also share task instructions back to the local worker using visual cues in addition to verbal communication. We conducted a user study to investigate how sharing augmented gaze and gesture cues from the remote expert to the local worker could affect the overall collaboration performance and user experience. We found that by combining gaze and gesture cues, our remote collaboration system could provide a significantly stronger sense of co-presence for both the local and remote users than using the gaze cue alone. The combined cues were also rated significantly higher than the gaze cue alone in terms of ease of conveying spatial actions.

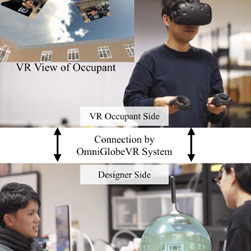

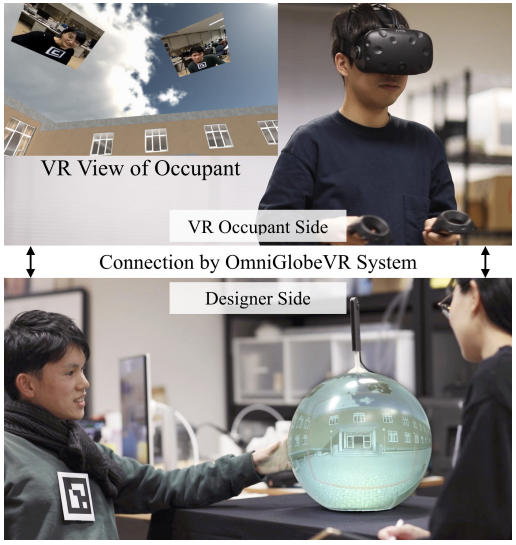

OmniGlobeVR: A Collaborative 360° Communication System for VR

Zhengqing Li, Liwei Chan, Theophilus Teo, Hideki Koike

Zhengqing Li, Liwei Chan, Theophilus Teo, and Hideki Koike. 2020. OmniGlobeVR: A Collaborative 360° Communication System for VR. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–8. DOI:https://doi.org/10.1145/3334480.3382869

@inproceedings{li2020omniglobevr,

title={OmniGlobeVR: A Collaborative 360{\textdegree} Communication System for VR},

author={Li, Zhengqing and Chan, Liwei and Teo, Theophilus and Koike, Hideki},

booktitle={Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--8},

year={2020}

}

In this paper, we propose OmniGlobeVR, a novel collaboration tool based on an asymmetric cooperation system that supports communication and cooperation between a VR user (occupant) and multiple non-VR users (designers) across virtual and physical platforms. OmniGlobeVR allows designer(s) to access the content of a VR space from any point of view using two view modes: 360° first-person mode and third-person mode. Furthermore, an interface for a shared gaze awareness cue is designed to enhance communication between the occupant and the designer(s). The system also has a face window feature that allows designer(s) to share their facial expressions and upper-body gestures with the occupant in order to exchange and express information nonverbally. Combined, these features allow collaborators on the VR and non-VR platforms to cooperate, while letting designer(s) easily access physical assets as they work synchronously with the occupant in the VR space.

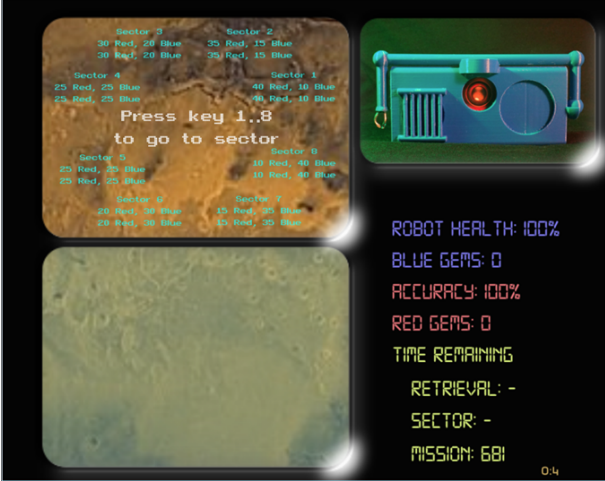

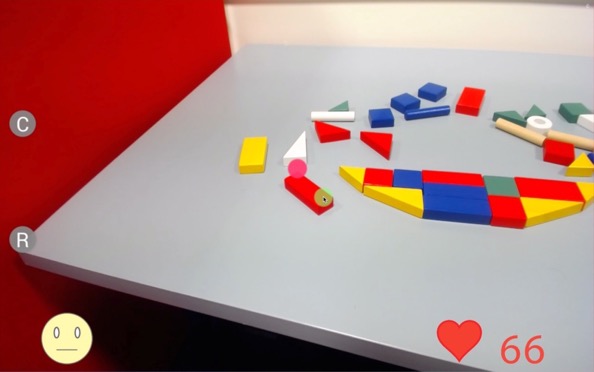

MazeRunVR: An Open Benchmark for VR Locomotion Performance, Preference and Sickness in the Wild

Kirill Ragozin, Kai Kunze, Karola Marky, Yun Suen Pai

Kirill Ragozin, Kai Kunze, Karola Marky, and Yun Suen Pai. 2020. MazeRunVR: An Open Benchmark for VR Locomotion Performance, Preference and Sickness in the Wild. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–8. DOI:https://doi.org/10.1145/3334480.3383035

@inproceedings{ragozin2020mazerunvr,

title={MazeRunVR: An Open Benchmark for VR Locomotion Performance, Preference and Sickness in the Wild},

author={Ragozin, Kirill and Kunze, Kai and Marky, Karola and Pai, Yun Suen},

booktitle={Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--8},

year={2020}

}

Locomotion in virtual reality (VR) is one of the biggest problems for the large-scale adoption of VR applications. Yet, to our knowledge, few studies have been conducted in the wild to understand performance metrics and general user preference for different mechanics. In this paper, we present the first steps towards an open framework to create a VR locomotion benchmark. As a viability study, we investigate how well users move in VR when using three different locomotion mechanics. It was played in over 124 sessions across 10 countries over a period of three weeks. The included prototype locomotion mechanics are arm swing, walk-in-place and trackpad movement. We found that, overall, users performed significantly faster using arm swing and trackpad than using walk-in-place. For subjective preference, arm swing was significantly preferred over the other two methods. Finally, for induced sickness, walk-in-place was the most sickness-inducing locomotion method overall.

A Constrained Path Redirection for Passive Haptics

Lili Wang, Zixiang Zhao, Xuefeng Yang, Huidong Bai, Amit Barde, Mark Billinghurst

L. Wang, Z. Zhao, X. Yang, H. Bai, A. Barde and M. Billinghurst, "A Constrained Path Redirection for Passive Haptics," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 2020, pp. 651-652, doi: 10.1109/VRW50115.2020.00176.

@inproceedings{wang2020constrained,

title={A Constrained Path Redirection for Passive Haptics},

author={Wang, Lili and Zhao, Zixiang and Yang, Xuefeng and Bai, Huidong and Barde, Amit and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={651--652},

year={2020},

organization={IEEE}

}

Navigation with passive haptic feedback can enhance users’ immersion in virtual environments. We propose a constrained path redirection method to provide users with corresponding haptic feedback at the right time and place. We quantified the practicality of VR exploration in a study, and the results show advantages over the steer-to-center method in terms of presence, and over Steinicke’s method in terms of matching errors and presence.

Neurophysiological Effects of Presence in Calm Virtual Environments

Arindam Dey, Jane Phoon, Shuvodeep Saha, Chelsea Dobbins, Mark Billinghurst

A. Dey, J. Phoon, S. Saha, C. Dobbins and M. Billinghurst, "Neurophysiological Effects of Presence in Calm Virtual Environments," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 2020, pp. 745-746, doi: 10.1109/VRW50115.2020.00223.

@inproceedings{dey2020neurophysiological,

title={Neurophysiological Effects of Presence in Calm Virtual Environments},

author={Dey, Arindam and Phoon, Jane and Saha, Shuvodeep and Dobbins, Chelsea and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={745--746},

year={2020},

organization={IEEE}

}

Presence, the feeling of being there, is an important factor that affects the overall experience of virtual reality. Presence is usually measured through post-experience subjective questionnaires. While questionnaires are widely used in human-subject research, they suffer from participant bias, dishonest answers, and fatigue. In this paper, we measured the effects of different levels of presence (high and low) in virtual environments using physiological and neurological signals as an alternative method. Results indicated a significant effect of presence on both physiological and neurological signals.

Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality

Kunal Gupta, Ryo Hajika, Yun Suen Pai, Andreas Duenser, Martin Lochner, Mark Billinghurst

K. Gupta, R. Hajika, Y. S. Pai, A. Duenser, M. Lochner and M. Billinghurst, "Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 2020, pp. 756-765, doi: 10.1109/VR46266.2020.1581313729558.

@inproceedings{gupta2020measuring,

title={Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality},

author={Gupta, Kunal and Hajika, Ryo and Pai, Yun Suen and Duenser, Andreas and Lochner, Martin and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={756--765},

year={2020},

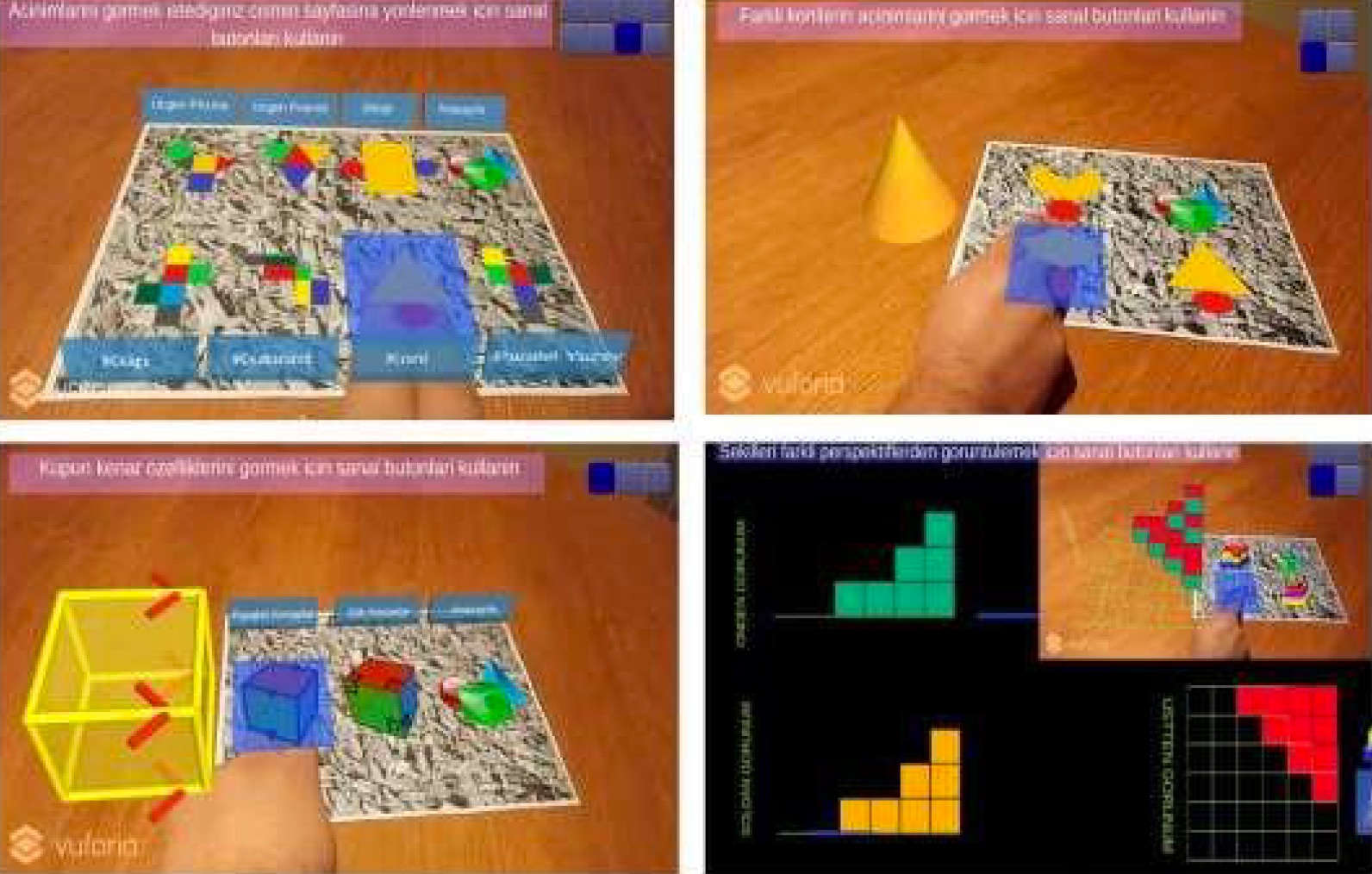

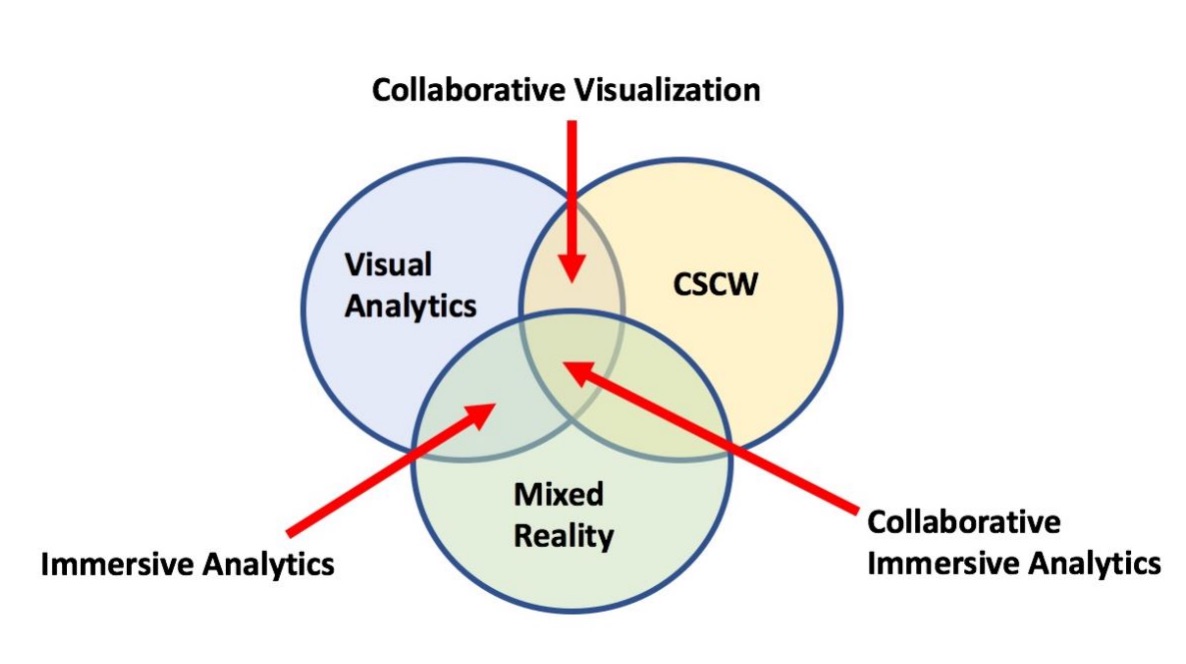

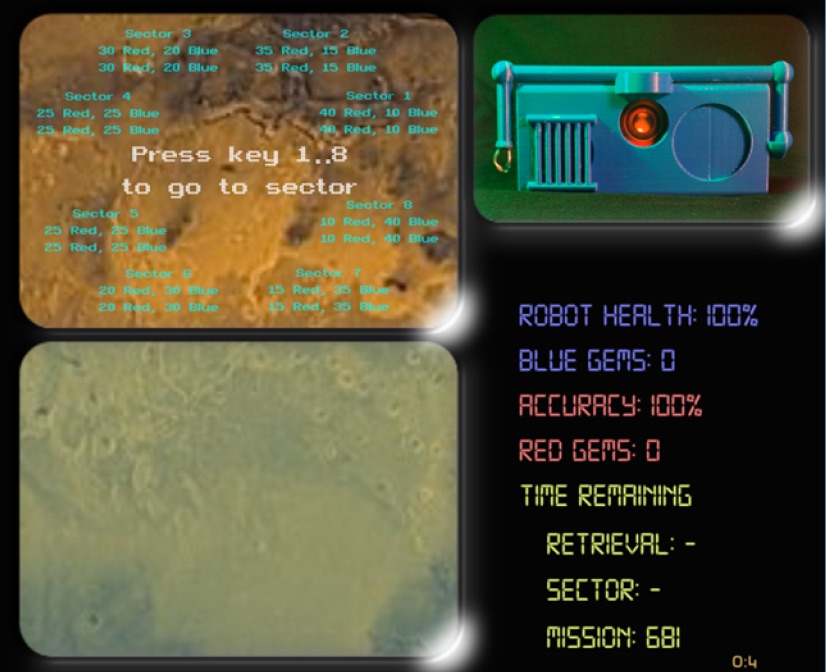

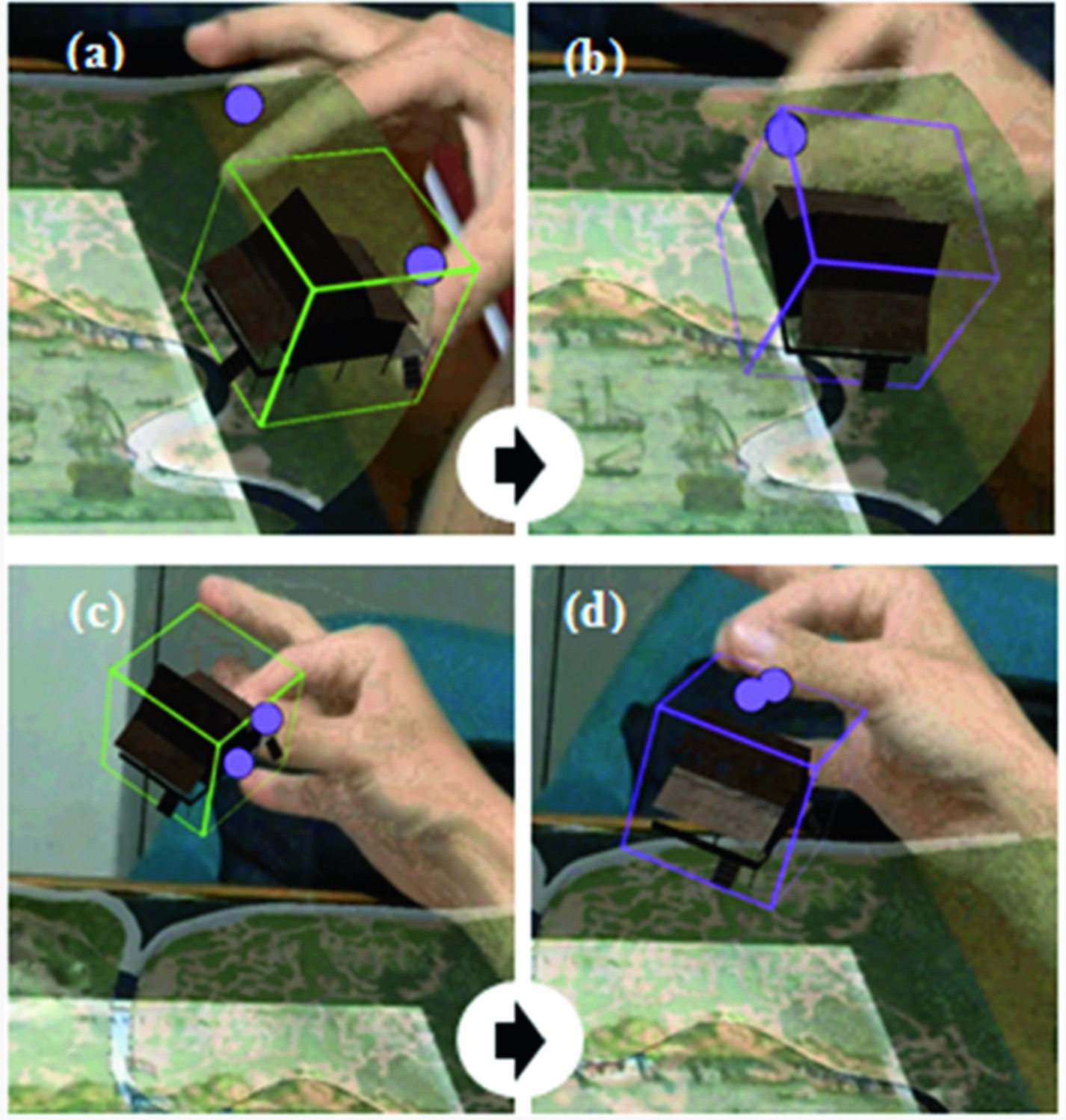

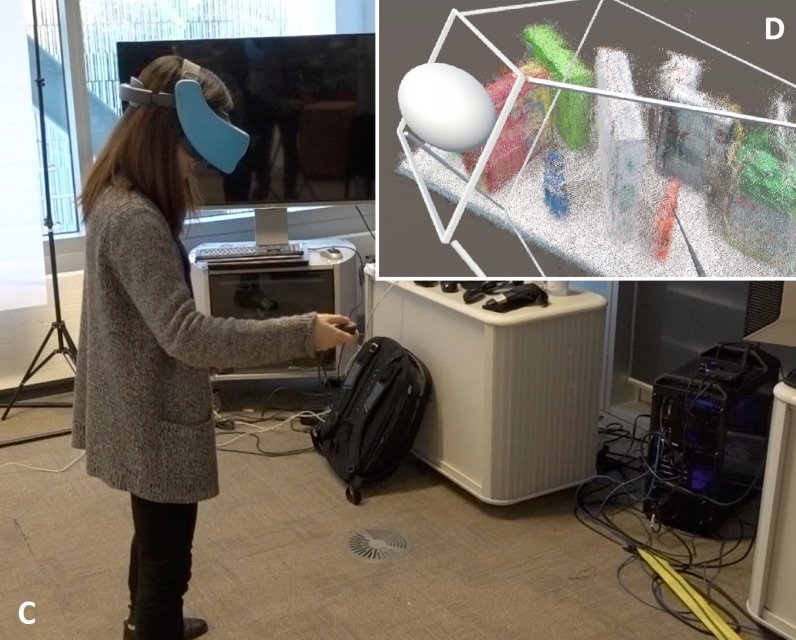

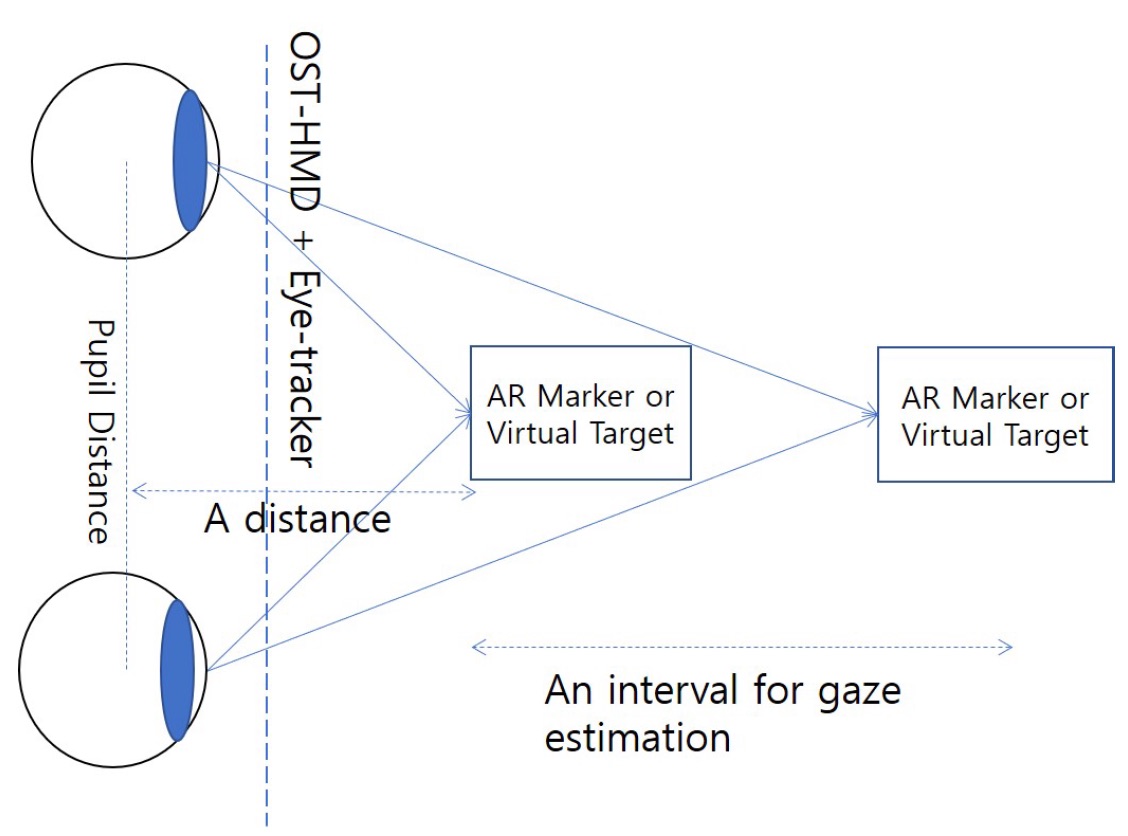

organization={IEEE}