Using Mixed Reality for Remote Collaboration

Theo Teo, from the Empathic Computing Laboratory, has just completed his PhD thesis on using Mixed Reality technology to improve remote collaboration. This blog post provides a quick summary of his PhD work.

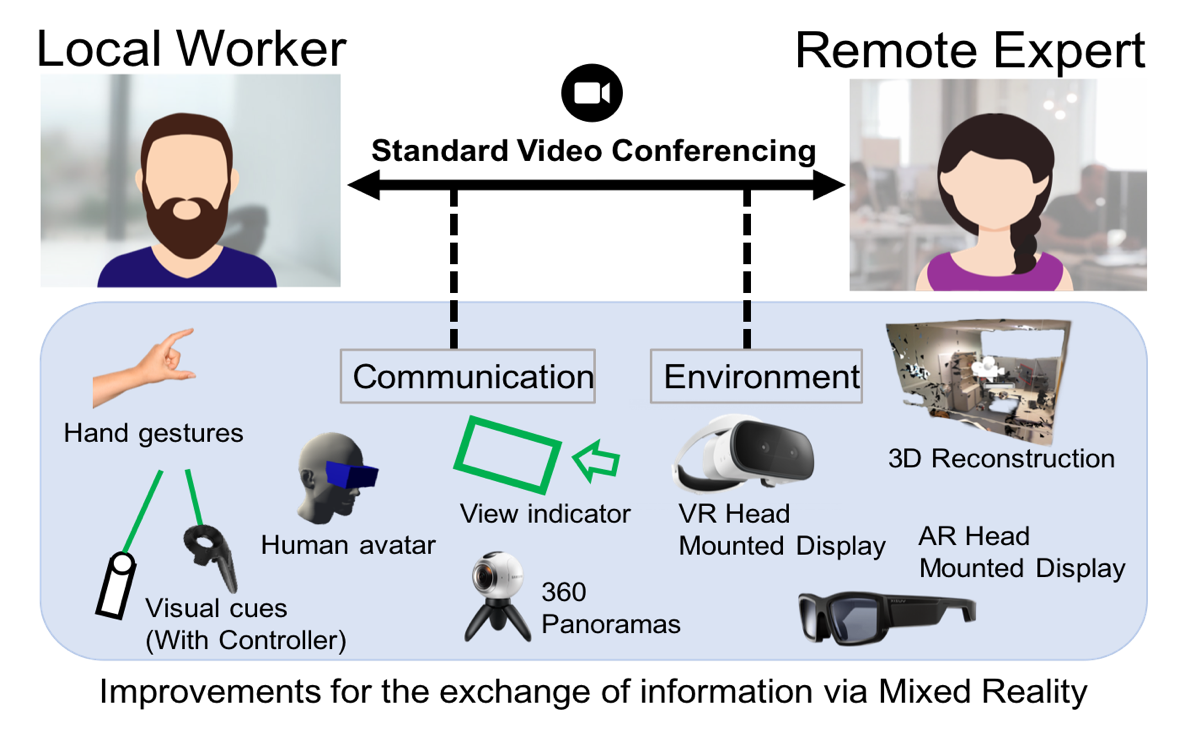

Recent advances in digital devices and hardware have enabled telepresence systems that assist with a variety of real-world tasks. A typical example is a local worker receiving guidance from a remote expert who helps solve tasks in real time.

Using Mixed Reality technology can help achieve immersive remote collaboration

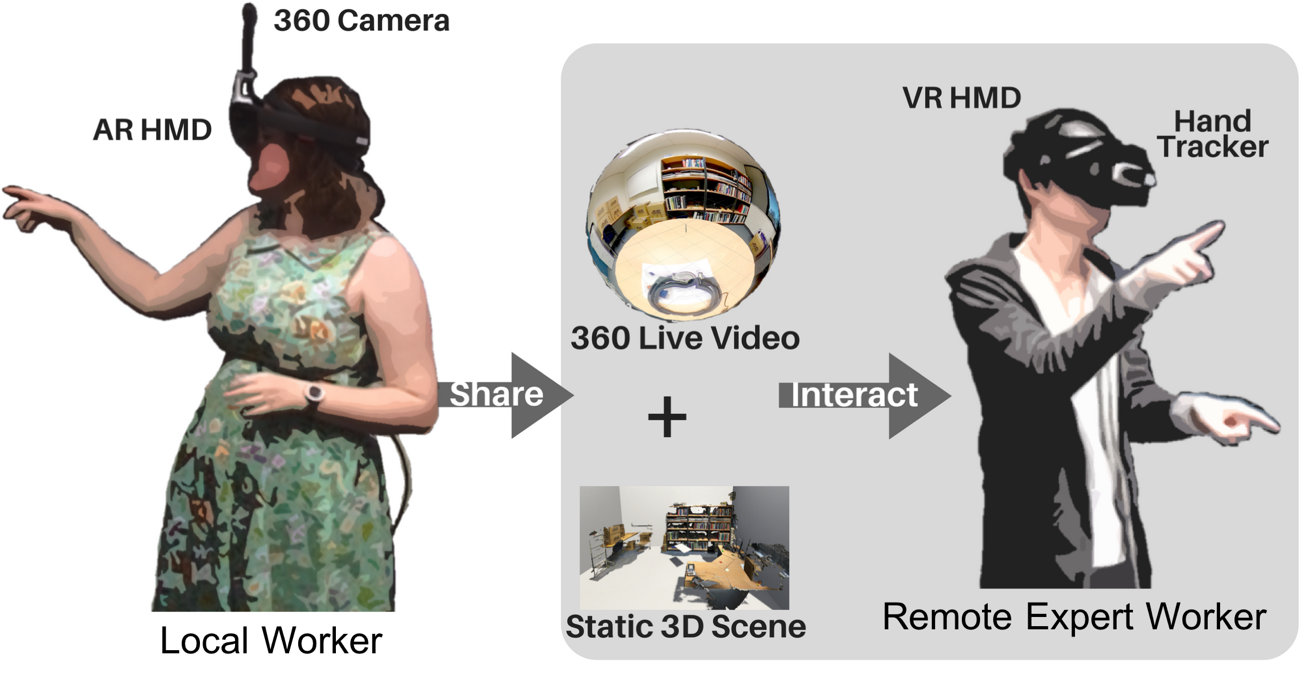

With Augmented Reality (AR) and Virtual Reality (VR), the local worker can share his/her surroundings with the remote expert by streaming a 360 video or a 3D reconstruction. Wearing a VR headset, the remote expert is immersed in the shared environment and can send visual cues that give nonverbal instructions. The local worker, wearing an AR headset, sees these cues and can follow the remote expert's guidance.

Sharing an environment through a 360 video offers a simple, portable setup that lets a local worker easily stream his/her surroundings. However, the viewpoint is tied to the camera's location, so the camera must be moved manually whenever the remote expert needs a different perspective. Sharing an environment through a 3D reconstruction overcomes these issues by scanning and reconstructing the local worker's environment, which lets the remote expert walk around and navigate the shared space independently. However, a 3D reconstruction can be difficult to set up and consumes a large amount of bandwidth.

With these limitations in mind, Theo combined 360 video and 3D reconstruction into a hybrid remote collaboration system that offers both high portability and independent navigation. The approach bridges the two techniques in two ways. The first switches between a 360 mode (360 video) and a 3D mode (3D reconstruction), much like flipping a light switch. The second embeds 360 panorama images into the 3D environment, which helps with a wider variety of more complicated tasks.
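The "light switch" idea can be pictured as a simple two-state toggle. The sketch below is a hypothetical illustration only, not Theo's implementation. The names `ViewMode`, `HybridView`, `toggle` and `can_move_freely` are invented for this example. It models only the mode switch and leaves out streaming, rendering and the panorama-embedding approach.

```python
from enum import Enum


class ViewMode(Enum):
    """Hypothetical labels for the two ways a remote expert can view the shared space."""
    MODE_360 = "360 video"          # viewpoint tied to the local worker's camera
    MODE_3D = "3D reconstruction"   # free navigation in the scanned scene


class HybridView:
    """Toy model (hypothetical) of a remote expert's view switching between two modes."""

    def __init__(self) -> None:
        # Start in 360 mode, the simpler and more portable option.
        self.mode = ViewMode.MODE_360

    def toggle(self) -> ViewMode:
        # Flip the "light switch" to the other mode and report the new mode.
        if self.mode is ViewMode.MODE_360:
            self.mode = ViewMode.MODE_3D
        else:
            self.mode = ViewMode.MODE_360
        return self.mode

    def can_move_freely(self) -> bool:
        # Only the 3D reconstruction lets the expert walk around independently.
        return self.mode is ViewMode.MODE_3D


if __name__ == "__main__":
    view = HybridView()
    print(view.mode.value, view.can_move_freely())
    view.toggle()
    print(view.mode.value, view.can_move_freely())
```

Keeping the mode as explicit state makes it easy to check what the expert can currently do, such as whether free navigation is available.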

A local worker shares her environment with a remote expert in two ways using the hybrid system, giving the remote expert more flexible ways to access the shared environment.

“It creates variation and it is more than just being hybrid. It is developed to unleash the better potential of a Mixed Reality technology. A hybrid system could be a stepping stone for future Mixed Reality remote collaboration, but it opens the gate that leads to it,” Theo said.

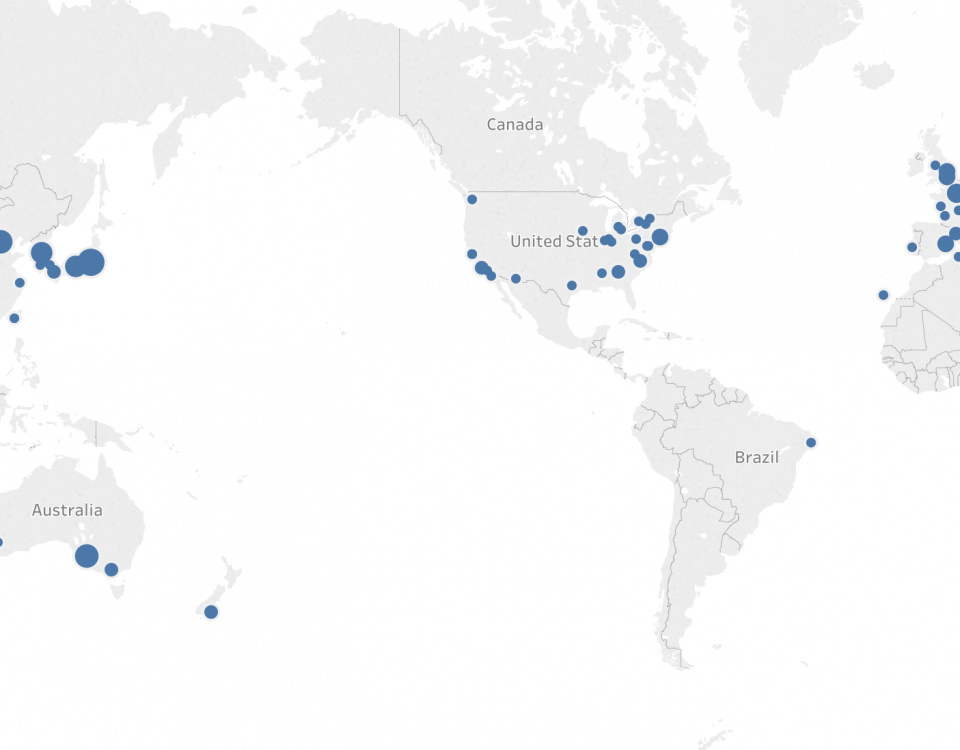

Theo conducted several experiments to validate the concept. Tests with colleagues and students on the university campus showed promising results for object-moving and object-searching tasks.

Theo said, “Participants tried the system and they enjoyed it. They thought it was useful as it provides them with the ability to solve tasks according to the situation.” He added, “They thought the system can help remote expert workers to easily understand the local worker’s environment and quickly navigate in the environment to work with the local worker.”

In future work, Theo plans to explore other interaction techniques within the hybrid system. These may include manipulating 360 panorama images in the 3D environment for scene editing, or adding haptic and proprioceptive feedback to improve the user’s sense of social presence. He hopes his work and findings will contribute to the design of future telecommunication systems and extend the capabilities of current MR remote collaboration systems.

This research was funded by a CSIRO Data61 PhD Scholarship and a South Australian Research Fellowship project.

For more information, see these research papers:

Lee, G. A., Teo, T., Kim, S., & Billinghurst, M. (2018, October). A user study on MR remote collaboration using live 360 video. In 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 153-164). IEEE.

Teo, T., Lawrence, L., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, May). Mixed reality remote collaboration combining 360 video and 3D reconstruction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1-14).

Teo, T., Hayati, A. F., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, November). A technique for mixed reality remote collaboration using 360 panoramas in 3D reconstructed scenes. In 25th ACM Symposium on Virtual Reality Software and Technology (pp. 1-11).