Gun Lee

Senior Lecturer

Dr. Gun Lee is a Senior Lecturer investigating interaction and visualization methods for sharing virtual experiences in Augmented Reality (AR) and immersive 3D environments. Using AR and wearable interfaces to improve remote collaboration has recently been one of his main research themes. He is now extending this work to share richer communication cues and to scale it up to larger groups of participants.

Before joining the Empathic Computing Lab, he was a Research Scientist at the Human Interface Technology Laboratory New Zealand (HIT Lab NZ, University of Canterbury), where he led mobile and wearable AR research projects. Before that, he worked as a researcher at the Electronics and Telecommunications Research Institute (ETRI, Korea), developing VR and AR technology for industrial applications, including immersive 3D visualization systems and virtual training systems.

Dr. Lee received his B.S. degree in Computer Science from Kyungpook National University (Korea), and his Master's and Ph.D. degrees in Computer Science and Engineering from POSTECH (Korea), where he investigated immersive authoring methods for creating VR and AR content using 3D user interfaces.

Personal website: http://gun-a-lee.appspot.com

Projects


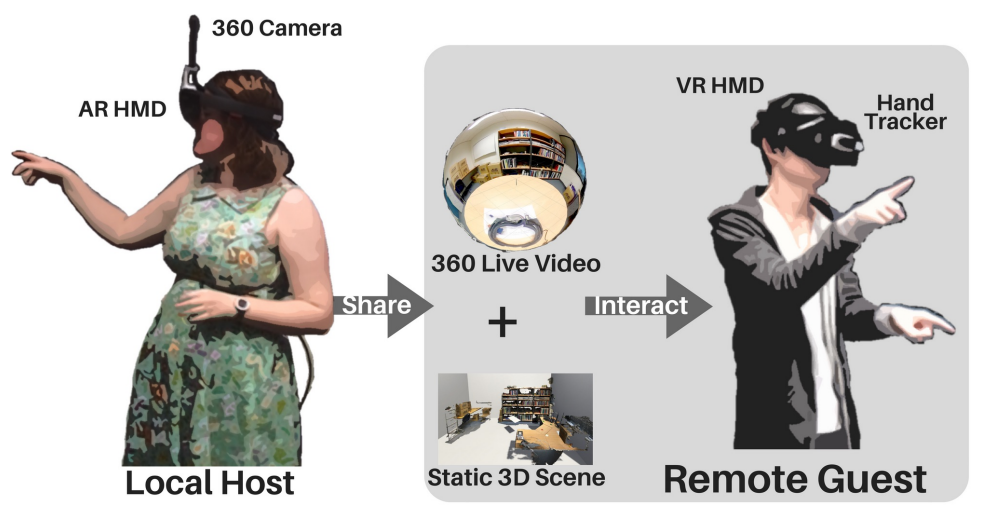

SharedSphere

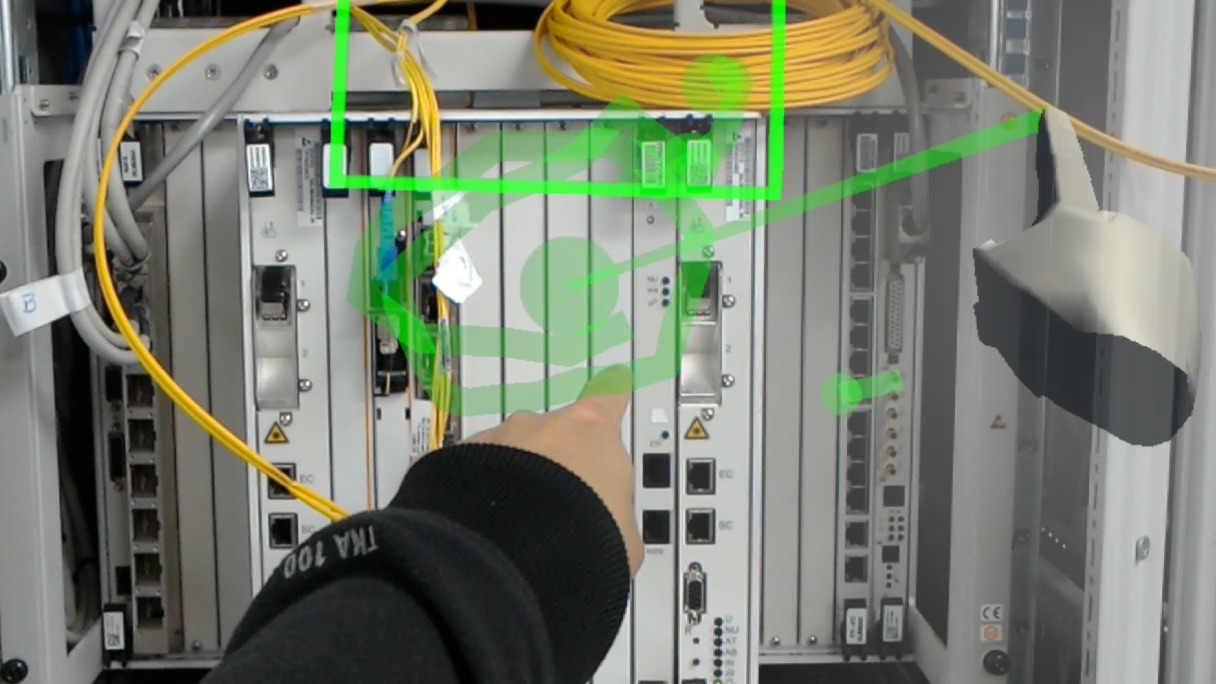

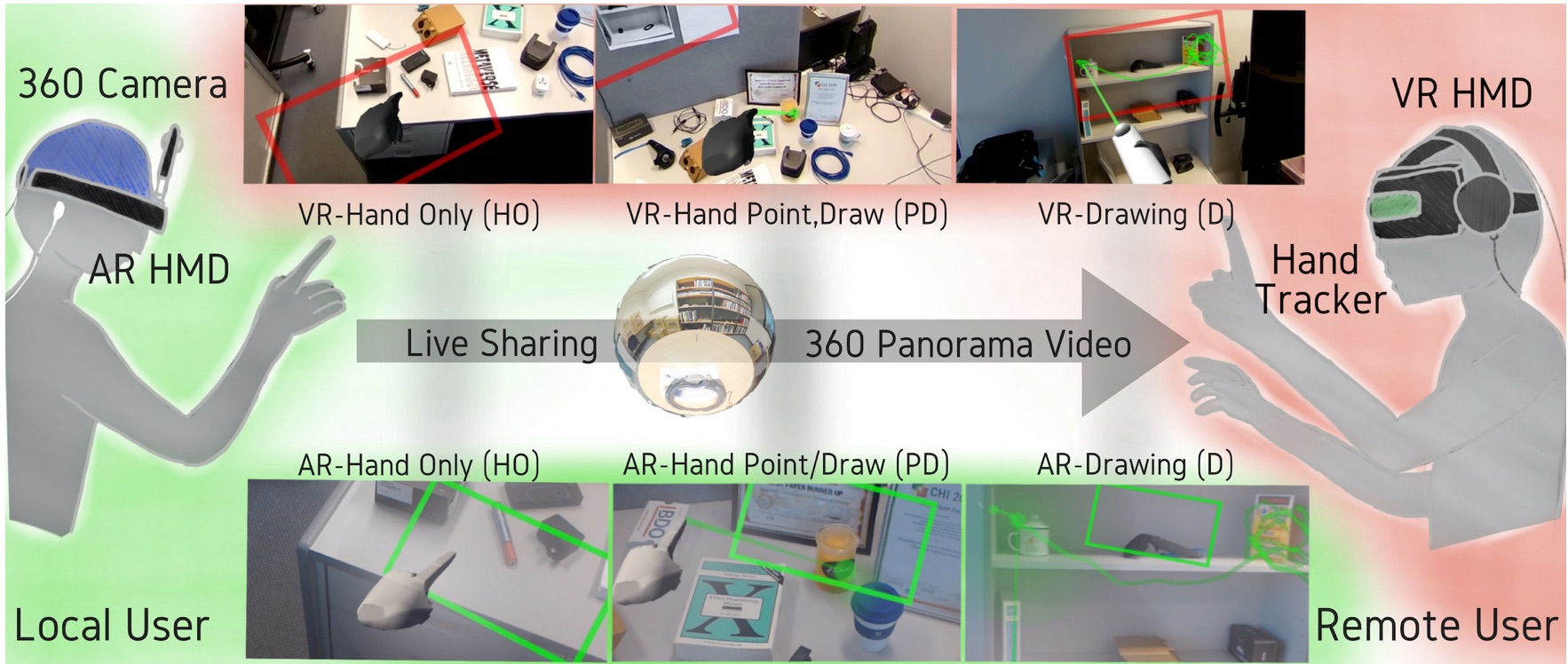

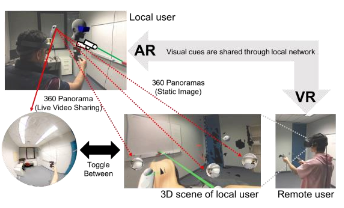

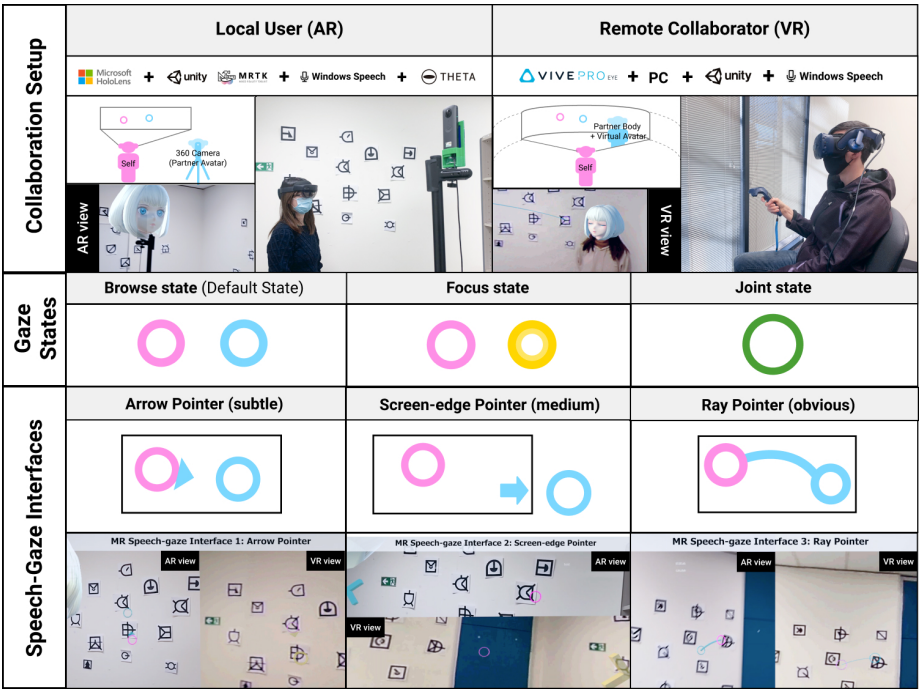

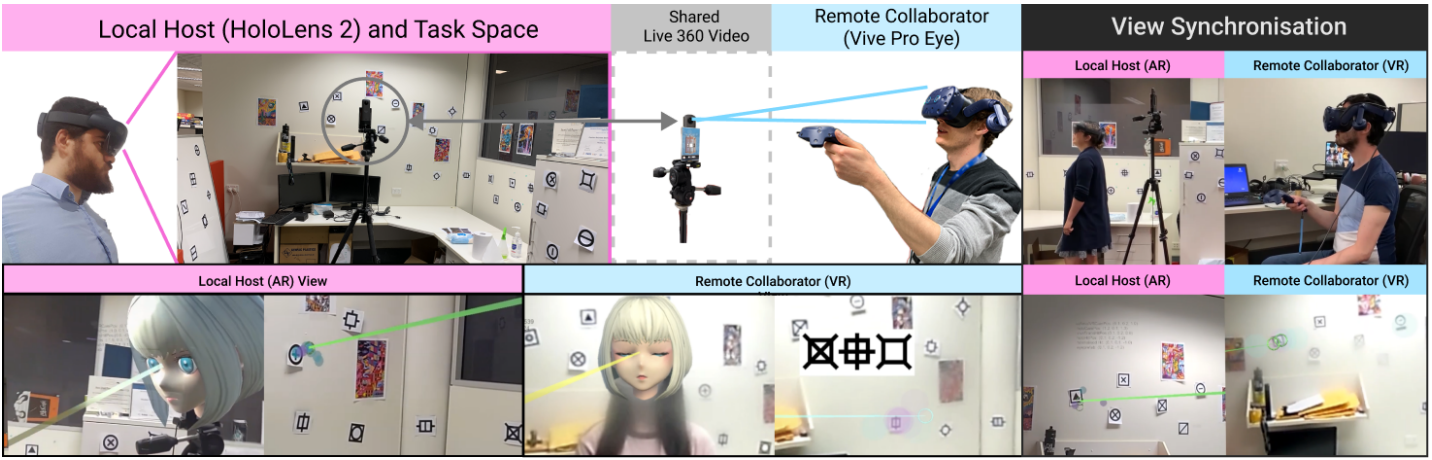

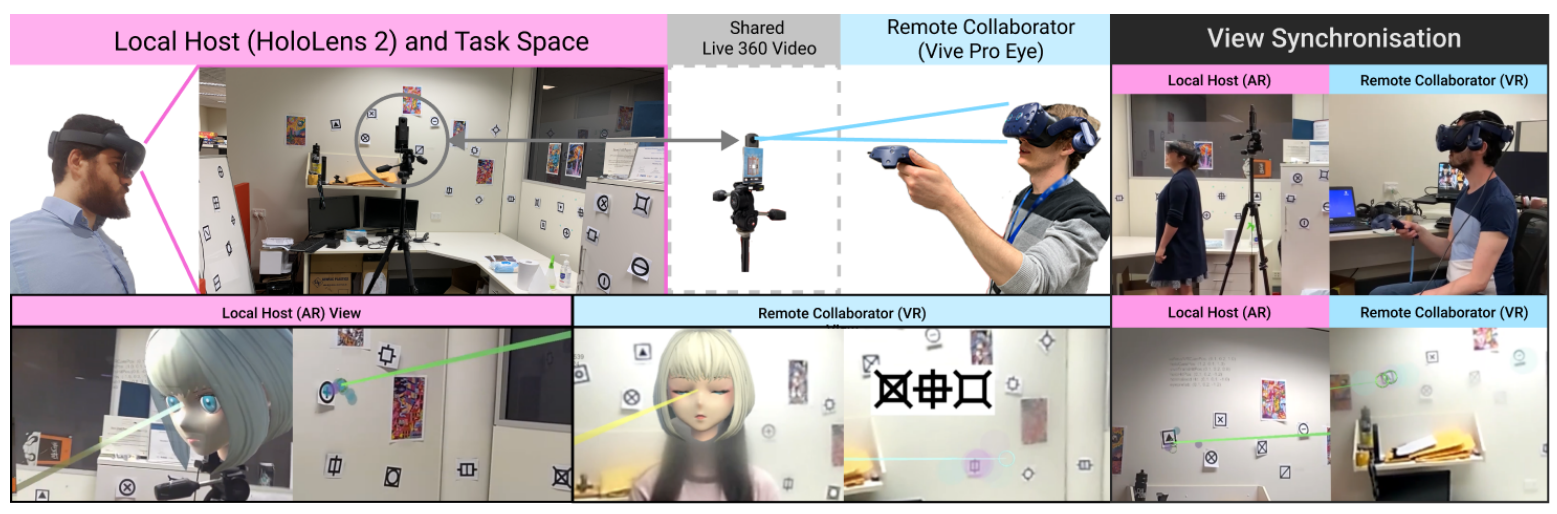

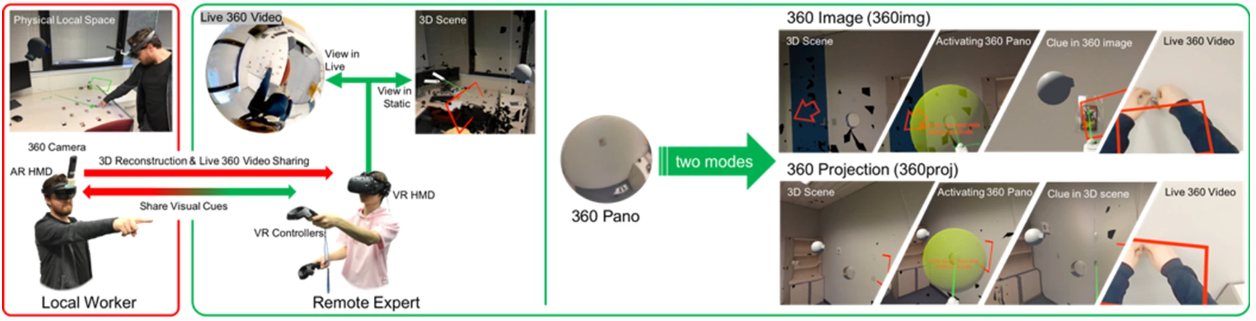

SharedSphere is a Mixed Reality-based remote collaboration system that not only allows users to share a live captured immersive 360 panorama, but also supports enriched two-way communication and collaboration by sharing non-verbal communication cues, such as view awareness cues, drawn annotations, and hand gestures.
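To place a view awareness cue on a shared panorama, a system needs to map the remote collaborator's viewing direction onto the panorama image. The Python sketch below shows one way to do this, assuming the live panorama is an equirectangular frame with yaw 0 at the image centre and pitch +90° at the top row. These conventions and the function name `view_to_equirect_pixel` are hypothetical and are not taken from SharedSphere itself.

```python
def view_to_equirect_pixel(yaw_deg: float, pitch_deg: float,
                           width: int, height: int) -> tuple[int, int]:
    """Map a view direction to (x, y) pixel coordinates in an equirectangular frame.

    Assumed (hypothetical) conventions: yaw 0 at the image centre, positive yaw
    to the right, pitch +90 at the top row and -90 at the bottom row.
    """
    # Normalise yaw into [-180, 180) so wrap-around is handled consistently.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Clamp pitch to the valid range of the projection.
    pitch = max(-90.0, min(90.0, pitch_deg))
    # Longitude maps linearly to x, latitude maps linearly to y.
    x = int((yaw / 360.0 + 0.5) * width) % width
    y = int((0.5 - pitch / 180.0) * height)
    # pitch = -90 would otherwise land one row past the last row.
    y = min(y, height - 1)
    return x, y
```

For example, a collaborator looking 90° to the right at the horizon of a 3840×1920 frame maps to pixel (2880, 960), where a cue such as a view frame or marker could then be drawn.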


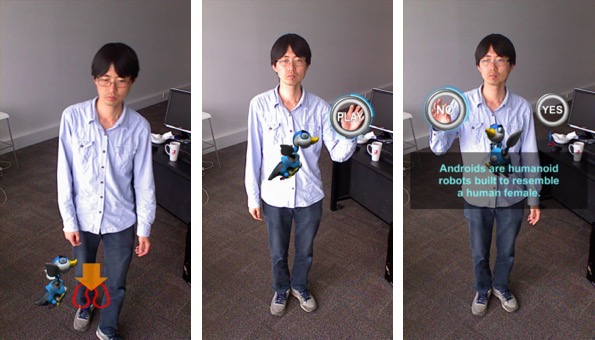

Augmented Mirrors

Mirrors are physical displays that show our real world in reflection. While physical mirrors simply show what is in the real-world scene, digital technology also lets us alter the reality reflected in the mirror. The Augmented Mirrors project explores visualisation and interaction techniques for using mirrors as Augmented Reality (AR) displays, with a particular focus on user interface agents that guide user interaction with Augmented Mirrors.


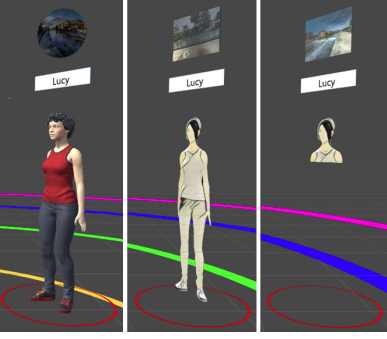

Mini-Me

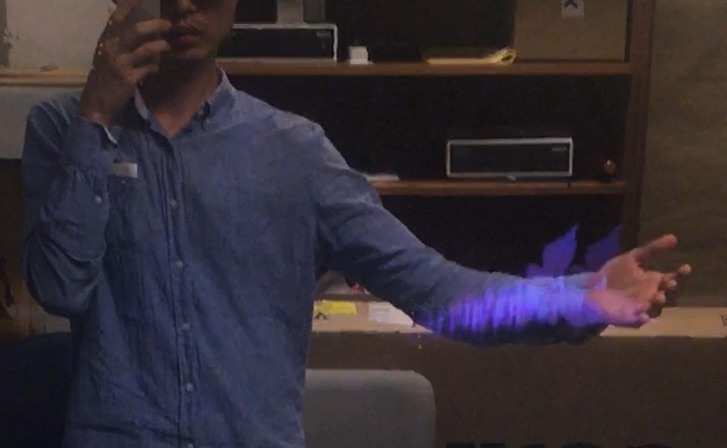

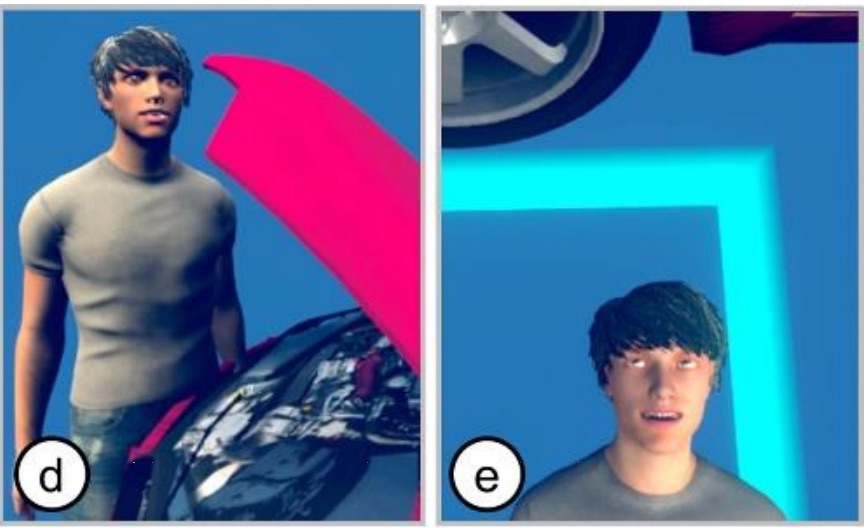

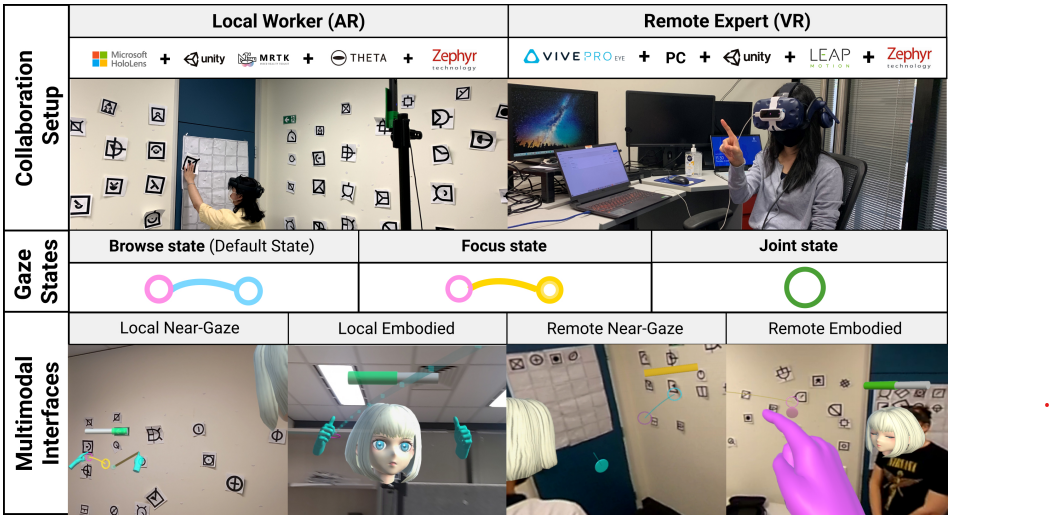

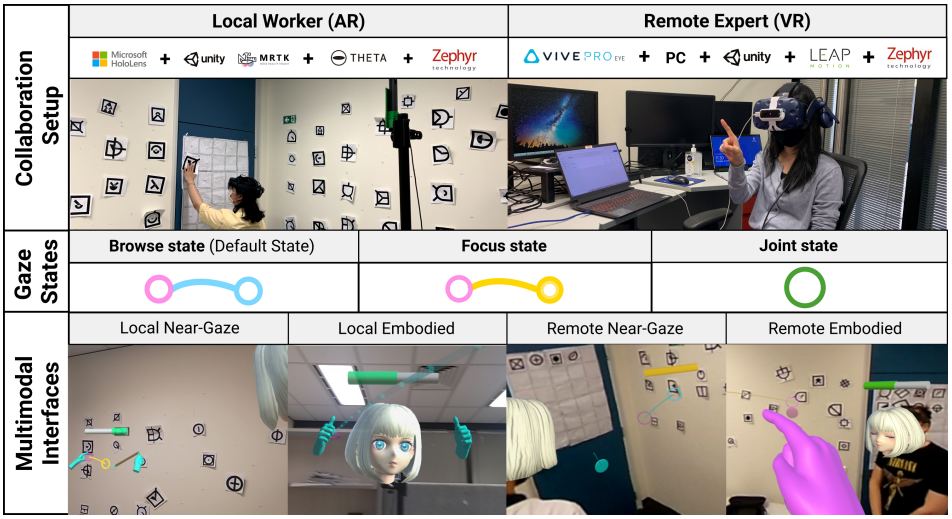

Mini-Me is an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user’s gaze direction and body gestures while it transforms in size and orientation to stay within the AR user’s field of view. We tested Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.
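The idea of an avatar that stays within the AR user's field of view can be illustrated with a simple geometric sketch. Assuming a top-down, viewer-relative coordinate frame, the hypothetical `keep_in_view` function below rotates the avatar onto the edge of the horizontal field of view when it falls outside it, and shrinks it so it occupies at most part of the vertical field of view. It covers only position and scale, not orientation, and the field-of-view values, margin, avatar height, and fill ratio are illustrative placeholders rather than parameters of Mini-Me.

```python
import math
from dataclasses import dataclass


@dataclass
class Placement:
    x: float      # metres to the viewer's right
    z: float      # metres in front of the viewer
    scale: float  # uniform scale factor applied to the avatar


def keep_in_view(x: float, z: float, h_fov_deg: float = 60.0,
                 v_fov_deg: float = 35.0, margin_deg: float = 5.0,
                 avatar_height: float = 1.7, fill: float = 0.5) -> Placement:
    """Clamp a viewer-relative avatar position into the horizontal FOV and
    shrink the avatar so it fills at most `fill` of the vertical FOV.

    All parameter names and default values are hypothetical placeholders.
    """
    dist = max(math.hypot(x, z), 1e-6)   # avoid a zero distance
    bearing = math.atan2(x, z)            # 0 = straight ahead, + = right
    limit = math.radians(h_fov_deg / 2.0 - margin_deg)
    clamped = max(-limit, min(limit, bearing))
    # Keep the original distance but rotate the avatar onto the allowed arc.
    new_x = dist * math.sin(clamped)
    new_z = dist * math.cos(clamped)
    # Height of the visible frustum slice at this distance.
    visible_h = 2.0 * dist * math.tan(math.radians(v_fov_deg) / 2.0)
    # Never enlarge beyond life size; shrink when the avatar would overflow.
    scale = min(1.0, fill * visible_h / avatar_height)
    return Placement(new_x, new_z, scale)
```

For instance, with these placeholder defaults an avatar standing 2 m directly to the user's right is moved onto the 25° edge of the allowed arc, still 2 m away.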


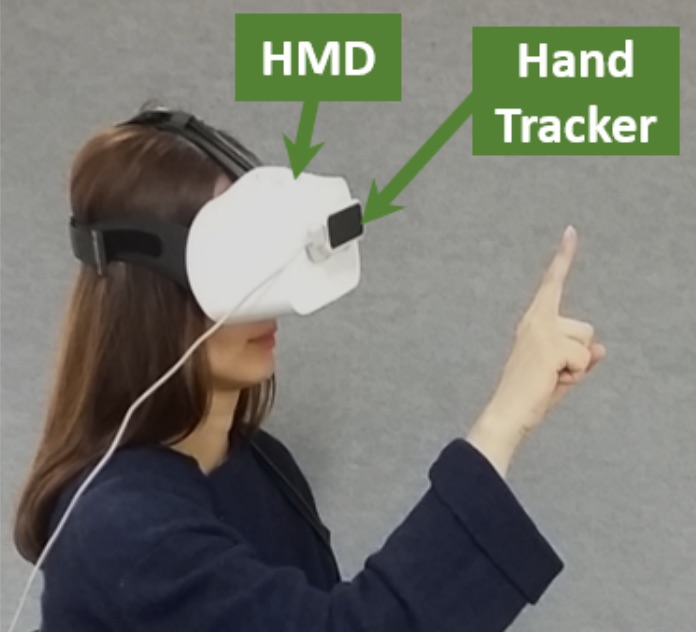

Pinpointing

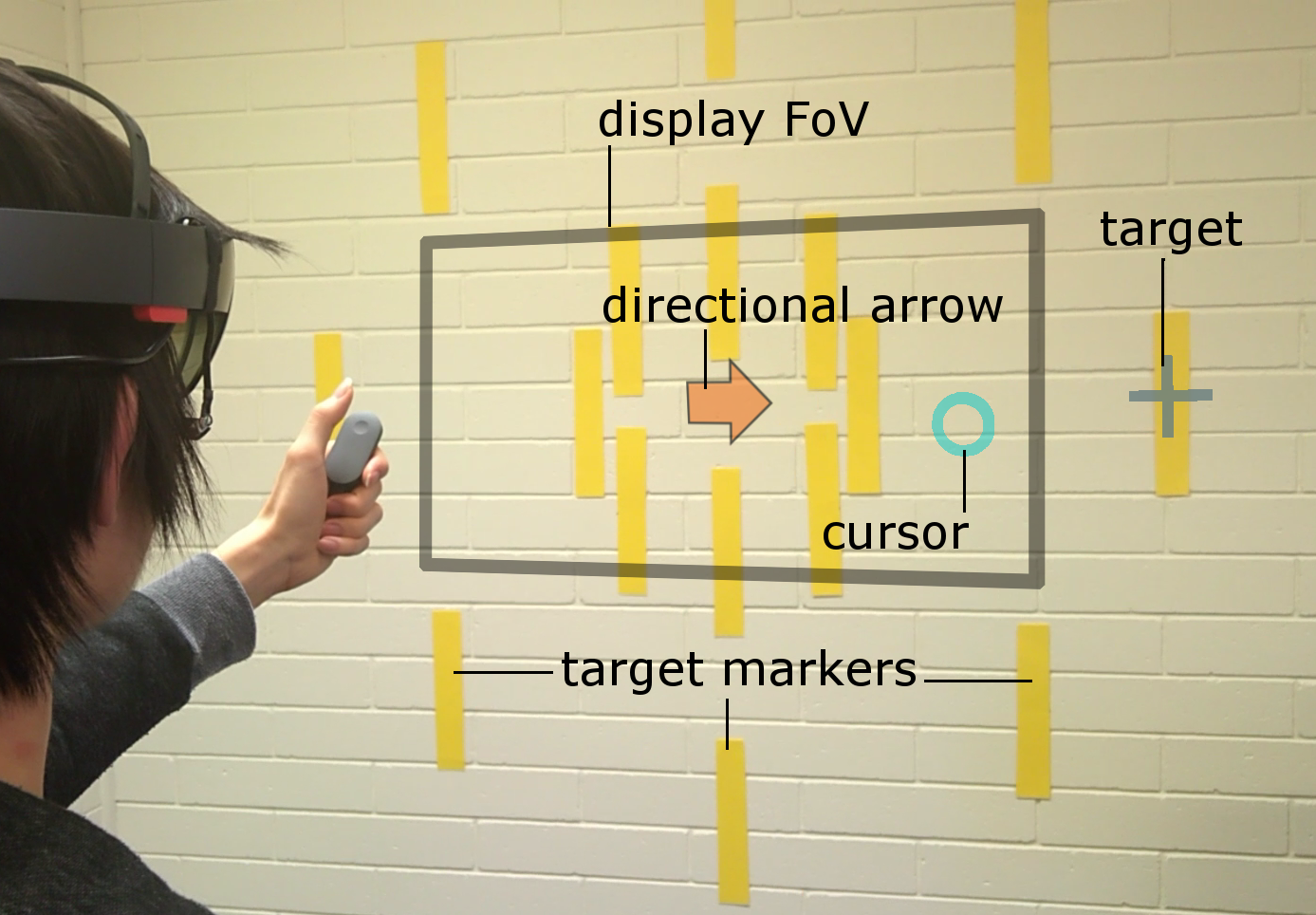

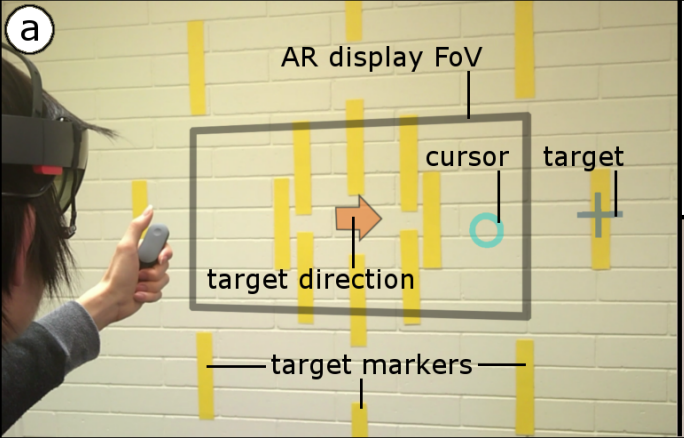

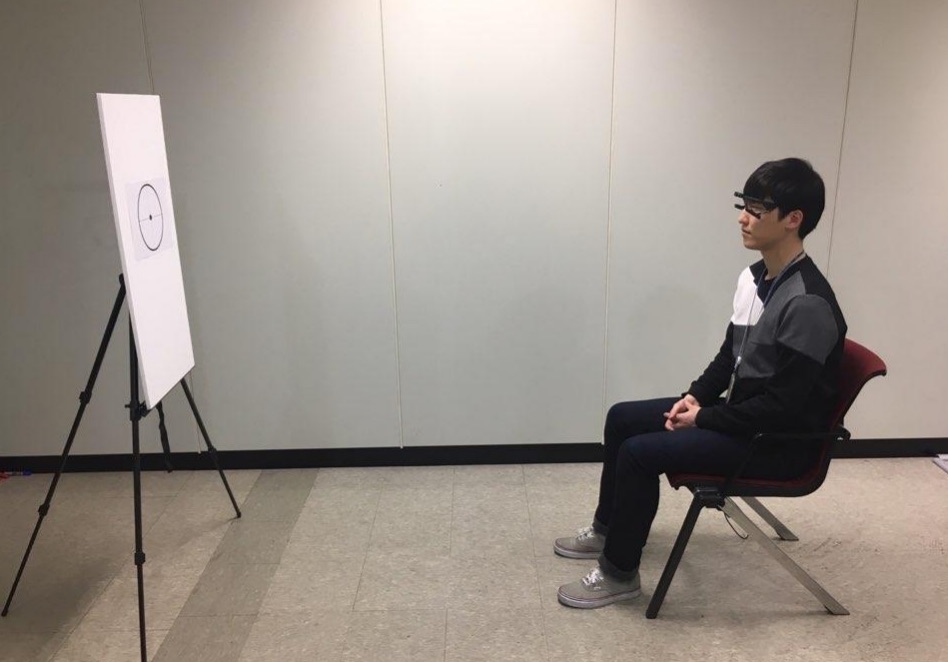

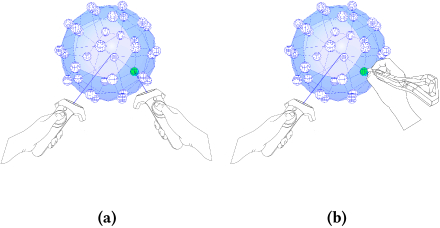

Head and eye movement can be leveraged to improve the user’s interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.
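The link between pointing accuracy and achievable target size follows from basic viewing geometry. As a simplification (not the analysis used in this work), if a pointing method has an angular error of θ, a target at distance d needs an angular width of at least 2θ, i.e. a physical width of roughly w = 2d·tan θ, for the error to stay inside it. The helper names below are hypothetical.

```python
import math


def visual_angle_deg(width_m: float, distance_m: float) -> float:
    """Angle in degrees subtended by a target of width_m seen at distance_m."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))


def min_target_width(error_deg: float, distance_m: float) -> float:
    """Smallest target width (metres) whose half-angle covers an angular
    pointing error of error_deg, i.e. a target of angular width 2 * error_deg."""
    return 2.0 * distance_m * math.tan(math.radians(error_deg))
```

With a hypothetical 1.5° error at 2 m, `min_target_width(1.5, 2.0)` gives about 0.105 m, and `visual_angle_deg` of that width at 2 m returns 3°.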


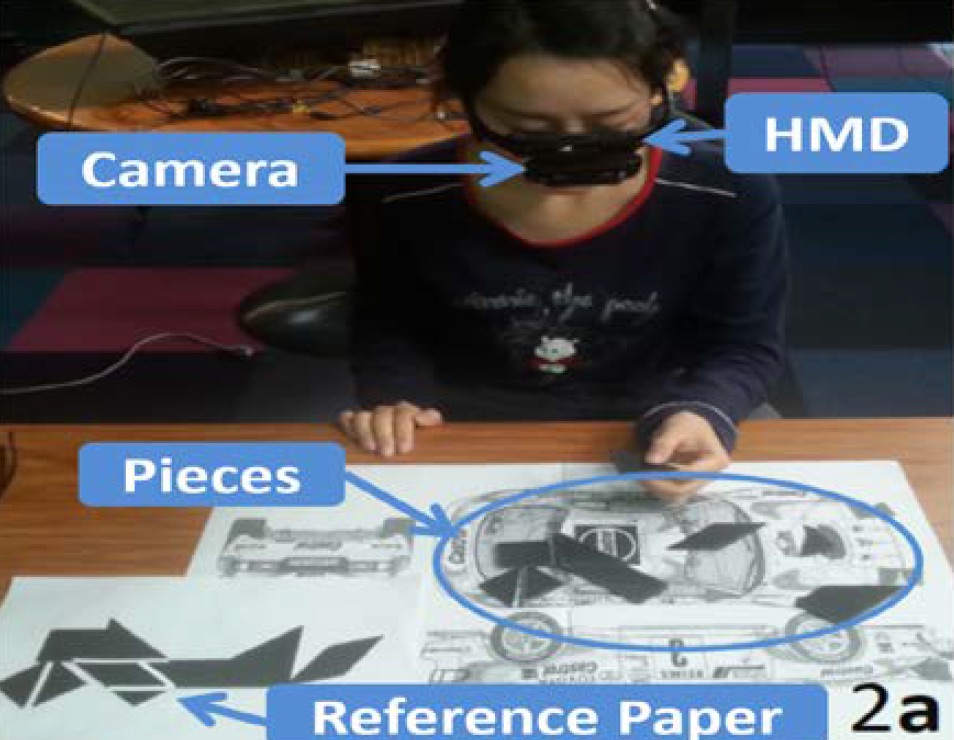

Empathy Glasses

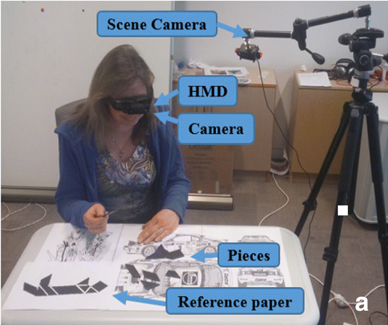

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.


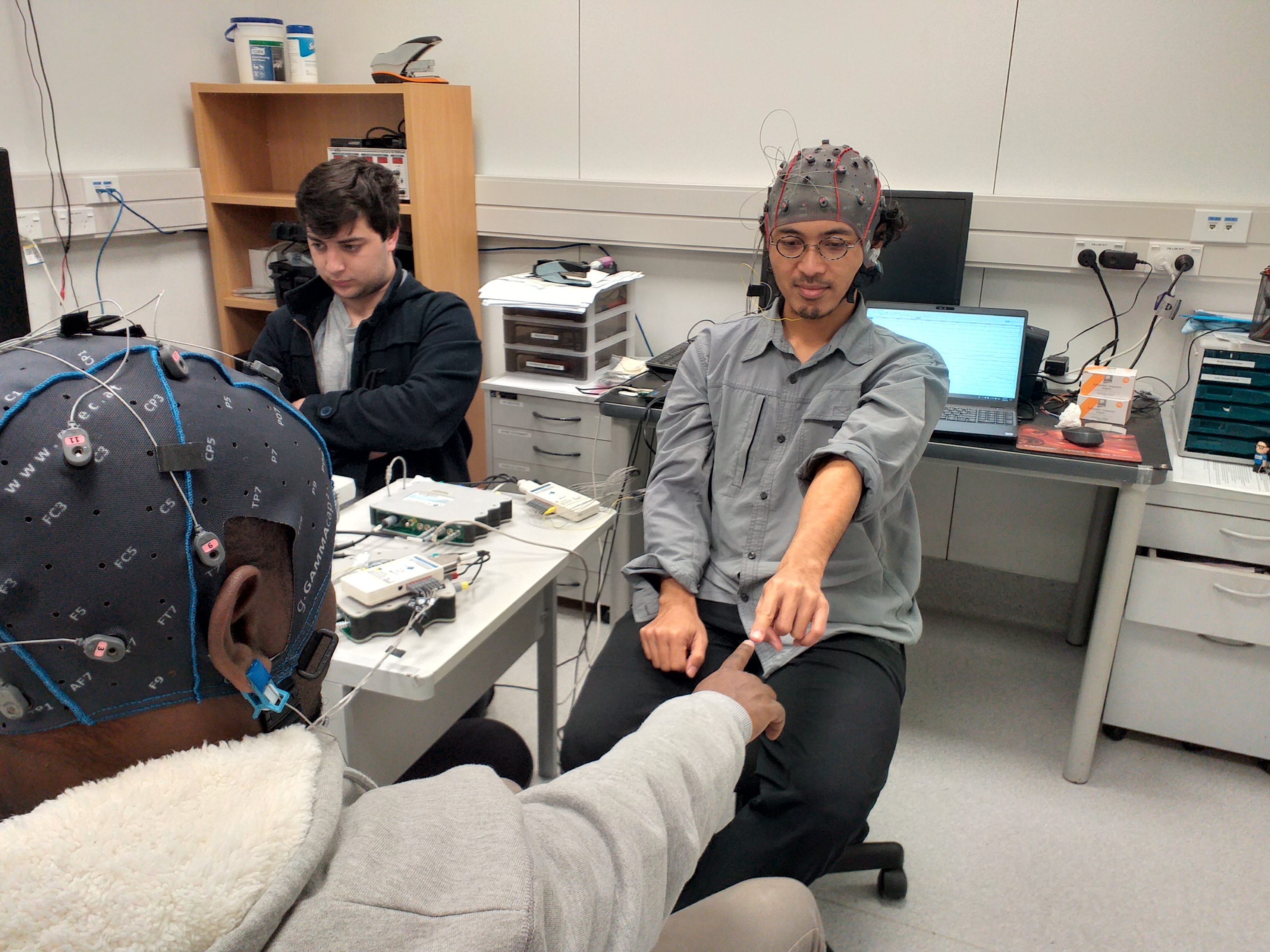

Brain Synchronisation in VR

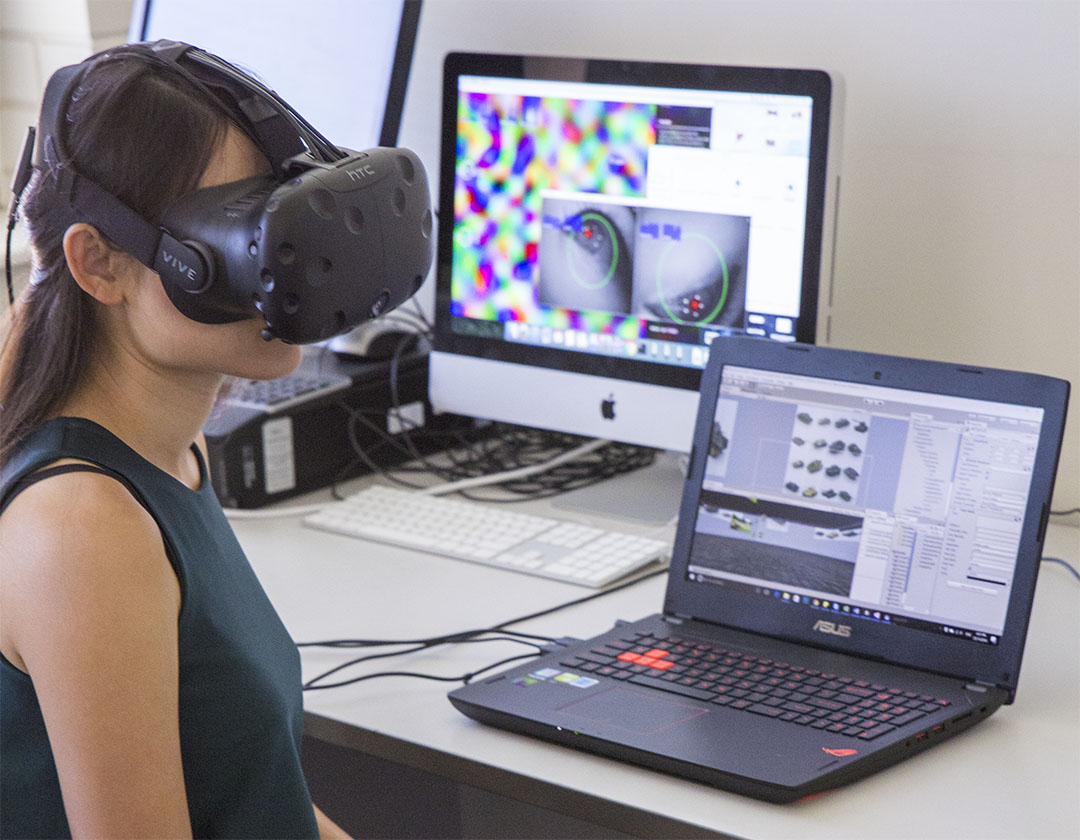

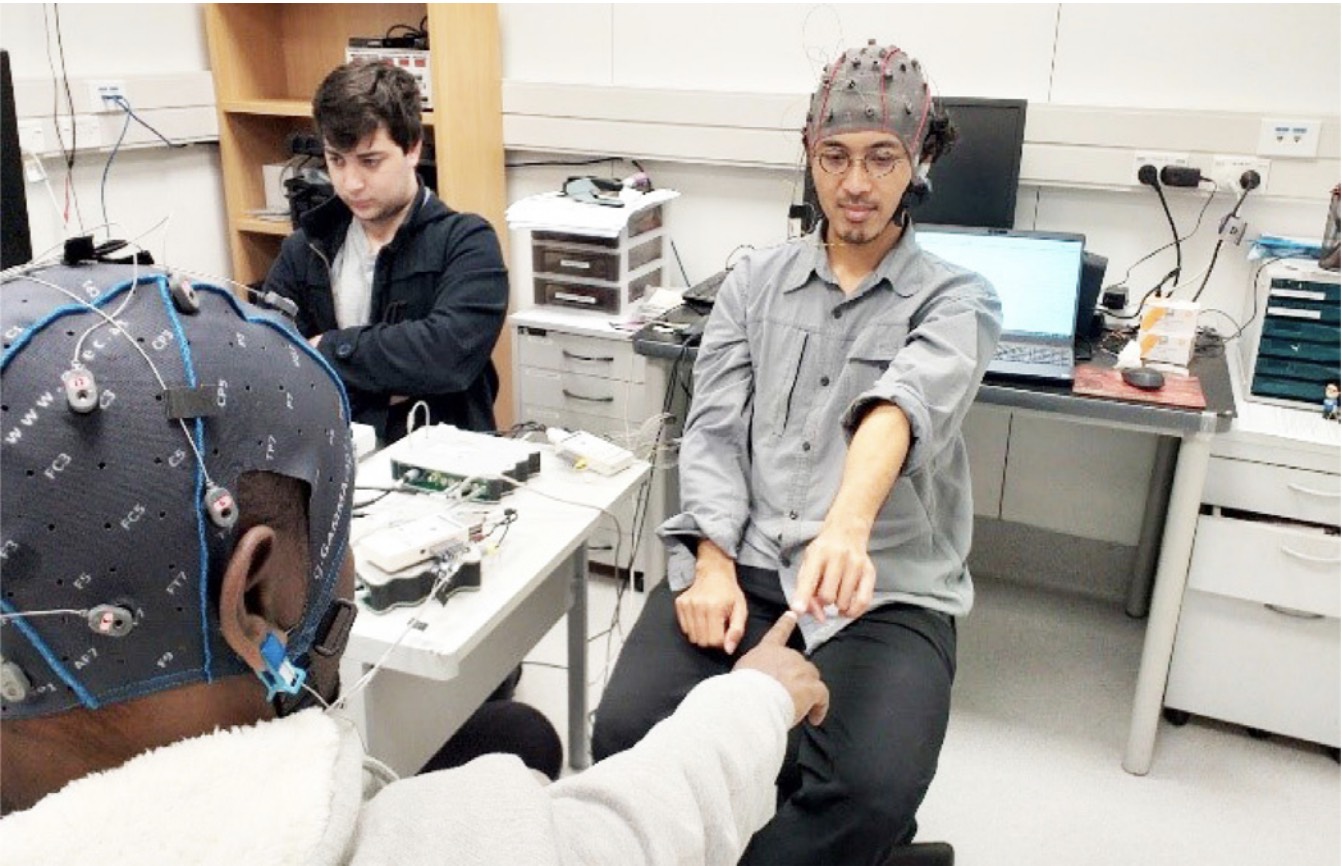

Collaborative Virtual Reality has been the subject of research for nearly three decades. This has led to a deep understanding of how individuals interact in such environments and of some of the factors that impede these interactions. However, we still do not fully understand how interpersonal interactions in virtual environments are reflected in the physiological domain. This project seeks to address this gap by monitoring the neural activity of participants in collaborative virtual environments. We do this using a technique known as Hyperscanning, the simultaneous acquisition of neural activity from two or more people, to determine whether individuals interacting in a virtual environment exhibit inter-brain synchrony. The goal of this project is first to study the phenomenon of inter-brain synchrony, and then to find ways of inducing and expediting it through changes to the virtual environment. This project feeds into the overarching goal of the Empathic Computing Laboratory: bringing individuals closer together by using technology as a vehicle to evoke empathy.
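Hyperscanning studies need a measure of inter-brain synchrony, and the project description does not state which one this project uses. One measure commonly reported in the hyperscanning literature is the phase-locking value (PLV): the magnitude of the average unit phasor of the phase difference between two signals. The sketch below assumes instantaneous phases (in radians) have already been extracted from each participant's signal, for example by band-pass filtering and a Hilbert transform; the function name is hypothetical.

```python
import cmath
from typing import Sequence


def phase_locking_value(phase_a: Sequence[float], phase_b: Sequence[float]) -> float:
    """PLV between two instantaneous-phase series (radians), in the range [0, 1].

    1.0 means a constant phase difference across all samples; values near 0
    mean the phase difference is spread evenly around the circle.
    """
    if len(phase_a) != len(phase_b) or not phase_a:
        raise ValueError("phase series must be non-empty and of equal length")
    # Average the unit phasors of the phase differences, then take the magnitude.
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phase_a, phase_b))
    return abs(total) / len(phase_a)
```

A constant phase offset between the two series yields a PLV of 1, while phase differences spread evenly around the circle yield a PLV near 0.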


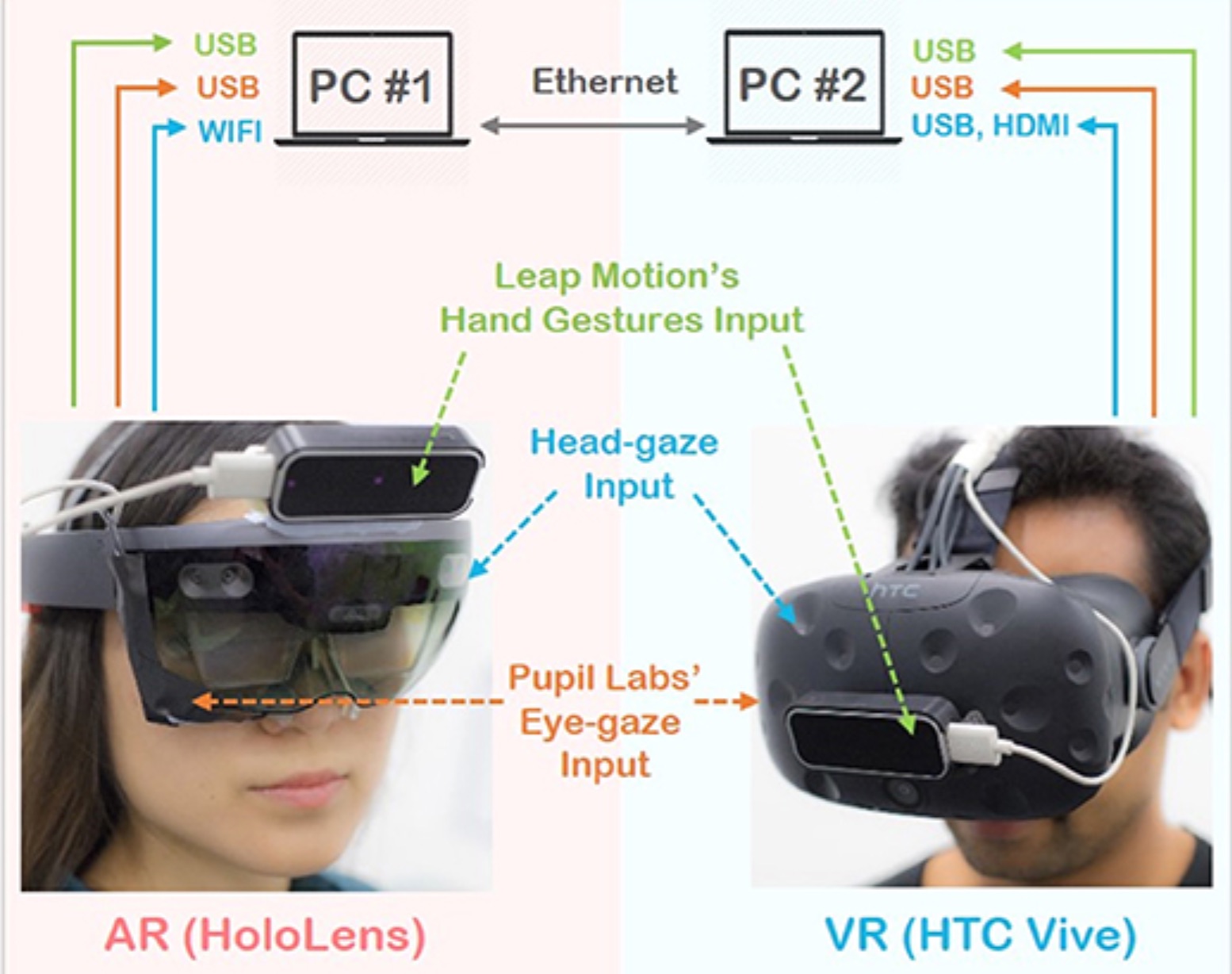

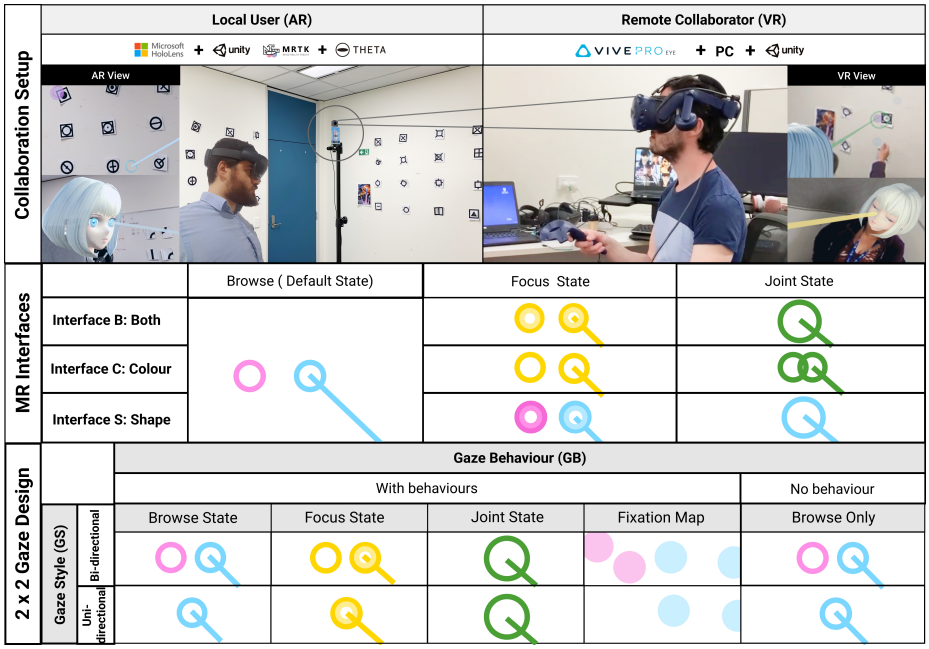

Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

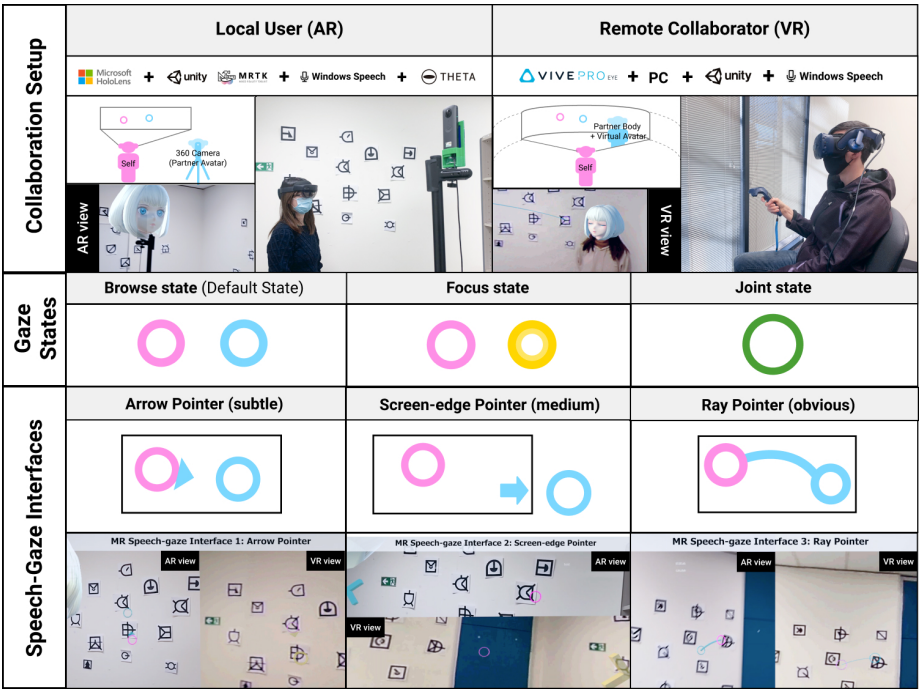

This research focuses on visualizing shared gaze cues, designing interfaces for collaborative experiences, and incorporating multimodal interaction techniques and physiological cues to support empathic Mixed Reality (MR) remote collaboration, using HoloLens 2, Vive Pro Eye, Meta Pro, HP Omnicept, the Theta V 360 camera, Windows Speech Recognition, Leap Motion hand tracking, and Zephyr/Shimmer sensing technologies.


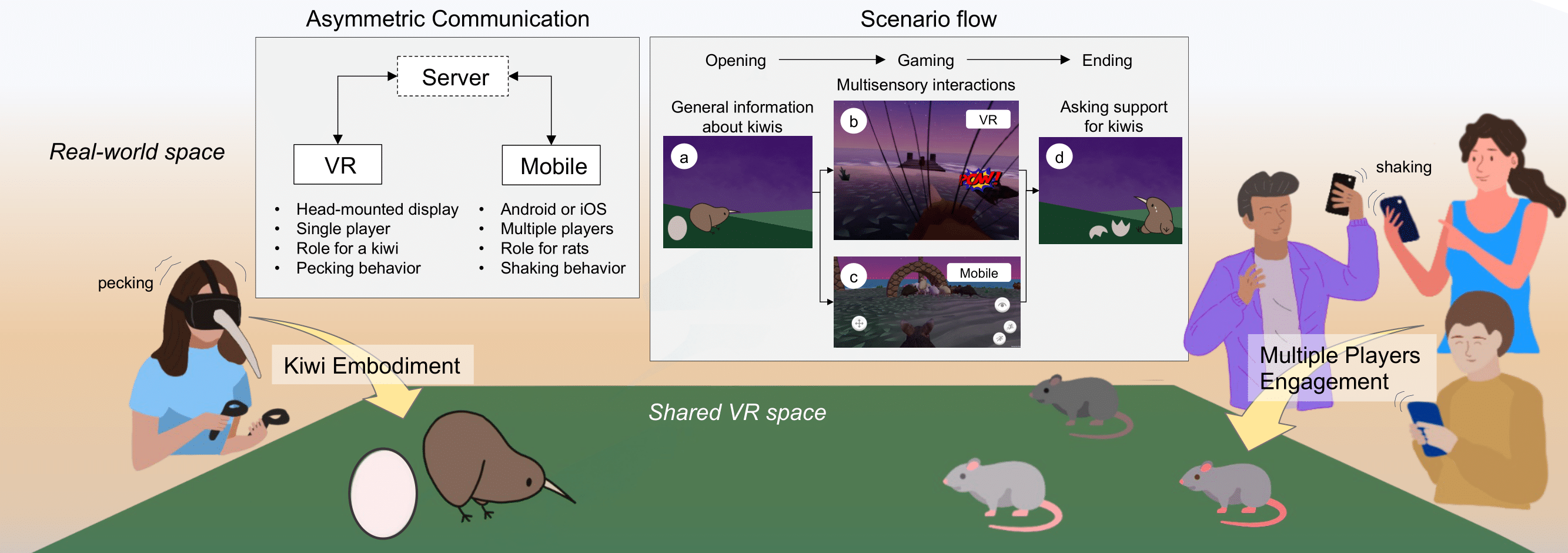

KiwiRescuer: A new interactive exhibition using an asymmetric interaction

This research demo aims to address the passive and dull museum exhibition experiences that many visitors still encounter. Current exhibitions typically offer little interactivity and mostly present information through a single sensory channel (e.g., visual, auditory, or haptic) in a one-to-one experience.


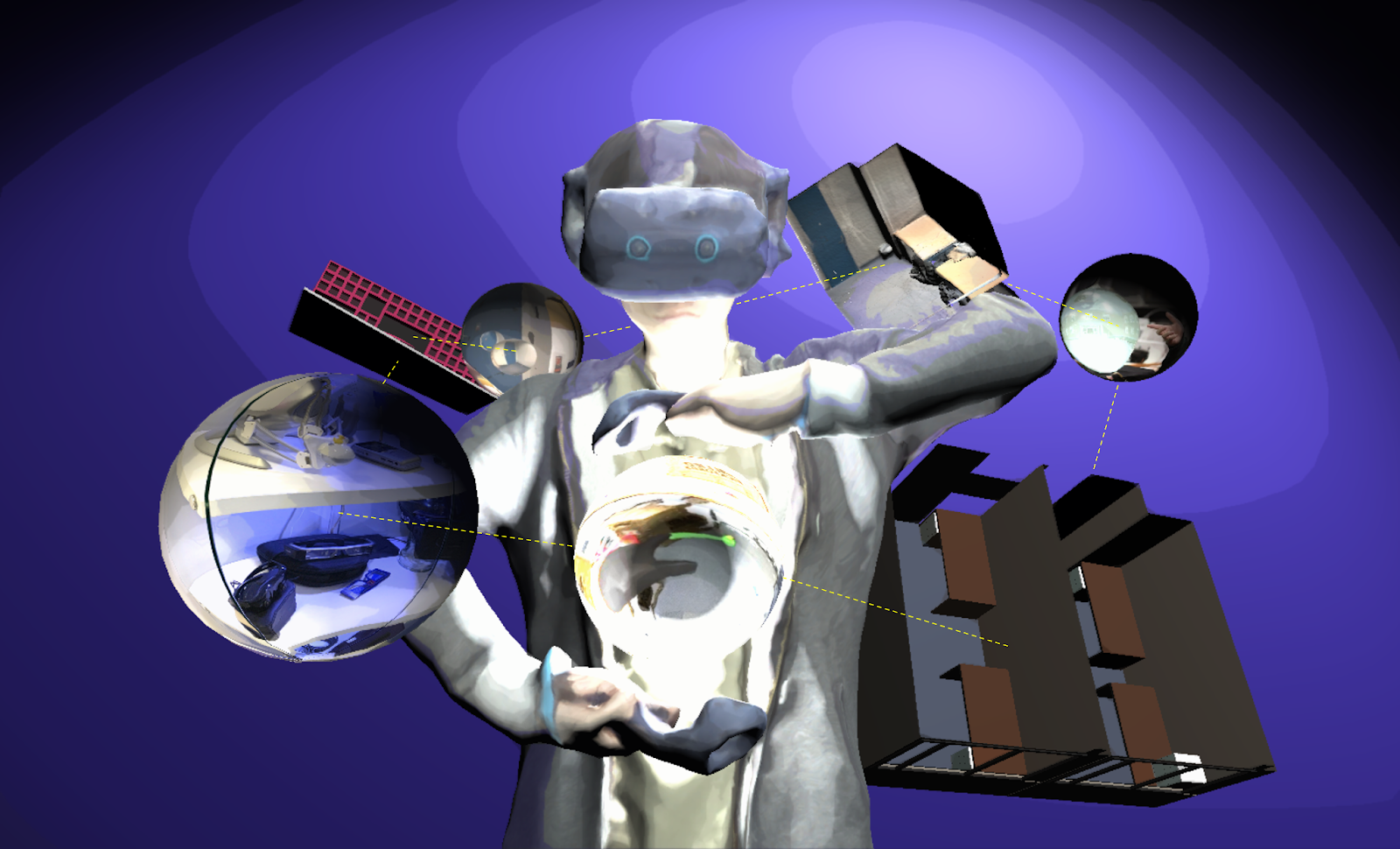

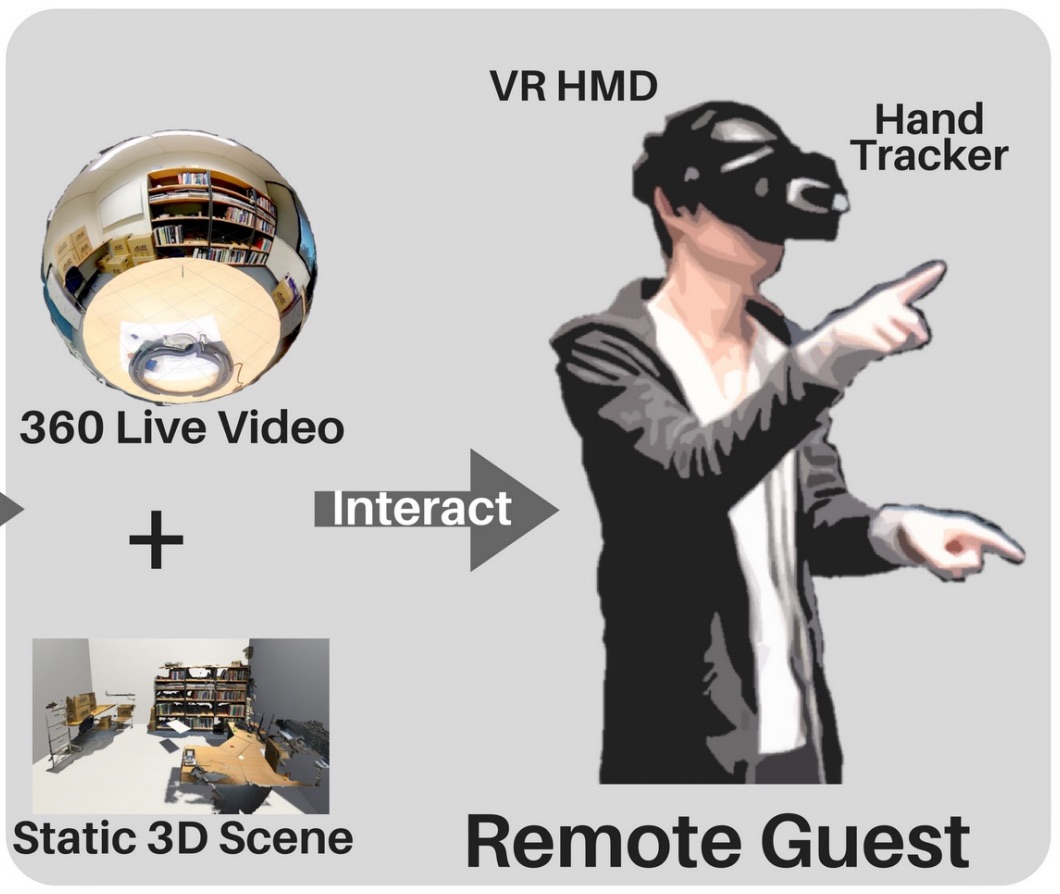

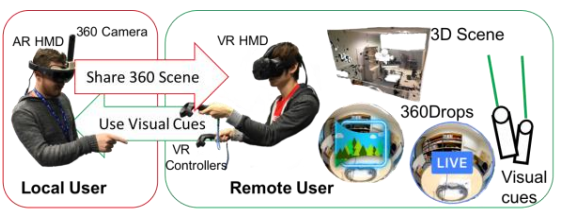

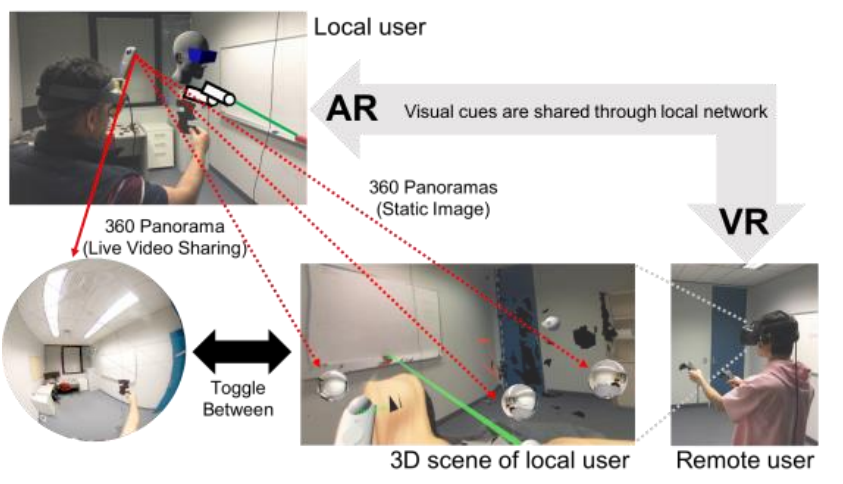

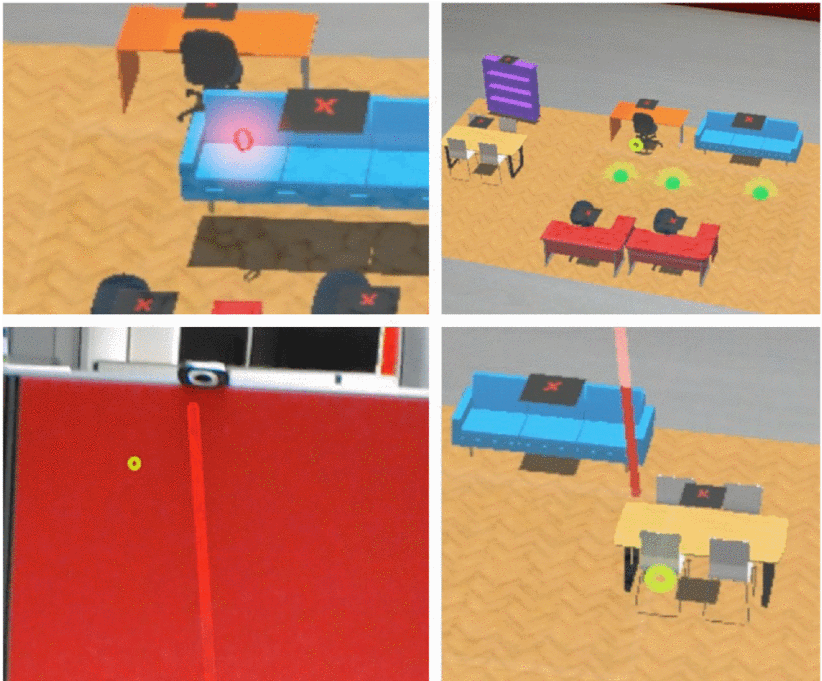

Using 3D Spaces and 360 Video Content for Collaboration

This project explores techniques to enhance the collaborative experience in Mixed Reality environments using 3D reconstructions, 360 videos, and 2D images. Previous research has shown that 360 video can provide a high-resolution immersive visual space for collaboration, but little spatial information. Conversely, 3D scanned environments can provide high-quality spatial cues, but with poor visual resolution. This project combines both approaches, enabling users to switch between a 3D view and a 360 video of a collaborative space. In this hybrid interface, users can pick the representation of space best suited to the needs of the collaborative task. The project seeks to provide design guidelines for collaboration systems that enable empathic collaboration by sharing visual cues and environments across time and space.


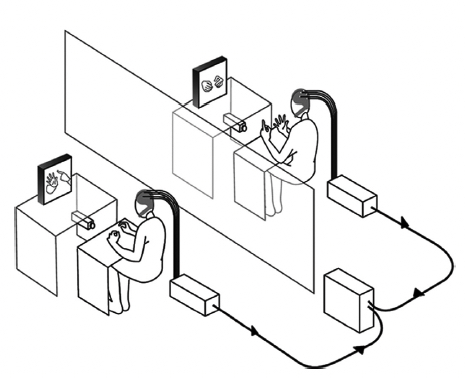

MPConnect: A Mixed Presence Mixed Reality System

This project explores how a Mixed Presence Mixed Reality System can enhance remote collaboration. Collaborative Mixed Reality (MR) is a popular area of research, but most work has focused on one-to-one systems where either both collaborators are co-located or the collaborators are remote from one another. For example, remote users might collaborate in a shared Virtual Reality (VR) system, or a local worker might use an Augmented Reality (AR) display to connect with a remote expert to help them complete a task.


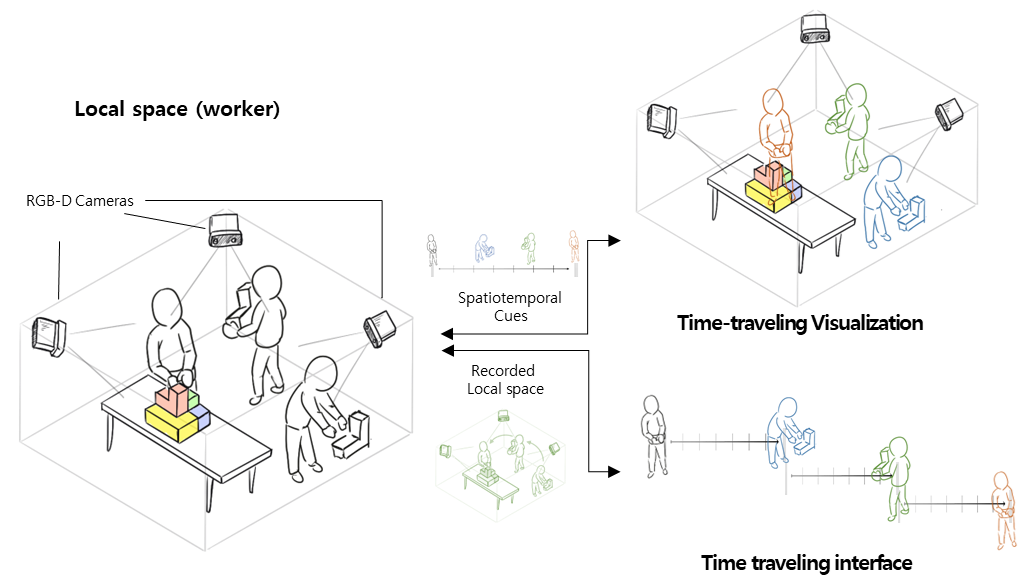

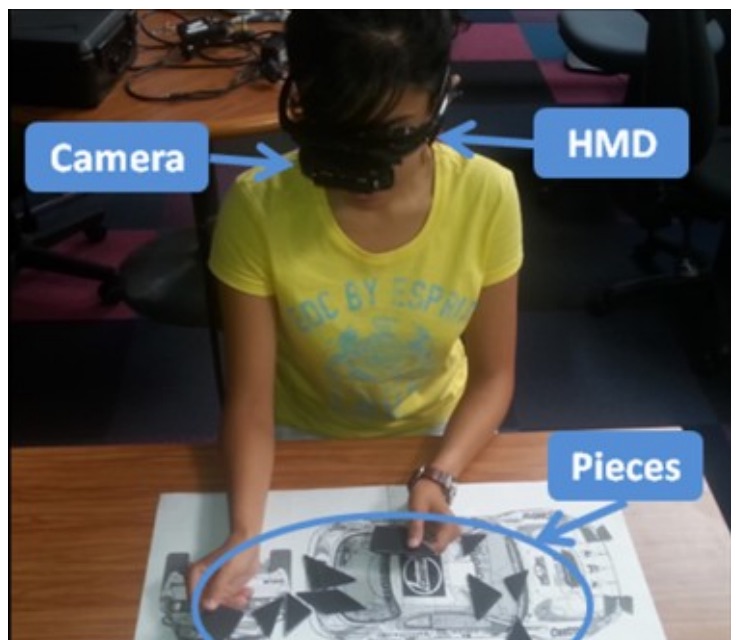

AR-based spatiotemporal interface and visualization for the physical task

The proposed study aims to help users solve physical tasks, such as mechanical assembly or collaborative design, more efficiently by using augmented reality-based space-time visualization techniques. In particular, when disassembly and reassembly are required, 3D recordings of past actions and their playback visualization help users remember the exact assembly order and the positions of objects in the task. This study proposes a novel method that employs 3D spatial information recording and augmented reality-based playback to effectively support these types of physical tasks.

Publications


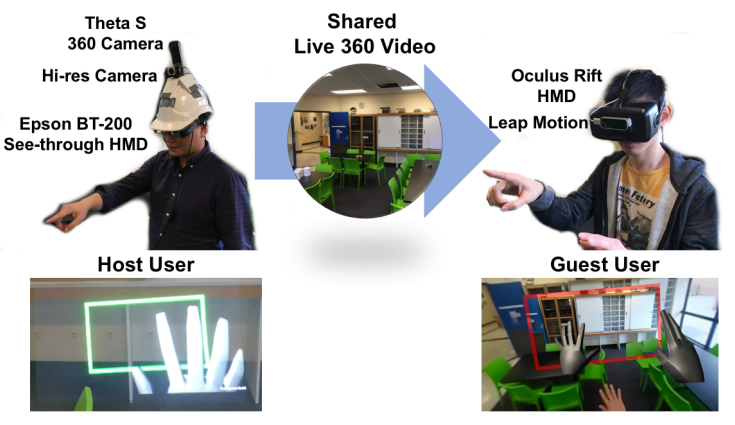

Mixed Reality Collaboration through Sharing a Live Panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

Gun A. Lee, Theophilus Teo, Seungwon Kim, and Mark Billinghurst. 2017. Mixed reality collaboration through sharing a live panorama. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA '17). ACM, New York, NY, USA, Article 14, 4 pages. http://doi.acm.org/10.1145/3132787.3139203

@inproceedings{Lee:2017:MRC:3132787.3139203,
  author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},
  title = {Mixed Reality Collaboration Through Sharing a Live Panorama},
  booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},
  series = {SA '17},
  year = {2017},
  isbn = {978-1-4503-5410-3},
  location = {Bangkok, Thailand},
  pages = {14:1--14:4},
  articleno = {14},
  numpages = {4},
  url = {http://doi.acm.org/10.1145/3132787.3139203},
  doi = {10.1145/3132787.3139203},
  acmid = {3139203},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {panorama, remote collaboration, shared experience},
}

One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates how to further improve shared live panorama-based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration by overlaying MR visualisations of non-verbal communication cues (e.g., view awareness and gesture cues). User feedback collected through a preliminary user study indicated that sharing live 360 panorama video was beneficial, providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator’s focus.

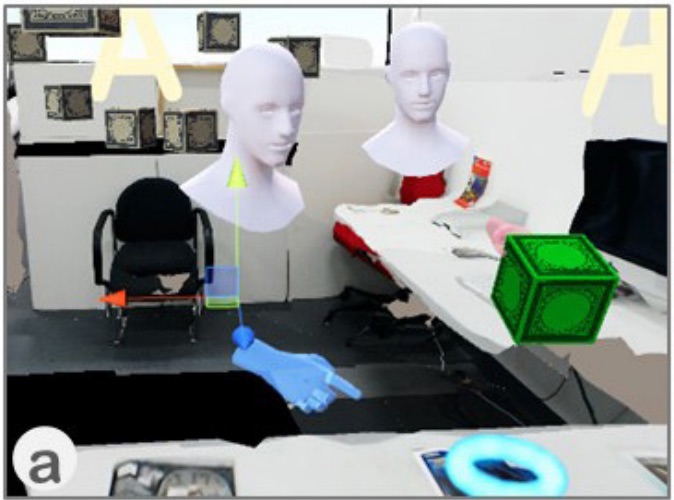

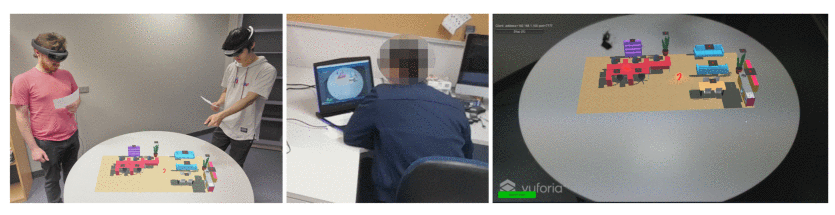

Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A Lee, Jonathon D Hart, Barrett Ens, Robert W Lindeman, Bruce H Thomas, Mark Billinghurst

Thammathip Piumsomboon, Gun A. Lee, Jonathon D. Hart, Barrett Ens, Robert W. Lindeman, Bruce H. Thomas, and Mark Billinghurst. 2018. Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 46, 13 pages. DOI: https://doi.org/10.1145/3173574.3173620

@inproceedings{Piumsomboon:2018:MAA:3173574.3173620,
  author = {Piumsomboon, Thammathip and Lee, Gun A. and Hart, Jonathon D. and Ens, Barrett and Lindeman, Robert W. and Thomas, Bruce H. and Billinghurst, Mark},
  title = {Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration},
  booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},
  series = {CHI '18},
  year = {2018},
  isbn = {978-1-4503-5620-6},
  location = {Montreal QC, Canada},
  pages = {46:1--46:13},
  articleno = {46},
  numpages = {13},
  url = {http://doi.acm.org/10.1145/3173574.3173620},
  doi = {10.1145/3173574.3173620},
  acmid = {3173620},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {augmented reality, avatar, awareness, gaze, gesture, mixed reality, redirected, remote collaboration, remote embodiment, virtual reality},
}

We present Mini-Me, an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user's gaze direction and body gestures while it transforms in size and orientation to stay within the AR user's field of view. A user study was conducted to evaluate Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality

Mikko Kytö, Barrett Ens, Thammathip Piumsomboon, Gun A Lee, Mark Billinghurst

Mikko Kytö, Barrett Ens, Thammathip Piumsomboon, Gun A. Lee, and Mark Billinghurst. 2018. Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 81, 14 pages. DOI: https://doi.org/10.1145/3173574.3173655

@inproceedings{Kyto:2018:PPH:3173574.3173655,
  author = {Kyt\"{o}, Mikko and Ens, Barrett and Piumsomboon, Thammathip and Lee, Gun A. and Billinghurst, Mark},
  title = {Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality},
  booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},
  series = {CHI '18},
  year = {2018},
  isbn = {978-1-4503-5620-6},
  location = {Montreal QC, Canada},
  pages = {81:1--81:14},
  articleno = {81},
  numpages = {14},
  url = {http://doi.acm.org/10.1145/3173574.3173655},
  doi = {10.1145/3173574.3173655},
  acmid = {3173655},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {augmented reality, eye tracking, gaze interaction, head-worn display, refinement techniques, target selection},
}

Video: https://youtu.be/nCX8zIEmv0s

Head and eye movement can be leveraged to improve the user's interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

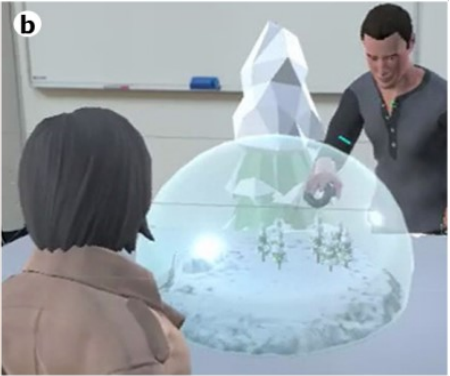

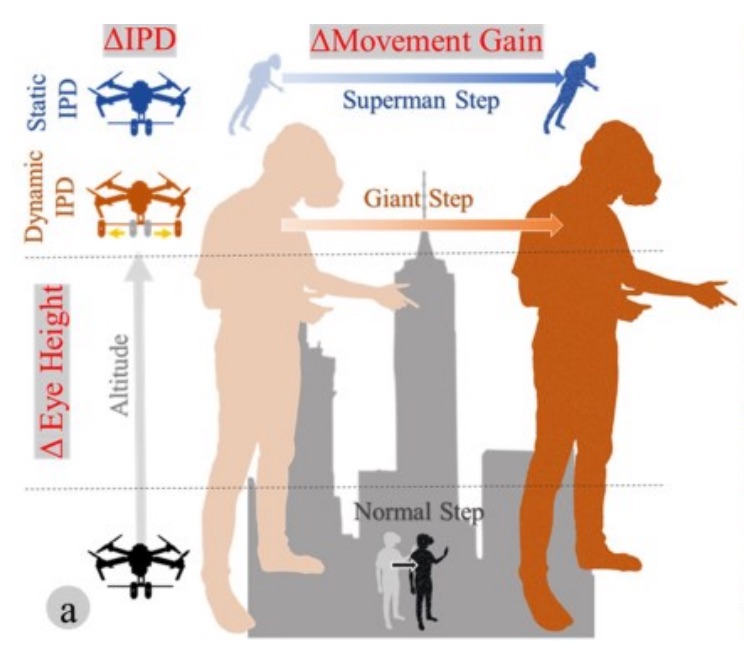

Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A Lee, Mark Billinghurst

Thammathip Piumsomboon, Gun A. Lee, and Mark Billinghurst. 2018. Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper D115, 4 pages. DOI: https://doi.org/10.1145/3170427.3186495

@inproceedings{Piumsomboon:2018:SDM:3170427.3186495,
  author = {Piumsomboon, Thammathip and Lee, Gun A. and Billinghurst, Mark},
  title = {Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration},
  booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},
  series = {CHI EA '18},
  year = {2018},
  isbn = {978-1-4503-5621-3},
  location = {Montreal QC, Canada},
  pages = {D115:1--D115:4},
  articleno = {D115},
  numpages = {4},
  url = {http://doi.acm.org/10.1145/3170427.3186495},
  doi = {10.1145/3170427.3186495},
  acmid = {3186495},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {augmented reality, avatar, mixed reality, multiple, remote collaboration, remote embodiment, scale, virtual reality},
}

Video: https://youtu.be/nm8A9wzobIE

We present Snow Dome, a Mixed Reality (MR) remote collaboration application that supports multi-scale interaction for a Virtual Reality (VR) user. We share a local Augmented Reality (AR) user's reconstructed space with a remote VR user, who can scale themselves up into a giant or down into a miniature to gain different perspectives and interact at that scale within the shared space.

Filtering Shared Social Data in AR

Alaeddin Nassani, Huidong Bai, Gun Lee, Mark Billinghurst, Tobias Langlotz, Robert W Lindeman

Alaeddin Nassani, Huidong Bai, Gun Lee, Mark Billinghurst, Tobias Langlotz, and Robert W. Lindeman. 2018. Filtering Shared Social Data in AR. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper LBW100, 6 pages. DOI: https://doi.org/10.1145/3170427.3188609

@inproceedings{Nassani:2018:FSS:3170427.3188609,
  author = {Nassani, Alaeddin and Bai, Huidong and Lee, Gun and Billinghurst, Mark and Langlotz, Tobias and Lindeman, Robert W.},
  title = {Filtering Shared Social Data in AR},
  booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},
  series = {CHI EA '18},
  year = {2018},
  isbn = {978-1-4503-5621-3},
  location = {Montreal QC, Canada},
  pages = {LBW100:1--LBW100:6},
  articleno = {LBW100},
  numpages = {6},
  url = {http://doi.acm.org/10.1145/3170427.3188609},
  doi = {10.1145/3170427.3188609},
  acmid = {3188609},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {360 panoramas, augmented reality, live video stream, sharing social experiences, virtual avatars},
}

Video: https://youtu.be/W-CDpBqe1yI

We describe a method and a prototype implementation for filtering shared social data (e.g., 360 video) in a wearable Augmented Reality (e.g., HoloLens) application. The data filtering is based on user-viewer relationships. For example, when sharing a 360 video, if the user has an intimate relationship with the viewer, then the user's environment is visible at full fidelity (i.e., the 360 video). But if the two are strangers, then only a snapshot image is shared. By varying the fidelity of the shared content, the viewer is able to focus more on the data shared by their close relations and differentiate it from other content. The approach also gives the sharing user more control over the fidelity of the content shared with their contacts, for privacy.
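The relationship-based filtering rule in this abstract can be sketched as a per-sharer policy lookup. Only the two cases stated in the abstract are encoded (intimate viewers receive the live 360 video, strangers a snapshot image); the `ACQUAINTANCE` tier, all names, and the fallback to the lowest fidelity for unlisted relationships are assumptions for illustration, not the prototype's implementation.

```python
from enum import Enum
from typing import Mapping


class Relationship(Enum):
    INTIMATE = "intimate"
    STRANGER = "stranger"
    ACQUAINTANCE = "acquaintance"  # hypothetical middle tier, not in the source


class Fidelity(Enum):
    SNAPSHOT = 1        # a single still image of the sharer's surroundings
    LIVE_360_VIDEO = 2  # the full live 360 panorama video


# Default policy: only the two cases described in the abstract.
DEFAULT_POLICY: Mapping[Relationship, Fidelity] = {
    Relationship.INTIMATE: Fidelity.LIVE_360_VIDEO,
    Relationship.STRANGER: Fidelity.SNAPSHOT,
}


def fidelity_for(viewer: Relationship,
                 policy: Mapping[Relationship, Fidelity] = DEFAULT_POLICY) -> Fidelity:
    """Return the fidelity of shared content a viewer should receive.

    Relationships missing from the policy fall back to the lowest fidelity,
    a privacy-preserving default assumed here for illustration.
    """
    return policy.get(viewer, Fidelity.SNAPSHOT)
```

Because the policy is a plain mapping, a sharing user could override it (for example, granting acquaintances the live video) without changing the lookup logic.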

The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration

Seungwon Kim, Mark Billinghurst, Gun LeeKim, S., Billinghurst, M., & Lee, G. (2018). The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration. Computer Supported Cooperative Work (CSCW), 1-39.

@Article{Kim2018,

author="Kim, Seungwon

and Billinghurst, Mark

and Lee, Gun",

title="The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration",

journal="Computer Supported Cooperative Work (CSCW)",

year="2018",

month="Jun",

day="02",

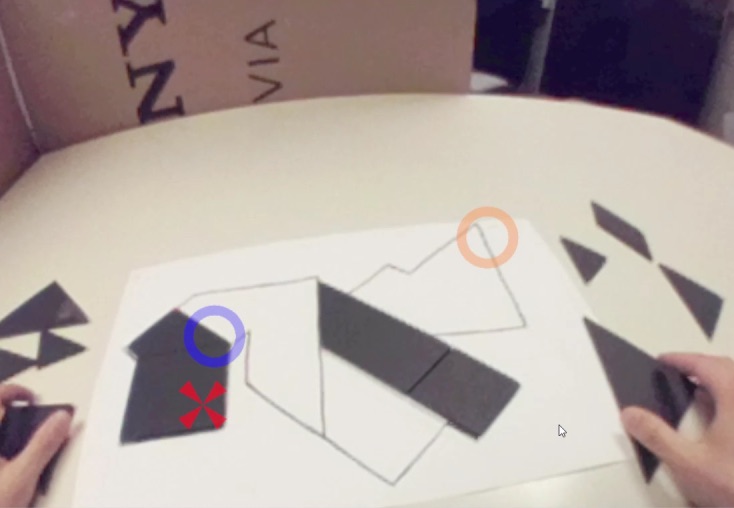

abstract="This paper investigates how different collaboration styles and view independence affect remote collaboration. Our remote collaboration system shares a live video of a local user's real-world task space with a remote user. The remote user can have an independent view or a dependent view of a shared real-world object manipulation task and can draw virtual annotations onto the real-world objects as a visual communication cue. With the system, we investigated two different collaboration styles; (1) remote expert collaboration where a remote user has the solution and gives instructions to a local partner and (2) mutual collaboration where neither user has a solution but both remote and local users share ideas and discuss ways to solve the real-world task. In the user study, the remote expert collaboration showed a number of benefits over the mutual collaboration. With the remote expert collaboration, participants had better communication from the remote user to the local user, more aligned focus between participants, and the remote participants' feeling of enjoyment and togetherness. However, the benefits were not always apparent at the local participants' end, especially with measures of enjoyment and togetherness. The independent view also had several benefits over the dependent view, such as allowing remote participants to freely navigate around the workspace while having a wider fully zoomed-out view. The benefits of the independent view were more prominent in the mutual collaboration than in the remote expert collaboration, especially in enabling the remote participants to see the workspace.",

issn="1573-7551",

doi="10.1007/s10606-018-9324-2",

url="https://doi.org/10.1007/s10606-018-9324-2"

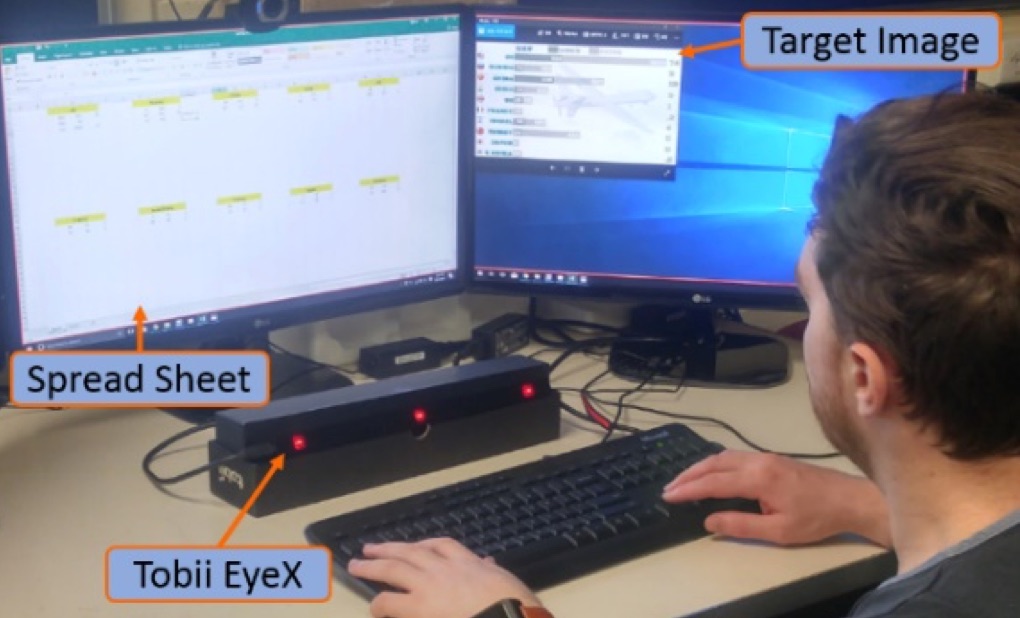

}This paper investigates how different collaboration styles and view independence affect remote collaboration. Our remote collaboration system shares a live video of a local user’s real-world task space with a remote user. The remote user can have an independent view or a dependent view of a shared real-world object manipulation task and can draw virtual annotations onto the real-world objects as a visual communication cue. With the system, we investigated two different collaboration styles; (1) remote expert collaboration where a remote user has the solution and gives instructions to a local partner and (2) mutual collaboration where neither user has a solution but both remote and local users share ideas and discuss ways to solve the real-world task. In the user study, the remote expert collaboration showed a number of benefits over the mutual collaboration. With the remote expert collaboration, participants had better communication from the remote user to the local user, more aligned focus between participants, and the remote participants’ feeling of enjoyment and togetherness. However, the benefits were not always apparent at the local participants’ end, especially with measures of enjoyment and togetherness. The independent view also had several benefits over the dependent view, such as allowing remote participants to freely navigate around the workspace while having a wider fully zoomed-out view. The benefits of the independent view were more prominent in the mutual collaboration than in the remote expert collaboration, especially in enabling the remote participants to see the workspace. -

User Interface Agents for Guiding Interaction with Augmented Virtual Mirrors

Gun Lee, Omprakash Rudhru, Hye Sun Park, Ho Won Kim, and Mark Billinghurst

Gun Lee, Omprakash Rudhru, Hye Sun Park, Ho Won Kim, and Mark Billinghurst. 2017. User Interface Agents for Guiding Interaction with Augmented Virtual Mirrors. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 109-116. http://dx.doi.org/10.2312/egve.20171347

@inproceedings {egve.20171347,
  booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},
  editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},
  title = {{User Interface Agents for Guiding Interaction with Augmented Virtual Mirrors}},
  author = {Lee, Gun A. and Rudhru, Omprakash and Park, Hye Sun and Kim, Ho Won and Billinghurst, Mark},
  year = {2017},
  publisher = {The Eurographics Association},
  ISSN = {1727-530X},
  ISBN = {978-3-03868-038-3},
  DOI = {10.2312/egve.20171347}
}

This research investigates using user interface (UI) agents to guide gesture-based interaction with Augmented Virtual Mirrors. Whereas prior work on gesture interaction used graphical symbols to guide user interaction, we propose using UI agents. We explore two approaches for using UI agents: 1) as a delayed cursor and 2) as an interactive button. We conducted two user studies to evaluate the proposed designs. The results show that UI agents guide user interactions effectively, in a similar way to a traditional graphical user interface that provides visual cues, while also being useful for engaging users emotionally.

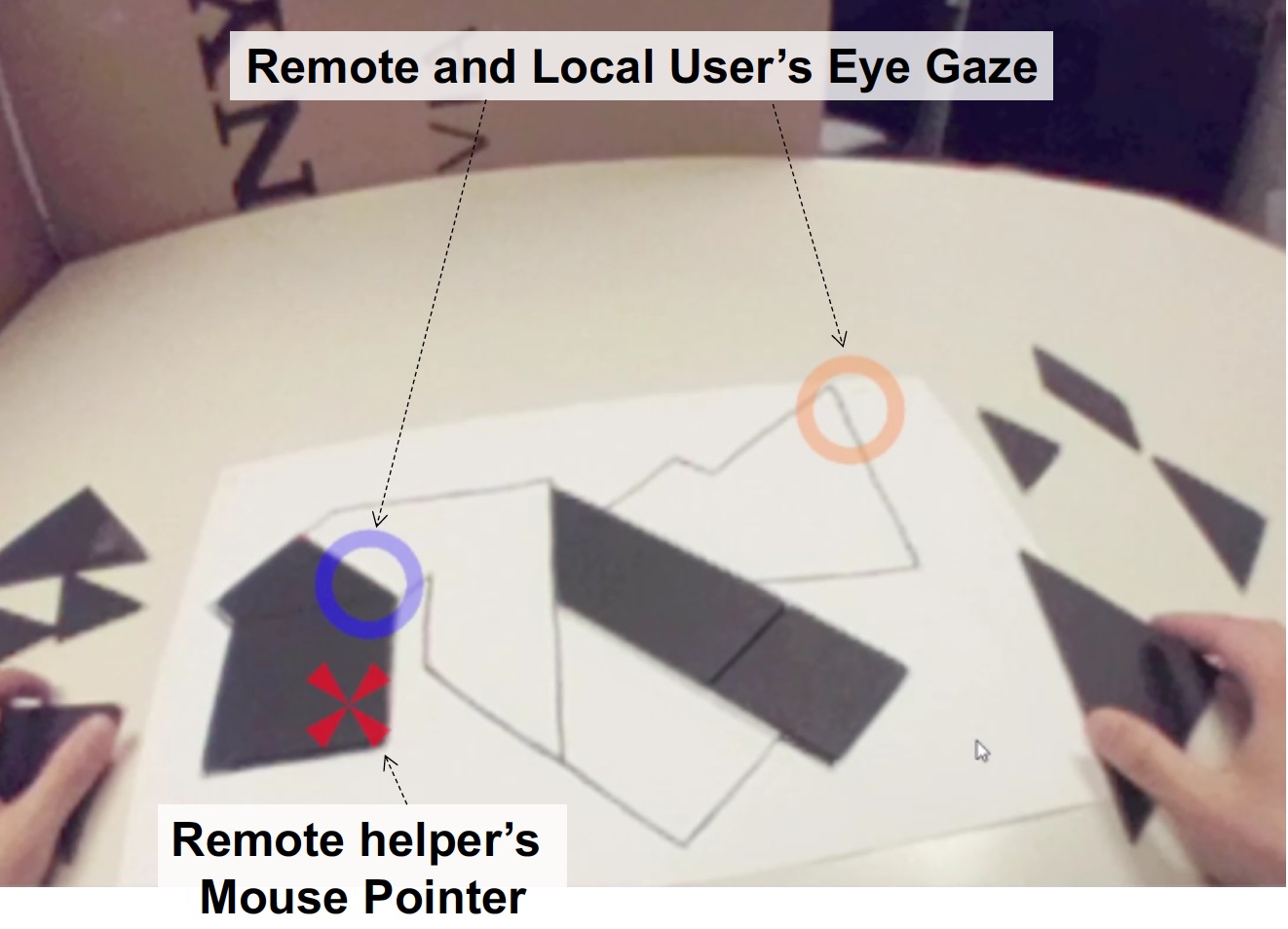

Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst. 2017. Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 197-204. http://dx.doi.org/10.2312/egve.20171359

@inproceedings {egve.20171359,
  booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},
  editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},
  title = {{Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze}},
  author = {Lee, Gun A. and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},
  year = {2017},
  publisher = {The Eurographics Association},
  ISSN = {1727-530X},
  ISBN = {978-3-03868-038-3},
  DOI = {10.2312/egve.20171359}
}

To improve remote collaboration in video conferencing systems, researchers have been investigating augmenting visual cues onto a shared live video stream. In such systems, a person wearing a head-mounted display (HMD) and camera can share her view of the surrounding real world with a remote collaborator to receive assistance on a real-world task. While this concept of augmented video conferencing (AVC) has been actively investigated, there has been little research on how sharing gaze cues might affect the collaboration in video conferencing. This paper investigates how sharing gaze in both directions between a local worker and a remote helper in an AVC system affects collaboration and communication. Using a prototype AVC system that shares the eye gaze of both users, we conducted a user study that compares four conditions with different combinations of eye gaze sharing between the two users. The results showed that sharing each other’s gaze significantly improved collaboration and communication.

Exploring Natural Eye-Gaze-Based Interaction for Immersive Virtual Reality

Thammathip Piumsomboon, Gun Lee, Robert W. Lindeman and Mark Billinghurst

Thammathip Piumsomboon, Gun Lee, Robert W. Lindeman and Mark Billinghurst. 2017. Exploring Natural Eye-Gaze-Based Interaction for Immersive Virtual Reality. In 2017 IEEE Symposium on 3D User Interfaces (3DUI), pp. 36-39. https://doi.org/10.1109/3DUI.2017.7893315

@INPROCEEDINGS{7893315,
  author={T. Piumsomboon and G. Lee and R. W. Lindeman and M. Billinghurst},
  booktitle={2017 IEEE Symposium on 3D User Interfaces (3DUI)},
  title={Exploring natural eye-gaze-based interaction for immersive virtual reality},
  year={2017},
  volume={},
  number={},
  pages={36-39},
  keywords={gaze tracking;gesture recognition;helmet mounted displays;virtual reality;Duo-Reticles;Nod and Roll;Radial Pursuit;cluttered-object selection;eye tracking technology;eye-gaze selection;head-gesture-based interaction;head-mounted display;immersive virtual reality;inertial reticles;natural eye movements;natural eye-gaze-based interaction;smooth pursuit;vestibulo-ocular reflex;Electronic mail;Erbium;Gaze tracking;Painting;Portable computers;Resists;Two dimensional displays;H.5.2 [Information Interfaces and Presentation]: User Interfaces—Interaction styles},
  doi={10.1109/3DUI.2017.7893315},
  ISSN={},
  month={March},
}

Eye tracking technology in a head-mounted display has undergone rapid advancement in recent years, making it possible for researchers to explore new interaction techniques using natural eye movements. This paper explores three novel eye-gaze-based interaction techniques: (1) Duo-Reticles, eye-gaze selection based on eye-gaze and inertial reticles, (2) Radial Pursuit, cluttered-object selection that takes advantage of smooth pursuit, and (3) Nod and Roll, head-gesture-based interaction based on the vestibulo-ocular reflex. In an initial user study, we compare each technique against a baseline condition in a scenario that demonstrates its strengths and weaknesses.

Do You See What I See? The Effect of Gaze Tracking on Task Space Remote Collaboration

Kunal Gupta, Gun A. Lee and Mark Billinghurst

Kunal Gupta, Gun A. Lee and Mark Billinghurst. 2016. Do You See What I See? The Effect of Gaze Tracking on Task Space Remote Collaboration. IEEE Transactions on Visualization and Computer Graphics, Vol. 22, No. 11, pp. 2413-2422. https://doi.org/10.1109/TVCG.2016.2593778

@ARTICLE{7523400,
  author={K. Gupta and G. A. Lee and M. Billinghurst},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  title={Do You See What I See? The Effect of Gaze Tracking on Task Space Remote Collaboration},
  year={2016},
  volume={22},
  number={11},
  pages={2413-2422},
  keywords={cameras;gaze tracking;helmet mounted displays;eye-tracking camera;gaze tracking;head-mounted camera;head-mounted display;remote collaboration;task space remote collaboration;virtual gaze information;virtual pointer;wearable interface;Cameras;Collaboration;Computers;Gaze tracking;Head;Prototypes;Teleconferencing;Computer conferencing;Computer-supported collaborative work;teleconferencing;videoconferencing},
  doi={10.1109/TVCG.2016.2593778},
  ISSN={1077-2626},
  month={Nov},
}

We present results from research exploring the effect of sharing virtual gaze and pointing cues in a wearable interface for remote collaboration. A local worker wears a head-mounted camera, an eye-tracking camera, and a head-mounted display, and shares video and virtual gaze information with a remote helper. The remote helper can provide feedback using a virtual pointer on the live video view. The prototype system was evaluated with a formal user study comparing four conditions: (1) NONE (no cue), (2) POINTER, (3) EYE-TRACKER, and (4) BOTH (both pointer and eye-tracker cues). We observed that task completion performance was best in the BOTH condition, with a significant difference from the POINTER and EYE-TRACKER conditions individually. The use of eye-tracking and a pointer also significantly improved the co-presence felt between the users. We discuss the implications of this research and the limitations of the developed system that could be addressed in further work.

Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration

Theophilus Teo, Gun A. Lee, Mark Billinghurst, and Matt Adcock. 2018. Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (OzCHI '18). ACM, New York, NY, USA, 406-410. DOI: https://doi.org/10.1145/3292147.3292200

@inproceedings{Teo:2018:HGV:3292147.3292200,

author = {Teo, Theophilus and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

title = {Hand Gestures and Visual Annotation in Live 360 Panorama-based Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 30th Australian Conference on Computer-Human Interaction},

series = {OzCHI '18},

year = {2018},

isbn = {978-1-4503-6188-0},

location = {Melbourne, Australia},

pages = {406--410},

numpages = {5},

url = {http://doi.acm.org/10.1145/3292147.3292200},

doi = {10.1145/3292147.3292200},

acmid = {3292200},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {gesture communication, mixed reality, remote collaboration},

}

In this paper, we investigate hand gestures and visual annotation cues overlaid in a live 360 panorama-based Mixed Reality remote collaboration system. The prototype system captures live 360 panorama video of the surroundings of a local user and shares it with another person in a remote location. The two users, wearing Augmented Reality or Virtual Reality head-mounted displays, can collaborate using augmented visual communication cues such as virtual hand gestures, ray pointing, and drawing annotations. Our preliminary user evaluation comparing these cues found that using visual annotation cues (ray pointing and drawing annotation) helps local users perform collaborative tasks faster and more easily, with fewer errors and better understanding, compared to using only virtual hand gestures.

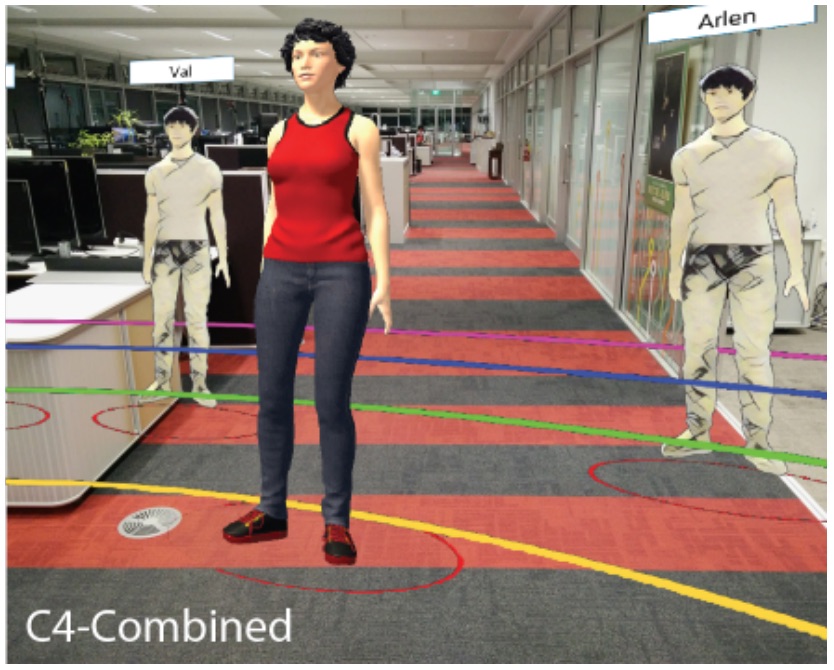

The effects of sharing awareness cues in collaborative mixed reality

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M. (2019). The effects of sharing awareness cues in collaborative mixed reality. Frontiers in Robotics and AI, 6(5).

@article{piumsomboon2019effects,

title={The effects of sharing awareness cues in collaborative mixed reality},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Gun and Billinghurst, Mark},

year={2019}

}

Augmented and Virtual Reality provide unique capabilities for Mixed Reality collaboration. This paper explores how different combinations of virtual awareness cues can provide users with valuable information about their collaborator's attention and actions. In a user study (n = 32, 16 pairs), we compared different combinations of three cues: Field-of-View (FoV) frustum, Eye-gaze ray, and Head-gaze ray, against a baseline condition showing only virtual representations of each collaborator's head and hands. In a collaborative object finding and placing task, the results showed that awareness cues significantly improved user performance, usability, and subjective preferences, with the combination of the FoV frustum and the Head-gaze ray being best. This work establishes the feasibility of room-scale MR collaboration and the utility of providing virtual awareness cues.

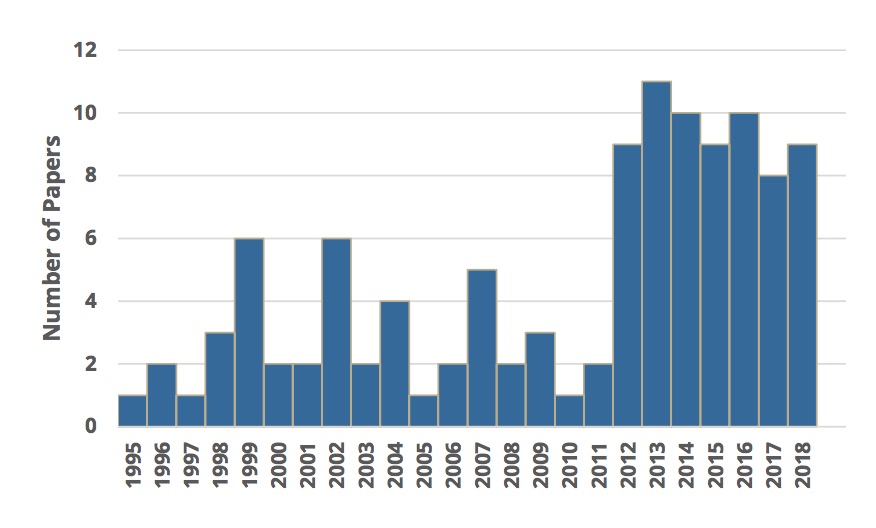

Revisiting collaboration through mixed reality: The evolution of groupware

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., & Billinghurst, M. (2019). Revisiting collaboration through mixed reality: The evolution of groupware. International Journal of Human-Computer Studies.

@article{ens2019revisiting,

title={Revisiting collaboration through mixed reality: The evolution of groupware},

author={Ens, Barrett and Lanir, Joel and Tang, Anthony and Bateman, Scott and Lee, Gun and Piumsomboon, Thammathip and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

year={2019},

publisher={Elsevier}

}

Collaborative Mixed Reality (MR) systems are at a critical point in time as they are soon to become more commonplace. However, MR technology has only recently matured to the point where researchers can focus deeply on the nuances of supporting collaboration, rather than needing to focus on creating the enabling technology. In parallel, but largely independently, the field of Computer Supported Cooperative Work (CSCW) has focused on the fundamental concerns that underlie human communication and collaboration over the past 30-plus years. Since MR research is now on the brink of moving into the real world, we reflect on three decades of collaborative MR research and try to reconcile it with existing theory from CSCW, to help position MR researchers to pursue fruitful directions for their work. To do this, we review the history of collaborative MR systems, investigating how the common taxonomies and frameworks in CSCW and MR research can be applied to existing work on collaborative MR systems, exploring where they have fallen behind, and looking for new ways to describe current trends. By identifying emergent trends, we suggest future directions for MR, and also find where CSCW researchers can explore new theory that more fully represents the future of working, playing and being with others.

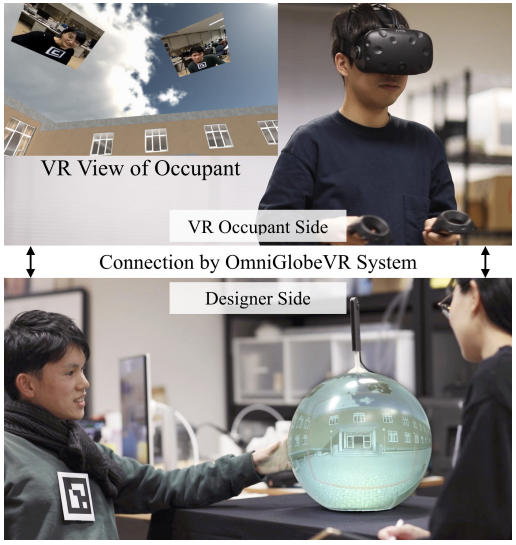

On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction

Piumsomboon, T., Lee, G. A., Irlitti, A., Ens, B., Thomas, B. H., & Billinghurst, M. (2019, April). On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 228). ACM.

@inproceedings{piumsomboon2019shoulder,

title={On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction},

author={Piumsomboon, Thammathip and Lee, Gun A and Irlitti, Andrew and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={228},

year={2019},

organization={ACM}

}

We propose a multi-scale Mixed Reality (MR) collaboration between the Giant, a local Augmented Reality user, and the Miniature, a remote Virtual Reality user, in Giant-Miniature Collaboration (GMC). The Miniature is immersed in a 360-video shared by the Giant, who can physically manipulate the Miniature through a tangible interface: a 360-camera combined with a 6-DOF tracker. We implemented a prototype system as a proof of concept and conducted a user study (n=24) comprising four parts comparing: A) two types of virtual representations, B) three levels of Miniature control, C) three levels of 360-video view dependencies, and D) four 360-camera placement positions on the Giant. The results show that users prefer a shoulder-mounted camera view, while a view frustum with a complementary avatar is a good visualization for the Miniature's virtual representation. From the results, we give design recommendations and demonstrate an example Giant-Miniature Interaction.

Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration

Kim, S., Lee, G., Huang, W., Kim, H., Woo, W., & Billinghurst, M. (2019, April). Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 173). ACM.

@inproceedings{kim2019evaluating,

title={Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration},

author={Kim, Seungwon and Lee, Gun and Huang, Weidong and Kim, Hayun and Woo, Woontack and Billinghurst, Mark},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={173},

year={2019},

organization={ACM}

}

Many researchers have studied various visual communication cues (e.g. pointer, sketching, and hand gesture) in Mixed Reality remote collaboration systems for real-world tasks. However, the effect of combining them has not been well explored. We studied the effect of these cues in four combinations: hand only, hand + pointer, hand + sketch, and hand + pointer + sketch, with three problem tasks: Lego, Tangram, and Origami. The study results showed that participants completed the task significantly faster and felt a significantly higher level of usability when the sketch cue was added to the hand gesture cue, but not when the pointer cue was added. Participants also preferred the combinations including hand and sketch cues over the other combinations. However, using additional cues (pointer or sketch) increased the perceived mental effort and did not improve the feeling of co-presence. We discuss the implications of these results and future research directions.

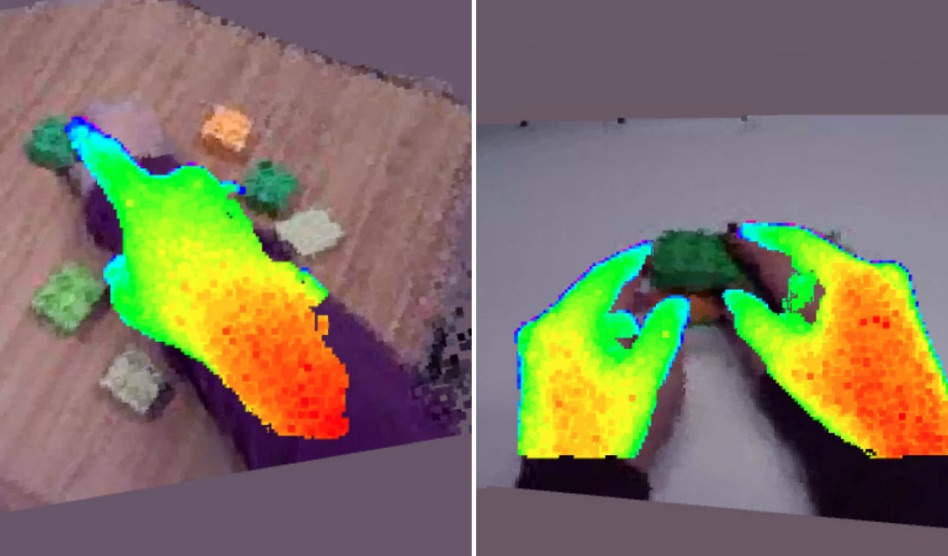

Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction

Teo, T., Lawrence, L., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, April). Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 201). ACM.

@inproceedings{teo2019mixed,

title={Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction},

author={Teo, Theophilus and Lawrence, Louise and Lee, Gun A and Billinghurst, Mark and Adcock, Matt},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={201},

year={2019},

organization={ACM}

}

Remote collaboration using Virtual Reality (VR) and Augmented Reality (AR) has recently become a popular way for people from different places to work together. Local workers can collaborate with remote helpers by sharing 360-degree live video or 3D virtual reconstruction of their surroundings. However, each of these techniques has benefits and drawbacks. In this paper we explore mixing 360 video and 3D reconstruction together for remote collaboration, preserving the benefits of both systems while reducing the drawbacks of each. We developed a hybrid prototype and conducted a user study to compare the benefits and problems of using 360 or 3D alone, to clarify the need for mixing the two, and to evaluate the prototype system. We found that participants performed significantly better on collaborative search tasks in 360 and felt higher social presence, yet 3D also showed potential as a complement. Participant feedback collected after trying our hybrid system provided directions for improvement.

Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System

Kim, S., Billinghurst, M., Lee, G., Norman, M., Huang, W., & He, J. (2019, July). Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System. In 2019 23rd International Conference in Information Visualization–Part II (pp. 86-91). IEEE.

@inproceedings{kim2019sharing,

title={Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System},

author={Kim, Seungwon and Billinghurst, Mark and Lee, Gun and Norman, Mitchell and Huang, Weidong and He, Jian},

booktitle={2019 23rd International Conference in Information Visualization--Part II},

pages={86--91},

year={2019},

organization={IEEE}

}

In this paper, we explore the effect of showing a remote partner close to the user's gaze point in a teleconferencing system. We implemented a gaze-following function in a teleconferencing system and investigated whether this improves the user's feeling of emotional interdependence. We developed a prototype system that shows a remote partner close to the user's current gaze point and conducted a user study comparing it to a condition displaying the partner fixed in the corner of the screen. Our results showed that showing a partner close to their gaze point helped users feel a higher level of emotional interdependence. In addition, we compared the effect of our method between small and big displays, but there was no significant difference in the users' feeling of emotional interdependence, even though the big display was preferred.

Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration

Teo, T., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, March). Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 1187-1188). IEEE.

@inproceedings{teo2019supporting,

title={Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration},

author={Teo, Theophilus and Lee, Gun A and Billinghurst, Mark and Adcock, Matt},

booktitle={2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={1187--1188},

year={2019},

organization={IEEE}

}

We propose enhancing live 360 panorama-based Mixed Reality (MR) remote collaboration by supporting visual annotation cues. Prior work on live 360 panorama-based collaboration used MR visualization to overlay visual cues, such as view frames and virtual hands, yet these cues were not registered onto the shared physical workspace and hence had limited accuracy for pointing at or marking objects. Our prototype system uses the spatial mapping and tracking features of an Augmented Reality head-mounted display to show visual annotation cues accurately registered onto the physical environment. We describe the design and implementation details of our prototype system, and discuss how such a feature could help improve MR remote collaboration.

Binaural Spatialization over a Bone Conduction Headset: The Perception of Elevation

Barde, A., Lindeman, R. W., Lee, G., & Billinghurst, M. (2019, August). Binaural Spatialization over a Bone Conduction Headset: The Perception of Elevation. In Audio Engineering Society Conference: 2019 AES INTERNATIONAL CONFERENCE ON HEADPHONE TECHNOLOGY. Audio Engineering Society.

@inproceedings{barde2019binaural,

title={Binaural Spatialization over a Bone Conduction Headset: The Perception of Elevation},

author={Barde, Amit and Lindeman, Robert W and Lee, Gun and Billinghurst, Mark},

booktitle={Audio Engineering Society Conference: 2019 AES INTERNATIONAL CONFERENCE ON HEADPHONE TECHNOLOGY},

year={2019},

organization={Audio Engineering Society}

}

Binaural spatialization over a bone conduction headset in the vertical plane was investigated using inexpensive and commercially available hardware and software components. The aim of the study was to assess the acuity of binaurally spatialized presentations in the vertical plane. The level of externalization achievable was also explored. Results demonstrate good correlation with established perceptual traits for headphone-based auditory localization using non-individualized HRTFs, though localization accuracy appears to be significantly worse. A distinct pattern of compressed localization judgments is observed, with participants tending to localize the presented stimulus within an approximately 20° range on either side of the inter-aural plane. Localization error was approximately 21° in the vertical plane. Participants reported a good level of externalization. We have been able to demonstrate that an acceptable level of spatial resolution and externalization is achievable using an inexpensive bone conduction headset and software components.

Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface

Piumsomboon, T., Lee, G. A., Ens, B., Thomas, B. H., & Billinghurst, M. (2018). Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface. IEEE Transactions on Visualization and Computer Graphics, 24(11), 2974-2982.

@article{piumsomboon2018superman,

title={Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface},

author={Piumsomboon, Thammathip and Lee, Gun A and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

journal={IEEE Transactions on Visualization and Computer Graphics},

volume={24},

number={11},

pages={2974--2982},

year={2018},

publisher={IEEE}

}

Advancements in Mixed Reality (MR), Unmanned Aerial Vehicles, and multi-scale collaborative virtual environments have led to new interface opportunities for remote collaboration. This paper explores a novel concept of flying telepresence for multi-scale mixed reality remote collaboration. This work could enable remote collaboration at a larger scale, such as building construction. We conducted a user study with three experiments. The first experiment compared two interfaces, static and dynamic IPD, on simulator sickness and body size perception. The second experiment tested the user's perception of virtual object size under three levels of IPD and movement gain manipulation, with a fixed eye height, in a virtual environment with reduced or rich visual cues. Our last experiment investigated participants’ body size perception for two levels of manipulation of IPDs and heights, using stereo video footage to simulate a flying telepresence experience. The studies found that manipulating IPDs and eye height influenced the user’s size perception. We present our findings and share recommendations for designing a multi-scale MR flying telepresence interface.

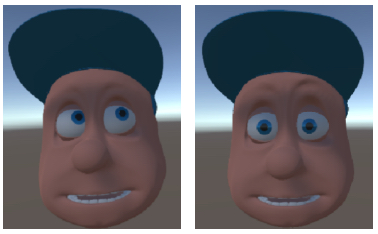

Emotion Sharing and Augmentation in Cooperative Virtual Reality Games

Hart, J. D., Piumsomboon, T., Lawrence, L., Lee, G. A., Smith, R. T., & Billinghurst, M. (2018, October). Emotion Sharing and Augmentation in Cooperative Virtual Reality Games. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts (pp. 453-460). ACM.

@inproceedings{hart2018emotion,

title={Emotion Sharing and Augmentation in Cooperative Virtual Reality Games},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lawrence, Louise and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts},

pages={453--460},

year={2018},

organization={ACM}

}

We present preliminary findings from sharing and augmenting facial expressions in cooperative social Virtual Reality (VR) games. We implemented a prototype system for capturing and sharing facial expressions between VR players through their avatars. We describe our current prototype system and how it could be assimilated into a system for enhancing social VR experiences. Two social VR games were created for a preliminary user study. We discuss our findings from the user study, potential games for this system, and future directions for this research.

Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration.

Kim, S., Billinghurst, M., Lee, C., & Lee, G. (2018). Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration. KSII Transactions on Internet & Information Systems, 12(12).

@article{kim2018using,

title={Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration.},

author={Kim, Seungwon and Billinghurst, Mark and Lee, Chilwoo and Lee, Gun},

journal={KSII Transactions on Internet \& Information Systems},

volume={12},

number={12},

year={2018}

}

This paper describes two user studies of remote collaboration between two users with a video conferencing system in which a remote user can draw annotations on the live video of the local user’s workspace. In both studies, the local user had control of the view when sharing the first-person view, but our interfaces gave the remote user instant control of the shared view. The first study investigates methods for assisting annotation drawing. The auto-freeze method, a novel solution for drawing annotations, is compared to a prior solution (the manual-freeze method) and a baseline (non-freeze) condition. Results show that both local and remote users preferred the auto-freeze method, which is easy to use and allows users to quickly draw annotations. The manual-freeze method supported precise drawing, but was less preferred because of the need for manual input. The second study explores visual notifications for improving local user awareness. We propose two designs, the red-box and both-freeze notifications, and compare these to a baseline no-notification condition. Users preferred the less obtrusive red-box notification, which improved awareness of when annotations were made by remote users and had a significantly lower level of interruption compared to the both-freeze condition.

Sharing and Augmenting Emotion in Collaborative Mixed Reality

Hart, J. D., Piumsomboon, T., Lee, G., & Billinghurst, M. (2018, October). Sharing and Augmenting Emotion in Collaborative Mixed Reality. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 212-213). IEEE.

@inproceedings{hart2018sharing,

title={Sharing and Augmenting Emotion in Collaborative Mixed Reality},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lee, Gun and Billinghurst, Mark},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={212--213},

year={2018},

organization={IEEE}

}

We present a concept of emotion sharing and augmentation for collaborative mixed reality. To depict the ideal use case of such a system, we give two example scenarios. We describe our prototype system for capturing and augmenting emotion through facial expression, eye gaze, voice, and physiological data, and sharing it through the users' virtual representations, and discuss future research directions with potential applications.

Filtering 3D Shared Surrounding Environments by Social Proximity in AR

Nassani, A., Bai, H., Lee, G., Langlotz, T., Billinghurst, M., & Lindeman, R. W. (2018, October). Filtering 3D Shared Surrounding Environments by Social Proximity in AR. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 123-124). IEEE.

@inproceedings{nassani2018filtering,

title={Filtering 3D Shared Surrounding Environments by Social Proximity in AR},

author={Nassani, Alaeddin and Bai, Huidong and Lee, Gun and Langlotz, Tobias and Billinghurst, Mark and Lindeman, Robert W},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={123--124},

year={2018},

organization={IEEE}

}

In this poster, we explore the social sharing of surrounding environments on wearable Augmented Reality (AR) devices. In particular, we propose filtering the level of detail of the shared surrounding environment based on the social proximity between the viewer and the sharer. We tested the effect of this filter (varying levels of detail) on the sense of privacy from both the viewer's and the sharer's perspectives in a pilot study using HoloLens. We report on semi-structured questionnaire results and suggest future directions in the social sharing of surrounding environments.

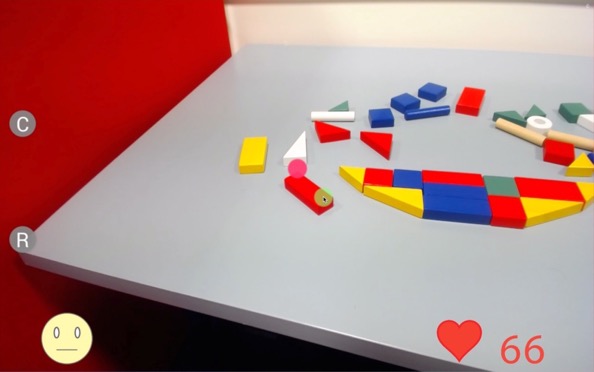

Enhancing player engagement through game balancing in digitally augmented physical games

Altimira, D., Clarke, J., Lee, G., Billinghurst, M., & Bartneck, C. (2017). Enhancing player engagement through game balancing in digitally augmented physical games. International Journal of Human-Computer Studies, 103, 35-47.

@article{altimira2017enhancing,

title={Enhancing player engagement through game balancing in digitally augmented physical games},

author={Altimira, David and Clarke, Jenny and Lee, Gun and Billinghurst, Mark and Bartneck, Christoph and others},

journal={International Journal of Human-Computer Studies},

volume={103},

pages={35--47},

year={2017},

publisher={Elsevier}

}

Game balancing can be used to compensate for differences in players' skills, in particular in games where players compete against each other. It can help provide the right level of challenge and hence enhance engagement. However, there is a lack of understanding of game balancing design and how different game adjustments affect player engagement. This understanding is important for the design of balanced physical games. In this paper we report on how altering the game equipment in a digitally augmented table tennis game, such as the table size and bat-head size, statically and dynamically, can affect game balancing and player engagement. We found these adjustments enhanced player engagement compared to the no-adjustment condition. Understanding how the adjustments affected player engagement helped us derive a set of balancing strategies to facilitate engaging game experiences. We hope that this understanding can contribute to improving physical activity experiences and encourage people to engage in physical activity.

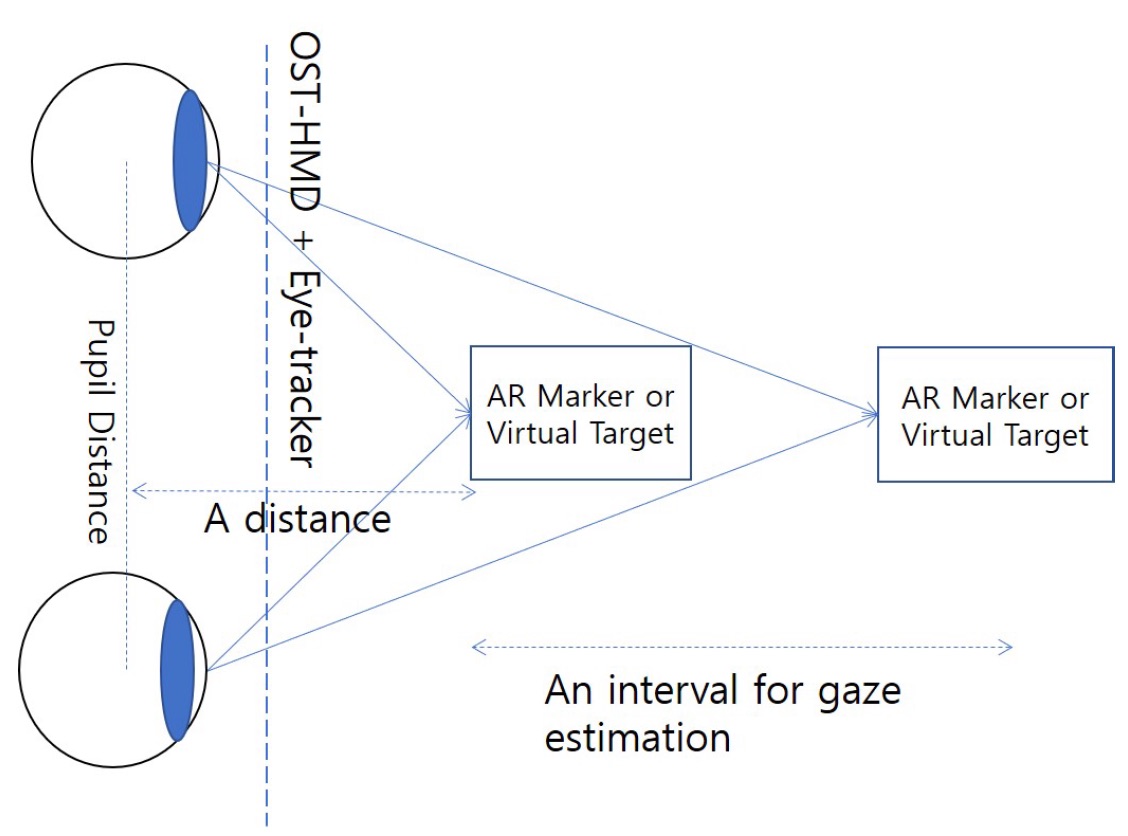

Estimating Gaze Depth Using Multi-Layer Perceptron

Lee, Y., Shin, C., Plopski, A., Itoh, Y., Piumsomboon, T., Dey, A., ... & Billinghurst, M. (2017, June). Estimating Gaze Depth Using Multi-Layer Perceptron. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 26-29). IEEE.

@inproceedings{lee2017estimating,

title={Estimating Gaze Depth Using Multi-Layer Perceptron},

author={Lee, Youngho and Shin, Choonsung and Plopski, Alexander and Itoh, Yuta and Piumsomboon, Thammathip and Dey, Arindam and Lee, Gun and Kim, Seungwon and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={26--29},

year={2017},

organization={IEEE}

}

In this paper we describe a new method for determining gaze depth in a head-mounted eye tracker. Eye trackers are being incorporated into head-mounted displays (HMDs), and eye gaze is being used for interaction in Virtual and Augmented Reality. For some interaction methods, it is important to accurately measure the x- and y-direction of the eye gaze and especially the focal depth information. Generally, eye tracking technology has high accuracy in the x- and y-directions, but not in depth. We used a binocular gaze tracker with two eye cameras, and the gaze vector was input to an MLP neural network for training and estimation. For the performance evaluation, data was obtained from 13 people gazing at fixed points at distances from 1 m to 5 m. The gaze classification into fixed distances produced an average classification error of nearly 10%, and an average error distance of 0.42 m. This is sufficient for some Augmented Reality applications, but more research is needed to provide an estimate of a user’s gaze moving in continuous space.

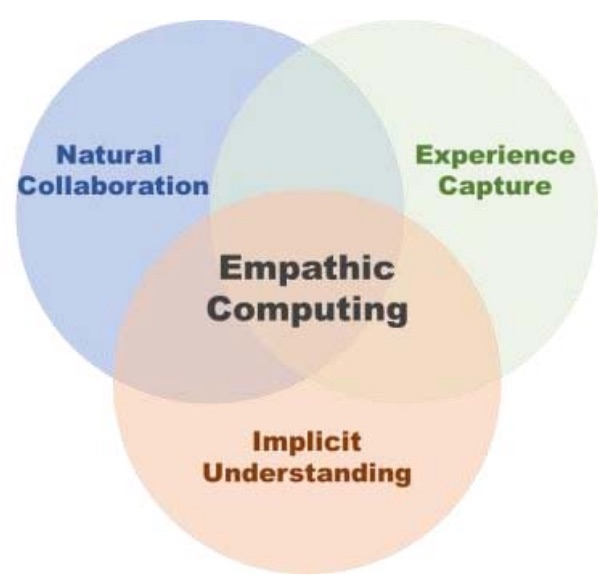

Empathic mixed reality: Sharing what you feel and interacting with what you see

Piumsomboon, T., Lee, Y., Lee, G. A., Dey, A., & Billinghurst, M. (2017, June). Empathic mixed reality: Sharing what you feel and interacting with what you see. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 38-41). IEEE.

@inproceedings{piumsomboon2017empathic,

title={Empathic mixed reality: Sharing what you feel and interacting with what you see},

author={Piumsomboon, Thammathip and Lee, Youngho and Lee, Gun A and Dey, Arindam and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={38--41},

year={2017},

organization={IEEE}

}

Empathic Computing is a research field that aims to use technology to create deeper shared understanding or empathy between people. At the same time, Mixed Reality (MR) technology provides an immersive experience that can make an ideal interface for collaboration. In this paper, we present some of our research into how MR technology can be applied to creating Empathic Computing experiences. This includes exploring how to share gaze in a remote collaboration between Augmented Reality (AR) and Virtual Reality (VR) environments, using physiological signals to enhance collaborative VR, and supporting interaction through eye-gaze in VR. Early outcomes indicate that as we design collaborative interfaces to enhance empathy between people, this could also benefit the personal experience of the individual interacting with the interface.

The Social AR Continuum: Concept and User Study

Nassani, A., Lee, G., Billinghurst, M., Langlotz, T., Hoermann, S., & Lindeman, R. W. (2017, October). [POSTER] The Social AR Continuum: Concept and User Study. In 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct) (pp. 7-8). IEEE.

@inproceedings{nassani2017poster,

title={[POSTER] The Social AR Continuum: Concept and User Study},

author={Nassani, Alaeddin and Lee, Gun and Billinghurst, Mark and Langlotz, Tobias and Hoermann, Simon and Lindeman, Robert W},

booktitle={2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct)},

pages={7--8},

year={2017},

organization={IEEE}

}

In this poster, we describe The Social AR Continuum, a space that encompasses different dimensions of Augmented Reality (AR) for sharing social experiences. We explore various dimensions, discuss options for each dimension, and brainstorm possible scenarios where these options might be useful. We describe a prototype interface using the contact placement dimension, and report on feedback from potential users which supports its usefulness for visualising social contacts. Based on this concept work, we suggest user studies in the social AR space, and give insights into future directions.

Mutually Shared Gaze in Augmented Video Conference

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M. (2017, October). Mutually Shared Gaze in Augmented Video Conference. In Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017 (pp. 79-80). Institute of Electrical and Electronics Engineers Inc.

@inproceedings{lee2017mutually,

title={Mutually Shared Gaze in Augmented Video Conference},

author={Lee, Gun and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

booktitle={Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017},

pages={79--80},

year={2017},

organization={Institute of Electrical and Electronics Engineers Inc.}

}

Augmenting video conferencing with additional visual cues has been studied to improve remote collaboration. A common setup is a person wearing a head-mounted display (HMD) and camera, sharing her view of the workspace with a remote collaborator and getting assistance on a real-world task. While this configuration has been extensively studied, there has been little research on how sharing gaze cues might affect the collaboration. This research investigates how sharing gaze in both directions between a local worker and a remote helper affects collaboration and communication. We developed a prototype system that shares the eye gaze of both users, and conducted a user study. Preliminary results showed that sharing gaze significantly improves the awareness of each other's focus, hence improving collaboration.

The effect of user embodiment in AV cinematic experience

Chen, J., Lee, G., Billinghurst, M., Lindeman, R. W., & Bartneck, C. (2017). The effect of user embodiment in AV cinematic experience.

@article{chen2017effect,

title={The effect of user embodiment in AV cinematic experience},

author={Chen, Joshua and Lee, Gun and Billinghurst, Mark and Lindeman, Robert W and Bartneck, Christoph},

year={2017}

}

View: https://ir.canterbury.ac.nz/bitstream/handle/10092/15405/icat-egve2017-JoshuaChen.pdf?sequence=2

Virtual Reality (VR) is becoming a popular medium for viewing immersive cinematic experiences using 360° panoramic movies and head-mounted displays. There has been previous research on user embodiment in real-time rendered VR, but not in relation to cinematic VR based on 360° panoramic video. In this paper we explore the effects of introducing the user’s real body into cinematic VR experiences. We conducted a study evaluating how the type of movie and user embodiment affect the sense of presence and user engagement. We found that when participants were able to see their own body in the VR movie, there was a significant increase in the sense of presence, yet user engagement was not significantly affected. We discuss the implications of the results and how the work can be expanded in the future.

-

A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M. (2017, November). A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos (pp. 1-2). Eurographics Association.

@inproceedings{lee2017gaze,

title={A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs},

author={Lee, Youngho and Piumsomboon, Thammathip and Ens, Barrett and Lee, Gun and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos},

pages={1--2},

year={2017},

organization={Eurographics Association}

}

The rapid development of machine learning algorithms can be leveraged for software solutions in many domains, including depth estimation of human eye gaze. In this paper, we propose an implicit and continuous data acquisition method for 3D gaze depth estimation for an optical see-through head-mounted display (OST-HMD) equipped with an eye tracker. Our method constantly monitors and generates user gaze data for training our machine learning algorithm. The gaze data acquired through the eye tracker include the inter-pupillary distance (IPD) and the gaze distance to the real and virtual targets for each eye.

-

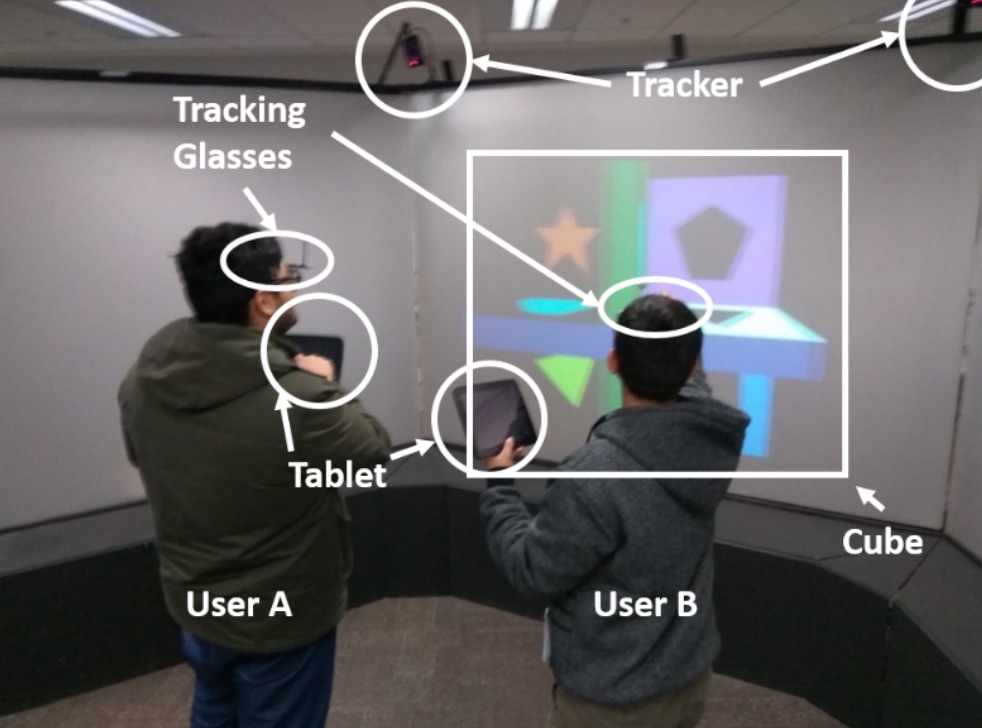

Collaborative View Configurations for Multi-user Interaction with a Wall-size Display

Kim, H., Kim, Y., Lee, G., Billinghurst, M., & Bartneck, C. (2017, November). Collaborative view configurations for multi-user interaction with a wall-size display. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments (pp. 189-196). Eurographics Association.

@inproceedings{kim2017collaborative,

title={Collaborative view configurations for multi-user interaction with a wall-size display},

author={Kim, Hyungon and Kim, Yeongmi and Lee, Gun and Billinghurst, Mark and Bartneck, Christoph},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments},

pages={189--196},

year={2017},

organization={Eurographics Association}

}

This paper explores the effects of different collaborative view configurations on face-to-face collaboration using a wall-size display, and the relationship between view configuration and multi-user interaction. Three view configurations (shared view, split screen, and split screen with navigation information) for multi-user collaboration with a wall-size display were introduced and evaluated in a user study. Based on the experimental results, we discuss several insights for designing virtual environments with a wall-size display. The shared view configuration does not disturb collaboration despite control conflicts and can provide effective collaboration. The split screen configuration allows users to collaborate independently, although it can demand users’ attention. The navigation information can reduce the interaction required for navigational tasks, although overall interaction performance may not improve.

-

Exploring enhancements for remote mixed reality collaboration

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M. (2017, November). Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 16). ACM.

@inproceedings{piumsomboon2017exploring,

title={Exploring enhancements for remote mixed reality collaboration},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Youngho and Lee, Gun and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={16},

year={2017},

organization={ACM}

}

In this paper, we explore techniques for enhancing remote Mixed Reality (MR) collaboration in terms of communication and interaction. We created CoVAR, an MR system for remote collaboration between Augmented Reality (AR) and Augmented Virtuality (AV) users. Awareness cues and an AV-Snap-to-AR interface were proposed for enhancing communication, while collaborative natural interaction and AV-User-Body-Scaling were implemented for enhancing interaction. We conducted an exploratory study examining the awareness cues and the collaborative gaze, and the results showed the benefits of the proposed techniques for enhancing communication and interaction.

-

AR social continuum: representing social contacts

Nassani, A., Lee, G., Billinghurst, M., Langlotz, T., & Lindeman, R. W. (2017, November). AR social continuum: representing social contacts. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 6). ACM.

@inproceedings{nassani2017ar,

title={AR social continuum: representing social contacts},

author={Nassani, Alaeddin and Lee, Gun and Billinghurst, Mark and Langlotz, Tobias and Lindeman, Robert W},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={6},

year={2017},

organization={ACM}

}

One of the key problems with representing social networks in Augmented Reality (AR) is how to differentiate between contacts. In this paper we explore how visual and spatial cues based on social relationships can be used to represent contacts in social AR applications, making it easier to distinguish between them. Previous implementations of social AR have mostly focused on location-based visualization, with no focus on the contact's social relationship to the user. In contrast, we explore how to visualise social relationships in mobile AR environments using proximity and visual fidelity filters. We ran a focus group to explore different options for representing social contacts in a mobile AR application. We also conducted a user study to test a head-worn AR prototype using proximity and visual fidelity filters. We found that filtering social contacts in wearable AR is preferred and useful. We discuss the results of the focus group and the user study, and provide insights into directions for future work.

-

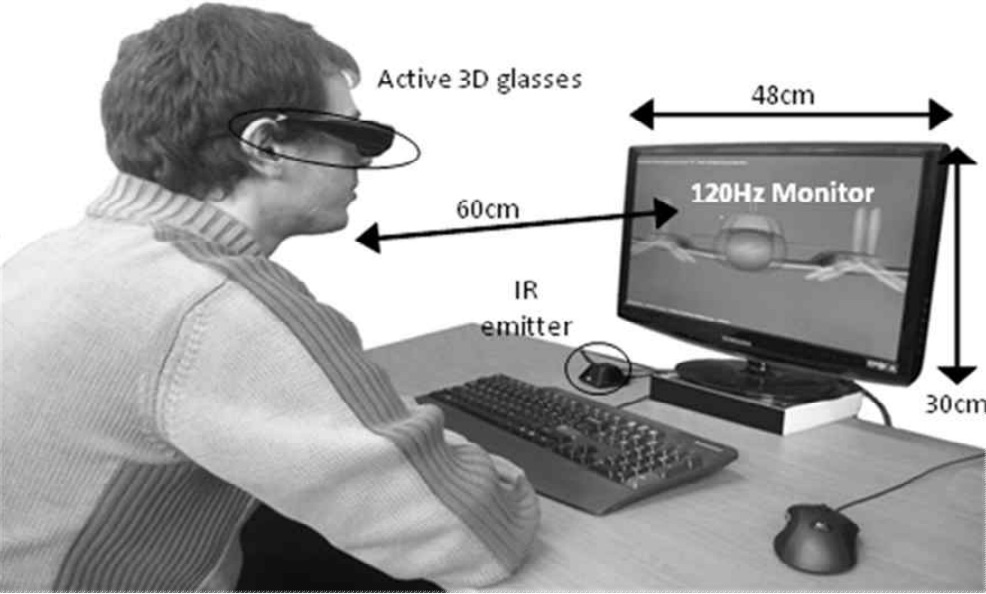

Adaptive Interpupillary Distance Adjustment for Stereoscopic 3D Visualization

Kim, H., Lee, G., & Billinghurst, M. (2015, March). Adaptive Interpupillary Distance Adjustment for Stereoscopic 3D Visualization. In Proceedings of the 14th Annual ACM SIGCHI_NZ conference on Computer-Human Interaction (p. 2). ACM.

@inproceedings{kim2015adaptive,

title={Adaptive Interpupillary Distance Adjustment for Stereoscopic 3D Visualization},

author={Kim, Hyungon and Lee, Gun and Billinghurst, Mark},

booktitle={Proceedings of the 14th Annual ACM SIGCHI\_NZ conference on Computer-Human Interaction},

pages={2},

year={2015},

organization={ACM}

}

View: https://ir.canterbury.ac.nz/bitstream/handle/10092/12239/12648701_chinz2013-kim.pdf?sequence=1

Stereoscopic visualization creates an illusion of depth through disparity between the images shown to the left and right eyes of the viewer. While stereoscopic visualization is widely adopted in immersive visualization systems to improve user experience, it can also cause visual discomfort if the stereoscopic viewing parameters are not adjusted appropriately. These parameters are usually adjusted manually, based on human factors and the empirical knowledge of the developer or even the user. However, scenes whose scale and configuration change dynamically can require continuous adjustment of these parameters while viewing. In this paper, we propose a method to adjust the interpupillary distance adaptively and automatically according to the configuration of the 3D scene, so that the visualized scene maintains a sufficient stereo effect while reducing visual discomfort.

-

User Defined Gestures for Augmented Virtual Mirrors: A Guessability Study

Lee, G. A., Wong, J., Park, H. S., Choi, J. S., Park, C. J., & Billinghurst, M. (2015, April). User defined gestures for augmented virtual mirrors: a guessability study. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (pp. 959-964). ACM.

@inproceedings{lee2015user,

title={User defined gestures for augmented virtual mirrors: a guessability study},

author={Lee, Gun A and Wong, Jonathan and Park, Hye Sun and Choi, Jin Sung and Park, Chang Joon and Billinghurst, Mark},

booktitle={Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems},

pages={959--964},

year={2015},

organization={ACM}

}

Public information displays are evolving from passive screens into more interactive and smarter ubiquitous computing platforms. In this research we investigate applying gesture interaction and Augmented Reality (AR) technologies to make public information displays more intuitive and easier to use. We focus especially on designing intuitive gesture-based interaction methods to use in combination with an augmented virtual mirror interface. As an initial step, we conducted a user study to identify the gestures that users feel are natural for performing common tasks when interacting with augmented virtual mirror displays. We report initial findings from the study, discuss design guidelines, and suggest future research directions.

-

Automatically Freezing Live Video for Annotation during Remote Collaboration

Kim, S., Lee, G. A., Ha, S., Sakata, N., & Billinghurst, M. (2015, April). Automatically freezing live video for annotation during remote collaboration. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (pp. 1669-1674). ACM.

@inproceedings{kim2015automatically,

title={Automatically freezing live video for annotation during remote collaboration},

author={Kim, Seungwon and Lee, Gun A and Ha, Sangtae and Sakata, Nobuchika and Billinghurst, Mark},

booktitle={Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems},

pages={1669--1674},

year={2015},

organization={ACM}

}

Drawing annotations on shared live video has been investigated as a tool for remote collaboration. However, if a local user changes the viewpoint of the shared live video while a remote user is drawing an annotation, the annotation is projected and drawn in the wrong place. Prior work suggested manually freezing the video while annotating to solve this issue, but this requires additional user input. We introduce a solution that automatically freezes the video, and present the results of a user study comparing it with manual freeze and no freeze conditions. Auto-freeze was most preferred by both remote and local participants, who felt it best solved the issue of annotations appearing in the wrong place. With auto-freeze, remote users were able to draw annotations more quickly, while local users were able to understand the annotations more clearly.

-

A comparative study of simulated augmented reality displays for vehicle navigation

Jose, R., Lee, G. A., & Billinghurst, M. (2016, November). A comparative study of simulated augmented reality displays for vehicle navigation. In Proceedings of the 28th Australian conference on computer-human interaction (pp. 40-48). ACM.

@inproceedings{jose2016comparative,

title={A comparative study of simulated augmented reality displays for vehicle navigation},

author={Jose, Richie and Lee, Gun A and Billinghurst, Mark},

booktitle={Proceedings of the 28th Australian conference on computer-human interaction},

pages={40--48},

year={2016},

organization={ACM}