Seungwon Kim

Research Fellow

Dr. Seungwon Kim is a Postdoctoral Research Fellow investigating remote collaboration systems. Using Augmented Reality technology, he adds visual communication cues (such as pointers, sketches, and virtual hands) to the shared live video of a video conferencing system and studies their effect on remote collaboration. He has published papers at major A/A* international conferences and in journals such as CHI, ISMAR, JVCI, TIIS, and CSCW. He has also reviewed dozens of papers for CHI, ISMAR, VR, TVCG, BIT, and IMWUT.

He received his Ph.D. in Human Interface Technology from HITLab NZ in November 2016 under the supervision of Prof. Mark Billinghurst, Dr. Gun Lee, and Dr. Christoph Bartneck. During his Ph.D., he received a UC Doctoral Scholarship from the University of Canterbury from April 2012 to April 2016. He developed one of the early remote collaboration interfaces that anchors virtual sketches in the real world without markers or prior data, and introduced the auto-freeze function for a drawing annotation interface.

In 2013, he was selected by the Nexus group at Microsoft Research (MSR) in Redmond through the Microsoft Worldwide Internship program. At MSR, he developed interfaces for Skype that include three additional views (a high-quality image view, a map view, and a scene view) alongside a live video stream.

He completed Bachelor's and Master's degrees in Computer Science at the University of Tasmania, and received the Tasmanian International Scholarship (TIS) during his studies. He is also a Golden Key member, a membership offered only to the top 15 percent of students in Bachelor's or Master's degree programs.

Projects

-

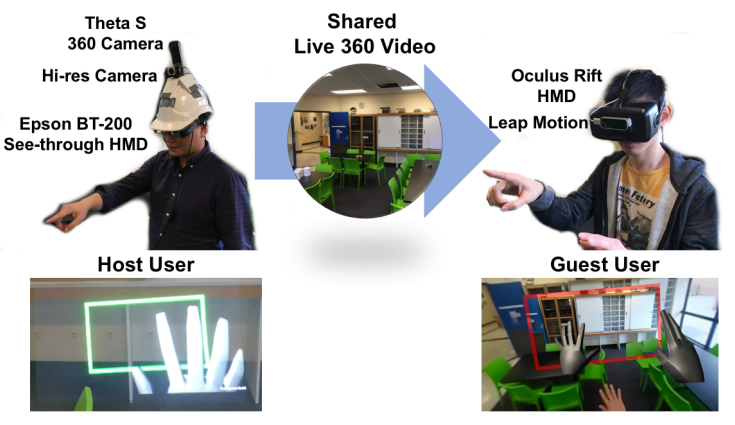

SharedSphere

SharedSphere is a Mixed Reality based remote collaboration system which not only allows sharing a live captured immersive 360 panorama, but also supports enriched two-way communication and collaboration through sharing non-verbal communication cues, such as view awareness cues, drawn annotation, and hand gestures.

-

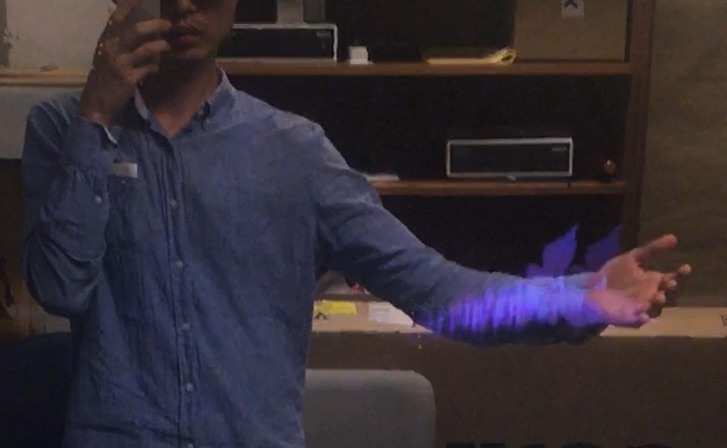

Augmented Mirrors

Mirrors are physical displays that show our real world in reflection. While physical mirrors simply show what is in the real-world scene, with the help of digital technology we can also alter the reality reflected in the mirror. The Augmented Mirrors project explores visualisation and interaction techniques for using mirrors as Augmented Reality (AR) displays. The project focuses especially on using user interface agents to guide user interaction with Augmented Mirrors.

-

Empathy Glasses

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.

-

Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

This research focuses on visualizing shared gaze cues, designing interfaces for collaborative experience, and incorporating multimodal interaction techniques and physiological cues to support empathic Mixed Reality (MR) remote collaboration using HoloLens 2, Vive Pro Eye, Meta Pro, HP Omnicept, Theta V 360 camera, Windows Speech Recognition, Leap Motion hand tracking, and Zephyr/Shimmer Sensing technologies.

Publications

-

Mixed Reality Collaboration through Sharing a Live Panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

Gun A. Lee, Theophilus Teo, Seungwon Kim, and Mark Billinghurst. 2017. Mixed reality collaboration through sharing a live panorama. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA '17). ACM, New York, NY, USA, Article 14, 4 pages. http://doi.acm.org/10.1145/3132787.3139203

@inproceedings{Lee:2017:MRC:3132787.3139203,

author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},

title = {Mixed Reality Collaboration Through Sharing a Live Panorama},

booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

series = {SA '17},

year = {2017},

isbn = {978-1-4503-5410-3},

location = {Bangkok, Thailand},

pages = {14:1--14:4},

articleno = {14},

numpages = {4},

url = {http://doi.acm.org/10.1145/3132787.3139203},

doi = {10.1145/3132787.3139203},

acmid = {3139203},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {panorama, remote collaboration, shared experience},

}

One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gesture cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator’s focus.

-

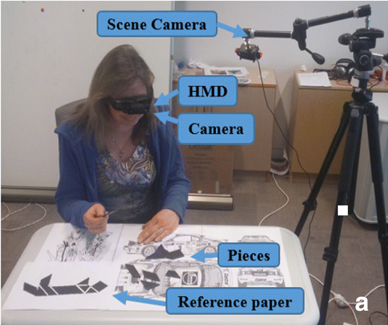

The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration

Seungwon Kim, Mark Billinghurst, Gun Lee

Kim, S., Billinghurst, M., & Lee, G. (2018). The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration. Computer Supported Cooperative Work (CSCW), 1-39.

@Article{Kim2018,

author="Kim, Seungwon

and Billinghurst, Mark

and Lee, Gun",

title="The Effect of Collaboration Styles and View Independence on Video-Mediated Remote Collaboration",

journal="Computer Supported Cooperative Work (CSCW)",

year="2018",

month="Jun",

day="02",

abstract="This paper investigates how different collaboration styles and view independence affect remote collaboration. Our remote collaboration system shares a live video of a local user's real-world task space with a remote user. The remote user can have an independent view or a dependent view of a shared real-world object manipulation task and can draw virtual annotations onto the real-world objects as a visual communication cue. With the system, we investigated two different collaboration styles; (1) remote expert collaboration where a remote user has the solution and gives instructions to a local partner and (2) mutual collaboration where neither user has a solution but both remote and local users share ideas and discuss ways to solve the real-world task. In the user study, the remote expert collaboration showed a number of benefits over the mutual collaboration. With the remote expert collaboration, participants had better communication from the remote user to the local user, more aligned focus between participants, and the remote participants' feeling of enjoyment and togetherness. However, the benefits were not always apparent at the local participants' end, especially with measures of enjoyment and togetherness. The independent view also had several benefits over the dependent view, such as allowing remote participants to freely navigate around the workspace while having a wider fully zoomed-out view. The benefits of the independent view were more prominent in the mutual collaboration than in the remote expert collaboration, especially in enabling the remote participants to see the workspace.",

issn="1573-7551",

doi="10.1007/s10606-018-9324-2",

url="https://doi.org/10.1007/s10606-018-9324-2"

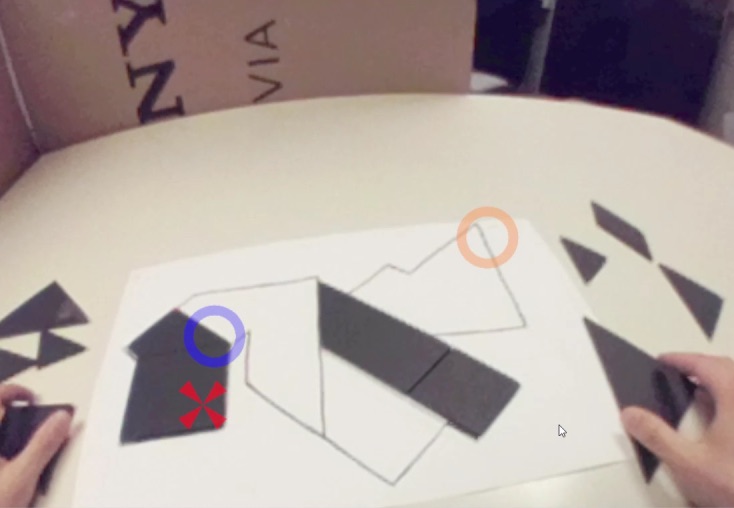

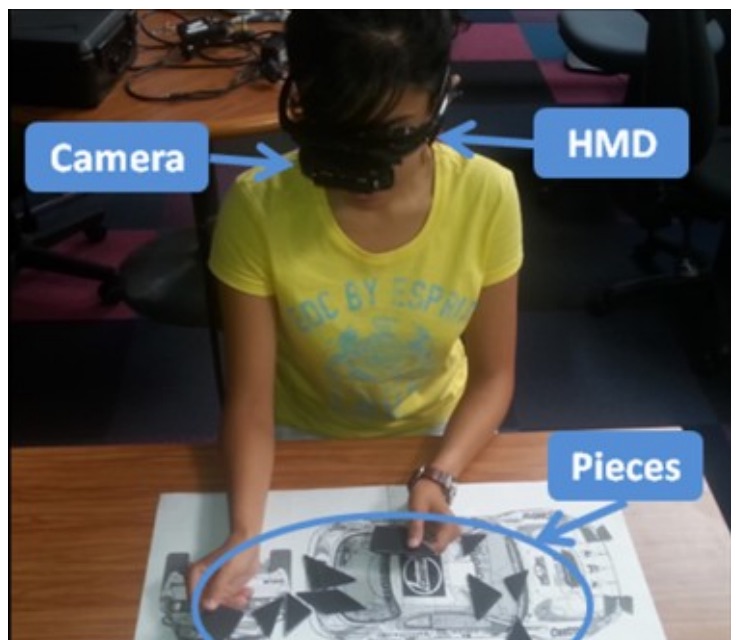

}This paper investigates how different collaboration styles and view independence affect remote collaboration. Our remote collaboration system shares a live video of a local user’s real-world task space with a remote user. The remote user can have an independent view or a dependent view of a shared real-world object manipulation task and can draw virtual annotations onto the real-world objects as a visual communication cue. With the system, we investigated two different collaboration styles; (1) remote expert collaboration where a remote user has the solution and gives instructions to a local partner and (2) mutual collaboration where neither user has a solution but both remote and local users share ideas and discuss ways to solve the real-world task. In the user study, the remote expert collaboration showed a number of benefits over the mutual collaboration. With the remote expert collaboration, participants had better communication from the remote user to the local user, more aligned focus between participants, and the remote participants’ feeling of enjoyment and togetherness. However, the benefits were not always apparent at the local participants’ end, especially with measures of enjoyment and togetherness. The independent view also had several benefits over the dependent view, such as allowing remote participants to freely navigate around the workspace while having a wider fully zoomed-out view. The benefits of the independent view were more prominent in the mutual collaboration than in the remote expert collaboration, especially in enabling the remote participants to see the workspace. -

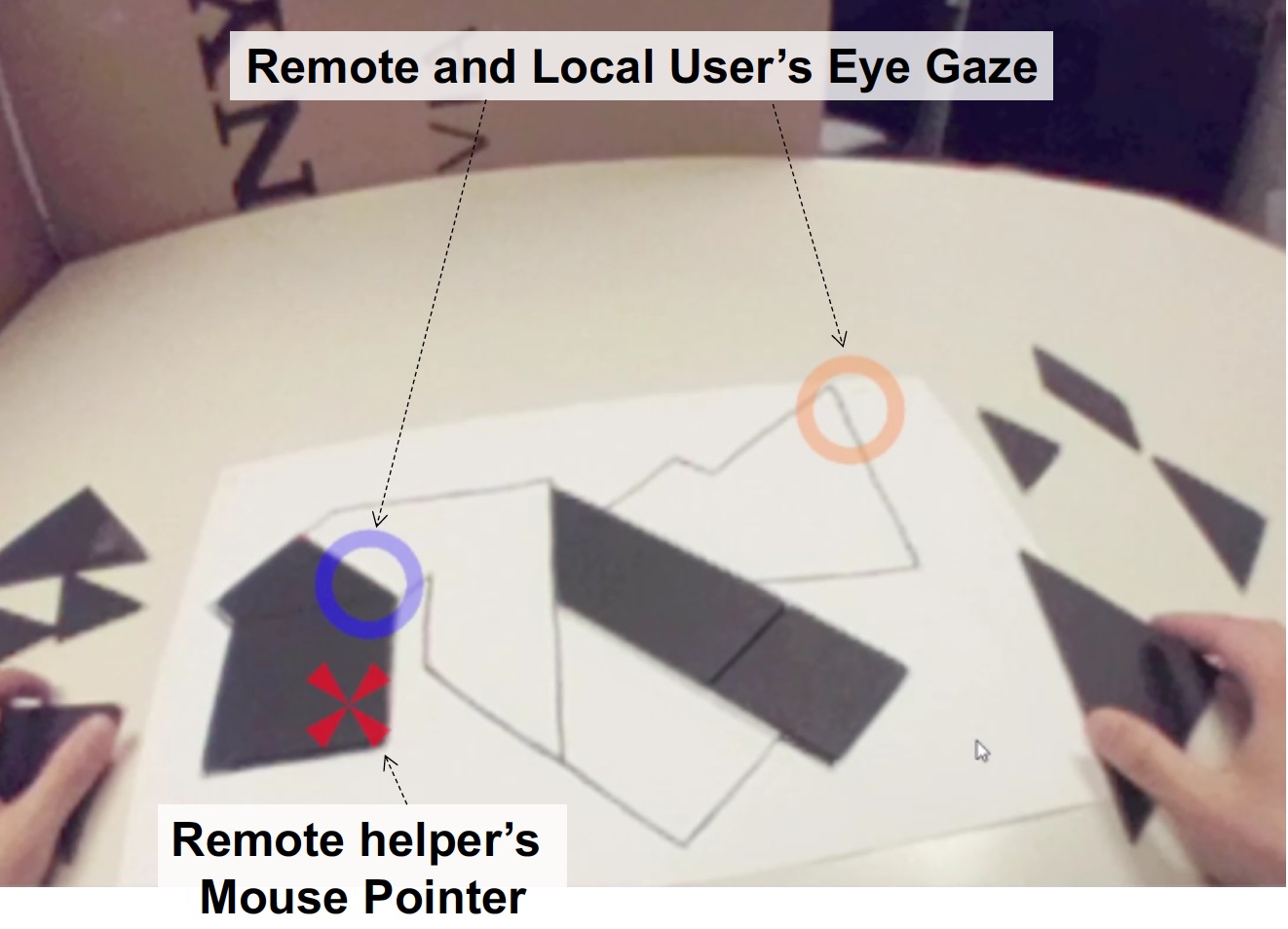

Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst. 2017. Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 197-204. http://dx.doi.org/10.2312/egve.20171359

@inproceedings {egve.20171359,

booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},

title = {{Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze}},

author = {Lee, Gun A. and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

year = {2017},

publisher = {The Eurographics Association},

ISSN = {1727-530X},

ISBN = {978-3-03868-038-3},

DOI = {10.2312/egve.20171359}

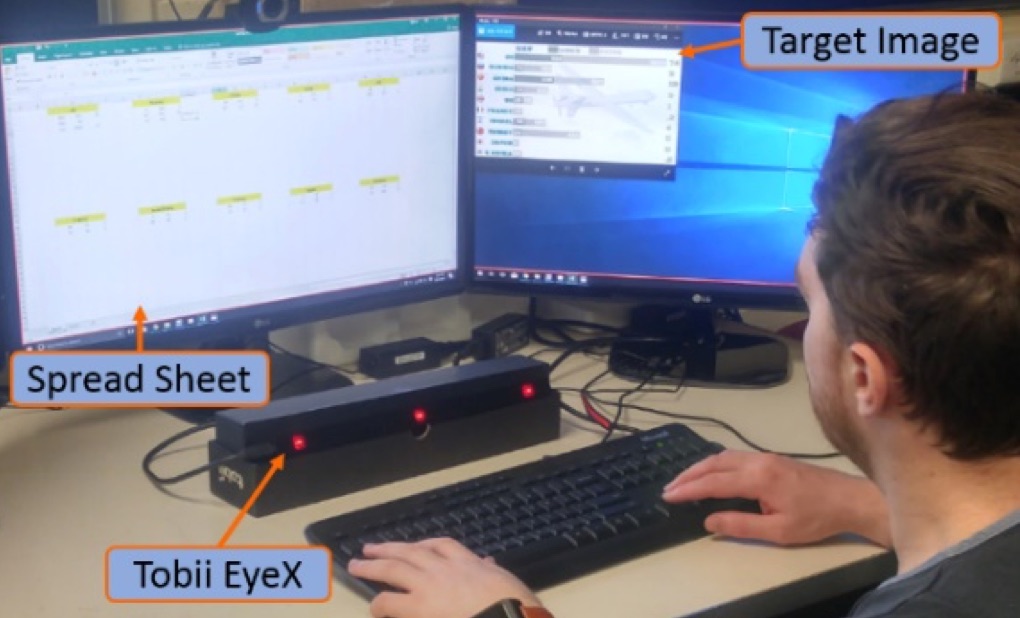

}To improve remote collaboration in video conferencing systems, researchers have been investigating augmenting visual cues onto a shared live video stream. In such systems, a person wearing a head-mounted display (HMD) and camera can share her view of the surrounding real-world with a remote collaborator to receive assistance on a real-world task. While this concept of augmented video conferencing (AVC) has been actively investigated, there has been little research on how sharing gaze cues might affect the collaboration in video conferencing. This paper investigates how sharing gaze in both directions between a local worker and remote helper in an AVC system affects the collaboration and communication. Using a prototype AVC system that shares the eye gaze of both users, we conducted a user study that compares four conditions with different combinations of eye gaze sharing between the two users. The results showed that sharing each other’s gaze significantly improved collaboration and communication. -

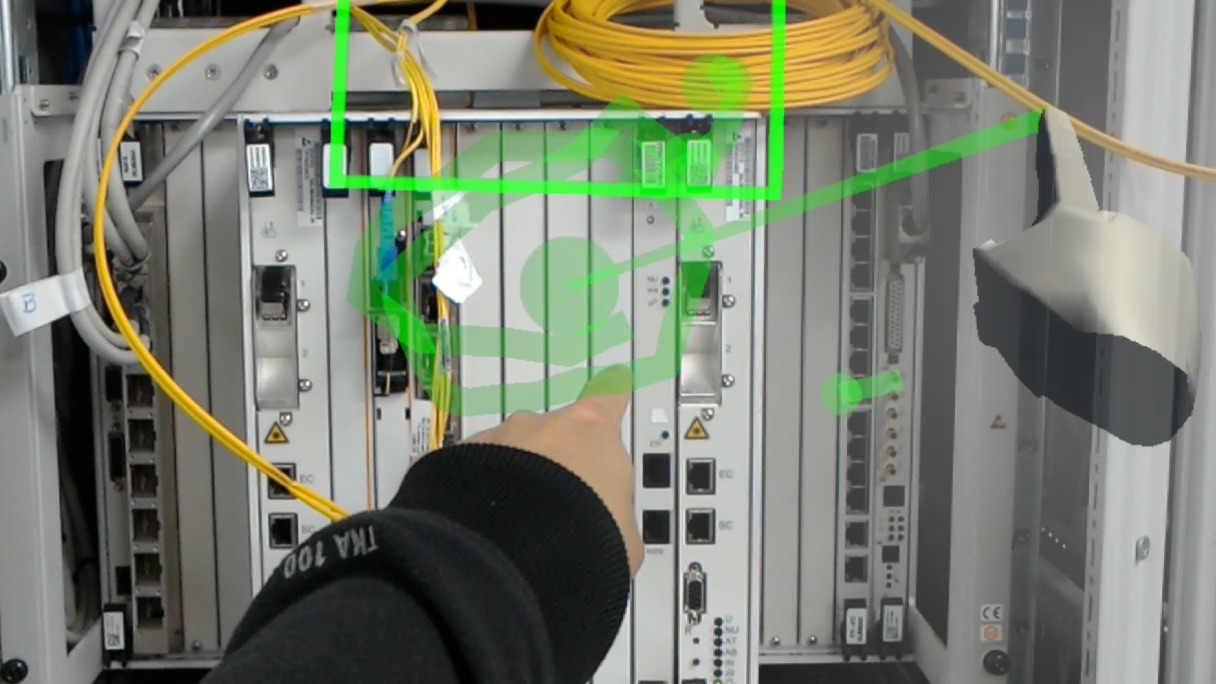

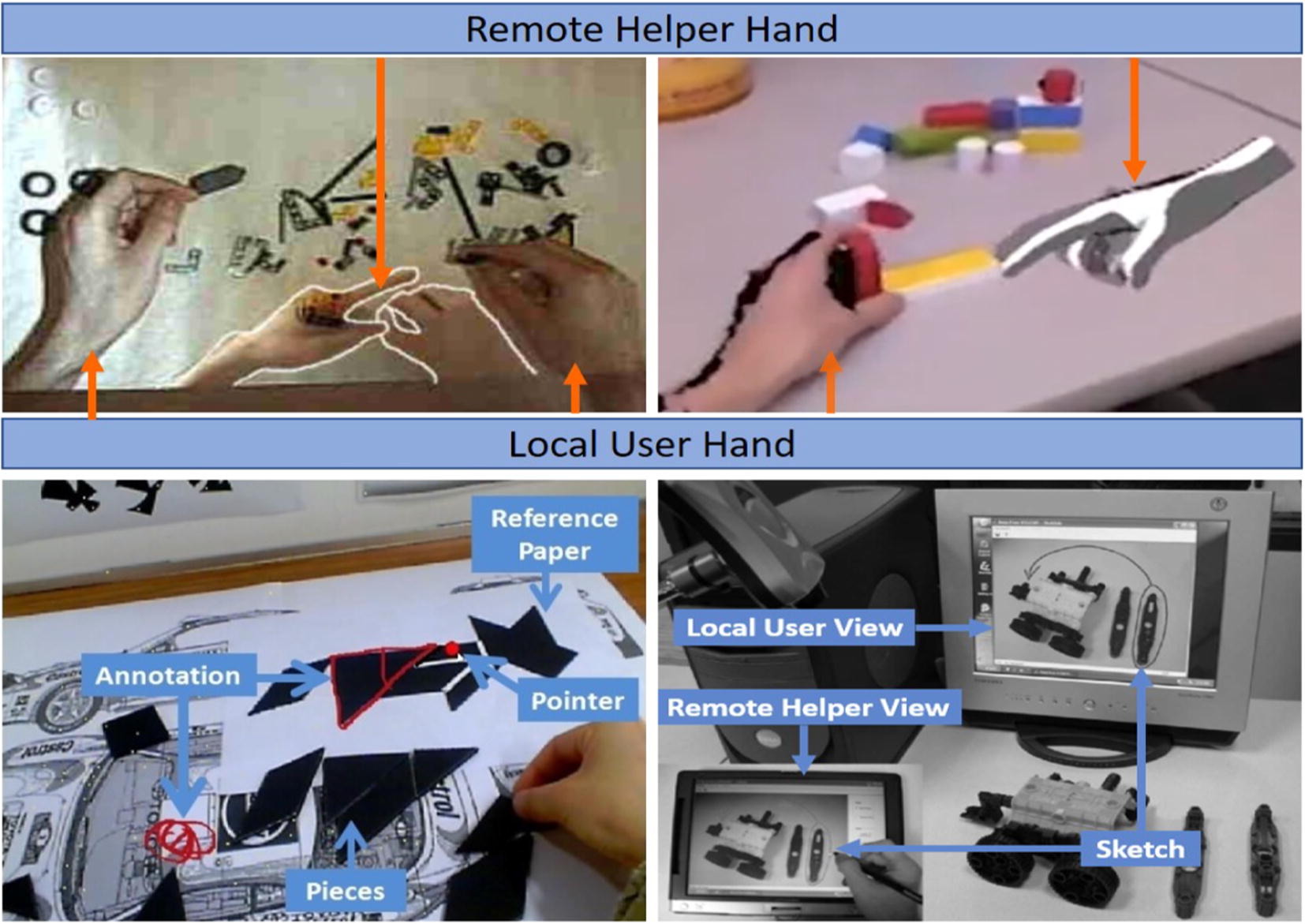

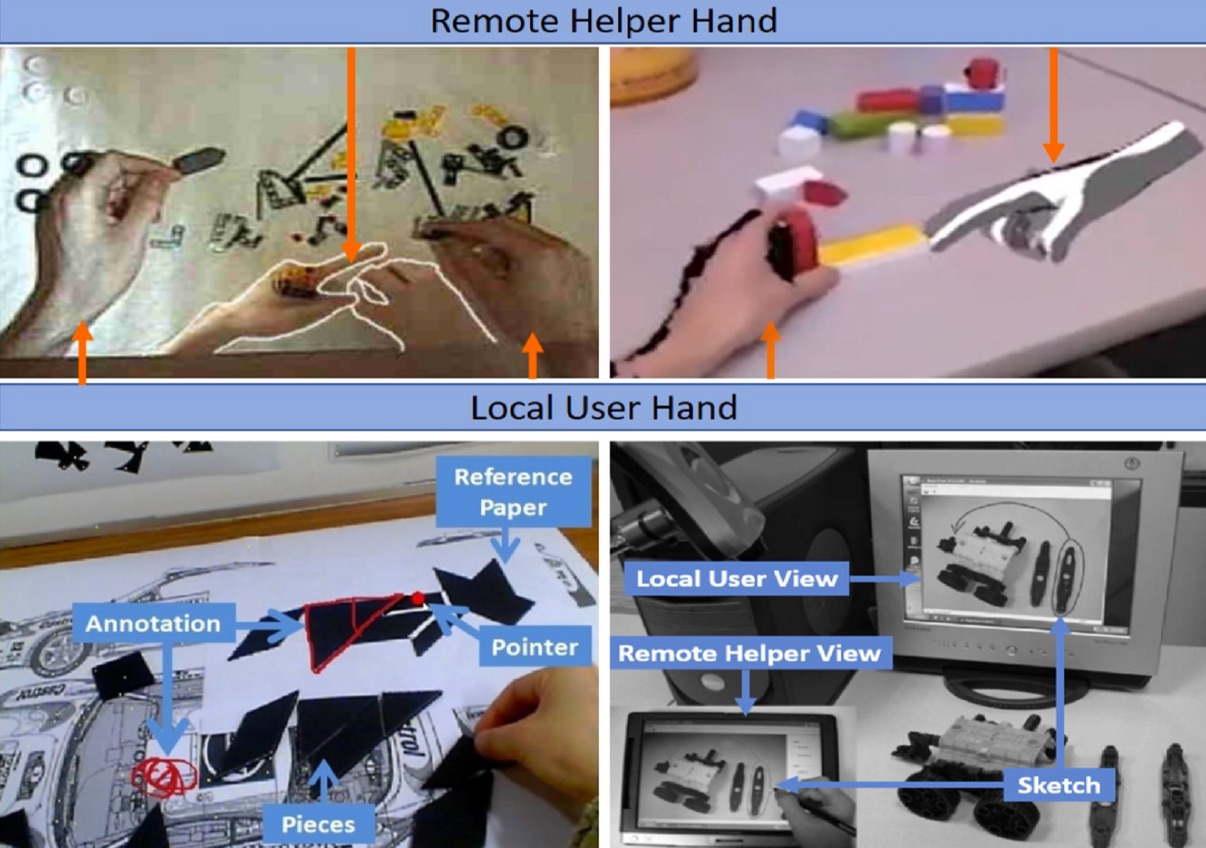

Sharing hand gesture and sketch cues in remote collaboration

W. Huang, S. Kim, M. Billinghurst, L. Alem

Huang, W., Kim, S., Billinghurst, M., & Alem, L. (2019). Sharing hand gesture and sketch cues in remote collaboration. Journal of Visual Communication and Image Representation, 58, 428-438.

@article{huang2019sharing,

title={Sharing hand gesture and sketch cues in remote collaboration},

author={Huang, Weidong and Kim, Seungwon and Billinghurst, Mark and Alem, Leila},

journal={Journal of Visual Communication and Image Representation},

volume={58},

pages={428--438},

year={2019},

publisher={Elsevier}

}

Many systems have been developed to support remote guidance, where a local worker manipulates objects under the guidance of a remote expert helper. These systems typically use speech and visual cues between the local worker and the remote helper, where the visual cues could be pointers, hand gestures, or sketches. However, the effects of combining visual cues in remote collaboration have not been fully explored. We conducted a user study comparing remote collaboration with an interface that combined hand gestures and sketching (the HandsInTouch interface) to one that only used hand gestures, when solving two tasks: Lego assembly and repairing a laptop. In the user study, we found that (1) adding sketch cues improved the task completion time only with the repairing task, as this had complex object manipulation, but (2) using gesture and sketching together created a higher task load for the user.

-

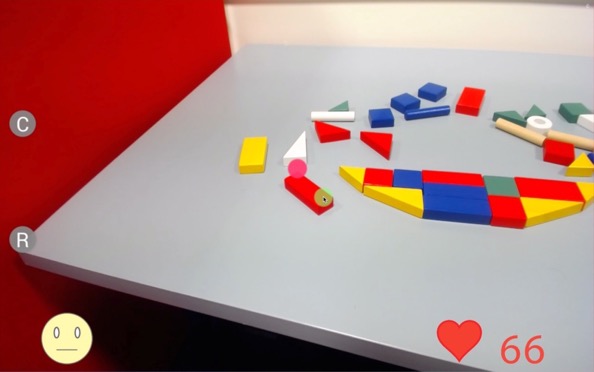

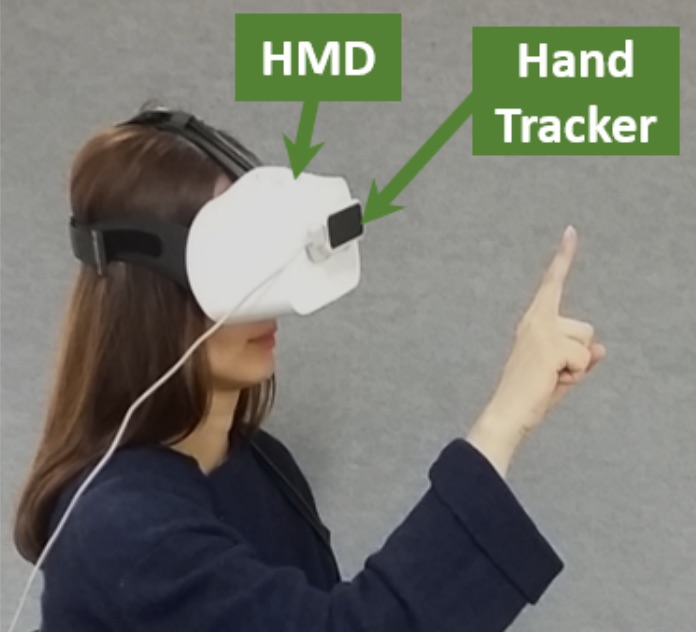

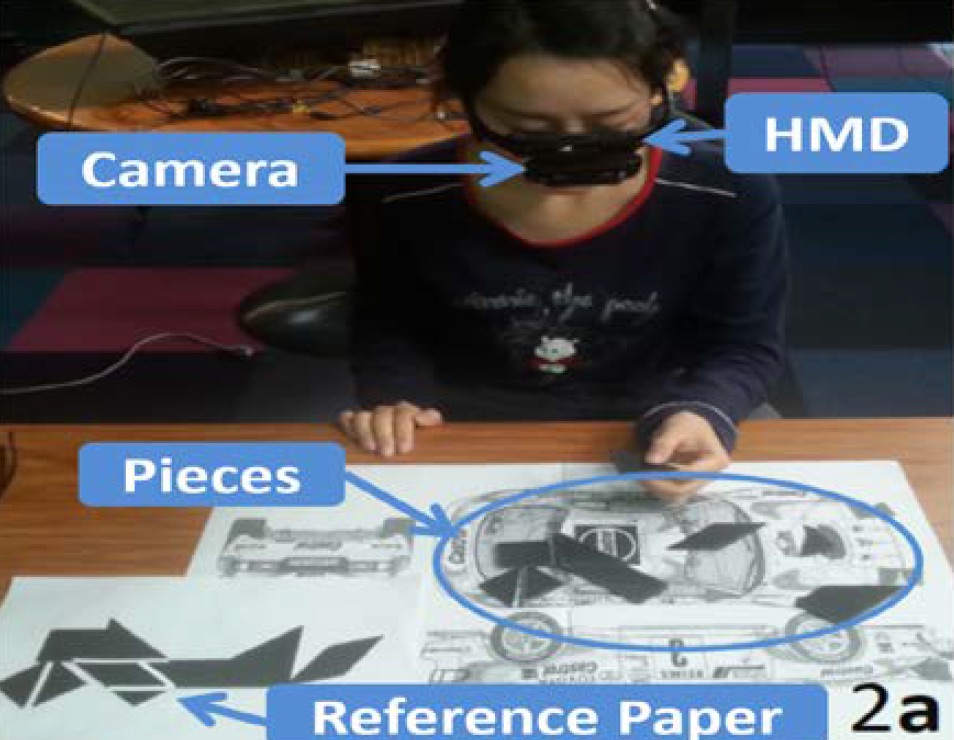

Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration

Kim, S., Lee, G., Huang, W., Kim, H., Woo, W., & Billinghurst, M.

Kim, S., Lee, G., Huang, W., Kim, H., Woo, W., & Billinghurst, M. (2019, April). Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 173). ACM.

@inproceedings{kim2019evaluating,

title={Evaluating the Combination of Visual Communication Cues for HMD-based Mixed Reality Remote Collaboration},

author={Kim, Seungwon and Lee, Gun and Huang, Weidong and Kim, Hayun and Woo, Woontack and Billinghurst, Mark},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={173},

year={2019},

organization={ACM}

}

Many researchers have studied various visual communication cues (e.g. pointer, sketching, and hand gesture) in Mixed Reality remote collaboration systems for real-world tasks. However, the effect of combining them has not been so well explored. We studied the effect of these cues in four combinations: hand only, hand + pointer, hand + sketch, and hand + pointer + sketch, with three problem tasks: Lego, Tangram, and Origami. The study results showed that the participants completed the task significantly faster and felt a significantly higher level of usability when the sketch cue is added to the hand gesture cue, but not with adding the pointer cue. Participants also preferred the combinations including hand and sketch cues over the other combinations. However, using additional cues (pointer or sketch) increased the perceived mental effort and did not improve the feeling of co-presence. We discuss the implications of these results and future research directions.

-

Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System

Kim, S., Billinghurst, M., Lee, G., Norman, M., Huang, W., & He, J.

Kim, S., Billinghurst, M., Lee, G., Norman, M., Huang, W., & He, J. (2019, July). Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System. In 2019 23rd International Conference in Information Visualization–Part II (pp. 86-91). IEEE.

@inproceedings{kim2019sharing,

title={Sharing Emotion by Displaying a Partner Near the Gaze Point in a Telepresence System},

author={Kim, Seungwon and Billinghurst, Mark and Lee, Gun and Norman, Mitchell and Huang, Weidong and He, Jian},

booktitle={2019 23rd International Conference in Information Visualization--Part II},

pages={86--91},

year={2019},

organization={IEEE}

}

In this paper, we explore the effect of showing a remote partner close to the user's gaze point in a teleconferencing system. We implemented a gaze following function in a teleconferencing system and investigated whether this improves the user's feeling of emotional interdependence. We developed a prototype system that shows a remote partner close to the user's current gaze point and conducted a user study comparing it to a condition displaying the partner fixed in the corner of a screen. Our results showed that showing a partner close to their gaze point helped users feel a higher level of emotional interdependence. In addition, we compared the effect of our method between small and big displays, but there was no significant difference in the users' feeling of emotional interdependence even though the big display was preferred.

-

HandsInTouch: sharing gestures in remote collaboration

Huang, W., Billinghurst, M., Alem, L., & Kim, S.

Huang, W., Billinghurst, M., Alem, L., & Kim, S. (2018, December). HandsInTouch: sharing gestures in remote collaboration. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (pp. 396-400). ACM.

@inproceedings{huang2018handsintouch,

title={HandsInTouch: sharing gestures in remote collaboration},

author={Huang, Weidong and Billinghurst, Mark and Alem, Leila and Kim, Seungwon},

booktitle={Proceedings of the 30th Australian Conference on Computer-Human Interaction},

pages={396--400},

year={2018},

organization={ACM}

}

Many systems have been developed to support remote collaboration, where hand gestures or sketches can be shared. However, the effect of combining gesture and sketching together has not been fully explored and understood. In this paper we describe HandsInTouch, a system in which both hand gestures and sketches made by a remote helper are shown to a local user in real time. We conducted a user study to test the usability of the system and the usefulness of combining gesture and sketching for remote collaboration. We discuss results and make recommendations for system design and future work.

-

Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration.

Kim, S., Billinghurst, M., Lee, C., & Lee, G.

Kim, S., Billinghurst, M., Lee, C., & Lee, G. (2018). Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration. KSII Transactions on Internet & Information Systems, 12(12).

@article{kim2018using,

title={Using Freeze Frame and Visual Notifications in an Annotation Drawing Interface for Remote Collaboration.},

author={Kim, Seungwon and Billinghurst, Mark and Lee, Chilwoo and Lee, Gun},

journal={KSII Transactions on Internet \& Information Systems},

volume={12},

number={12},

year={2018}

}

This paper describes two user studies in remote collaboration between two users with a video conferencing system where a remote user can draw annotations on the live video of the local user’s workspace. In these two studies, the local user had the control of the view when sharing the first-person view, but our interfaces provided instant control of the shared view to the remote users. The first study investigates methods for assisting drawing annotations. The auto-freeze method, a novel solution for drawing annotations, is compared to a prior solution (manual freeze method) and a baseline (non-freeze) condition. Results show that both local and remote users preferred the auto-freeze method, which is easy to use and allows users to quickly draw annotations. The manual-freeze method supported precise drawing, but was less preferred because of the need for manual input. The second study explores visual notification for better local user awareness. We propose two designs: the red-box and both-freeze notifications, and compare these to the baseline, no notification condition. Users preferred the less obtrusive red-box notification that improved awareness of when annotations were made by remote users, and had a significantly lower level of interruption compared to the both-freeze condition.

-

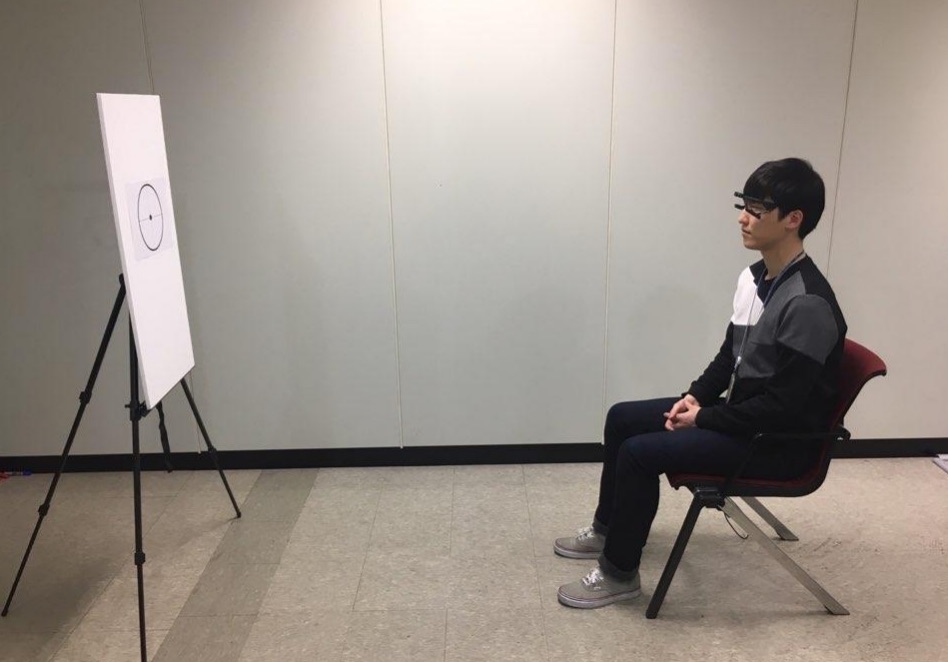

Estimating Gaze Depth Using Multi-Layer Perceptron

Lee, Y., Shin, C., Plopski, A., Itoh, Y., Piumsomboon, T., Dey, A., ... & Billinghurst, M. (2017, June). Estimating Gaze Depth Using Multi-Layer Perceptron. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 26-29). IEEE.

@inproceedings{lee2017estimating,

title={Estimating Gaze Depth Using Multi-Layer Perceptron},

author={Lee, Youngho and Shin, Choonsung and Plopski, Alexander and Itoh, Yuta and Piumsomboon, Thammathip and Dey, Arindam and Lee, Gun and Kim, Seungwon and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={26--29},

year={2017},

organization={IEEE}

}

In this paper we describe a new method for determining gaze depth in a head-mounted eye tracker. Eye trackers are being incorporated into head-mounted displays (HMDs), and eye gaze is being used for interaction in Virtual and Augmented Reality. For some interaction methods, it is important to accurately measure the x- and y-direction of the eye gaze and especially the focal depth information. Generally, eye tracking technology has a high accuracy in x- and y-directions, but not in depth. We used a binocular gaze tracker with two eye cameras, and the gaze vector was input to an MLP neural network for training and estimation. For the performance evaluation, data was obtained from 13 people gazing at fixed points at distances from 1m to 5m. The gaze classification into fixed distances produced an average classification error of nearly 10%, and an average error distance of 0.42m. This is sufficient for some Augmented Reality applications, but more research is needed to provide an estimate of a user’s gaze moving in continuous space.

-

Mutually Shared Gaze in Augmented Video Conference

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M.

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M. (2017, October). Mutually Shared Gaze in Augmented Video Conference. In Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017 (pp. 79-80). Institute of Electrical and Electronics Engineers Inc.

@inproceedings{lee2017mutually,

title={Mutually Shared Gaze in Augmented Video Conference},

author={Lee, Gun and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

booktitle={Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017},

pages={79--80},

year={2017},

organization={Institute of Electrical and Electronics Engineers Inc.}

}

Augmenting video conference with additional visual cues has been studied to improve remote collaboration. A common setup is a person wearing a head-mounted display (HMD) and camera sharing her view of the workspace with a remote collaborator and getting assistance on a real-world task. While this configuration has been extensively studied, there has been little research on how sharing gaze cues might affect the collaboration. This research investigates how sharing gaze in both directions between a local worker and remote helper affects the collaboration and communication. We developed a prototype system that shares the eye gaze of both users, and conducted a user study. Preliminary results showed that sharing gaze significantly improves the awareness of each other's focus, hence improving collaboration.

-

Automatically Freezing Live Video for Annotation during Remote Collaboration

Kim, S., Lee, G. A., Ha, S., Sakata, N., & Billinghurst, M.

Kim, S., Lee, G. A., Ha, S., Sakata, N., & Billinghurst, M. (2015, April). Automatically freezing live video for annotation during remote collaboration. In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (pp. 1669-1674). ACM.

@inproceedings{kim2015automatically,

title={Automatically freezing live video for annotation during remote collaboration},

author={Kim, Seungwon and Lee, Gun A and Ha, Sangtae and Sakata, Nobuchika and Billinghurst, Mark},

booktitle={Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems},

pages={1669--1674},

year={2015},

organization={ACM}

}

Drawing annotations on shared live video has been investigated as a tool for remote collaboration. However, if a local user changes the viewpoint of a shared live video while a remote user is drawing an annotation, the annotation is projected and drawn at the wrong place. Prior work suggested manually freezing the video while annotating to solve the issue, but this needs additional user input. We introduce a solution that automatically freezes the video, and present the results of a user study comparing it with manual freeze and no freeze conditions. Auto-freeze was most preferred by both remote and local participants, who felt it best solved the issue of annotations appearing in the wrong place. With auto-freeze, remote users were able to draw annotations more quickly, while the local users were able to understand the annotations more clearly.

-

A review on communication cues for augmented reality based remote guidance

Weidong Huang, Mathew Wakefield, Troels Ammitsbøl Rasmussen, Seungwon Kim & Mark Billinghurst

Huang, W., Wakefield, M., Rasmussen, T. A., Kim, S., & Billinghurst, M. (2022). A review on communication cues for augmented reality based remote guidance. Journal on Multimodal User Interfaces, 1-18.

@article{huang2022review,

title={A review on communication cues for augmented reality based remote guidance},

author={Huang, Weidong and Wakefield, Mathew and Rasmussen, Troels Ammitsb{\o}l and Kim, Seungwon and Billinghurst, Mark},

journal={Journal on Multimodal User Interfaces},

pages={1--18},

year={2022},

publisher={Springer}

}

Remote guidance on physical tasks is a type of collaboration in which a local worker is guided by a remote helper to operate on a set of physical objects. It has many applications in industrial sections such as remote maintenance and how to support this type of remote collaboration has been researched for almost three decades. Although a range of different modern computing tools and systems have been proposed, developed and used to support remote guidance in different application scenarios, it is essential to provide communication cues in a shared visual space to achieve common ground for effective communication and collaboration. In this paper, we conduct a selective review to summarize communication cues, approaches that implement the cues and their effects on augmented reality based remote guidance. We also discuss challenges and propose possible future research and development directions.

-

Mixed reality collaboration through sharing a live panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

G. A. Lee, T. Teo, S. Kim, and M. Billinghurst. (2017). “Mixed reality collaboration through sharing a live panorama”. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA 2017). ACM, New York, NY, USA, Article 14, 4 pages.

@inproceedings{10.1145/3132787.3139203,

author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},

title = {Mixed Reality Collaboration through Sharing a Live Panorama},

year = {2017},

isbn = {9781450354103},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3132787.3139203},

doi = {10.1145/3132787.3139203},

abstract = {One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates on how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gestures cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator's focus.},

booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

articleno = {14},

numpages = {4},

keywords = {shared experience, panorama, remote collaboration},

location = {Bangkok, Thailand},

series = {SA '17}

}

One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gesture cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator's focus.