SharedSphere

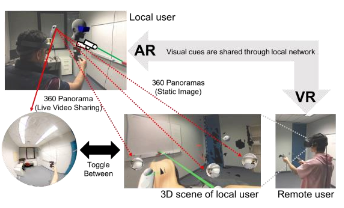

SharedSphere is a Mixed Reality (MR) remote collaboration and shared experience platform. It combines an Augmented Reality (AR) experience for a local worker out in the field with a Virtual Reality (VR) experience for a remote expert. The two users are connected through a live 360 panorama video captured and shared from the host user.

As an MR 360 panoramic video conferencing platform, SharedSphere supports view independence, which allows the remote expert to look around the local worker’s surroundings independent of the worker’s orientation. SharedSphere also incorporates situational awareness cues that let each user see which direction the other person is looking in. The technology supports intuitive gesture sharing: the remote expert can see the local worker’s hand gestures in the live video, and SharedSphere uses motion sensing in the VR environment to create a virtual copy of the expert’s hand gestures for the local worker. Using these virtual hands, the remote expert can point at objects of interest or even draw annotations on real-world objects.
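The view independence and view awareness ideas above can be illustrated with a little geometry. The following is a minimal sketch, not SharedSphere's actual implementation: it assumes the shared panorama is an equirectangular frame, that yaw 0 points at the frame centre with positive yaw turning right, and that positive pitch looks up. The function names `direction_to_equirect` and `relative_yaw` are hypothetical and exist only for this illustration.

```python
def direction_to_equirect(yaw_deg, pitch_deg, width, height):
    """Map a view direction to pixel coordinates in an equirectangular frame.

    Assumed convention (not taken from SharedSphere): yaw 0 is the frame
    centre, positive yaw turns right, positive pitch looks up.
    """
    # Wrap yaw into [-180, 180) and clamp pitch to [-90, 90].
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    pitch = max(-90.0, min(90.0, pitch_deg))
    x = (yaw + 180.0) / 360.0 * width
    y = (90.0 - pitch) / 180.0 * height
    return x, y


def relative_yaw(own_yaw_deg, other_yaw_deg):
    """Signed smallest turn (degrees) from our heading to the other user's.

    A positive result means the other user is looking to our right, so an
    awareness cue could be drawn on the right side of the view.
    """
    return (other_yaw_deg - own_yaw_deg + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    # Where the worker's gaze would fall in a 3840x1920 panorama frame.
    print(direction_to_equirect(0.0, 0.0, 3840, 1920))
    # Expert faces 350 degrees, worker faces 10 degrees: a short turn right.
    print(relative_yaw(350.0, 10.0))
```

Because each user's heading is kept separately from the panorama itself, the remote expert can render any part of the frame regardless of where the local worker is facing, while the relative yaw gives enough information to place a cue indicating the other person's view direction.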

Publications

- Mixed Reality Collaboration through Sharing a Live Panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

Gun A. Lee, Theophilus Teo, Seungwon Kim, and Mark Billinghurst. 2017. Mixed reality collaboration through sharing a live panorama. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA '17). ACM, New York, NY, USA, Article 14, 4 pages. http://doi.acm.org/10.1145/3132787.3139203

@inproceedings{Lee:2017:MRC:3132787.3139203,

author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},

title = {Mixed Reality Collaboration Through Sharing a Live Panorama},

booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

series = {SA '17},

year = {2017},

isbn = {978-1-4503-5410-3},

location = {Bangkok, Thailand},

pages = {14:1--14:4},

articleno = {14},

numpages = {4},

url = {http://doi.acm.org/10.1145/3132787.3139203},

doi = {10.1145/3132787.3139203},

acmid = {3139203},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {panorama, remote collaboration, shared experience},

}

One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates how to further improve collaborative experiences based on shared live panoramas by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration by overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gesture cues). User feedback collected through a preliminary user study indicated that sharing live 360 panorama video was beneficial, providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator’s focus.

- Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration

Theophilus Teo, Gun A. Lee, Mark Billinghurst, Matt Adcock

Theophilus Teo, Gun A. Lee, Mark Billinghurst, and Matt Adcock. 2018. Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (OzCHI '18). ACM, New York, NY, USA, 406-410. DOI: https://doi.org/10.1145/3292147.3292200

@inproceedings{Teo:2018:HGV:3292147.3292200,

author = {Teo, Theophilus and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

title = {Hand Gestures and Visual Annotation in Live 360 Panorama-based Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 30th Australian Conference on Computer-Human Interaction},

series = {OzCHI '18},

year = {2018},

isbn = {978-1-4503-6188-0},

location = {Melbourne, Australia},

pages = {406--410},

numpages = {5},

url = {http://doi.acm.org/10.1145/3292147.3292200},

doi = {10.1145/3292147.3292200},

acmid = {3292200},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {gesture communication, mixed reality, remote collaboration},

}

In this paper, we investigate hand gestures and visual annotation cues overlaid in a live 360 panorama-based Mixed Reality remote collaboration. The prototype system captures live 360 panorama video of the surroundings of a local user and shares it with another person in a remote location. The two users, wearing Augmented Reality or Virtual Reality head-mounted displays, can collaborate using augmented visual communication cues such as virtual hand gestures, ray pointing, and drawing annotations. Our preliminary user evaluation comparing these cues found that using visual annotation cues (ray pointing and drawing annotation) helps local users perform collaborative tasks faster and more easily, with fewer errors and better understanding, compared to using only virtual hand gestures.

- A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes

Theophilus Teo, Ashkan F. Hayati, Gun A. Lee, Mark Billinghurst, Matt Adcock

@inproceedings{teo2019technique,

title={A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes},

author={Teo, Theophilus and Hayati, Ashkan F. and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

booktitle={25th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2019}

}Mixed Reality (MR) remote collaboration provides an enhanced immersive experience where a remote user can provide verbal and nonverbal assistance to a local user to increase the efficiency and performance of the collaboration. This is usually achieved by sharing the local user's environment through live 360 video or a 3D scene, and using visual cues to gesture or point at real objects allowing for better understanding and collaborative task performance. While most of prior work used one of the methods to capture the surrounding environment, there may be situations where users have to choose between using 360 panoramas or 3D scene reconstruction to collaborate, as each have unique benefits and limitations. In this paper we designed a prototype system that combines 360 panoramas into a 3D scene to introduce a novel way for users to interact and collaborate with each other. We evaluated the prototype through a user study which compared the usability and performance of our proposed approach to live 360 video collaborative system, and we found that participants enjoyed using different ways to access the local user's environment although it took them longer time to learn to use our system. We also collected subjective feedback for future improvements and provide directions for future research. -

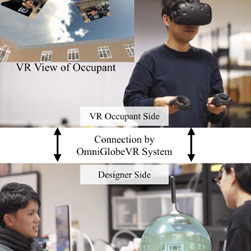

OmniGlobeVR: A Collaborative 360° Communication System for VR

Zhengqing Li , Liwei Chan , Theophilus Teo , Hideki KoikeZhengqing Li, Liwei Chan, Theophilus Teo, and Hideki Koike. 2020. OmniGlobeVR: A Collaborative 360° Communication System for VR. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–8. DOI:https://doi.org/10.1145/3334480.3382869

@inproceedings{li2020omniglobevr,

title={OmniGlobeVR: A Collaborative 360 Communication System for VR},

author={Li, Zhengqing and Chan, Liwei and Teo, Theophilus and Koike, Hideki},

booktitle={Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--8},

year={2020}

}

In this paper, we propose OmniGlobeVR, a novel collaboration tool based on an asymmetric cooperation system that supports communication and cooperation between a VR user (occupant) and multiple non-VR users (designers) across the virtual and physical platforms. OmniGlobeVR allows designer(s) to access the content of a VR space from any point of view using two view modes: 360° first-person mode and third-person mode. Furthermore, a shared gaze awareness cue is designed to enhance communication between the occupant and the designer(s). The system also has a face window feature that allows designer(s) to share their facial expressions and upper-body gestures with the occupant in order to exchange and express information in a nonverbal context. Together, these features allow collaborators on the VR and non-VR platforms to cooperate, while letting designer(s) easily access physical assets as they work synchronously with the occupant in the VR space.