Ryo Hajika

Visiting Researcher

Ryo is a visiting researcher in the Empathic Computing Laboratory at the University of Auckland.

Projects

Haptic Hongi

This project explores whether XR technologies can help overcome intercultural discomfort by using Augmented Reality (AR) and haptic feedback to present a traditional Māori greeting. Using a HoloLens 2 AR headset, guests see a pre-recorded volumetric video of Tania, a Māori woman, who greets them in a re-imagined, contemporary first encounter between indigenous Māori and newcomers. The visitors, manuhiri, consider their response in the absence of the usual social pressures. After a brief introduction, the virtual Tania slowly leans forward, inviting the visitor to ‘hongi’, a pressing together of noses and foreheads in a gesture symbolising “...peace and oneness of thought, purpose, desire, and hope”. This is felt as a haptic response delivered via a custom-made actuator built into the visitor’s AR headset.

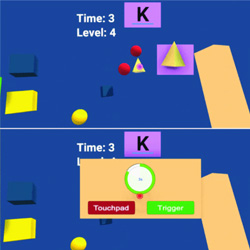

RadarHand

RadarHand is a wrist-worn wearable system that uses radar sensing to detect on-skin proprioceptive hand gestures, making it easy to interact using simple finger motions. Radar has the advantages of being robust, privacy-preserving, small, able to penetrate materials, and computationally inexpensive. In this project, we first evaluated the proprioceptive nature of the back of the hand and found that the thumb is the most proprioceptive of all the finger joints, followed by the index finger, middle finger, ring finger and pinky finger. This helped determine the types of gestures most suitable for the system. Next, we trained deep-learning models for gesture classification. Out of 27 gesture group possibilities, we achieved 92% accuracy for a generic set of seven gestures and 93% accuracy for the proprioceptive set of eight gestures. We also evaluated RadarHand's performance in real time and achieved an accuracy of between 74% and 91%, depending on whether the system or the user initiates the gesture. This research could contribute to a new generation of radar-based interfaces that allow people to interact with computers in a more natural way.

Publications

Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality

Kunal Gupta, Ryo Hajika, Yun Suen Pai, Andreas Duenser, Martin Lochner, Mark Billinghurst

K. Gupta, R. Hajika, Y. S. Pai, A. Duenser, M. Lochner and M. Billinghurst, "Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 2020, pp. 756-765, doi: 10.1109/VR46266.2020.1581313729558.

@inproceedings{gupta2020measuring,

title={Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality},

author={Gupta, Kunal and Hajika, Ryo and Pai, Yun Suen and Duenser, Andreas and Lochner, Martin and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={756--765},

year={2020},

organization={IEEE}

}

With the advancement of Artificial Intelligence technology to make smart devices, understanding how humans develop trust in virtual agents is emerging as a critical research field. Through our research, we report on a novel methodology to investigate users’ trust in auditory assistance in a Virtual Reality (VR) based search task, under both high and low cognitive load and under varying levels of agent accuracy. We collected physiological sensor data such as electroencephalography (EEG), galvanic skin response (GSR), and heart-rate variability (HRV), as well as subjective data through questionnaires such as the System Trust Scale (STS), the Subjective Mental Effort Questionnaire (SMEQ) and NASA-TLX. We also collected a behavioral measure of trust (congruency of users’ head motion in response to valid/invalid verbal advice from the agent). Our results indicate that our custom VR environment enables researchers to measure and understand human trust in virtual agents using these metrics, and that both cognitive load and agent accuracy play an important role in trust formation. We discuss the implications of the research and directions for future work.

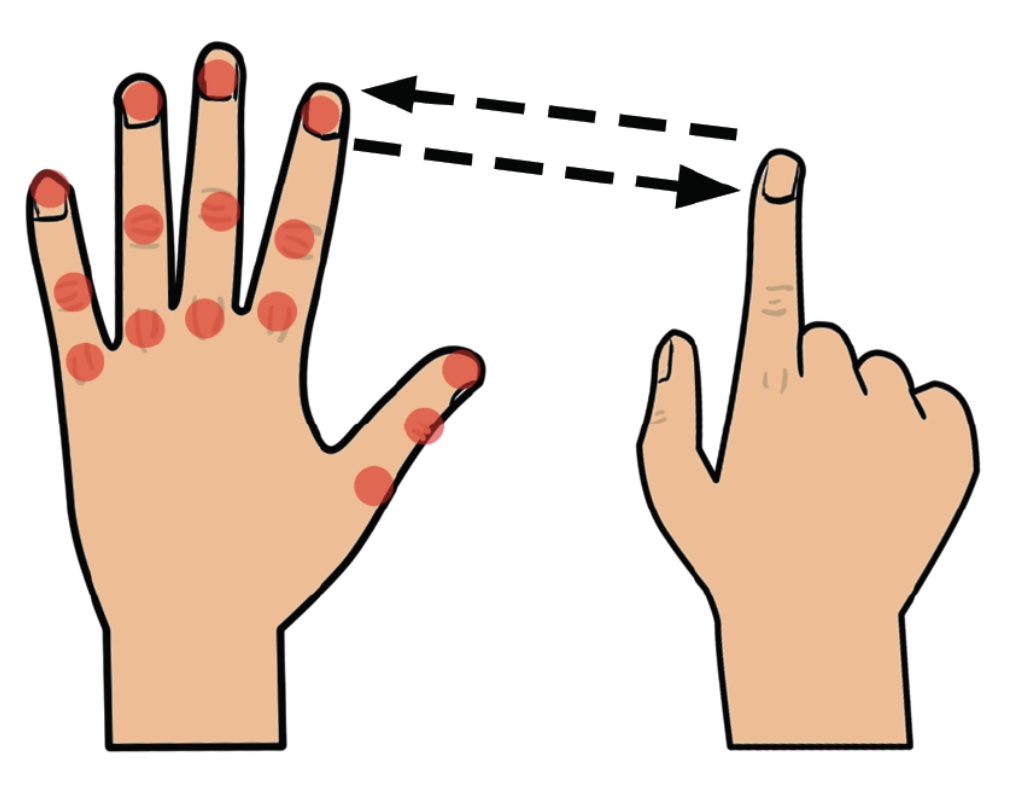

Adapting Fitts’ Law and N-Back to Assess Hand Proprioception

Tamil Selvan Gunasekaran, Ryo Hajika, Chloe Dolma Si Ying Haigh, Yun Suen Pai, Danielle Lottridge, Mark Billinghurst

Gunasekaran, T. S., Hajika, R., Haigh, C. D. S. Y., Pai, Y. S., Lottridge, D., & Billinghurst, M. (2021, May). Adapting Fitts’ Law and N-Back to Assess Hand Proprioception. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gunasekaran2021adapting,

title={Adapting Fitts’ Law and N-Back to Assess Hand Proprioception},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Haigh, Chloe Dolma Si Ying and Pai, Yun Suen and Lottridge, Danielle and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Proprioception is the body’s ability to sense the position and movement of each limb, as well as the amount of effort exerted onto or by them. Methods to assess proprioception have been introduced before, yet there is little to no study on assessing the degree of proprioception of body parts for use cases like gesture recognition in wearable computing. We propose the use of Fitts’ law coupled with the N-Back task to evaluate proprioception of the hand. We evaluate 15 distinct points on the back of the hand and assess them using an extended 3D Fitts’ law. Our results show that the index of difficulty of the tapping points increases gradually from thumb to pinky, with a linear regression factor of 0.1144. Additionally, participants perform the tap before performing the N-Back task. From these results, we discuss the fundamental limitations and suggest how Fitts’ law can be further extended to assess proprioception.
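As a rough illustration of the quantities mentioned in this abstract, the sketch below computes a Fitts' index of difficulty per tapping point and fits a straight line across fingers. The abstract does not spell out the extended 3D formulation, so this uses the standard Shannon form, ID = log2(D/W + 1), as a stand-in. The distances, target width, and function names are hypothetical and are not taken from the paper.

```python
import math


def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def least_squares_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: one tapping point per finger (1 = thumb ... 5 = pinky),
# with made-up reach distances and a fixed 10 mm target width.
finger_order = [1, 2, 3, 4, 5]
distances_mm = [30.0, 50.0, 70.0, 90.0, 110.0]
target_width_mm = 10.0

ids = [index_of_difficulty(d, target_width_mm) for d in distances_mm]
slope, intercept = least_squares_line(finger_order, ids)

print("index of difficulty per finger (bits):", [round(v, 3) for v in ids])
print("regression slope across fingers:", round(slope, 4))
```

A positive slope here simply means that the index of difficulty grows from thumb to pinky in the made-up data; the reported factor of 0.1144 comes from the study's own measurements and formulation.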

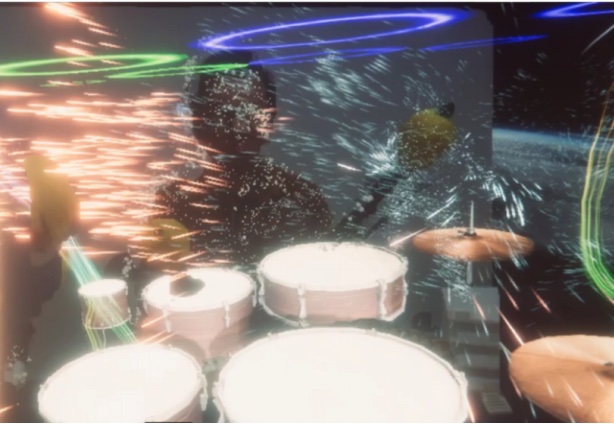

NeuralDrum: Perceiving Brain Synchronicity in XR Drumming

Yun Suen Pai, Ryo Hajika, Kunal Gupta, Prasanth Sasikumar, Mark Billinghurst

Pai, Y. S., Hajika, R., Gupta, K., Sasikumar, P., & Billinghurst, M. (2020). NeuralDrum: Perceiving Brain Synchronicity in XR Drumming. In SIGGRAPH Asia 2020 Technical Communications (pp. 1-4).

@incollection{pai2020neuraldrum,

title={NeuralDrum: Perceiving Brain Synchronicity in XR Drumming},

author={Pai, Yun Suen and Hajika, Ryo and Gupta, Kunal and Sasikumar, Prasanth and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2020 Technical Communications},

pages={1--4},

year={2020}

}

Brain synchronicity is a neurological phenomenon where two or more individuals have their brain activation in phase when performing a shared activity. We present NeuralDrum, an extended reality (XR) drumming experience that allows two players to drum together while their brain signals are simultaneously measured. We calculate the Phase Locking Value (PLV) to determine their brain synchronicity and use this to directly affect their visual and auditory experience in the game, creating a closed feedback loop. In a pilot study, we logged and analysed the users’ brain signals and had them answer a subjective questionnaire regarding their perception of synchronicity with their partner and the overall experience. From the results, we discuss design implications to further improve NeuralDrum and propose methods to integrate brain synchronicity into interactive experiences.
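For readers unfamiliar with the Phase Locking Value, a minimal sketch of its usual definition follows: the magnitude of the mean unit phasor of the phase difference between two signals, which ranges from 0 (no consistent phase relation) to 1 (perfectly locked). The example assumes the instantaneous phases are already available (in practice they are typically extracted from band-pass-filtered EEG, a step omitted here) and uses synthetic phase series. The function name and all values are illustrative, not NeuralDrum's actual pipeline.

```python
import cmath
import math


def phase_locking_value(phases_a, phases_b):
    """PLV = | mean over samples of exp(i * (phase_a - phase_b)) |."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase series must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


# Synthetic phase series in radians (assumed values): 500 samples at 250 Hz.
n_samples, sample_rate = 500, 250.0
player_a = [2 * math.pi * 10.0 * k / sample_rate for k in range(n_samples)]

# Partner oscillating at the same 10 Hz with a constant lag: phase-locked.
locked_b = [p + 0.8 for p in player_a]

# Partner oscillating at 11 Hz: the phase difference keeps drifting.
drifting_b = [2 * math.pi * 11.0 * k / sample_rate for k in range(n_samples)]

plv_locked = phase_locking_value(player_a, locked_b)
plv_drifting = phase_locking_value(player_a, drifting_b)

print("PLV with constant lag:", round(plv_locked, 3))
print("PLV with drifting phase:", round(plv_drifting, 3))
```

Note that a constant lag still counts as locked: PLV measures the consistency of the phase difference, not whether it is zero.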

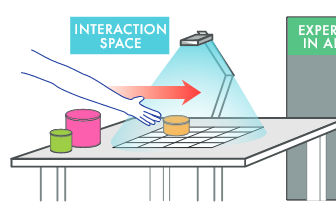

RaITIn: Radar-Based Identification for Tangible Interactions

Tamil Selvan Gunasekaran, Ryo Hajika, Yun Suen Pai, Eiji Hayashi, Mark Billinghurst

Gunasekaran, T. S., Hajika, R., Pai, Y. S., Hayashi, E., & Billinghurst, M. (2022, April). RaITIn: Radar-Based Identification for Tangible Interactions. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gunasekaran2022raitin,

title={RaITIn: Radar-Based Identification for Tangible Interactions},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Pai, Yun Suen and Hayashi, Eiji and Billinghurst, Mark},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

}

Radar is primarily used for applications like tracking and large-scale ranging, and its use for object identification has rarely been explored. This paper introduces RaITIn, a radar-based identification (ID) method for tangible interactions. Unlike conventional radar solutions, RaITIn can track and identify objects on a tabletop scale. We use frequency modulated continuous wave (FMCW) radar sensors to classify different objects embedded with low-cost radar reflectors of varying sizes on a tabletop setup. We also introduce Stackable IDs, where different objects can be stacked and combined to produce unique IDs. As a result, RaITIn can accurately identify visually identical objects embedded with different low-cost reflector configurations. Combined with radar’s tracking ability, this creates novel tabletop interaction modalities. We discuss possible applications and areas for future work.
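To give a sense of why FMCW radar can work at tabletop scale, the sketch below applies two textbook FMCW relations: range resolution c / (2B) for sweep bandwidth B, and range R = c · f_beat / (2 · slope), where slope is bandwidth divided by chirp duration. The bandwidth, chirp duration, and target distance are assumed values chosen for illustration; the abstract does not state the sensor parameters used in RaITIn, and this is not its identification method.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_resolution(bandwidth_hz):
    """Smallest separable range difference for a given sweep bandwidth."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


def beat_frequency_to_range(beat_hz, bandwidth_hz, chirp_duration_s):
    """Convert a measured beat frequency to target range for a linear chirp."""
    chirp_slope = bandwidth_hz / chirp_duration_s  # Hz per second
    return SPEED_OF_LIGHT * beat_hz / (2.0 * chirp_slope)


# Assumed (hypothetical) sensor parameters, not taken from the paper.
bandwidth_hz = 4e9          # 4 GHz sweep
chirp_duration_s = 100e-6   # 100 microsecond chirp

# Beat frequency a reflector 0.5 m away would produce under these assumptions.
target_range_m = 0.5
beat_hz = 2.0 * target_range_m * (bandwidth_hz / chirp_duration_s) / SPEED_OF_LIGHT

print("range resolution (m):", round(range_resolution(bandwidth_hz), 4))
print("recovered range (m):",
      round(beat_frequency_to_range(beat_hz, bandwidth_hz, chirp_duration_s), 4))
```

Under these assumed numbers the resolution is a few centimetres, which is the order of magnitude needed to separate objects on a table.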