Huidong Bai

Research Fellow

Dr. Huidong Bai is a Research Fellow at the Empathic Computing Laboratory (ECL) established within the Auckland Bioengineering Institute (ABI, University of Auckland). His areas of research include exploring remote collaborative Mixed Reality (MR) interfaces with spatial scene reconstruction and segmentation, as well as integrating empathic sensing and computing into the collaboration system to enhance shared communication cues.

Before joining the ECL, he was a Postdoctoral Fellow at the Human Interface Technology Laboratory New Zealand (HIT Lab NZ, University of Canterbury), and focused on multimodal natural interaction for mobile and wearable Augmented Reality (AR).

Dr. Bai received his Ph.D. from the HIT Lab NZ in 2016, supervised by Prof. Mark Billinghurst and Prof. Ramakrishnan Mukundan. During his Ph.D., he was also a software engineer intern in the Vuforia team at Qualcomm in 2013, where he developed a mobile AR teleconferencing system, and he has been an engineering director at the start-up Envisage AR since 2015, developing industrial MR/AR applications.

Projects

-

Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

This research focuses on visualizing shared gaze cues, designing interfaces for collaborative experience, and incorporating multimodal interaction techniques and physiological cues to support empathic Mixed Reality (MR) remote collaboration using HoloLens 2, Vive Pro Eye, Meta Pro, HP Omnicept, Theta V 360 camera, Windows Speech Recognition, Leap Motion hand tracking, and Zephyr/Shimmer sensing technologies.

-

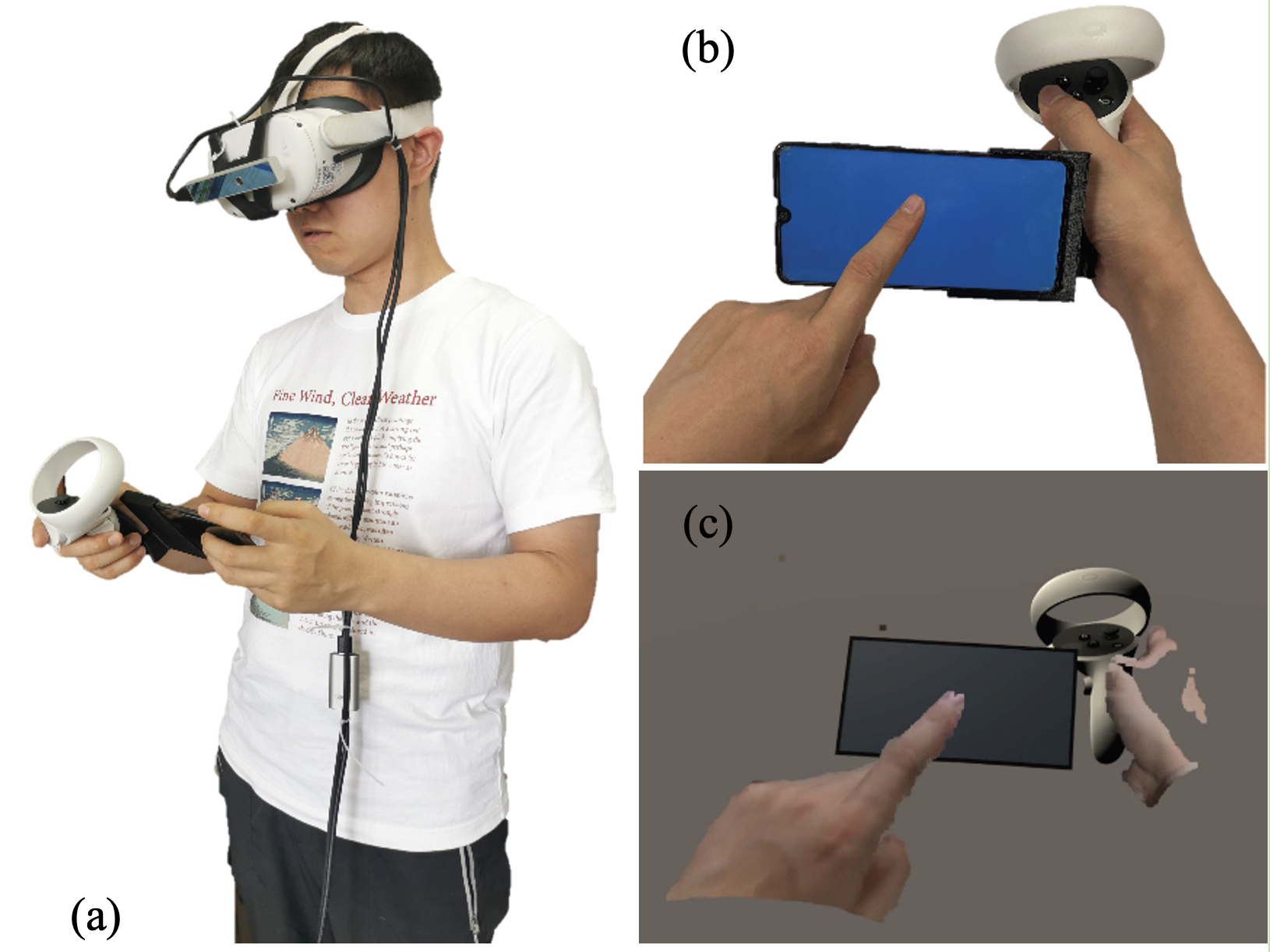

Using a Mobile Phone in VR

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer generated virtual environment. However, a VR HMD also blocks the users’ view of their physical surroundings, and so prevents them from using their mobile phones in a natural manner. In this project, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing his/her 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone.

-

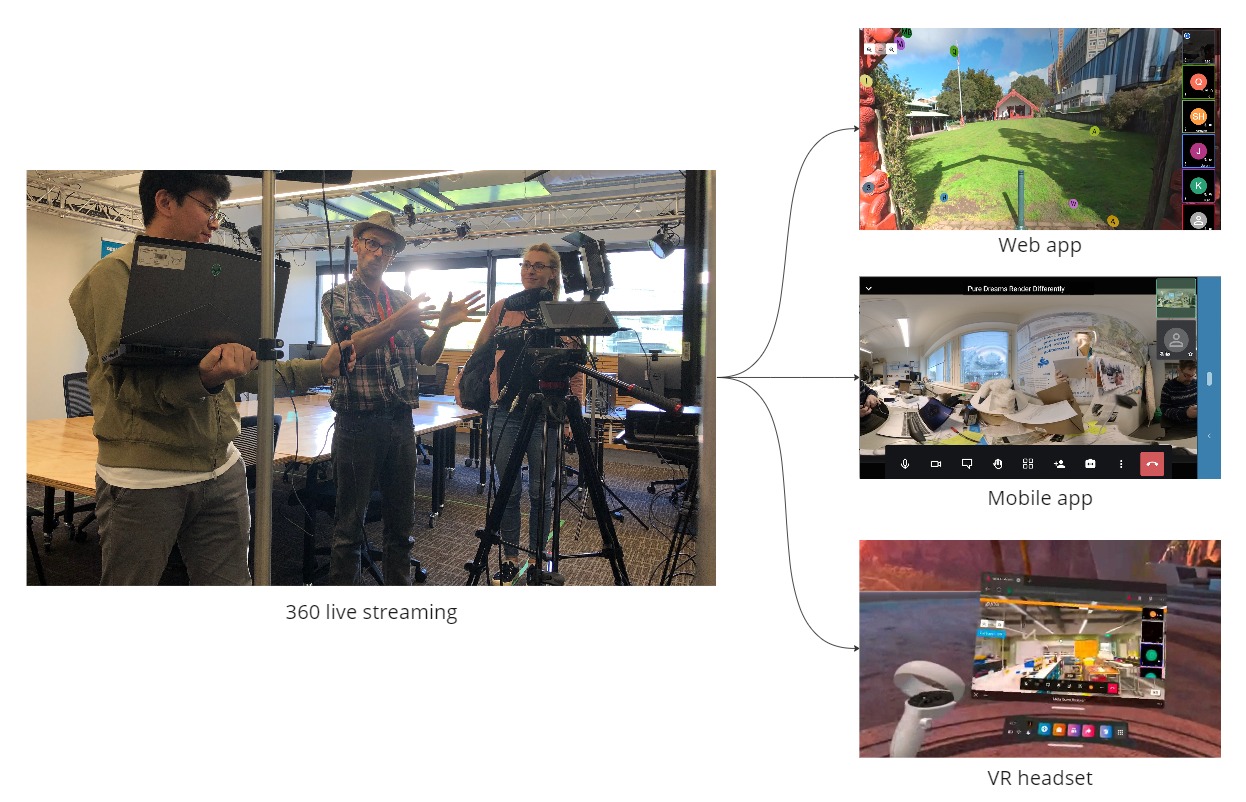

Show Me Around

This project introduces an immersive way to experience a conference call by using a 360° camera to live stream a person’s surroundings to remote viewers. Viewers can freely look around the host's video and get a better understanding of the sender’s surroundings. Viewers can also observe where the other participants are looking, allowing them to better understand the conversation and what people are paying attention to. In a user study of the system, people found it much more immersive than a traditional video conferencing call and reported that they felt transported to a remote location. Possible applications of this system include virtual tourism, education, industrial monitoring, entertainment, and more.

-

Haptic Hongi

This project explores whether XR technologies can help overcome intercultural discomfort by using Augmented Reality (AR) and haptic feedback to present a traditional Māori greeting. Using a HoloLens 2 AR headset, guests see a pre-recorded volumetric virtual video of Tania, a Māori woman, who greets them in a re-imagined, contemporary first encounter between indigenous Māori and newcomers. The visitors, manuhiri, consider their response in the absence of usual social pressures. After a brief introduction, the virtual Tania slowly leans forward, inviting the visitor to ‘hongi’, a pressing together of noses and foreheads in a gesture symbolising “...peace and oneness of thought, purpose, desire, and hope”. This is felt as a haptic response delivered via a custom-made actuator built into the visitor's AR headset.

-

Asymmetric Interaction for VR sketching

This project explores how tool-based asymmetric VR interfaces can be used by artists to create immersive artwork more effectively. Most VR interfaces use two input methods of the same type, such as two handheld controllers or two bare-hand gestures. However, it is common for artists to use a different tool in each hand, such as a pencil and sketch pad. The research involves developing interaction methods that use a different input method in each hand, such as a stylus and gesture. Using this interface, artists can rapidly sketch their designs in VR. User studies are being conducted to compare asymmetric and symmetric interfaces to see which provides the best performance and which users prefer.

Publications

-

Real-time visual representations for mobile mixed reality remote collaboration.

Gao, L., Bai, H., He, W., Billinghurst, M., & Lindeman, R. W. (2018, December). Real-time visual representations for mobile mixed reality remote collaboration. In SIGGRAPH Asia 2018 Virtual & Augmented Reality (p. 15). ACM.

@inproceedings{gao2018real,

title={Real-time visual representations for mobile mixed reality remote collaboration},

author={Gao, Lei and Bai, Huidong and He, Weiping and Billinghurst, Mark and Lindeman, Robert W},

booktitle={SIGGRAPH Asia 2018 Virtual \& Augmented Reality},

pages={15},

year={2018},

organization={ACM}

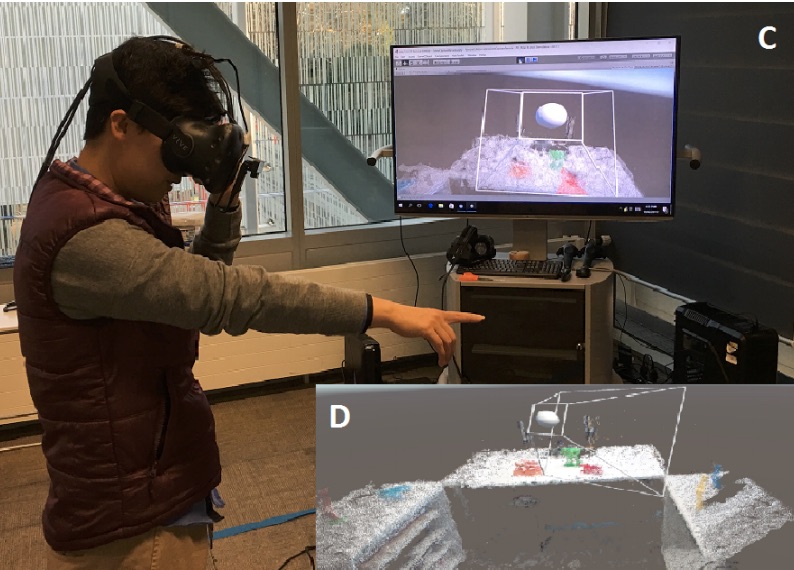

}In this study we present a Mixed-Reality based mobile remote collaboration system that enables an expert providing real-time assistance over a physical distance. By using the Google ARCore position tracking, we can integrate the keyframes captured with one external depth sensor attached to the mobile phone as one single 3D point-cloud data set to present the local physical environment into the VR world. This captured local scene is then wirelessly streamed to the remote side for the expert to view while wearing a mobile VR headset (HTC VIVE Focus). In this case, the remote expert can immerse himself/herself in the VR scene and provide guidance just as sharing the same work environment with the local worker. In addition, the remote guidance is also streamed back to the local side as an AR cue overlaid on top of the local video see-through display. Our proposed mobile remote collaboration system supports a pair of participants performing as one remote expert guiding one local worker on some physical tasks in a more natural and efficient way in a large scale work space from a distance by simulating the face-to-face co-work experience using the Mixed-Reality technique. -

Filtering 3D Shared Surrounding Environments by Social Proximity in AR

Nassani, A., Bai, H., Lee, G., Langlotz, T., Billinghurst, M., & Lindeman, R. W. (2018, October). Filtering 3D Shared Surrounding Environments by Social Proximity in AR. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 123-124). IEEE.

@inproceedings{nassani2018filtering,

title={Filtering 3D Shared Surrounding Environments by Social Proximity in AR},

author={Nassani, Alaeddin and Bai, Huidong and Lee, Gun and Langlotz, Tobias and Billinghurst, Mark and Lindeman, Robert W},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={123--124},

year={2018},

organization={IEEE}

}

In this poster, we explore the social sharing of surrounding environments on wearable Augmented Reality (AR) devices. In particular, we propose filtering the level of detail of the shared surrounding environment based on the social proximity between the viewer and the sharer. We tested the effect of the filter (varying levels of detail) applied to the shared surrounding environment on the sense of privacy from both viewer and sharer perspectives in a pilot study using HoloLens. We report on semi-structured questionnaire results and suggest future directions in the social sharing of surrounding environments.

-

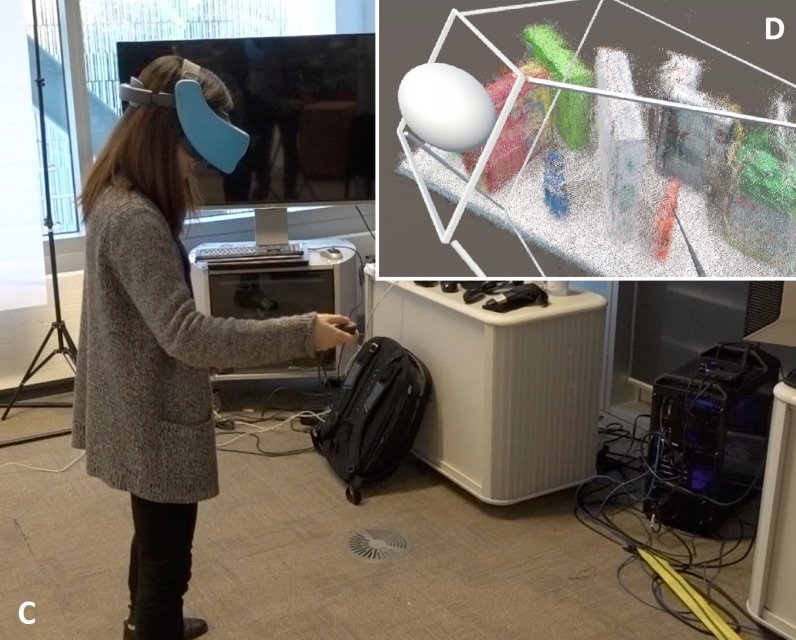

Static local environment capturing and sharing for MR remote collaboration

Gao, L., Bai, H., Lindeman, R., & Billinghurst, M. (2017, November). Static local environment capturing and sharing for MR remote collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 17). ACM.

@inproceedings{gao2017static,

title={Static local environment capturing and sharing for MR remote collaboration},

author={Gao, Lei and Bai, Huidong and Lindeman, Rob and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={17},

year={2017},

organization={ACM}

}

We present a Mixed Reality (MR) system that supports entire scene capturing of the local physical work environment for remote collaboration in a large-scale workspace. By integrating the key-frames captured with an external depth sensor into one single 3D point-cloud data set, our system can reconstruct the entire local physical workspace in the VR world. In this case, the remote helper can observe the local scene independently of the local user's current head and camera position, and provide gesture guiding information even before the local user stares at the target object. We conducted a pilot study to evaluate the usability of the system by comparing it with our previous oriented view system, which only shares the current camera view together with the real-time head orientation data. Our results indicate that this entire scene capturing and sharing system could significantly increase the remote helper's spatial awareness of the local work environment, especially in a large-scale workspace, and was strongly preferred by users (80%) over the previous system.

-

A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing

Huidong Bai, Prasanth Sasikumar, Jing Yang, Mark Billinghurst

Huidong Bai, Prasanth Sasikumar, Jing Yang, and Mark Billinghurst. 2020. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI: https://doi.org/10.1145/3313831.3376550

@inproceedings{bai2020user,

title={A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing},

author={Bai, Huidong and Sasikumar, Prasanth and Yang, Jing and Billinghurst, Mark},

booktitle={Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--13},

year={2020}

}

Supporting natural communication cues is critical for people to work together remotely and face-to-face. In this paper we present a Mixed Reality (MR) remote collaboration system that enables a local worker to share a live 3D panorama of his/her surroundings with a remote expert. The remote expert can also share task instructions back to the local worker using visual cues in addition to verbal communication. We conducted a user study to investigate how sharing augmented gaze and gesture cues from the remote expert to the local worker could affect the overall collaboration performance and user experience. We found that by combining gaze and gesture cues, our remote collaboration system could provide a significantly stronger sense of co-presence for both the local and remote users than using the gaze cue alone. The combined cues were also rated significantly higher than the gaze cue alone in terms of ease of conveying spatial actions.

-

A Constrained Path Redirection for Passive Haptics

Lili Wang; Zixiang Zhao; Xuefeng Yang; Huidong Bai; Amit Barde; Mark Billinghurst

L. Wang, Z. Zhao, X. Yang, H. Bai, A. Barde and M. Billinghurst, "A Constrained Path Redirection for Passive Haptics," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 2020, pp. 651-652, doi: 10.1109/VRW50115.2020.00176.

@inproceedings{wang2020constrained,

title={A Constrained Path Redirection for Passive Haptics},

author={Wang, Lili and Zhao, Zixiang and Yang, Xuefeng and Bai, Huidong and Barde, Amit and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={651--652},

year={2020},

organization={IEEE}

}

Navigation with passive haptic feedback can enhance users’ immersion in virtual environments. We propose a constrained path redirection method to provide users with corresponding haptic feedback at the right time and place. We quantified the practicality of VR exploration in a study, and the results show advantages over the steer-to-center method in terms of presence, and over Steinicke’s method in terms of matching errors and presence.

-

Bringing full-featured mobile phone interaction into virtual reality

Bai, H., Zhang, L., Yang, J., & Billinghurst, M. (2021). Bringing full-featured mobile phone interaction into virtual reality. Computers & Graphics, 97, 42-53.

@article{bai2021bringing,

title={Bringing full-featured mobile phone interaction into virtual reality},

author={Bai, Huidong and Zhang, Li and Yang, Jing and Billinghurst, Mark},

journal={Computers \& Graphics},

volume={97},

pages={42--53},

year={2021},

publisher={Elsevier}

}

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer generated virtual environment. However, a VR HMD also blocks the users’ view of their physical surroundings, and so prevents them from using their mobile phones in a natural manner. In this paper, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing his/her 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone. We conducted a formal user study to compare the AV interface with physical touch interaction on user experience in five mobile applications. Participants reported that our system brought the real mobile phone into the virtual world. Unfortunately, the experiment results indicated that using a phone with our AV interfaces in VR was more difficult than the regular smartphone touch interaction, with increased workload and lower system usability, especially for a typing task. We ran a follow-up study to compare different hand visualizations for text typing using the AV interface. Participants felt that a skin-colored hand visualization method provided better usability and immersiveness than other hand rendering styles.

-

First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand

Mairi Gunn, Mark Billinghurst, Huidong Bai, Prasanth Sasikumar.

Gunn, M., Billinghurst, M., Bai, H., & Sasikumar, P. (2021). First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand. Virtual Creativity, 11(1), 67-90.

@article{gunn2021first,

title={First Contact-Take 2: Using XR technology as a bridge between M{\=a}ori, P{\=a}keh{\=a} and people from other cultures in Aotearoa, New Zealand},

author={Gunn, Mairi and Billinghurst, Mark and Bai, Huidong and Sasikumar, Prasanth},

journal={Virtual Creativity},

volume={11},

number={1},

pages={67--90},

year={2021},

publisher={Intellect}

}

The art installation common/room explores human‐digital‐human encounter across cultural differences. It comprises a suite of extended reality (XR) experiences that use technology as a bridge to help support human connections with a view to overcoming intercultural discomfort (racism). The installations are exhibited as an informal dining room, where each table hosts a distinct experience designed to bring people together in a playful yet meaningful way. Each experience uses different technologies, including 360° 3D virtual reality (VR) in a headset (common/place), 180° 3D projection (Common Sense) and augmented reality (AR) (Come to the Table! and First Contact ‐ Take 2). This article focuses on the latter, First Contact ‐ Take 2, in which visitors are invited to sit at a dining table, wear an AR head-mounted display and encounter a recorded volumetric representation of an Indigenous Māori woman seated opposite them. She speaks directly to the visitor out of a culture that has refined collective endeavour and relational psychology over millennia. The contextual and methodological framework for this research is international commons scholarship and practice that sits within a set of relationships outlined by the Mātike Mai Report on constitutional transformation for Aotearoa, New Zealand. The goal is to practise and build new relationships between Māori and Tauiwi, including Pākehā.

-

ShowMeAround: Giving Virtual Tours Using Live 360 Video

Alaeddin Nassani, Li Zhang, Huidong Bai, Mark Billinghurst.

Nassani, A., Zhang, L., Bai, H., & Billinghurst, M. (2021, May). ShowMeAround: Giving Virtual Tours Using Live 360 Video. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{nassani2021showmearound,

title={ShowMeAround: Giving Virtual Tours Using Live 360 Video},

author={Nassani, Alaeddin and Zhang, Li and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

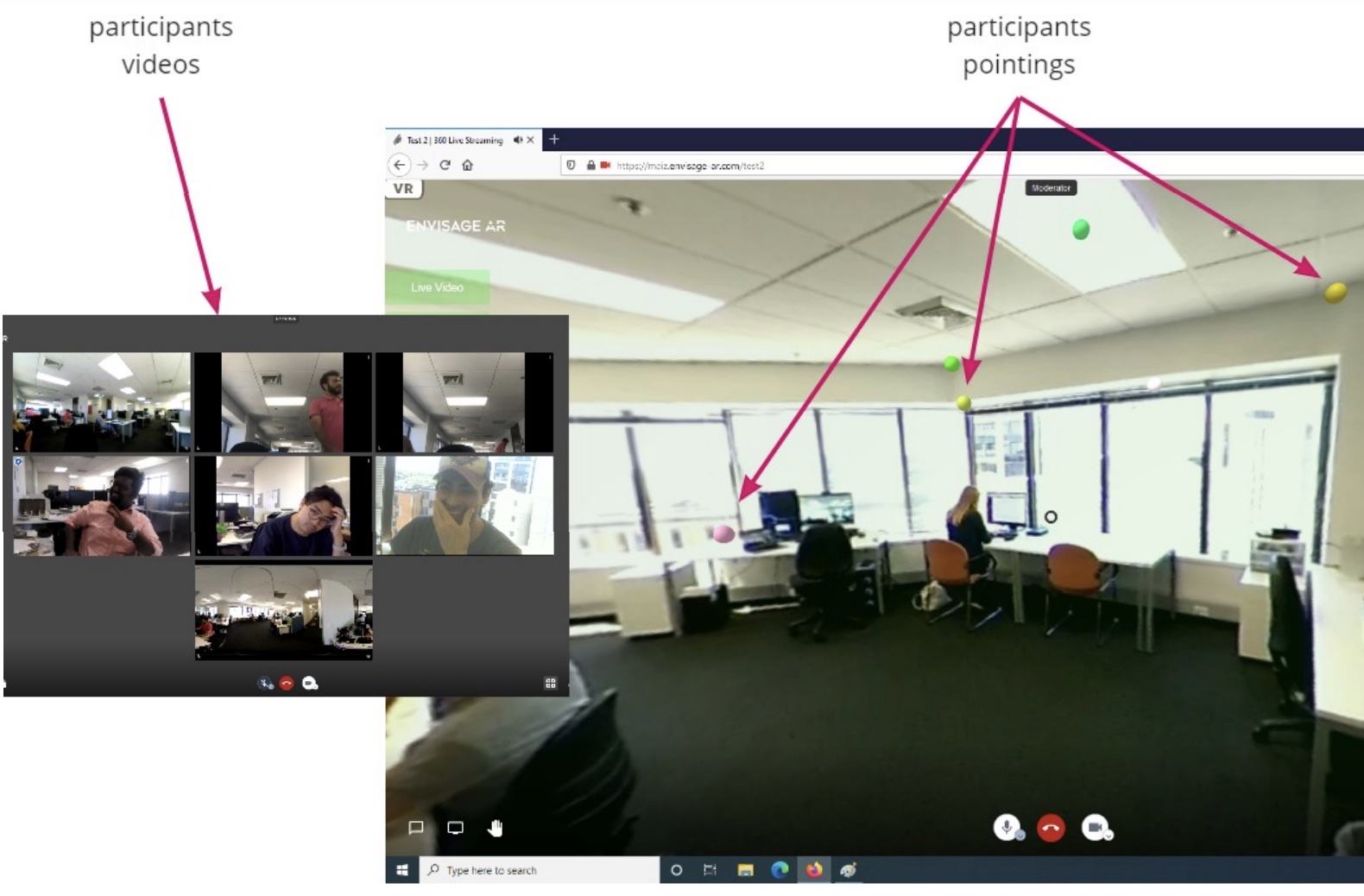

}This demonstration presents ShowMeAround, a video conferencing system designed to allow people to give virtual tours over live 360-video. Using ShowMeAround a host presenter walks through a real space and can live stream a 360-video view to a small group of remote viewers. The ShowMeAround interface has features such as remote pointing and viewpoint awareness to support natural collaboration between the viewers and host presenter. The system also enables sharing of pre-recorded high resolution 360 video and still images to further enhance the virtual tour experience. -

XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing

Prasanth Sasikumar, Max Collins, Huidong Bai, Mark Billinghurst.

Sasikumar, P., Collins, M., Bai, H., & Billinghurst, M. (2021, May). XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{sasikumar2021xrtb,

title={XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing},

author={Sasikumar, Prasanth and Collins, Max and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

}

We present XRTeleBridge (XRTB), an application that integrates a Mixed Reality (MR) interface into existing teleconferencing solutions like Zoom. Unlike a conventional webcam, XRTB provides a window into the virtual world to demonstrate and visualize content. Participants can join via webcam or via head mounted display (HMD) in a Virtual Reality (VR) environment. It enables users to embody 3D avatars with natural gestures and eye gaze. A camera in the virtual environment operates as a video feed to the teleconferencing software. An interface resembling a tablet mirrors the teleconferencing window inside the virtual environment, thus enabling the participant in the VR environment to see the webcam participants in real-time. This allows the presenter to view and interact with other participants seamlessly. To demonstrate the system’s functionalities, we created a virtual chemistry lab environment and presented an example lesson using the virtual space and virtual objects and effects.

-

HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality

Zhang, L., He, W., Cao, Z., Wang, S., Bai, H., & Billinghurst, M. (2022). HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality. International Journal of Human–Computer Interaction, 1-15.

@article{zhang2022hapticproxy,

title={HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality},

author={Zhang, Li and He, Weiping and Cao, Zhiwei and Wang, Shuxia and Bai, Huidong and Billinghurst, Mark},

journal={International Journal of Human--Computer Interaction},

pages={1--15},

year={2022},

publisher={Taylor \& Francis}

}

Consistent visual and haptic feedback is an important way to improve the user experience when interacting with virtual objects. However, the perception provided in Augmented Reality (AR) mainly comes from visual cues and amorphous tactile feedback. This work explores how to simulate positional vibrotactile feedback (PVF) with multiple vibration motors when colliding with virtual objects in AR. By attaching spatially distributed vibration motors on a physical haptic proxy, users can obtain an augmented collision experience with positional vibration sensations from the contact point with virtual objects. We first developed a prototype system and conducted a user study to optimize the design parameters. Then we investigated the effect of PVF on user performance and experience in a virtual and real object alignment task in the AR environment. We found that this approach could significantly reduce the alignment offset between virtual and physical objects with tolerable task completion time increments. With the PVF cue, participants obtained a more comprehensive perception of the offset direction, more useful information, and a more authentic AR experience.

-

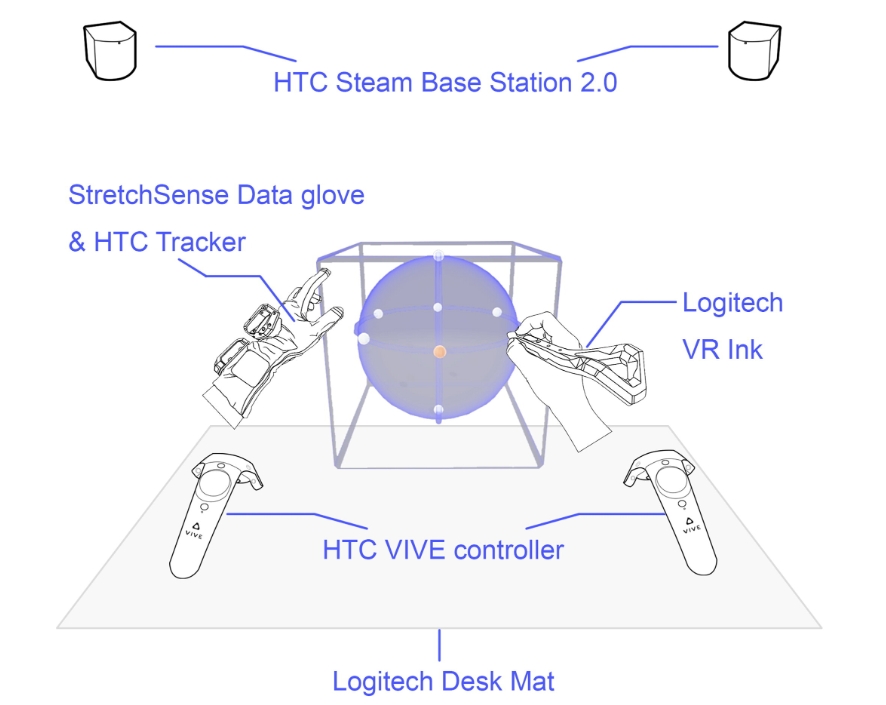

Asymmetric interfaces with stylus and gesture for VR sketching

Qianyuan Zou; Huidong Bai; Lei Gao; Allan Fowler; Mark Billinghurst

Zou, Q., Bai, H., Gao, L., Fowler, A., & Billinghurst, M. (2022, March). Asymmetric interfaces with stylus and gesture for VR sketching. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 968-969). IEEE.

@inproceedings{zou2022asymmetric,

title={Asymmetric interfaces with stylus and gesture for VR sketching},

author={Zou, Qianyuan and Bai, Huidong and Gao, Lei and Fowler, Allan and Billinghurst, Mark},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={968--969},

year={2022},

organization={IEEE}

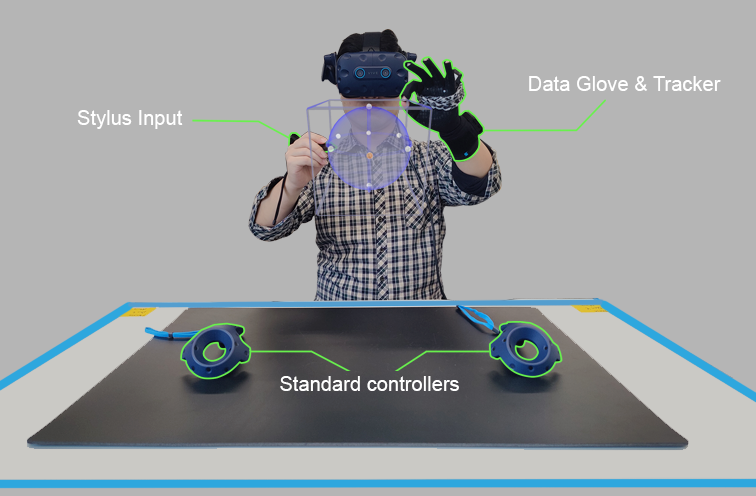

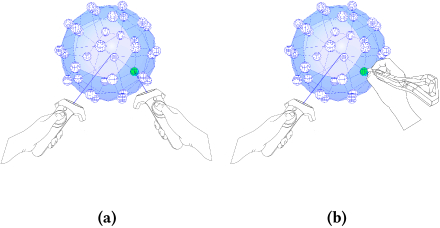

}Virtual Reality (VR) can be used for design and artistic applications. However, traditional symmetrical input devices are not specifically designed as creative tools and may not fully meet artist needs. In this demonstration, we present a variety of tool-based asymmetric VR interfaces to assist artists to create artwork with better performance and easier effort. These interaction methods allow artists to hold different tools in their hands, such as wearing a data glove on the left hand and holding a stylus in the right-hand. We demonstrate this by showing a stylus and glove based sketching interface. We conducted a pilot study showing that most users prefer to create art with different tools in both hands. -

Tool-based asymmetric interaction for selection in VR.

Qianyuan Zou; Huidong Bai; Yuewei Zhang; Gun Lee; Allan Fowler; Mark Billinghurst

Zou, Q., Bai, H., Zhang, Y., Lee, G., Fowler, A., & Billinghurst, M. (2021). Tool-based asymmetric interaction for selection in VR. In SIGGRAPH Asia 2021 Technical Communications (pp. 1-4).

@incollection{zou2021tool,

title={Tool-based asymmetric interaction for selection in vr},

author={Zou, Qianyuan and Bai, Huidong and Zhang, Yuewei and Lee, Gun and Fowler, Allan and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2021 Technical Communications},

pages={1--4},

year={2021}

}

View: https://dl.acm.org/doi/abs/10.1145/3478512.3488615

Video: https://www.youtube.com/watch?v=FVk5lWtntGk

Mainstream Virtual Reality (VR) devices on the market nowadays mostly use symmetric interaction design for input, yet common practice by artists suggests asymmetric interaction using different input tools in each hand could be a better alternative for 3D modeling tasks in VR. In this paper, we explore the performance and usability of a tool-based asymmetric interaction method for a 3D object selection task in VR and compare it with a symmetric interface. The symmetric VR interface uses two identical handheld controllers to select points on a sphere, while the asymmetric interface uses a handheld controller and a stylus. We conducted a user study to compare these two interfaces and found that the asymmetric system was faster, required less workload, and was rated with better usability. We also discuss the opportunities for tool-based asymmetric input to optimize VR art workflows and future research directions.

-

First Contact-Take 2 Using XR to Overcome Intercultural Discomfort (racism)

Gunn, M., Sasikumar, P., & Bai, H.

-

Come to the Table! Haere mai ki te tēpu!.

Gunn, M., Bai, H., & Sasikumar, P.

-

Jitsi360: Using 360 images for live tours.

Nassani, A., Bai, H., & Billinghurst, M.

-

Designing, Prototyping and Testing of 360-degree Spatial Audio Conferencing for Virtual Tours.

Nassani, A., Barde, A., Bai, H., Nanayakkara, S., & Billinghurst, M.

-

Implementation of Attention-Based Spatial Audio for 360° Environments.

Nassani, A., Barde, A., Bai, H., Nanayakkara, S., & Billinghurst, M.

-

LightSense-Long Distance

Uwe Rieger, Yinan Liu, Tharindu Kaluarachchi, Amit Barde, Huidong Bai, Alaeddin Nassani, Suranga Nanayakkara, Mark Billinghurst.

Rieger, U., Liu, Y., Kaluarachchi, T., Barde, A., Bai, H., Nassani, A., ... & Billinghurst, M. (2023). LightSense-Long Distance. In ACM SIGGRAPH Asia 2023 Art Gallery (pp. 1-2).

@incollection{rieger2023lightsense,

title={LightSense-Long Distance},

author={Rieger, Uwe and Liu, Yinan and Kaluarachchi, Tharindu and Barde, Amit and Bai, Huidong and Nassani, Alaeddin and Nanayakkara, Suranga and Billinghurst, Mark},

booktitle={ACM SIGGRAPH Asia 2023 Art Gallery},

pages={1--2},

year={2023}

}

'LightSense - Long Distance' explores remote interaction with architectural space. It is a virtual extension of the project 'LightSense,' which is currently presented at the exhibition 'Cyber Physical: Architecture in Real Time' at EPFL Pavilions in Switzerland. Using numerous VR headsets, the setup at the Art Gallery at SIGGRAPH Asia establishes a direct connection between both exhibition sites in Sydney and Lausanne.

'LightSense' at EPFL Pavilions is an immersive installation that allows the audience to engage in intimate interaction with a living architectural body. It consists of a 12-meter-long construction that combines a lightweight structure with projected 3D holographic animations. At its core sits a neural network, which has been trained on sixty thousand poems. This allows the structure to engage, lead, and sustain conversations with the visitor. Its responses are truly associative, unpredictable, meaningful, magical, and deeply emotional. Analysing the emotional tenor of the conversation, 'LightSense' can transform into a series of hybrid architectural volumes, immersing the visitors in Pavilions of Love, Anger, Curiosity, and Joy.

'LightSense's' physical construction is linked to a digital twin. Movement, holographic animations, sound, and text responses are controlled by the cloud-based AI system. This combination creates a location-independent cyber-physical system. As such, the 'Long Distance' version, which premiered at SIGGRAPH Asia, enables the visitors in Sydney to directly engage with the physical setup in Lausanne. Using VR headsets with a new 360-degree 4K live streaming system, the visitors find themselves teleported to face 'LightSense', able to engage in a direct conversation with the structure on-site.

'LightSense - Long Distance' leaves behind the notion of architecture being a place-bound and static environment. Instead, it points toward the next generation of responsive buildings that transcend space, are capable of dynamic behaviour, and able to accompany their visitors as creative partners.

-

Stylus and Gesture Asymmetric Interaction for Fast and Precise Sketching in Virtual Reality

Qianyuan Zou, Huidong Bai, Lei Gao, Gun A. Lee, Allan Fowler & Mark Billinghurst

Zou, Q., Bai, H., Gao, L., Lee, G. A., Fowler, A., & Billinghurst, M. (2024). Stylus and Gesture Asymmetric Interaction for Fast and Precise Sketching in Virtual Reality. International Journal of Human–Computer Interaction, 1-18.

@article{zou2024stylus,

title={Stylus and Gesture Asymmetric Interaction for Fast and Precise Sketching in Virtual Reality},

author={Zou, Qianyuan and Bai, Huidong and Gao, Lei and Lee, Gun A and Fowler, Allan and Billinghurst, Mark},

journal={International Journal of Human--Computer Interaction},

pages={1--18},

year={2024},

publisher={Taylor \& Francis}

}

This research investigates fast and precise Virtual Reality (VR) sketching methods with different tool-based asymmetric interfaces. In traditional real-world drawing, artists commonly employ an asymmetric interaction system where each hand holds different tools, facilitating diverse and nuanced artistic expressions. However, in virtual reality (VR), users are typically limited to using identical tools in both hands for drawing. To bridge this gap, we aim to introduce specifically designed tools in VR that replicate the varied tool configurations found in the real world. Hence, we developed a VR sketching system supporting three hybrid input techniques using a standard VR controller, a VR stylus, or a data glove. We conducted a formal user study consisting of an internal comparative experiment with four conditions and three tasks to compare three asymmetric input methods with each other and with a traditional symmetric controller-based solution based on questionnaires and performance evaluations. The results showed that in contrast to symmetric dual VR controller interfaces, the asymmetric input with gestures significantly reduced task completion times while maintaining good usability and input accuracy with a low task workload. This shows the value of asymmetric input methods for VR sketching. We also found that the overall user experience could be further improved by optimizing the tracking stability of the data glove and the VR stylus.

-

Cognitive Load Measurement with Physiological Sensors in Virtual Reality during Physical Activity

Mohammad Ahmadi, Samantha W. Michalka, Sabrina Lenzoni, Marzieh Ahmadi Najafabadi, Huidong Bai, Alexander Sumich, Burkhard Wuensche, and Mark Billinghurst.

Ahmadi, M., Michalka, S. W., Lenzoni, S., Ahmadi Najafabadi, M., Bai, H., Sumich, A., ... & Billinghurst, M. (2023, October). Cognitive Load Measurement with Physiological Sensors in Virtual Reality during Physical Activity. In Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology (pp. 1-11).

@inproceedings{ahmadi2023cognitive,

title={Cognitive Load Measurement with Physiological Sensors in Virtual Reality during Physical Activity},

author={Ahmadi, Mohammad and Michalka, Samantha W and Lenzoni, Sabrina and Ahmadi Najafabadi, Marzieh and Bai, Huidong and Sumich, Alexander and Wuensche, Burkhard and Billinghurst, Mark},

booktitle={Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2023}

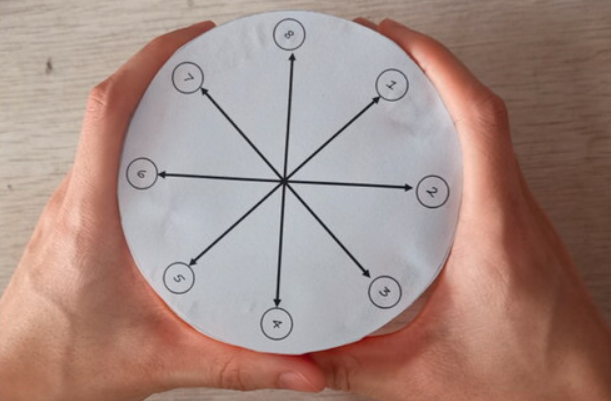

}Many Virtual Reality (VR) experiences, such as learning tools, would benefit from utilising mental states such as cognitive load. Increases in cognitive load (CL) are often reflected in the alteration of physiological responses, such as pupil dilation (PD), electrodermal cctivity (EDA), heart rate (HR), and electroencephalography (EEG). However, the relationship between these physiological responses and cognitive load are usually measured while participants sit in front of a computer screen, whereas VR environments often require a high degree of physical movement. This physical activity can affect the measured signals, making it unclear how suitable these measures are for use in interactive Virtual Reality (VR). We investigate the suitability of four physiological measures as correlates of cognitive load in interactive VR. Suitable measures must be robust enough to allow the learner to move within VR and be temporally responsive enough to be a useful metric for adaptation. We recorded PD, EDA, HR, and EEG data from nineteen participants during a sequence memory task at varying levels of cognitive load using VR, while in the standing position and using their dominant arm to play a game. We observed significant linear relationships between cognitive load and PD, EDA, and EEG frequency band power, but not HR. PD showed the most reliable relationship but has a slower response rate than EEG. Our results suggest the potential for use of PD, EDA, and EEG in this type of interactive VR environment, but additional studies will be needed to assess feasibility under conditions of greater movement. -

A hybrid 2D–3D tangible interface combining a smartphone and controller for virtual reality

Li Zhang, Weiping He, Huidong Bai, Qianyuan Zou, Shuxia Wang, and Mark Billinghurst.

Zhang, L., He, W., Bai, H., Zou, Q., Wang, S., & Billinghurst, M. (2023). A hybrid 2D–3D tangible interface combining a smartphone and controller for virtual reality. Virtual Reality, 27(2), 1273-1291.

@article{zhang2023hybrid,

title={A hybrid 2D--3D tangible interface combining a smartphone and controller for virtual reality},

author={Zhang, Li and He, Weiping and Bai, Huidong and Zou, Qianyuan and Wang, Shuxia and Billinghurst, Mark},

journal={Virtual Reality},

volume={27},

number={2},

pages={1273--1291},

year={2023},

publisher={Springer}

}

Virtual reality (VR) controllers are widely used for 3D virtual object selection and manipulation in immersive virtual worlds, while touchscreen-based devices like smartphones or tablets provide precise 2D tangible input. However, VR controllers and touchscreens are used separately in most cases. This research physically integrates a VR controller and a smartphone to create a hybrid 2D–3D tangible interface for VR interactions, combining the strengths of both devices. The hybrid interface inherits physical buttons, 3D tracking, and spatial input from the VR controller while having tangible feedback, 2D precise input, and content display from the smartphone’s touchscreen. We review the capabilities of VR controllers and smartphones to summarize design principles and then present a design space with nine typical interaction paradigms for the hybrid interface. We developed an interactive prototype and three application modes to demonstrate the combination of individual interaction paradigms in various VR scenarios. We conducted a formal user study through a guided walkthrough to evaluate the usability of the hybrid interface. The results were positive, with participants reporting above-average usability and rating the system as excellent on four out of six user experience questionnaire scales. We also describe two use cases to demonstrate the potential of the hybrid interface.

-

The impact of virtual agents’ multimodal communication on brain activity and cognitive load in virtual reality

Zhuang Chang, Huidong Bai, Li Zhang, Kunal Gupta, Weiping He, and Mark Billinghurst.

Chang, Z., Bai, H., Zhang, L., Gupta, K., He, W., & Billinghurst, M. (2022). The impact of virtual agents’ multimodal communication on brain activity and cognitive load in virtual reality. Frontiers in Virtual Reality, 3, 995090.

@article{chang2022impact,

title={The impact of virtual agents’ multimodal communication on brain activity and cognitive load in virtual reality},

author={Chang, Zhuang and Bai, Huidong and Zhang, Li and Gupta, Kunal and He, Weiping and Billinghurst, Mark},

journal={Frontiers in Virtual Reality},

volume={3},

pages={995090},

year={2022},

publisher={Frontiers Media SA}

}

Related research has shown that collaborating with Intelligent Virtual Agents (IVAs) embodied in Augmented Reality (AR) or Virtual Reality (VR) can improve task performance and reduce task load. Human cognition and behaviors are controlled by brain activities, which can be captured and reflected by Electroencephalogram (EEG) signals. However, little research has been done to understand users’ cognition and behaviors using EEG while interacting with IVAs embodied in AR and VR environments. In this paper, we investigate the impact of the virtual agent’s multimodal communication in VR on users’ EEG signals as measured by alpha band power. We develop a desert survival game where the participants make decisions collaboratively with the virtual agent in VR. We evaluate three different communication methods based on a within-subject pilot study: 1) a Voice-only Agent, 2) an Embodied Agent with speech and gaze, and 3) a Gestural Agent with a gesture pointing at the object while talking about it. No significant difference was found in the EEG alpha band power. However, the alpha band ERD/ERS calculated around the moment when the virtual agent started speaking indicated that providing a virtual body for the sudden speech could avoid the abrupt attentional demand when the agent started speaking. Moreover, a sudden gesture coupled with the speech induced more attentional demands, even though the speech was matched with the virtual body. This work is the first to explore the impact of IVAs’ interaction methods in VR on users’ brain activity, and our findings contribute to IVA interaction design.

-

Using Virtual Replicas to Improve Mixed Reality Remote Collaboration

Huayuan Tian, Gun A. Lee, Huidong Bai, and Mark Billinghurst.

Tian, H., Lee, G. A., Bai, H., & Billinghurst, M. (2023). Using virtual replicas to improve mixed reality remote collaboration. IEEE Transactions on Visualization and Computer Graphics, 29(5), 2785-2795.

@article{tian2023using,

title={Using virtual replicas to improve mixed reality remote collaboration},

author={Tian, Huayuan and Lee, Gun A and Bai, Huidong and Billinghurst, Mark},

journal={IEEE Transactions on Visualization and Computer Graphics},

volume={29},

number={5},

pages={2785--2795},

year={2023},

publisher={IEEE}

}

In this paper, we explore how virtual replicas can enhance Mixed Reality (MR) remote collaboration with a 3D reconstruction of the task space. People in different locations may need to work together remotely on complicated tasks. For example, a local user could follow a remote expert's instructions to complete a physical task. However, it could be challenging for the local user to fully understand the remote expert's intentions without effective spatial referencing and action demonstration. In this research, we investigate how virtual replicas can work as a spatial communication cue to improve MR remote collaboration. This approach segments the foreground manipulable objects in the local environment and creates corresponding virtual replicas of physical task objects. The remote user can then manipulate these virtual replicas to explain the task and guide their partner. This enables the local user to rapidly and accurately understand the remote expert's intentions and instructions. Our user study with an object assembly task found that using virtual replica manipulation was more efficient than using 3D annotation drawing in an MR remote collaboration scenario. We report and discuss the findings and limitations of our system and study, and present directions for future research.

-

Hapticproxy: Providing positional vibrotactile feedback on a physical proxy for virtual-real interaction in augmented reality

Li Zhang, Weiping He, Zhiwei Cao, Shuxia Wang, Huidong Bai, and Mark Billinghurst.

Zhang, L., He, W., Cao, Z., Wang, S., Bai, H., & Billinghurst, M. (2023). Hapticproxy: Providing positional vibrotactile feedback on a physical proxy for virtual-real interaction in augmented reality. International Journal of Human–Computer Interaction, 39(3), 449-463.

@article{zhang2023hapticproxy,

title={Hapticproxy: Providing positional vibrotactile feedback on a physical proxy for virtual-real interaction in augmented reality},

author={Zhang, Li and He, Weiping and Cao, Zhiwei and Wang, Shuxia and Bai, Huidong and Billinghurst, Mark},

journal={International Journal of Human--Computer Interaction},

volume={39},

number={3},

pages={449--463},

year={2023},

publisher={Taylor \& Francis}

}

Consistent visual and haptic feedback is an important way to improve the user experience when interacting with virtual objects. However, the perception provided in Augmented Reality (AR) mainly comes from visual cues and amorphous tactile feedback. This work explores how to simulate positional vibrotactile feedback (PVF) with multiple vibration motors when colliding with virtual objects in AR. By attaching spatially distributed vibration motors on a physical haptic proxy, users can obtain an augmented collision experience with positional vibration sensations from the contact point with virtual objects. We first developed a prototype system and conducted a user study to optimize the design parameters. Then we investigated the effect of PVF on user performance and experience in a virtual and real object alignment task in the AR environment. We found that this approach could significantly reduce the alignment offset between virtual and physical objects with tolerable task completion time increments. With the PVF cue, participants obtained a more comprehensive perception of the offset direction, more useful information, and a more authentic AR experience.

-

Robot-enabled tangible virtual assembly with coordinated midair object placement

Li Zhang, Yizhe Liu, Huidong Bai, Qianyuan Zou, Zhuang Chang, Weiping He, Shuxia Wang, and Mark Billinghurst.

Zhang, L., Liu, Y., Bai, H., Zou, Q., Chang, Z., He, W., ... & Billinghurst, M. (2023). Robot-enabled tangible virtual assembly with coordinated midair object placement. Robotics and Computer-Integrated Manufacturing, 79, 102434.

@article{zhang2023robot,

title={Robot-enabled tangible virtual assembly with coordinated midair object placement},

author={Zhang, Li and Liu, Yizhe and Bai, Huidong and Zou, Qianyuan and Chang, Zhuang and He, Weiping and Wang, Shuxia and Billinghurst, Mark},

journal={Robotics and Computer-Integrated Manufacturing},

volume={79},

pages={102434},

year={2023},

publisher={Elsevier}

}

Li Zhang, Yizhe Liu, Huidong Bai, Qianyuan Zou, Zhuang Chang, Weiping He, Shuxia Wang, and Mark Billinghurst.