Prasanth Sasikumar

PhD Student

Prasanth Sasikumar is a PhD candidate with particular interests in multimodal input for remote collaboration and in scene reconstruction. He received his Master’s degree in Human-Computer Interaction from the University of Canterbury in 2017. For his Master’s thesis he worked on incorporating wearable and non-wearable haptic devices in VR, sponsored by MBIE as part of the NZ/Korea Human-Digital Content Interaction for Immersive 4D Home Entertainment project.

Prasanth has a keen interest in VR and AR applications and in how they can help industry solve problems. He is currently doing his PhD research under the supervision of Prof. Mark Billinghurst and Dr. Huidong Bai in the Empathic Computing Lab at the University of Auckland.

Website: https://www.prasanthsasikumar.com/

Projects

-

Haptic Hongi

This project explores whether XR technologies can help overcome intercultural discomfort by using Augmented Reality (AR) and haptic feedback to present a traditional Māori greeting. Using a HoloLens 2 AR headset, guests see a pre-recorded volumetric virtual video of Tania, a Māori woman, who greets them in a re-imagined, contemporary first encounter between indigenous Māori and newcomers. The visitors, manuhiri, consider their response in the absence of the usual social pressures. After a brief introduction, the virtual Tania slowly leans forward, inviting the visitor to ‘hongi’, a pressing together of noses and foreheads in a gesture symbolising “...peace and oneness of thought, purpose, desire, and hope”. This is felt as a haptic response delivered via a custom-made actuator built into the visitor’s AR headset.

Publications

-

A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing

Huidong Bai, Prasanth Sasikumar, Jing Yang, Mark Billinghurst

Huidong Bai, Prasanth Sasikumar, Jing Yang, and Mark Billinghurst. 2020. A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–13. DOI: https://doi.org/10.1145/3313831.3376550

@inproceedings{bai2020user,

title={A User Study on Mixed Reality Remote Collaboration with Eye Gaze and Hand Gesture Sharing},

author={Bai, Huidong and Sasikumar, Prasanth and Yang, Jing and Billinghurst, Mark},

booktitle={Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--13},

year={2020}

}

Supporting natural communication cues is critical for people to work together remotely and face-to-face. In this paper we present a Mixed Reality (MR) remote collaboration system that enables a local worker to share a live 3D panorama of his/her surroundings with a remote expert. The remote expert can also share task instructions back to the local worker using visual cues in addition to verbal communication. We conducted a user study to investigate how sharing augmented gaze and gesture cues from the remote expert to the local worker could affect the overall collaboration performance and user experience. We found that by combining gaze and gesture cues, our remote collaboration system could provide a significantly stronger sense of co-presence for both the local and remote users than using the gaze cue alone. The combined cues were also rated significantly higher than the gaze cue alone in terms of ease of conveying spatial actions.

-

First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand

Mairi Gunn, Mark Billinghurst, Huidong Bai, Prasanth Sasikumar

Gunn, M., Billinghurst, M., Bai, H., & Sasikumar, P. (2021). First Contact‐Take 2: Using XR technology as a bridge between Māori, Pākehā and people from other cultures in Aotearoa, New Zealand. Virtual Creativity, 11(1), 67-90.

@article{gunn2021first,

title={First Contact-Take 2: Using XR technology as a bridge between M{\=a}ori, P{\=a}keh{\=a} and people from other cultures in Aotearoa, New Zealand},

author={Gunn, Mairi and Billinghurst, Mark and Bai, Huidong and Sasikumar, Prasanth},

journal={Virtual Creativity},

volume={11},

number={1},

pages={67--90},

year={2021},

publisher={Intellect}

}

The art installation common/room explores human‐digital‐human encounter across cultural differences. It comprises a suite of extended reality (XR) experiences that use technology as a bridge to help support human connections with a view to overcoming intercultural discomfort (racism). The installations are exhibited as an informal dining room, where each table hosts a distinct experience designed to bring people together in a playful yet meaningful way. Each experience uses different technologies, including 360° 3D virtual reality (VR) in a headset (common/place), 180° 3D projection (Common Sense) and augmented reality (AR) (Come to the Table! and First Contact ‐ Take 2). This article focuses on the latter, First Contact ‐ Take 2, in which visitors are invited to sit at a dining table, wear an AR head-mounted display and encounter a recorded volumetric representation of an Indigenous Māori woman seated opposite them. She speaks directly to the visitor out of a culture that has refined collective endeavour and relational psychology over millennia. The contextual and methodological framework for this research is international commons scholarship and practice that sits within a set of relationships outlined by the Mātike Mai Report on constitutional transformation for Aotearoa, New Zealand. The goal is to practise and build new relationships between Māori and Tauiwi, including Pākehā.

-

XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing

Prasanth Sasikumar, Max Collins, Huidong Bai, Mark Billinghurst

Sasikumar, P., Collins, M., Bai, H., & Billinghurst, M. (2021, May). XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{sasikumar2021xrtb,

title={XRTB: A Cross Reality Teleconference Bridge to incorporate 3D interactivity to 2D Teleconferencing},

author={Sasikumar, Prasanth and Collins, Max and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

}

We present XRTeleBridge (XRTB), an application that integrates a Mixed Reality (MR) interface into existing teleconferencing solutions like Zoom. Unlike a conventional webcam, XRTB provides a window into the virtual world to demonstrate and visualize content. Participants can join via webcam or via a head-mounted display (HMD) in a Virtual Reality (VR) environment. It enables users to embody 3D avatars with natural gestures and eye gaze. A camera in the virtual environment operates as a video feed to the teleconferencing software. An interface resembling a tablet mirrors the teleconferencing window inside the virtual environment, thus enabling the participant in the VR environment to see the webcam participants in real time. This allows the presenter to view and interact with other participants seamlessly. To demonstrate the system’s functionalities, we created a virtual chemistry lab environment and presented an example lesson using the virtual space and virtual objects and effects.

-

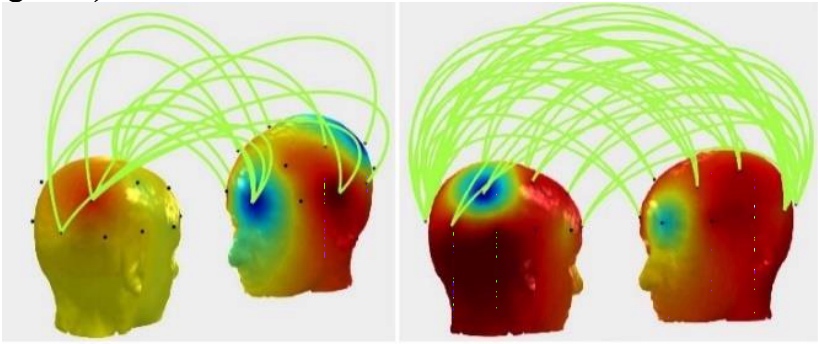

Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning

Amit Barde, Nastaran Saffaryazdi, P. Withana, N. Patel, Prasanth Sasikumar, Mark Billinghurst

Barde, A., Saffaryazdi, N., Withana, P., Patel, N., Sasikumar, P., & Billinghurst, M. (2019, October). Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning. In 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 338-339). IEEE.

@inproceedings{barde2019inter,

title={Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning},

author={Barde, Amit and Saffaryazdi, Nastaran and Withana, Pawan and Patel, Nakul and Sasikumar, Prasanth and Billinghurst, Mark},

booktitle={2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={338--339},

year={2019},

organization={IEEE}

}

Inter-brain connectivity between pairs of people was explored during a finger-tracking task in the real world and in Virtual Reality (VR). This was facilitated by the use of a dual EEG set-up that allowed us to use hyperscanning to simultaneously record the neural activity of both participants. We found that similar levels of inter-brain synchrony can be elicited in the real world and in VR for the same task. This is the first time that hyperscanning has been used to compare brain activity for the same task performed in real and virtual environments.

-

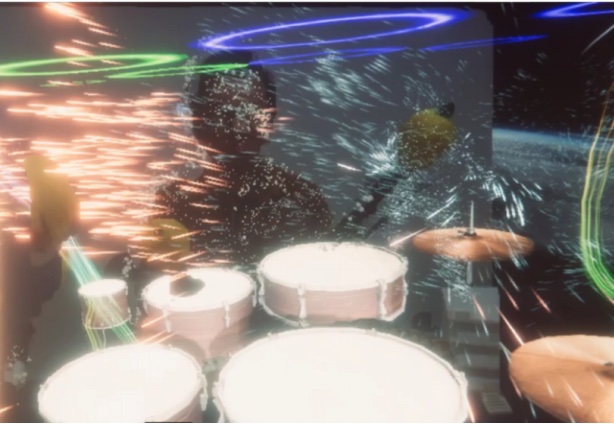

NeuralDrum: Perceiving Brain Synchronicity in XR Drumming

Y. S. Pai, Ryo Hajika, Kunal Gupta, Prasanth Sasikumar, Mark Billinghurst

Pai, Y. S., Hajika, R., Gupta, K., Sasikumar, P., & Billinghurst, M. (2020). NeuralDrum: Perceiving Brain Synchronicity in XR Drumming. In SIGGRAPH Asia 2020 Technical Communications (pp. 1-4).

@incollection{pai2020neuraldrum,

title={NeuralDrum: Perceiving Brain Synchronicity in XR Drumming},

author={Pai, Yun Suen and Hajika, Ryo and Gupta, Kunal and Sasikumar, Prasanth and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2020 Technical Communications},

pages={1--4},

year={2020}

}

Brain synchronicity is a neurological phenomenon where two or more individuals have their brain activation in phase when performing a shared activity. We present NeuralDrum, an extended reality (XR) drumming experience that allows two players to drum together while their brain signals are simultaneously measured. We calculate the Phase Locking Value (PLV) to determine their brain synchronicity and use this to directly affect their visual and auditory experience in the game, creating a closed feedback loop. In a pilot study, we logged and analysed the users’ brain signals and had them answer a subjective questionnaire regarding their perception of synchronicity with their partner and the overall experience. From the results, we discuss design implications to further improve NeuralDrum and propose methods to integrate brain synchronicity into interactive experiences.

-

Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR

Ihshan Gumilar, Amit Barde, Prasanth Sasikumar, Mark Billinghurst, Ashkan F. Hayati, Gun Lee, Yuda Munarko, Sanjit Singh, Abdul Momin

Gumilar, I., Barde, A., Sasikumar, P., Billinghurst, M., Hayati, A. F., Lee, G., ... & Momin, A. (2022, April). Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gumilar2022inter,

title={Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR},

author={Gumilar, Ihshan and Barde, Amit and Sasikumar, Prasanth and Billinghurst, Mark and Hayati, Ashkan F and Lee, Gun and Munarko, Yuda and Singh, Sanjit and Momin, Abdul},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

}

Brain activity sometimes synchronises when people collaborate on real-world tasks. Understanding this process could lead to improvements in face-to-face and remote collaboration. In this paper we report on an experiment exploring the relationship between eye gaze and inter-brain synchrony in Virtual Reality (VR). The experiment recruited pairs who were asked to perform finger-tracking exercises in VR with three different gaze conditions: averted, direct, and natural, while their brain activity was recorded. We found that gaze direction has a significant effect on inter-brain synchrony during collaboration for this task in VR. This shows that representing natural gaze could influence inter-brain synchrony in VR, which may have implications for avatar design for social VR. We discuss implications of our research and possible directions for future work.

-

haptic HONGI: Reflections on collaboration in the transdisciplinary creation of an AR artwork in Creating Digitally

Gunn, M., Campbell, A., Billinghurst, M., Sasikumar, P., Lawn, W., Muthukumarana, S.

-

First Contact-Take 2: Using XR to Overcome Intercultural Discomfort (racism)

Gunn, M., Sasikumar, P., & Bai, H.

-

Come to the Table! Haere mai ki te tēpu!

Gunn, M., Bai, H., & Sasikumar, P.