Jonathon Hart

PhD Student

Jonathon is a PhD student at the Empathic Computing Lab. He received his Honours degree from the University of Adelaide. He was employed as a Research Assistant at the University of South Australia (UniSA), where he created an app called “Healthy Drinks” that is designed to monitor the soft drink intake of remote Indigenous communities in the Northern Territory. He currently works as a Project Officer at UniSA, designing and building both the hardware and software for a remote collaborative space. He is interested in using this remote collaborative space to help people understand each other by using gaze and gesture cues in a number of scenarios in Augmented Reality environments.

Projects

-

Mini-Me

Mini-Me is an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user’s gaze direction and body gestures while it transforms in size and orientation to stay within the AR user’s field of view. We tested Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

-

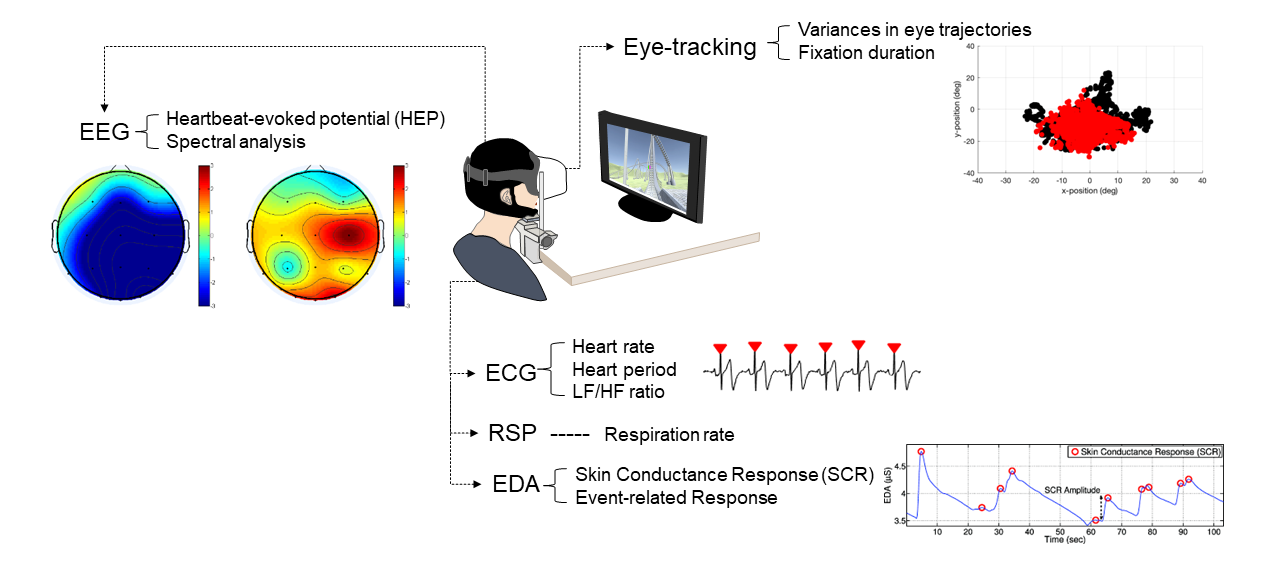

Detecting of the Onset of Cybersickness using Physiological Cues

In this project we explore whether the onset of cybersickness can be detected by considering multiple physiological signals simultaneously from users in VR. We are particularly interested in physiological cues that can be collected from the current generation of VR head-mounted displays (HMDs), such as eye gaze and heart rate. We are also interested in exploring other physiological cues that could become available in VR HMDs in the near future, such as galvanic skin response (GSR) and electroencephalography (EEG).

-

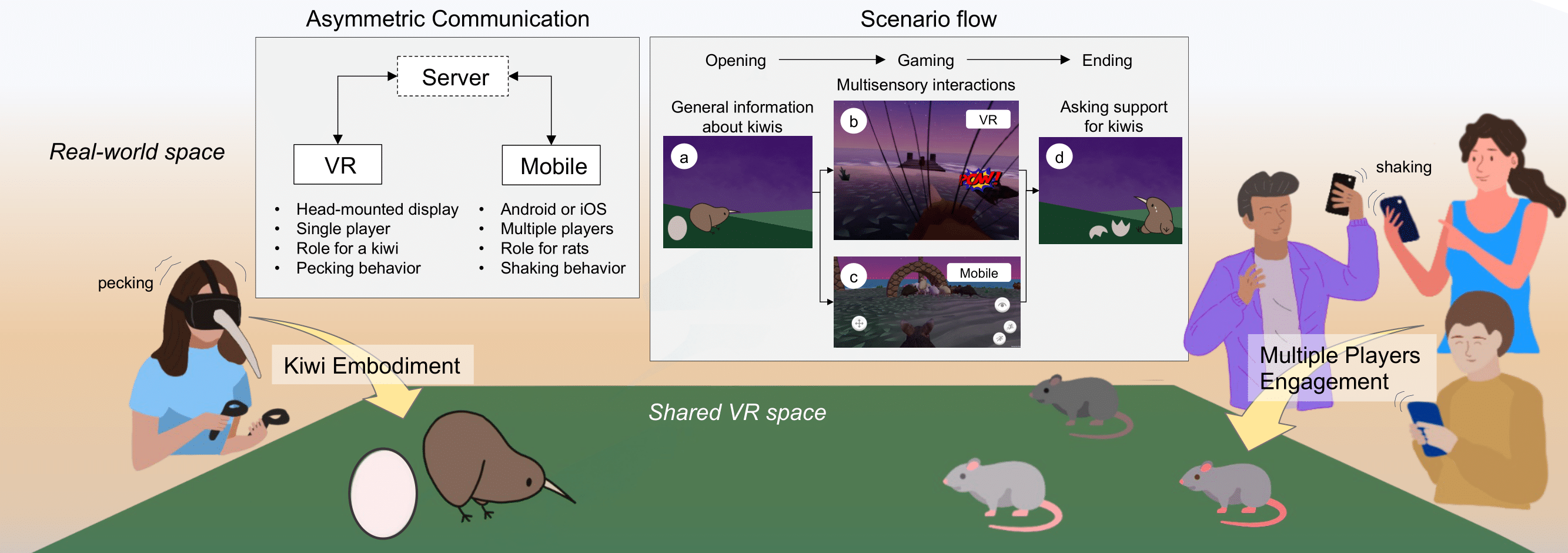

KiwiRescuer: A new interactive exhibition using an asymmetric interaction

This research demo aims to address the problem of passive and dull museum exhibition experiences that many audiences still encounter. The current approaches to exhibitions are typically less interactive and mostly provide single sensory information (e.g., visual, auditory, or haptic) in a one-to-one experience.

Publications

-

Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A. Lee, Jonathon D. Hart, Barrett Ens, Robert W. Lindeman, Bruce H. Thomas, and Mark Billinghurst. 2018. Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 46, 13 pages. DOI: https://doi.org/10.1145/3173574.3173620

@inproceedings{Piumsomboon:2018:MAA:3173574.3173620,

author = {Piumsomboon, Thammathip and Lee, Gun A. and Hart, Jonathon D. and Ens, Barrett and Lindeman, Robert W. and Thomas, Bruce H. and Billinghurst, Mark},

title = {Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI '18},

year = {2018},

isbn = {978-1-4503-5620-6},

location = {Montreal QC, Canada},

pages = {46:1--46:13},

articleno = {46},

numpages = {13},

url = {http://doi.acm.org/10.1145/3173574.3173620},

doi = {10.1145/3173574.3173620},

acmid = {3173620},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, avatar, awareness, gaze, gesture, mixed reality, redirected, remote collaboration, remote embodiment, virtual reality},

}

We present Mini-Me, an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user's gaze direction and body gestures while it transforms in size and orientation to stay within the AR user's field of view. A user study was conducted to evaluate Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

-

Emotion Sharing and Augmentation in Cooperative Virtual Reality Games

Hart, J. D., Piumsomboon, T., Lawrence, L., Lee, G. A., Smith, R. T., & Billinghurst, M. (2018, October). Emotion Sharing and Augmentation in Cooperative Virtual Reality Games. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts (pp. 453-460). ACM.

@inproceedings{hart2018emotion,

title={Emotion Sharing and Augmentation in Cooperative Virtual Reality Games},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lawrence, Louise and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts},

pages={453--460},

year={2018},

organization={ACM}

}

We present preliminary findings from sharing and augmenting facial expression in cooperative social Virtual Reality (VR) games. We implemented a prototype system for capturing and sharing facial expression between VR players through their avatar. We describe our current prototype system and how it could be assimilated into a system for enhancing social VR experience. Two social VR games were created for a preliminary user study. We discuss our findings from the user study, potential games for this system, and future directions for this research.

-

Sharing and Augmenting Emotion in Collaborative Mixed Reality

Hart, J. D., Piumsomboon, T., Lee, G., & Billinghurst, M. (2018, October). Sharing and Augmenting Emotion in Collaborative Mixed Reality. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 212-213). IEEE.

@inproceedings{hart2018sharing,

title={Sharing and Augmenting Emotion in Collaborative Mixed Reality},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lee, Gun and Billinghurst, Mark},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={212--213},

year={2018},

organization={IEEE}

}

We present a concept of emotion sharing and augmentation for collaborative mixed reality. To depict the ideal use case of such a system, we give two example scenarios. We describe our prototype system for capturing and augmenting emotion through facial expression, eye gaze, voice, and physiological data and sharing them through the users' virtual representations, and we discuss future research directions with potential applications.

-

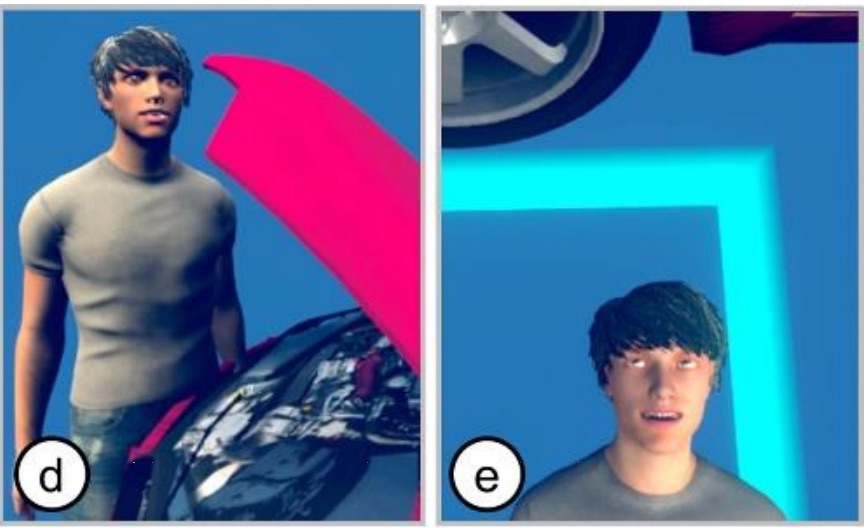

Manipulating Avatars for Enhanced Communication in Extended Reality

Hart, J. D., Piumsomboon, T., Lee, G. A., Smith, R. T., & Billinghurst, M. (2021, May). Manipulating Avatars for Enhanced Communication in Extended Reality. In 2021 IEEE International Conference on Intelligent Reality (ICIR) (pp. 9-16). IEEE.

@inproceedings{hart2021manipulating,

title={Manipulating Avatars for Enhanced Communication in Extended Reality},

author={Hart, Jonathon Derek and Piumsomboon, Thammathip and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={2021 IEEE International Conference on Intelligent Reality (ICIR)},

pages={9--16},

year={2021},

organization={IEEE}

}

Avatars are common virtual representations used in Extended Reality (XR) to support interaction and communication between remote collaborators. Recent advancements in wearable displays provide features such as eye and face-tracking, to enable avatars to express non-verbal cues in XR. The research in this paper investigates the impact of avatar visualization on Social Presence and user’s preference by simulating face tracking in an asymmetric XR remote collaboration between a desktop user and a Virtual Reality (VR) user. Our study was conducted between pairs of participants, one on a laptop computer supporting face tracking and the other being immersed in VR, experiencing different visualization conditions. They worked together to complete an island survival task. We found that the users preferred 3D avatars with facial expressions placed in the scene, compared to 2D screen attached avatars without facial expressions. Participants felt that the presence of the collaborator’s avatar improved overall communication, yet Social Presence was not significantly different between conditions as they mainly relied on audio for communication.