Hyunwoo Cho

PhD Student

Hyunwoo is a PhD student at the Empathic Computing Lab. He received his Master’s degree in computer science from the Korea Advanced Institute of Science and Technology (KAIST). He previously worked at the Electronics and Telecommunications Research Institute (ETRI) as a permanent full-time researcher. Hyunwoo is currently working on “Spatiotemporal Interaction in Mixed Reality Collaboration” under the supervision of Prof. Mark Billinghurst, Dr. Gun Lee and Dr. Thammathip Piumsomboon.

Projects

-

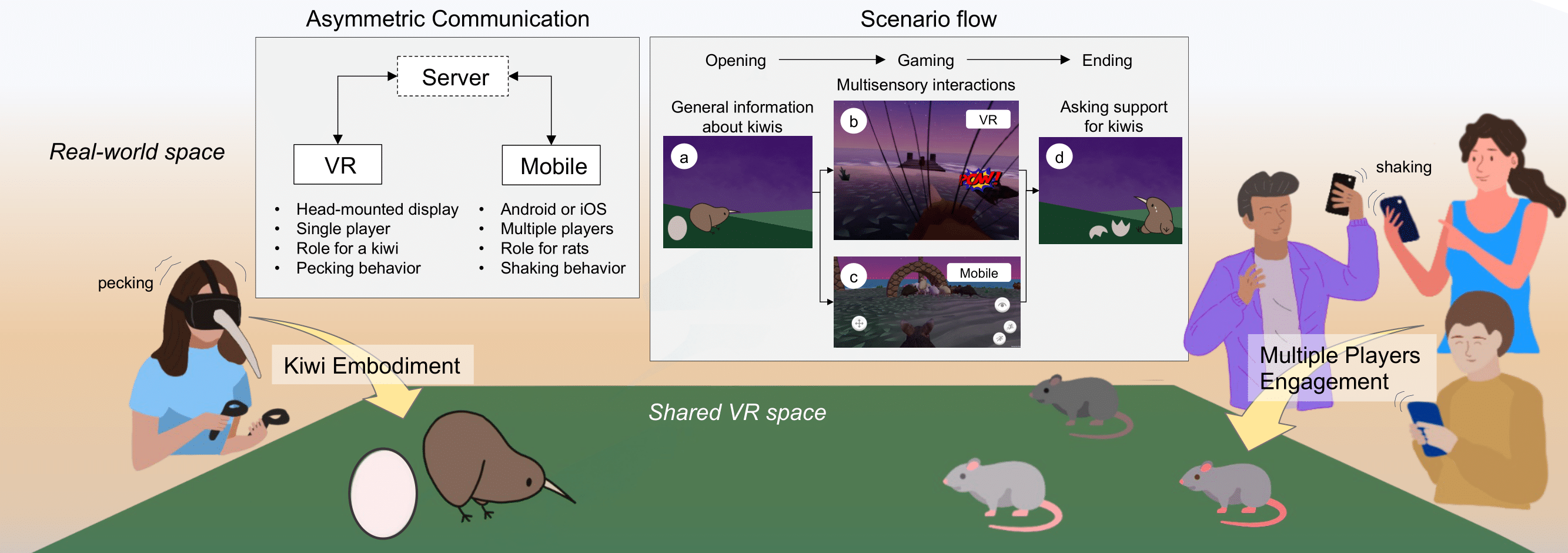

KiwiRescuer: A new interactive exhibition using an asymmetric interaction

This research demo aims to address the problem of passive and dull museum exhibition experiences that many audiences still encounter. Current approaches to exhibitions typically offer little interactivity and mostly provide information through a single sense (e.g., visual, auditory, or haptic) in a one-to-one experience.

-

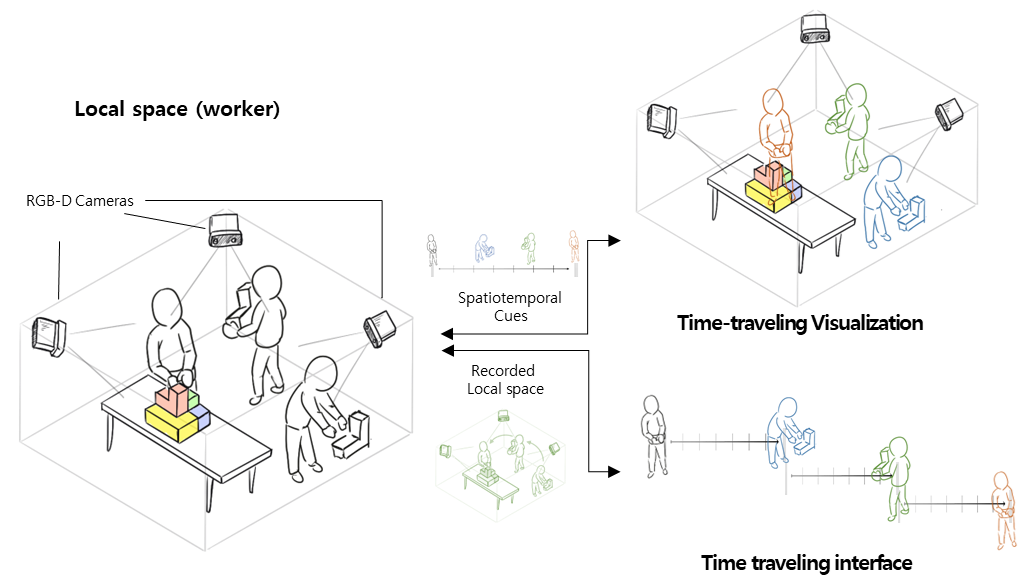

AR-based spatiotemporal interface and visualization for the physical task

The proposed study aims to help solve physical tasks such as mechanical assembly or collaborative design efficiently by using augmented reality-based space-time visualization techniques. In particular, when disassembly and reassembly are required, 3D recording of past actions and playback visualization help users memorize the exact assembly order and position of objects in the task. This study proposes a novel method that employs 3D-based spatial information recording and augmented reality-based playback to effectively support these types of physical tasks.

Publications

-

An Asynchronous Hybrid Cross Reality Collaborative System

Hyunwoo Cho, Bowen Yuan, Jonathon Derek Hart, Eunhee Chang, Zhuang Chang, Jiashuo Cao, Gun A. Lee, Thammathip Piumsomboon, and Mark Billinghurst.

Cho, H., Yuan, B., Hart, J. D., Chang, E., Chang, Z., Cao, J., ... & Billinghurst, M. (2023, October). An asynchronous hybrid cross reality collaborative system. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 70-73). IEEE.

@inproceedings{cho2023asynchronous,

title={An asynchronous hybrid cross reality collaborative system},

author={Cho, Hyunwoo and Yuan, Bowen and Hart, Jonathon Derek and Chang, Eunhee and Chang, Zhuang and Cao, Jiashuo and Lee, Gun A and Piumsomboon, Thammathip and Billinghurst, Mark},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={70--73},

year={2023},

organization={IEEE}

}

This work presents a Mixed Reality (MR)-based asynchronous hybrid cross reality collaborative system which supports recording and playback of user actions in three-dimensional task space at different periods in time. Using this system, an expert user can record a task process such as virtual object placement or assembly, which can then be viewed by other users in either Augmented Reality (AR) or Virtual Reality (VR) views at later points in time to complete the task. In VR, the pre-scanned 3D workspace can be experienced to enhance the understanding of spatial information. Alternatively, AR can provide real-scale information to help the workers manipulate real world objects, and complete the task assignment. Users can also seamlessly move between AR and VR views as desired. In this way the system can contribute to improving task performance and co-presence during asynchronous collaboration.

-

Time Travellers: An Asynchronous Cross Reality Collaborative System

Hyunwoo Cho, Bowen Yuan, Jonathon Derek Hart, Zhuang Chang, Jiashuo Cao, Eunhee Chang, and Mark Billinghurst.

Cho, H., Yuan, B., Hart, J. D., Chang, Z., Cao, J., Chang, E., & Billinghurst, M. (2023, October). Time Travellers: An Asynchronous Cross Reality Collaborative System. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 848-853). IEEE.

@inproceedings{cho2023time,

title={Time Travellers: An Asynchronous Cross Reality Collaborative System},

author={Cho, Hyunwoo and Yuan, Bowen and Hart, Jonathon Derek and Chang, Zhuang and Cao, Jiashuo and Chang, Eunhee and Billinghurst, Mark},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={848--853},

year={2023},

organization={IEEE}

}

This work presents a Mixed Reality (MR)-based asynchronous hybrid cross reality collaborative system which supports recording and playback of user actions in a large task space at different periods in time. Using this system, an expert can record a task process such as virtual object placement or assembly, which can then be viewed by other users in either Augmented Reality (AR) or Virtual Reality (VR) at later points in time to complete the task. In VR, the pre-scanned 3D workspace can be experienced to enhance the understanding of spatial information. Alternatively, AR can provide real-scale information to help the workers manipulate real-world objects, and complete the assignment. Users can seamlessly switch between AR and VR views as desired. In this way, the system can contribute to improving task performance and co-presence during asynchronous collaboration. The system is demonstrated in a use-case scenario of object assembly using parts that must be retrieved from a storehouse location. A pilot user study found that the cross reality asynchronous collaboration system was helpful in providing information about work environments, inducing faster task completion with a lower task load. We provide lessons learned and suggestions for future research.