Eunhee Chang

Research Fellow

Eunhee is a Research Fellow at the Empathic Computing Laboratory. Her research focuses on measuring human behavior in virtual reality environments, including cybersickness, task performance during remote collaboration, and empathic responses. Eunhee completed her Ph.D. in the Department of Psychology at Korea University and worked at the Korea Institute of Science and Technology (KIST), where she measured and detected cybersickness based on various physiological signals.

Projects

Detecting the Onset of Cybersickness Using Physiological Cues

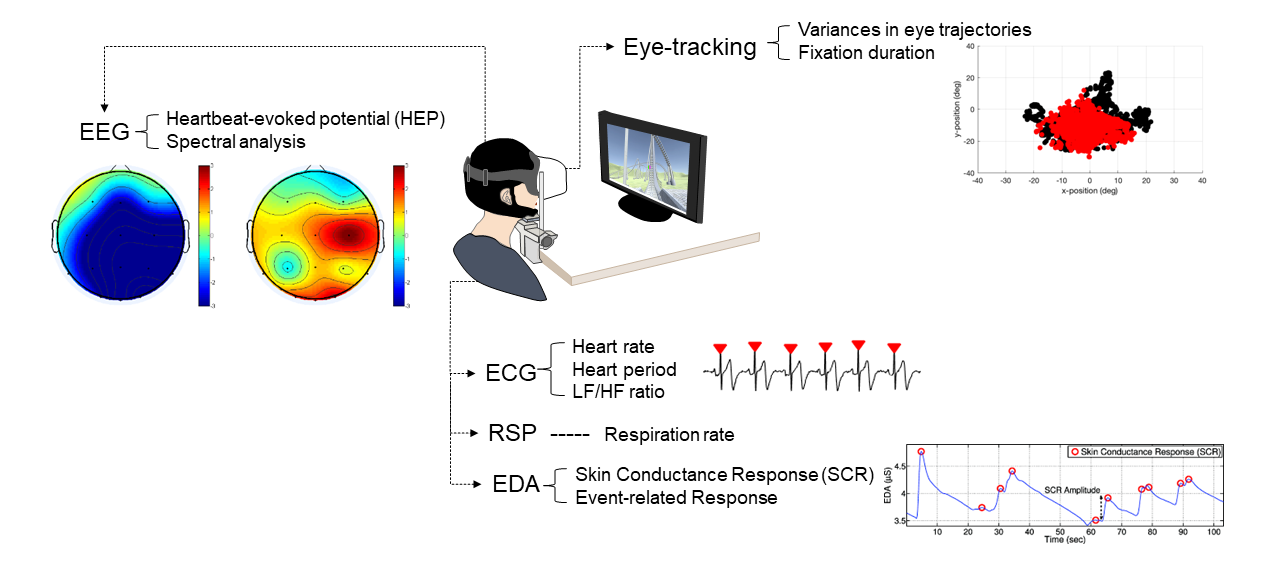

In this project, we explore whether the onset of cybersickness can be detected by considering multiple physiological signals from users in VR simultaneously. We are particularly interested in physiological cues that can be collected by the current generation of VR HMDs, such as eye gaze and heart rate. We are also interested in other physiological cues that could become available in VR HMDs in the near future, such as galvanic skin response (GSR) and electroencephalography (EEG).
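As a rough illustration of what combining several physiological signals could look like, the sketch below standardizes each signal against a resting baseline, averages the z-scores across signals, and flags an onset once the combined score stays above a threshold for a few consecutive samples. This is not the project's method: the baseline z-scoring, the threshold of 2.0, the run length of 3, the signal names, and the assumption that every signal rises with discomfort are all hypothetical choices made for the example.

```python
from statistics import mean, pstdev


def zscores(values, baseline):
    """Standardize samples against a resting baseline recording."""
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1.0  # guard against a perfectly flat baseline
    return [(v - mu) / sigma for v in values]


def detect_onset(signals, baselines, threshold=2.0, min_run=3):
    """Return the first sample index at which the mean z-score across all
    signals stays above `threshold` for `min_run` consecutive samples,
    or None if no onset is found.

    Hypothetical assumptions: `signals` and `baselines` map a signal name
    (e.g. "heart_rate") to a list of samples on one shared clock, and every
    signal is assumed to increase with discomfort.
    """
    standardized = [zscores(signals[name], baselines[name]) for name in signals]
    combined = [mean(column) for column in zip(*standardized)]
    run = 0
    for i, score in enumerate(combined):
        run = run + 1 if score > threshold else 0
        if run == min_run:
            return i - min_run + 1  # index where the sustained run began
    return None
```

Averaging z-scores is the simplest fusion rule; a practical detector would likely weight the signals, handle cues that fall rather than rise with discomfort, and be validated against self-reported sickness.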

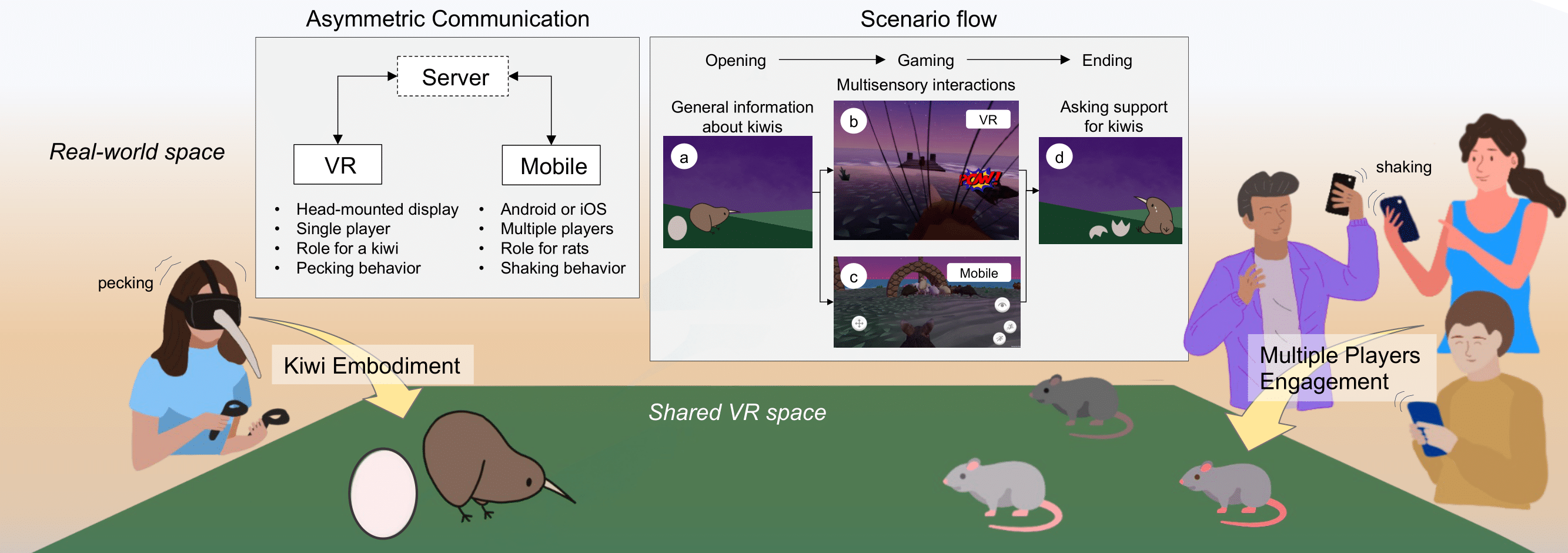

KiwiRescuer: A new interactive exhibition using an asymmetric interaction

This research demo aims to address the passive and dull museum exhibition experiences that many visitors still encounter. Current exhibitions are typically not very interactive and mostly deliver information through a single sense (e.g., visual, auditory, or haptic) in a one-to-one experience.

Publications

Identifying physiological correlates of cybersickness using heartbeat-evoked potential analysis

Eunhee Chang, Hyun Taek Kim & Byounghyun Yoo

Chang, E., Kim, H.T. & Yoo, B. Identifying physiological correlates of cybersickness using heartbeat-evoked potential analysis. Virtual Reality 26, 1193–1205 (2022). https://doi.org/10.1007/s10055-021-00622-2

@article{chang2022identifying,

title={Identifying physiological correlates of cybersickness using heartbeat-evoked potential analysis},

author={Chang, Eunhee and Kim, Hyun Taek and Yoo, Byounghyun},

journal={Virtual Reality},

volume={26},

number={3},

pages={1193--1205},

year={2022},

publisher={Springer}

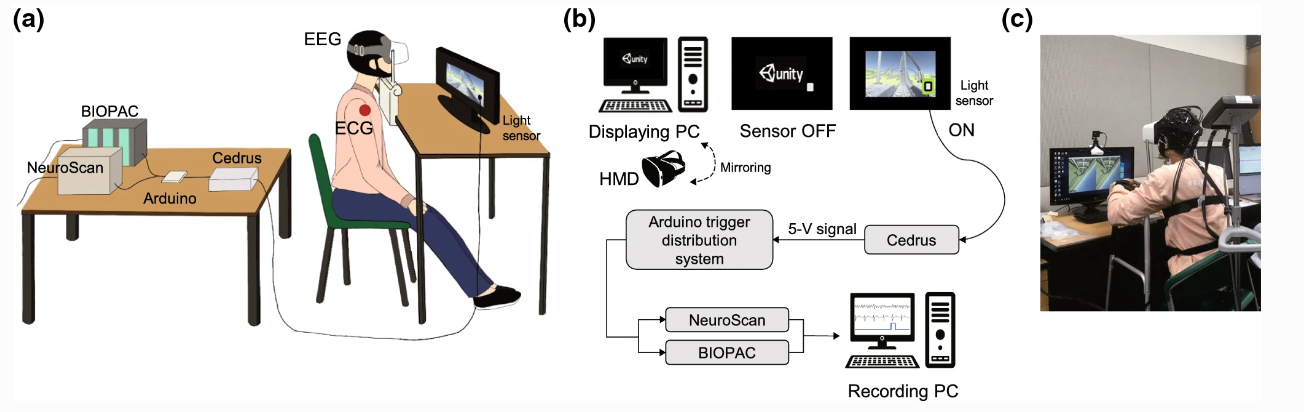

}Many studies have consistently proven that repeatedly watching virtual reality (VR) content can reduce cybersickness. Moreover, the discomfort level decreases when the VR content includes an unusual orientation, such as an inverted scene. However, few studies have investigated the physiological changes during these experiences. The present study aimed to identify psychophysiological correlates, especially the neural processing, of cybersickness. Twenty participants experienced two types of VR orientation (upright and inverted), which were repeated three times. During the experience, we recorded the participants’ subjective levels of discomfort, brain waves, cardiac signals, and eye trajectories. We performed a heartbeat-evoked potential (HEP) analysis to elucidate the cortical activity of heartbeats while experiencing cybersickness. The results showed that the severity of cybersickness decreased as the participants repeatedly watched the VR content. The participants also reported less nausea when watching the inverted orientation. We only found a significant suppression at the fronto-central HEP amplitudes in the upright orientation for the physiological changes. This study provides a comprehensive understanding of bodily responses to varying degrees of cybersickness. In addition, the HEP results suggest that this approach might reflect the neural correlates of transient changes in heartbeats caused by cybersickness. -
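In general terms, a heartbeat-evoked potential is the EEG signal averaged over epochs time-locked to the R-peaks of the ECG. The sketch below shows only that epoching-and-averaging step for a single EEG channel. The function name, the -200 to 600 ms window, and the pre-R-peak baseline correction are illustrative assumptions, not the parameters used in the study; R-peak detection and cardiac-artifact handling are left out.

```python
from statistics import mean


def heartbeat_evoked_potential(eeg, r_peaks, fs, tmin=-0.2, tmax=0.6, baseline=(-0.2, 0.0)):
    """Average single-channel EEG epochs time-locked to ECG R-peaks.

    eeg:      list of samples from one channel (e.g. a fronto-central electrode)
    r_peaks:  sample indices of R-peaks, assumed to be detected beforehand
    fs:       sampling rate in Hz
    tmin/tmax and baseline are in seconds relative to each R-peak
    (hypothetical defaults chosen for illustration).
    Returns the baseline-corrected average waveform from tmin to tmax.
    """
    start = int(round(tmin * fs))
    stop = int(round(tmax * fs))
    b0 = int(round(baseline[0] * fs)) - start  # baseline window, epoch-relative
    b1 = int(round(baseline[1] * fs)) - start
    epochs = []
    for peak in r_peaks:
        if peak + start < 0 or peak + stop > len(eeg):
            continue  # skip heartbeats too close to the edges of the recording
        epoch = eeg[peak + start:peak + stop]
        offset = mean(epoch[b0:b1])  # mean level before the R-peak
        epochs.append([x - offset for x in epoch])
    if not epochs:
        raise ValueError("no complete epochs in the recording")
    # Average sample-by-sample across heartbeats.
    return [mean(samples) for samples in zip(*epochs)]
```

Averaging across many heartbeats cancels activity that is not time-locked to the R-peak, which is why HEP amplitudes can then be compared between conditions such as the upright and inverted orientations.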

Predicting cybersickness based on user’s gaze behaviors in HMD-based virtual reality

Eunhee Chang, Hyun Taek Kim, Byounghyun Yoo

Eunhee Chang and others, Predicting cybersickness based on user’s gaze behaviors in HMD-based virtual reality, Journal of Computational Design and Engineering, Volume 8, Issue 2, April 2021, Pages 728–739, https://doi.org/10.1093/jcde/qwab010

@article{chang2021predicting,

title={Predicting cybersickness based on user’s gaze behaviors in HMD-based virtual reality},

author={Chang, Eunhee and Kim, Hyun Taek and Yoo, Byounghyun},

journal={Journal of Computational Design and Engineering},

volume={8},

number={2},

pages={728--739},

year={2021},

publisher={Oxford University Press}

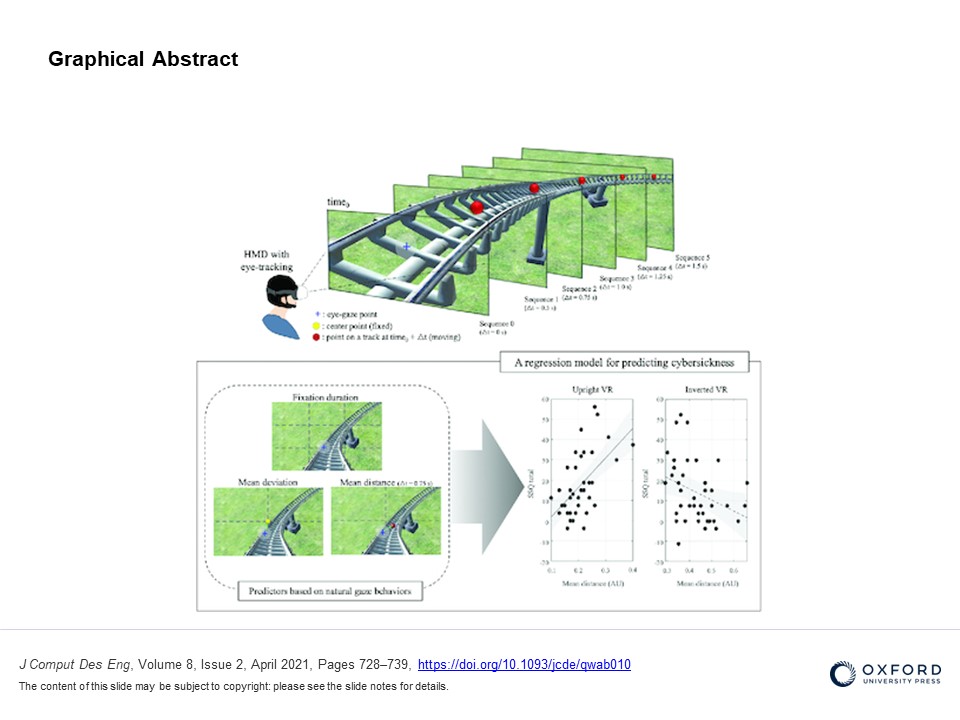

}Cybersickness refers to a group of uncomfortable symptoms experienced in virtual reality (VR). Among several theories of cybersickness, the subjective vertical mismatch (SVM) theory focuses on an individual’s internal model, which is created and updated through past experiences. Although previous studies have attempted to provide experimental evidence for the theory, most approaches are limited to subjective measures or body sway. In this study, we aimed to demonstrate the SVM theory on the basis of the participant’s eye movements and investigate whether the subjective level of cybersickness can be predicted using eye-related measures. 26 participants experienced roller coaster VR while wearing a head-mounted display with eye tracking. We designed four experimental conditions by changing the orientation of the VR scene (upright vs. inverted) or the controllability of the participant’s body (unrestrained vs. restrained body). The results indicated that participants reported more severe cybersickness when experiencing the upright VR content without controllability. Moreover, distinctive eye movements (e.g. fixation duration and distance between the eye gaze and the object position sequence) were observed according to the experimental conditions. On the basis of these results, we developed a regression model using eye-movement features and found that our model can explain 34.8% of the total variance of cybersickness, indicating a substantial improvement compared to the previous work (4.2%). This study provides empirical data for the SVM theory using both subjective and eye-related measures. In particular, the results suggest that participants’ eye movements can serve as a significant index for predicting cybersickness when considering natural gaze behaviors during a VR experience. -
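The 34.8% figure is a proportion of explained variance, i.e. the coefficient of determination R² = 1 - SS_res / SS_tot, which here equals 0.348. The sketch below computes R² for an ordinary least-squares fit. For brevity it uses a single predictor (a hypothetical eye-movement feature such as mean fixation duration), whereas the study's model combined several eye-movement features; the R² definition is the same in both cases.

```python
from statistics import mean


def fit_simple_ols(x, y):
    """Least-squares fit of y ≈ a + b * x for one predictor; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def r_squared(y, y_hat):
    """Proportion of the variance in y explained by the predictions y_hat."""
    my = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # unexplained
    ss_tot = sum((yi - my) ** 2 for yi in y)                  # total
    return 1.0 - ss_res / ss_tot
```

For example, with a hypothetical feature x = [1, 2, 3, 4] and sickness scores y = [1, 3, 2, 4], the fit is y ≈ 0.5 + 0.8x and R² = 1 - 1.8 / 5 = 0.64.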

Brain activity during cybersickness: a scoping review

Eunhee Chang, Mark Billinghurst, Byounghyun YooChang, E., Billinghurst, M., & Yoo, B. (2023). Brain activity during cybersickness: a scoping review. Virtual Reality, 1-25.

@article{chang2023brain,

title={Brain activity during cybersickness: a scoping review},

author={Chang, Eunhee and Billinghurst, Mark and Yoo, Byounghyun},

journal={Virtual Reality},

pages={1--25},

year={2023},

publisher={Springer}

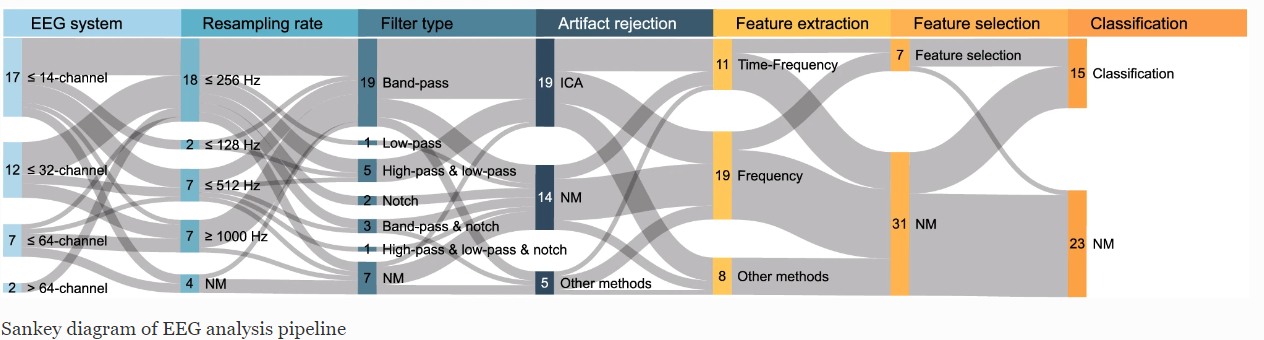

}Virtual reality (VR) experiences can cause a range of negative symptoms such as nausea, disorientation, and oculomotor discomfort, which is collectively called cybersickness. Previous studies have attempted to develop a reliable measure for detecting cybersickness instead of using questionnaires, and electroencephalogram (EEG) has been regarded as one of the possible alternatives. However, despite the increasing interest, little is known about which brain activities are consistently associated with cybersickness and what types of methods should be adopted for measuring discomfort through brain activity. We conducted a scoping review of 33 experimental studies in cybersickness and EEG found through database searches and screening. To understand these studies, we organized the pipeline of EEG analysis into four steps (preprocessing, feature extraction, feature selection, classification) and surveyed the characteristics of each step. The results showed that most studies performed frequency or time-frequency analysis for EEG feature extraction. A part of the studies applied a classification model to predict cybersickness indicating an accuracy between 79 and 100%. These studies tended to use HMD-based VR with a portable EEG headset for measuring brain activity. Most VR content shown was scenic views such as driving or navigating a road, and the age of participants was limited to people in their 20 s. This scoping review contributes to presenting an overview of cybersickness-related EEG research and establishing directions for future work. -

An Asynchronous Hybrid Cross Reality Collaborative System

Hyunwoo Cho, Bowen Yuan, Jonathon Derek Hart, Eunhee Chang, Zhuang Chang, Jiashuo Cao, Gun A. Lee, Thammathip Piumsomboon, and Mark Billinghurst

Cho, H., Yuan, B., Hart, J. D., Chang, E., Chang, Z., Cao, J., ... & Billinghurst, M. (2023, October). An asynchronous hybrid cross reality collaborative system. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 70-73). IEEE.

@inproceedings{cho2023asynchronous,
title={An asynchronous hybrid cross reality collaborative system},
author={Cho, Hyunwoo and Yuan, Bowen and Hart, Jonathon Derek and Chang, Eunhee and Chang, Zhuang and Cao, Jiashuo and Lee, Gun A and Piumsomboon, Thammathip and Billinghurst, Mark},
booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},
pages={70--73},
year={2023},
organization={IEEE}
}

This work presents a Mixed Reality (MR)-based asynchronous hybrid cross reality collaborative system that supports recording and playback of user actions in a three-dimensional task space at different points in time. Using this system, an expert user can record a task process, such as virtual object placement or assembly, which other users can later view in either Augmented Reality (AR) or Virtual Reality (VR) to complete the task. In VR, users can explore the pre-scanned 3D workspace to better understand its spatial layout. Alternatively, AR provides real-scale information that helps workers manipulate real-world objects and complete the task assignment. Users can also switch seamlessly between AR and VR views as desired. In this way, the system can help improve task performance and co-presence during asynchronous collaboration.
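The recording-and-playback idea can be pictured as a time-ordered log of actions that a later user replays up to a chosen point in time. The following is a minimal sketch under that assumption; the `ActionEvent` fields and the `ActionRecording` class are hypothetical and do not describe the system's actual data model, and the sketch ignores 3D pose data and the scanned workspace entirely.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionEvent:
    timestamp: float  # seconds since the expert started recording
    actor: str        # hypothetical user identifier
    action: str       # hypothetical action label, e.g. "grab" or "place"
    target: str       # hypothetical virtual object identifier


class ActionRecording:
    """Hypothetical time-ordered log of recorded actions."""

    def __init__(self):
        self._times = []   # sorted timestamps, parallel to _events
        self._events = []

    def record(self, event):
        # Insert in timestamp order so playback can use binary search.
        i = bisect_right(self._times, event.timestamp)
        self._times.insert(i, event.timestamp)
        self._events.insert(i, event)

    def playback(self, t):
        """Return every action recorded at or before playback time t."""
        return self._events[:bisect_right(self._times, t)]
```

Keeping the log sorted by timestamp lets a later AR or VR viewer scrub to any moment and retrieve the actions performed so far with a binary search instead of a full scan.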

Time Travellers: An Asynchronous Cross Reality Collaborative System

Hyunwoo Cho, Bowen Yuan, Jonathon Derek Hart, Zhuang Chang, Jiashuo Cao, Eunhee Chang, and Mark Billinghurst

Cho, H., Yuan, B., Hart, J. D., Chang, Z., Cao, J., Chang, E., & Billinghurst, M. (2023, October). Time Travellers: An Asynchronous Cross Reality Collaborative System. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 848-853). IEEE.

@inproceedings{cho2023time,
title={Time Travellers: An Asynchronous Cross Reality Collaborative System},
author={Cho, Hyunwoo and Yuan, Bowen and Hart, Jonathon Derek and Chang, Zhuang and Cao, Jiashuo and Chang, Eunhee and Billinghurst, Mark},
booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},
pages={848--853},
year={2023},
organization={IEEE}
}

This work presents a Mixed Reality (MR)-based asynchronous hybrid cross reality collaborative system that supports recording and playback of user actions in a large task space at different points in time. Using this system, an expert can record a task process, such as virtual object placement or assembly, which other users can later view in either Augmented Reality (AR) or Virtual Reality (VR) to complete the task. In VR, users can explore the pre-scanned 3D workspace to better understand its spatial layout. Alternatively, AR provides real-scale information that helps workers manipulate real-world objects and complete the assignment. Users can switch seamlessly between AR and VR views as desired. In this way, the system can help improve task performance and co-presence during asynchronous collaboration. The system is demonstrated in a use-case scenario of object assembly using parts that must be retrieved from a storehouse location. A pilot user study found that the asynchronous cross reality collaborative system was helpful in providing information about the work environment and led to faster task completion with a lower task load. We provide lessons learned and suggestions for future research.

Brain activity during cybersickness: a scoping review

Eunhee Chang, Mark Billinghurst, and Byounghyun Yoo

Chang, E., Billinghurst, M., & Yoo, B. (2023). Brain activity during cybersickness: A scoping review. Virtual Reality, 27(3), 2073-2097.

@article{chang2023brain,
title={Brain activity during cybersickness: A scoping review},
author={Chang, Eunhee and Billinghurst, Mark and Yoo, Byounghyun},
journal={Virtual Reality},
volume={27},
number={3},
pages={2073--2097},
year={2023},
publisher={Springer}
}

Virtual reality (VR) experiences can cause a range of negative symptoms, such as nausea, disorientation, and oculomotor discomfort, which are collectively called cybersickness. Previous studies have attempted to develop a reliable measure for detecting cybersickness instead of using questionnaires, and electroencephalography (EEG) has been regarded as one possible alternative. However, despite growing interest, little is known about which brain activities are consistently associated with cybersickness and which methods should be adopted for measuring discomfort through brain activity. We conducted a scoping review of 33 experimental studies on cybersickness and EEG identified through database searches and screening. To characterize these studies, we organized the EEG analysis pipeline into four steps (preprocessing, feature extraction, feature selection, and classification) and surveyed the characteristics of each step. The results showed that most studies performed frequency or time-frequency analysis for EEG feature extraction. Some of the studies applied a classification model to predict cybersickness, reporting accuracies between 79% and 100%. These studies tended to use HMD-based VR with a portable EEG headset for measuring brain activity. Most of the VR content consisted of scenic views, such as driving or navigating a road, and participants were limited to people in their 20s. This scoping review presents an overview of cybersickness-related EEG research and establishes directions for future work.
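The four-step EEG pipeline used in the review to organize the studies (preprocessing, feature extraction, feature selection, classification) can be sketched end to end in a few functions. Every concrete choice here is an illustrative assumption rather than a method taken from the reviewed studies: DC-offset removal as preprocessing, band power from a naive DFT over conventional delta/theta/alpha/beta ranges as features, a variance filter for selection, and a nearest-centroid classifier.

```python
import cmath
import math
from statistics import mean, pvariance

# Conventional EEG band edges in Hz (assumed for illustration).
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30)}


def preprocess(epoch):
    """Step 1 (greatly simplified): subtract the mean to remove the DC offset."""
    m = mean(epoch)
    return [x - m for x in epoch]


def band_power(signal, fs, lo, hi):
    """Sum of |X_k|^2 / n over DFT bins whose frequency f satisfies lo <= f < hi.

    Computes each bin with a naive DFT, which is only practical for short epochs.
    """
    n = len(signal)
    power = 0.0
    for k in range(1, n // 2 + 1):
        if lo <= k * fs / n < hi:
            coef = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                       for t, x in enumerate(signal))
            power += abs(coef) ** 2 / n
    return power


def extract_features(epoch, fs):
    """Step 2: one band-power feature per entry in BANDS."""
    clean = preprocess(epoch)
    return [band_power(clean, fs, lo, hi) for lo, hi in BANDS.values()]


def select_features(X, k):
    """Step 3: indices of the k features with the highest variance across epochs."""
    variances = [pvariance(column) for column in zip(*X)]
    ranked = sorted(range(len(variances)), key=lambda j: variances[j], reverse=True)
    return ranked[:k]


def train_nearest_centroid(X, y, keep):
    """Step 4 (training): mean vector of the kept features for each class."""
    centroids = {}
    for label in set(y):
        rows = [[x[j] for j in keep] for x, lab in zip(X, y) if lab == label]
        centroids[label] = [mean(column) for column in zip(*rows)]
    return centroids


def predict(centroids, features, keep):
    """Step 4 (inference): label of the closest centroid (squared Euclidean distance)."""
    v = [features[j] for j in keep]
    return min(centroids,
               key=lambda lab: sum((a - c) ** 2 for a, c in zip(v, centroids[lab])))
```

Real pipelines would add filtering and artifact rejection in step 1 and evaluate the classifier with cross-validation before reporting an accuracy.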

A User Study on the Comparison of View Interfaces for VR-AR Communication in XR Remote Collaboration

Eunhee Chang, Yongjae Lee, and Byounghyun Yoo

Chang, E., Lee, Y., & Yoo, B. (2023). A user study on the comparison of view interfaces for VR-AR communication in XR remote collaboration. International Journal of Human–Computer Interaction, 1-16.

@article{chang2023user,
title={A user study on the comparison of view interfaces for VR-AR communication in XR remote collaboration},
author={Chang, Eunhee and Lee, Yongjae and Yoo, Byounghyun},
journal={International Journal of Human--Computer Interaction},
pages={1--16},
year={2023},
publisher={Taylor \& Francis}
}

Previous studies have investigated which context-sharing interfaces are effective in improving task performance; however, consistent results have yet to be found. In this study, we developed a convenient remote collaboration system that provides multiple context-sharing interfaces (2D video, 360° video, and 3D reconstruction view) in a single platform. All interfaces reflect live updates of changes in the environment, and we aimed to clarify the effect of the interface on the quality of remote collaboration. Thirty participants were recruited to perform a target-finding-and-placing scenario. Using both objective and subjective metrics, we compared task performance across the three interface conditions. The results showed that participants completed the task faster and reported a better collaborative experience in the 3D interface condition. Moreover, we found a strong preference for the 3D view interface. These results suggest that providing 3D reconstructed spatial information can enable remote experts to instruct local workers more effectively.