Ashkan Hayati

PhD Student

Ashkan Hayati is a third-year PhD student at the University of South Australia, currently working on “Brain synchronisation in collaborative VR using EEG hyperscanning” under the supervision of Prof. Mark Billinghurst and Dr. Gun Lee. His research spans EEG signal processing using Python and Matlab, and Virtual Reality and Augmented Reality using Microsoft HoloLens.

He received his B.Sc. in Industrial Engineering from Amirkabir University (Tehran Polytechnic) and his M.Sc. in Information Technology from Shiraz University in Iran. He has worked as a web developer and has broad knowledge of programming and web solutions.

He has worked in the gaming industry and advertising networks for more than five years and has big dreams for AR/VR app development. He began research in Augmented Reality in May 2015 and has been collaborating with the Empathic Computing Lab on web development and Unity3D since then.

Projects

-

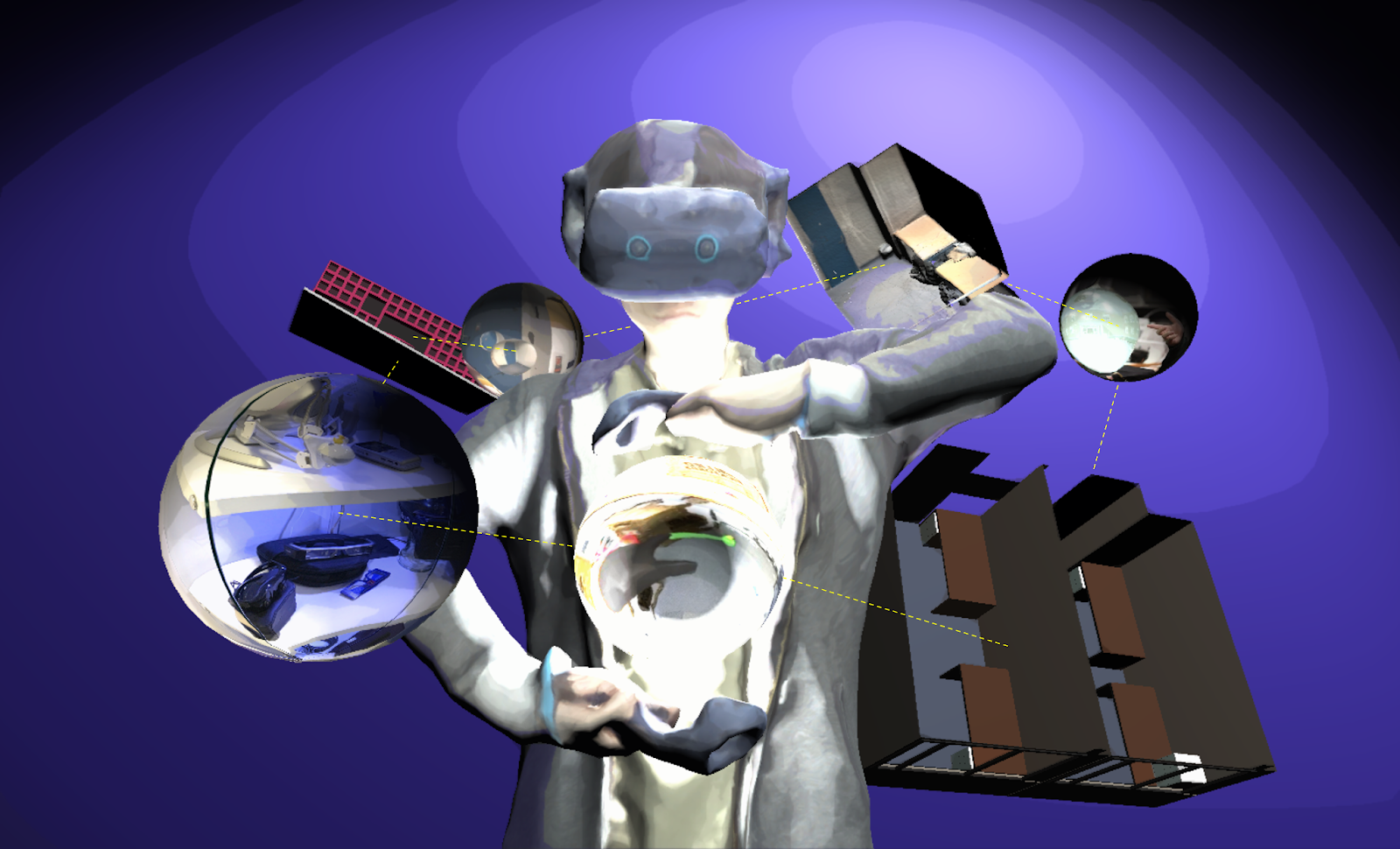

Empathy in Virtual Reality

Virtual reality (VR) interfaces are an influential medium for triggering emotional changes in humans. However, there is little research on making users of VR interfaces aware of their own and, in collaborative interfaces, one another's emotional state. In this project, through a series of system developments and user evaluations, we are investigating how physiological data such as heart rate, galvanic skin response, pupil dilation, and EEG can be used as a medium to communicate emotional states either to oneself (single-user interfaces) or to a collaborator (collaborative interfaces). The overarching goal is to make VR environments more empathetic and collaborators more aware of each other's emotional state.

-

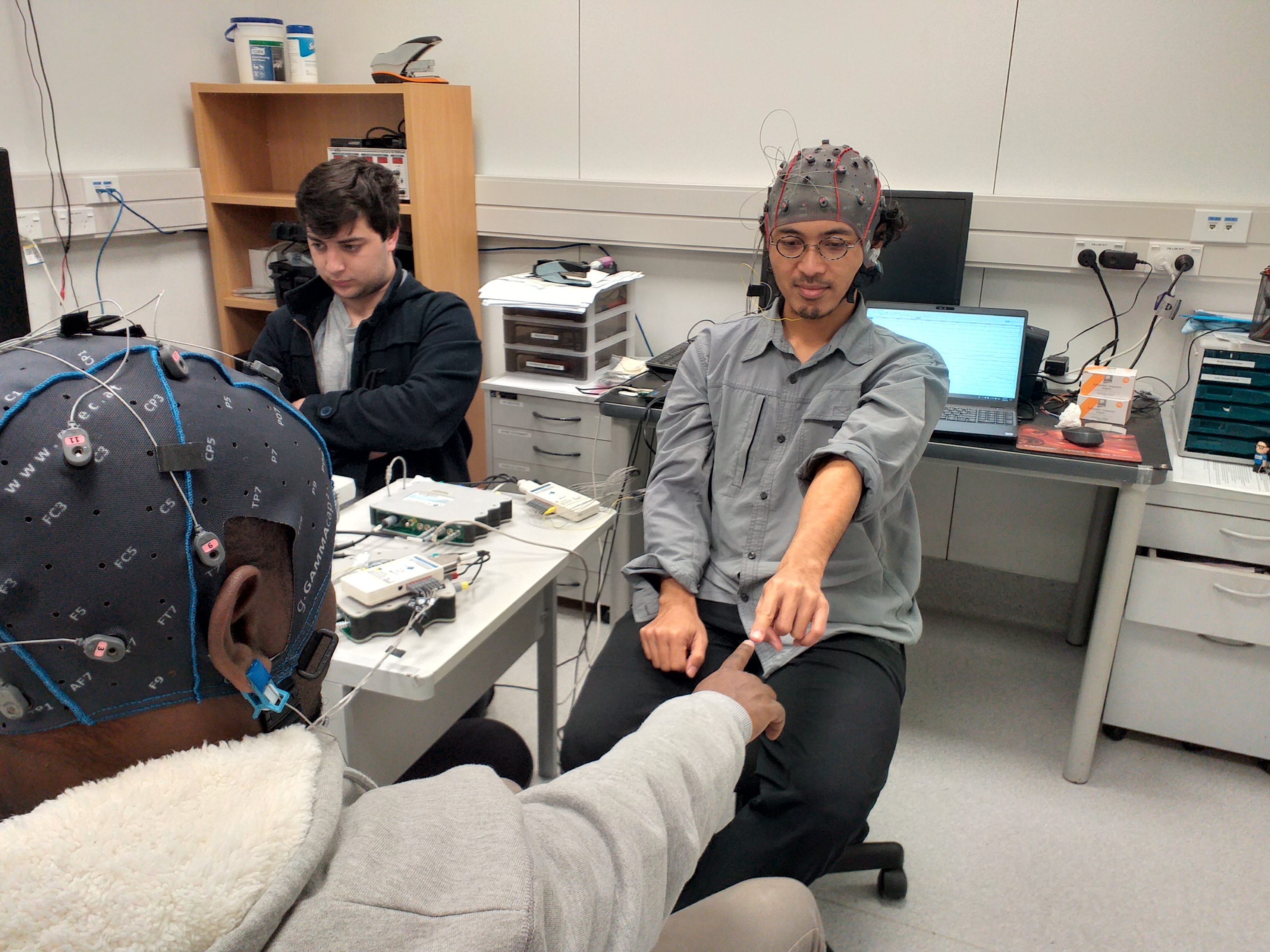

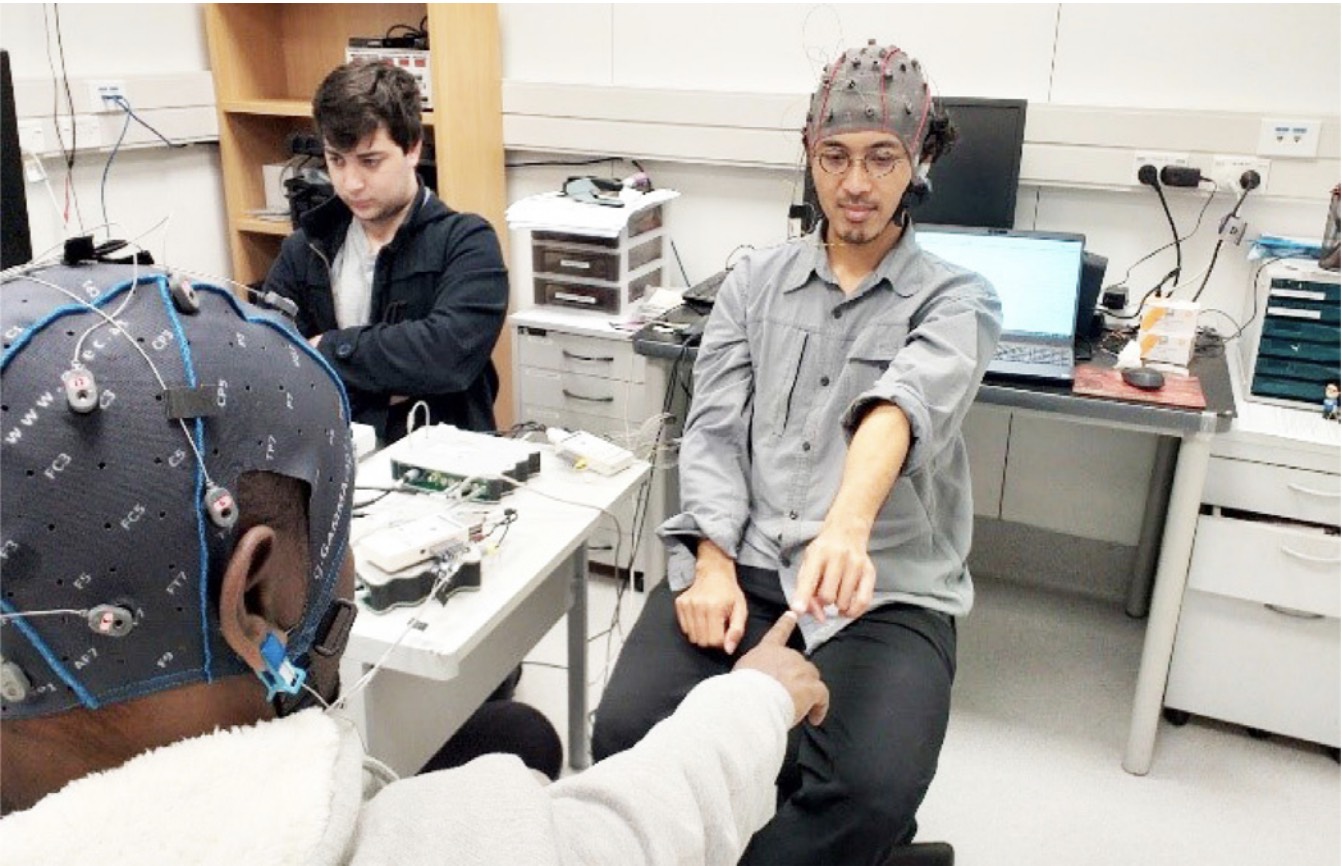

Brain Synchronisation in VR

Collaborative Virtual Reality has been the subject of research for nearly three decades. This has led to a deep understanding of how individuals interact in such environments and of some of the factors that impede these interactions. However, despite this knowledge, we still do not fully understand how interpersonal interactions in virtual environments are reflected in the physiological domain. This project seeks to answer that question by monitoring the neural activity of participants in collaborative virtual environments. We do this using a technique known as hyperscanning, which refers to the simultaneous acquisition of neural activity from two or more people. In this project we use hyperscanning to determine whether individuals interacting in a virtual environment exhibit inter-brain synchrony. The goal is first to study the phenomenon of inter-brain synchrony, and then to find means of inducing and expediting it by making changes in the virtual environment. This project feeds into the overarching goal of the Empathic Computing Laboratory, which seeks to bring individuals closer together using technology as a vehicle to evoke empathy.
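The comparative study listed under Publications quantifies inter-brain synchrony with Phase Locking Value (PLV) analysis. The following is a minimal sketch of that measure for two equal-length signals, not the lab's actual pipeline. It uses only the Python standard library, and the function names `analytic_signal` and `phase_locking_value`, the 200 Hz sampling rate, and the synthetic sine waves are all assumptions made for illustration. Real EEG data would normally be band-pass filtered first and processed with an FFT library rather than the naive transform used here.

```python
import cmath
import math


def analytic_signal(x):
    """Return the analytic signal of a real sequence using a naive O(n^2) DFT.

    Illustrative only: this stands in for the Hilbert-transform step that a
    signal-processing library would normally provide.
    """
    n = len(x)
    # Forward discrete Fourier transform.
    spectrum = [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    # One-sided spectrum: keep DC (and Nyquist when n is even), double the
    # positive frequencies, zero the negative frequencies.
    weights = [0.0] * n
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        upper = n // 2
    else:
        upper = (n + 1) // 2
    for k in range(1, upper):
        weights[k] = 2.0
    # Inverse transform of the weighted spectrum.
    return [
        sum(
            spectrum[k] * weights[k] * cmath.exp(2j * math.pi * k * t / n)
            for k in range(n)
        ) / n
        for t in range(n)
    ]


def phase_locking_value(x, y):
    """PLV = |mean(exp(i * (phase_x - phase_y)))|, a value between 0 and 1."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("signals must be non-empty and of equal length")
    ax = analytic_signal(x)
    ay = analytic_signal(y)
    total = sum(
        cmath.exp(1j * (cmath.phase(a) - cmath.phase(b)))
        for a, b in zip(ax, ay)
    )
    return abs(total) / len(x)


if __name__ == "__main__":
    fs = 200  # assumed sampling rate in Hz (hypothetical)
    t = [i / fs for i in range(fs)]  # one second of samples
    # Two synthetic "channels": same 10 Hz rhythm with a constant phase lag.
    person_a = [math.sin(2 * math.pi * 10 * s) for s in t]
    person_b = [math.sin(2 * math.pi * 10 * s + 0.8) for s in t]
    # A third signal at a different frequency, so the phase difference drifts.
    unrelated = [math.sin(2 * math.pi * 13 * s) for s in t]
    print("locked pair PLV:", phase_locking_value(person_a, person_b))
    print("drifting pair PLV:", phase_locking_value(person_a, unrelated))
```

A constant phase lag between the two signals should give a PLV close to 1, while a steadily drifting phase difference should give a value close to 0. PLV ignores amplitude, so it reflects only how consistent the phase relationship is over the analysed window.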

-

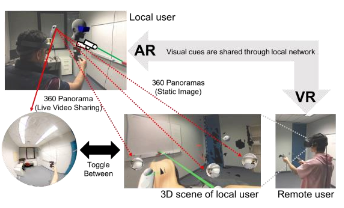

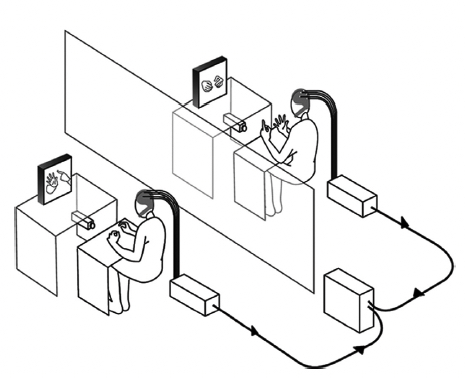

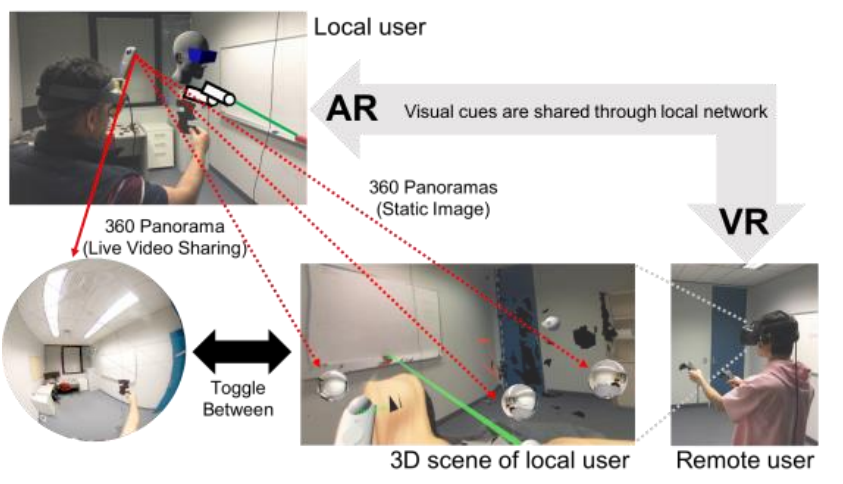

Using 3D Spaces and 360 Video Content for Collaboration

This project explores techniques to enhance collaborative experience in Mixed Reality environments using 3D reconstructions, 360 videos and 2D images. Previous research has shown that 360 video can provide a high resolution immersive visual space for collaboration, but little spatial information. Conversely, 3D scanned environments can provide high quality spatial cues, but with poor visual resolution. This project combines both approaches, enabling users to switch between a 3D view or 360 video of a collaborative space. In this hybrid interface, users can pick the representation of space best suited to the needs of the collaborative task. The project seeks to provide design guidelines for collaboration systems to enable empathic collaboration by sharing visual cues and environments across time and space.

Publications

-

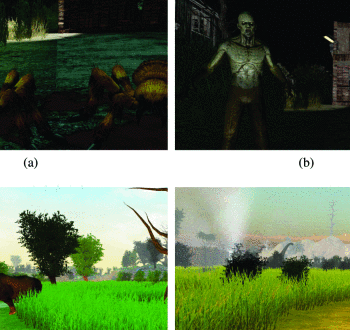

Sharing Manipulated Heart Rate Feedback in Collaborative Virtual Environments

Arindam Dey; Hao Chen; Ashkan Hayati; Mark Billinghurst; Robert W. Lindeman

@inproceedings{dey2019sharing,

title={Sharing Manipulated Heart Rate Feedback in Collaborative Virtual Environments},

author={Dey, Arindam and Chen, Hao and Hayati, Ashkan and Billinghurst, Mark and Lindeman, Robert W},

booktitle={2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages={248--257},

year={2019},

organization={IEEE}

}

We have explored the effects of sharing manipulated heart rate feedback in collaborative virtual environments. In our study, we created two different types of virtual environments (active and passive) with different levels of interaction and provided three levels of manipulated heart rate feedback (decreased, unchanged, and increased). We measured the effects of manipulated feedback on Social Presence, affect, physical heart rate, and overall experience. We noticed a significant effect of the manipulated heart rate feedback on scariness and nervousness. The perception of the collaborator's valence and arousal was also affected, with increased heart rate feedback perceived as higher valence and lower arousal. Increased heart rate feedback decreased the real heart rate. The type of virtual environment had a significant effect on social presence, heart rate, and affect, with the active environment performing better across these measurements. We discuss the implications of this and directions for future research.

-

A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes

Theophilus Teo, Ashkan F. Hayati, Gun A. Lee, Mark Billinghurst, Matt Adcock

@inproceedings{teo2019technique,

title={A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes},

author={Teo, Theophilus and Hayati, Ashkan F. and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

booktitle={25th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2019}

}

Mixed Reality (MR) remote collaboration provides an enhanced immersive experience where a remote user can provide verbal and nonverbal assistance to a local user to increase the efficiency and performance of the collaboration. This is usually achieved by sharing the local user's environment through live 360 video or a 3D scene, and using visual cues to gesture or point at real objects, allowing for better understanding and collaborative task performance. While most prior work used one of these methods to capture the surrounding environment, there may be situations where users have to choose between using 360 panoramas or 3D scene reconstruction to collaborate, as each has unique benefits and limitations. In this paper we designed a prototype system that combines 360 panoramas into a 3D scene to introduce a novel way for users to interact and collaborate with each other. We evaluated the prototype through a user study which compared the usability and performance of our proposed approach to a live 360 video collaborative system, and we found that participants enjoyed using different ways to access the local user's environment, although it took them longer to learn to use our system. We also collected subjective feedback for future improvements and provide directions for future research.

-

A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning

Ihshan Gumilar, Ekansh Sareen, Reed Bell, Augustus Stone, Ashkan Hayati, Jingwen Mao, Amit Barde, Anubha Gupta, Arindam Dey, Gun Lee, Mark Billinghurst

Gumilar, I., Sareen, E., Bell, R., Stone, A., Hayati, A., Mao, J., ... & Billinghurst, M. (2021). A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning. Computers & Graphics, 94, 62-75.

@article{gumilar2021comparative,

title={A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning},

author={Gumilar, Ihshan and Sareen, Ekansh and Bell, Reed and Stone, Augustus and Hayati, Ashkan and Mao, Jingwen and Barde, Amit and Gupta, Anubha and Dey, Arindam and Lee, Gun and others},

journal={Computers \& Graphics},

volume={94},

pages={62--75},

year={2021},

publisher={Elsevier}

}

Researchers have employed hyperscanning, a technique used to simultaneously record neural activity from multiple participants, in real-world collaborations. However, to the best of our knowledge, there is no study that has used hyperscanning in Virtual Reality (VR). The aims of this study were, firstly, to replicate results of inter-brain synchrony reported in existing literature for a real-world task and, secondly, to explore whether inter-brain synchrony could be elicited in a Virtual Environment (VE). This paper reports on three pilot studies in two different settings (real-world and VR). Paired participants performed two sessions of a finger-pointing exercise separated by a finger-tracking exercise, during which their neural activity was simultaneously recorded by electroencephalography (EEG) hardware. Using Phase Locking Value (PLV) analysis, VR was found to induce inter-brain synchrony similar to that seen in the real world. Further, it was observed that the finger-pointing exercise shared the same neurally activated area in both the real world and VR. Based on these results, we infer that VR can be used to enhance inter-brain synchrony in collaborative tasks carried out in a VE. In particular, we have been able to demonstrate that changing visual perspective in VR is capable of eliciting inter-brain synchrony. This demonstrates that VR could be an exciting platform to explore the phenomenon of inter-brain synchrony further and provide a deeper understanding of the neuroscience of human communication.

-

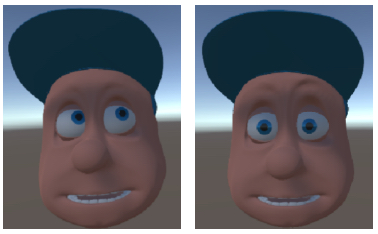

Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR

Ihshan Gumilar, Amit Barde, Ashkan Hayati, Mark Billinghurst, Gun Lee, Abdul Momin, Charles Averill, Arindam Dey

Gumilar, I., Barde, A., Hayati, A. F., Billinghurst, M., Lee, G., Momin, A., ... & Dey, A. (2021, May). Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gumilar2021connecting,

title={Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR},

author={Gumilar, Ihshan and Barde, Amit and Hayati, Ashkan F and Billinghurst, Mark and Lee, Gun and Momin, Abdul and Averill, Charles and Dey, Arindam},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Hyperscanning is an emerging method that allows researchers to simultaneously record neural activity from two or more people. While this method has been extensively implemented over the last five years in the real world to study inter-brain synchrony, little work has been undertaken on the use of hyperscanning in virtual environments. Preliminary research in the area demonstrates that inter-brain synchrony in virtual environments can be achieved in a manner similar to that seen in the real world. The study described in this paper proposes to further research in the area by studying how non-verbal communication cues in social interactions in virtual environments can affect inter-brain synchrony. In particular, we concentrate on the role eye gaze plays in inter-brain synchrony. The aim of this research is to explore how eye gaze affects inter-brain synchrony between users in a collaborative virtual environment.

-

A Review of Hyperscanning and Its Use in Virtual Environments

Amit Barde, Ihshan Gumilar, Ashkan F. Hayati, Arindam Dey, Gun Lee, Mark Billinghurst

Barde, A., Gumilar, I., Hayati, A. F., Dey, A., Lee, G., & Billinghurst, M. (2020, December). A Review of Hyperscanning and Its Use in Virtual Environments. In Informatics (Vol. 7, No. 4, p. 55). Multidisciplinary Digital Publishing Institute.

@article{barde2020review,

title={A Review of Hyperscanning and Its Use in Virtual Environments},

author={Barde, Amit and Gumilar, Ihshan and Hayati, Ashkan F and Dey, Arindam and Lee, Gun and Billinghurst, Mark},

journal={Informatics},

volume={7},

number={4},

pages={55},

year={2020},

publisher={Multidisciplinary Digital Publishing Institute}

}

-

Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR

Ihshan Gumilar, Amit Barde, Prasanth Sasikumar, Mark Billinghurst, Ashkan F. Hayati, Gun Lee, Yuda Munarko, Sanjit Singh, Abdul Momin

Gumilar, I., Barde, A., Sasikumar, P., Billinghurst, M., Hayati, A. F., Lee, G., ... & Momin, A. (2022, April). Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gumilar2022inter,

title={Inter-brain Synchrony and Eye Gaze Direction During Collaboration in VR},

author={Gumilar, Ihshan and Barde, Amit and Sasikumar, Prasanth and Billinghurst, Mark and Hayati, Ashkan F and Lee, Gun and Munarko, Yuda and Singh, Sanjit and Momin, Abdul},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

}

Brain activity sometimes synchronises when people collaborate on real-world tasks. Understanding this process could lead to improvements in face-to-face and remote collaboration. In this paper we report on an experiment exploring the relationship between eye gaze and inter-brain synchrony in Virtual Reality (VR). The experiment recruited pairs who were asked to perform finger-tracking exercises in VR under three different gaze conditions (averted, direct, and natural) while their brain activity was recorded. We found that gaze direction has a significant effect on inter-brain synchrony during collaboration for this task in VR. This shows that representing natural gaze could influence inter-brain synchrony in VR, which may have implications for avatar design for social VR. We discuss implications of our research and possible directions for future work.

-

A Technique for Mixed Reality Remote Collaboration using 360° Panoramas in 3D Reconstructed Scenes

Theophilus Teo, Ashkan F. Hayati, Gun A. Lee, Mark Billinghurst, Matt Adcock

T. Teo, A. F. Hayati, G. A. Lee, M. Billinghurst and M. Adcock. “A Technique for Mixed Reality Remote Collaboration using 360° Panoramas in 3D Reconstructed Scenes.” In: ACM Symposium on Virtual Reality Software and Technology (VRST), Sydney, Australia, 2019.

@inproceedings{teo2019technique,

title={A technique for mixed reality remote collaboration using 360 panoramas in 3d reconstructed scenes},

author={Teo, Theophilus and Hayati, Ashkan F. and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

booktitle={Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2019}

}