Theophilus Teo

Research Fellow

Dr. Theophilus Teo (Theo) is a Postdoctoral Research Fellow at the Empathic Computing Laboratory (ECL) at the University of South Australia (UniSA). His research interests centre on Mixed Reality, Immersive Collaboration, Telepresence, Interaction Techniques, and Human-Computer Interaction.

Before joining the ECL, he was a Project Assistant Professor at the Interactive Media Lab (IMLab) at Keio University, where he worked on the JST ERATO Inami JIZAI Body Project, focusing on Virtual Living Laboratory, JIZAI Safari, Parallel View, and Immersive Robotic Interaction.

Theo received his Ph.D. from the ECL, UniSA, in 2021, supervised by Prof. Mark Billinghurst, Dr. Gun A. Lee, and Dr. Matt Adcock (CSIRO Data61 advisor). His doctoral research investigated interaction and visualisation techniques for a hybrid remote collaboration system that combined 360 panorama images/videos with 3D reconstruction.

Projects

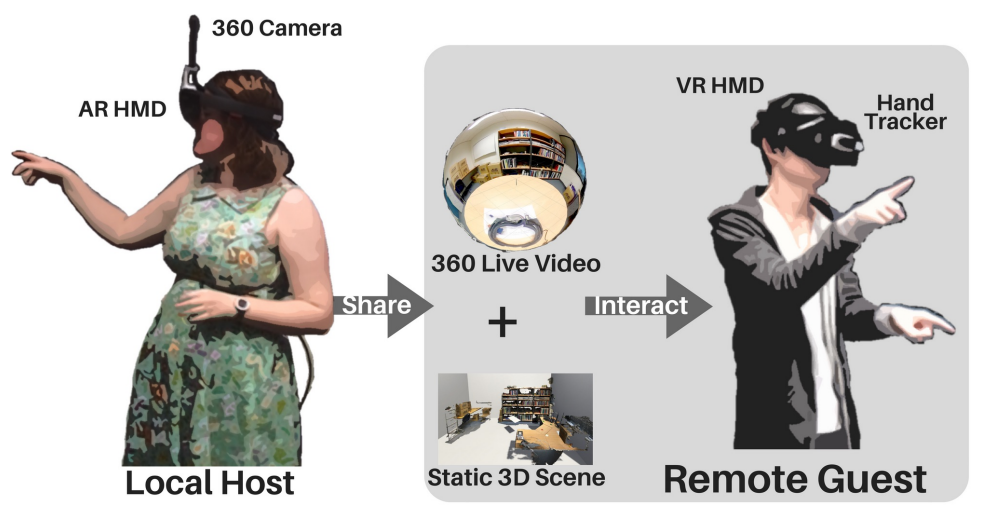

SharedSphere

SharedSphere is a Mixed Reality-based remote collaboration system that not only shares a live-captured immersive 360 panorama but also supports richer two-way communication and collaboration by sharing non-verbal communication cues such as view awareness cues, drawn annotations, and hand gestures.

Using 3D Spaces and 360 Video Content for Collaboration

This project explores techniques to enhance the collaborative experience in Mixed Reality environments using 3D reconstructions, 360 videos, and 2D images. Previous research has shown that 360 video can provide a high-resolution, immersive visual space for collaboration but offers little spatial information. Conversely, 3D scanned environments can provide high-quality spatial cues but have poor visual resolution. This project combines both approaches, enabling users to switch between a 3D view and a 360 video view of a collaborative space. In this hybrid interface, users can pick the representation of space best suited to the needs of the collaborative task. The project aims to provide design guidelines for collaboration systems that enable empathic collaboration by sharing visual cues and environments across time and space.

MPConnect: A Mixed Presence Mixed Reality System

This project explores how a Mixed Presence Mixed Reality system can enhance remote collaboration. Collaborative Mixed Reality (MR) is a popular area of research, but most work has focused on one-to-one systems in which the two collaborators are either co-located or remote from one another. For example, remote users might collaborate in a shared Virtual Reality (VR) system, or a local worker might use an Augmented Reality (AR) display to connect with a remote expert who helps them complete a task. A Mixed Presence system instead supports groups in which some collaborators share the same physical space while others join remotely.

Publications

Mixed Reality Collaboration through Sharing a Live Panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

Gun A. Lee, Theophilus Teo, Seungwon Kim, and Mark Billinghurst. 2017. Mixed reality collaboration through sharing a live panorama. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA '17). ACM, New York, NY, USA, Article 14, 4 pages. http://doi.acm.org/10.1145/3132787.3139203

@inproceedings{Lee:2017:MRC:3132787.3139203,

author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},

title = {Mixed Reality Collaboration Through Sharing a Live Panorama},

booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

series = {SA '17},

year = {2017},

isbn = {978-1-4503-5410-3},

location = {Bangkok, Thailand},

pages = {14:1--14:4},

articleno = {14},

numpages = {4},

url = {http://doi.acm.org/10.1145/3132787.3139203},

doi = {10.1145/3132787.3139203},

acmid = {3139203},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {panorama, remote collaboration, shared experience},

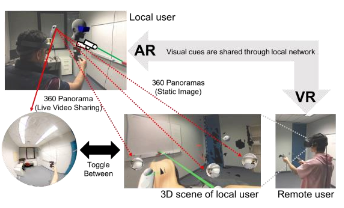

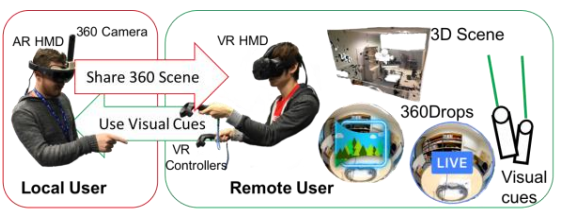

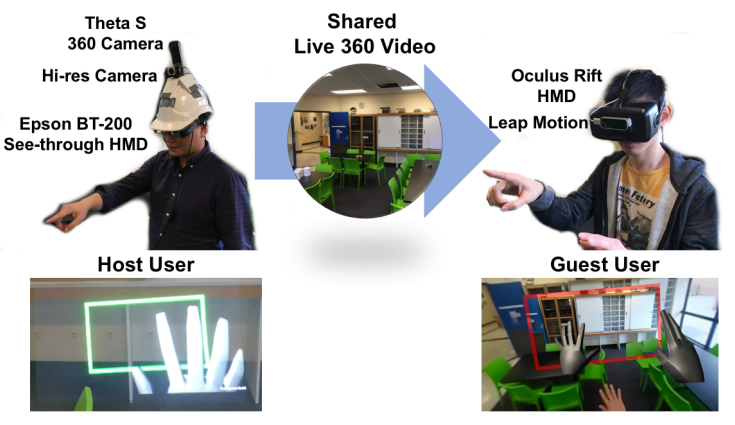

}One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates on how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gestures cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator’s focus. -

Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration

Theophilus Teo, Gun A. Lee, Mark Billinghurst, Matt Adcock

Theophilus Teo, Gun A. Lee, Mark Billinghurst, and Matt Adcock. 2018. Hand gestures and visual annotation in live 360 panorama-based mixed reality remote collaboration. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (OzCHI '18). ACM, New York, NY, USA, 406-410. DOI: https://doi.org/10.1145/3292147.3292200

@inproceedings{Teo:2018:HGV:3292147.3292200,

author = {Teo, Theophilus and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

title = {Hand Gestures and Visual Annotation in Live 360 Panorama-based Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 30th Australian Conference on Computer-Human Interaction},

series = {OzCHI '18},

year = {2018},

isbn = {978-1-4503-6188-0},

location = {Melbourne, Australia},

pages = {406--410},

numpages = {5},

url = {http://doi.acm.org/10.1145/3292147.3292200},

doi = {10.1145/3292147.3292200},

acmid = {3292200},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {gesture communication, mixed reality, remote collaboration},

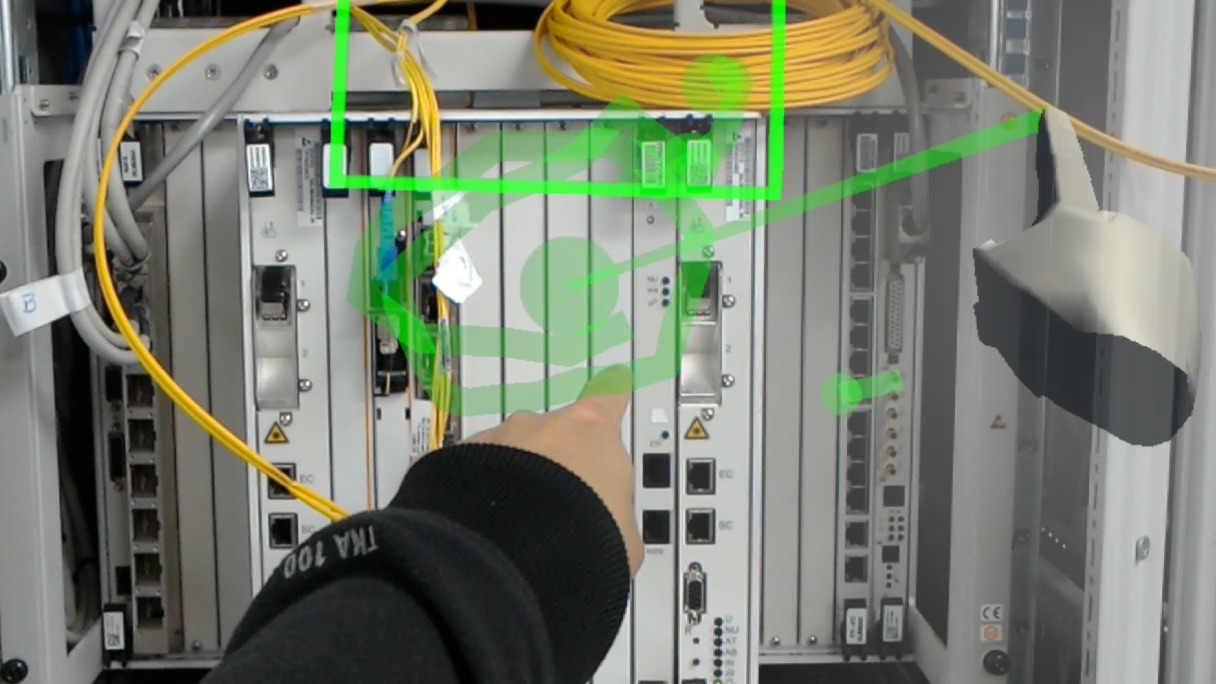

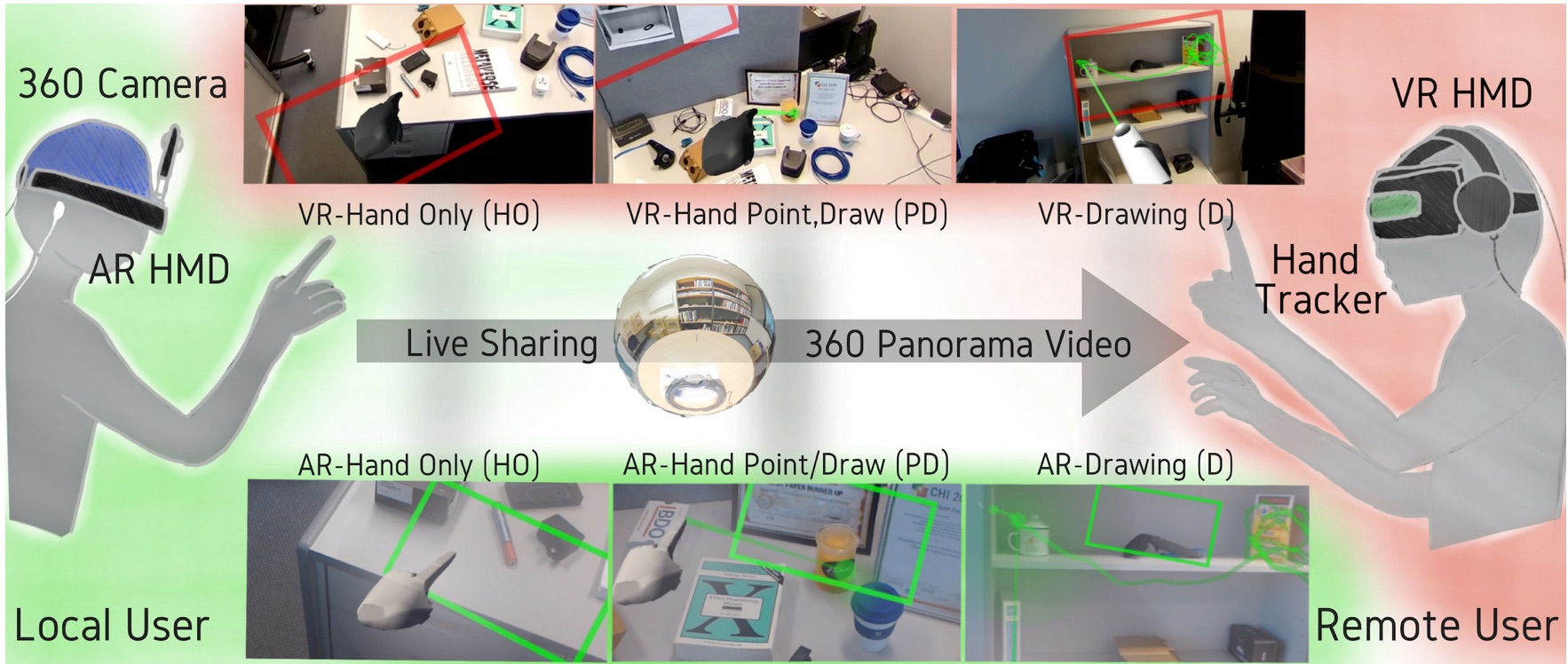

}In this paper, we investigate hand gestures and visual annotation cues overlaid in a live 360 panorama-based Mixed Reality remote collaboration. The prototype system captures 360 live panorama video of the surroundings of a local user and shares it with another person in a remote location. The two users wearing Augmented Reality or Virtual Reality head-mounted displays can collaborate using augmented visual communication cues such as virtual hand gestures, ray pointing, and drawing annotations. Our preliminary user evaluation comparing these cues found that using visual annotation cues (ray pointing and drawing annotation) helps local users perform collaborative tasks faster, easier, making less errors and with better understanding, compared to using only virtual hand gestures. -

Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction

Teo, T., Lawrence, L., Lee, G. A., Billinghurst, M., & Adcock, M.

Teo, T., Lawrence, L., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, April). Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 201). ACM.

@inproceedings{teo2019mixed,

title={Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction},

author={Teo, Theophilus and Lawrence, Louise and Lee, Gun A and Billinghurst, Mark and Adcock, Matt},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={201},

year={2019},

organization={ACM}

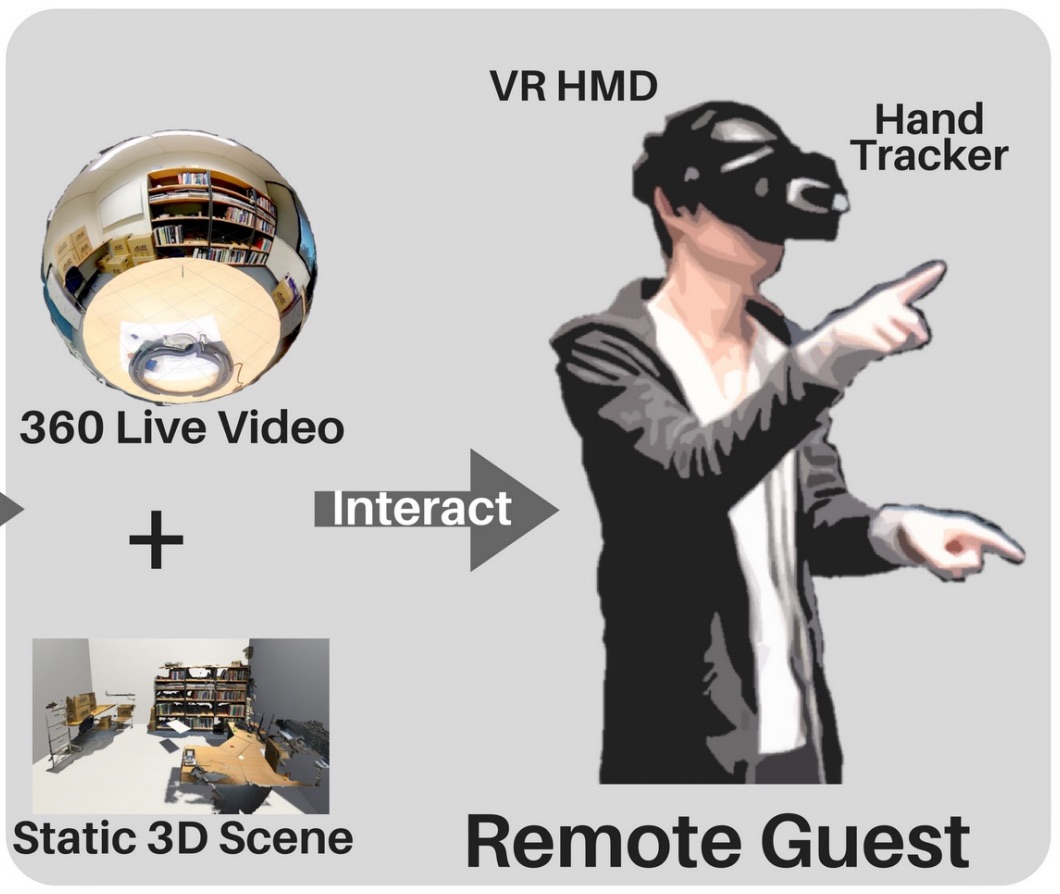

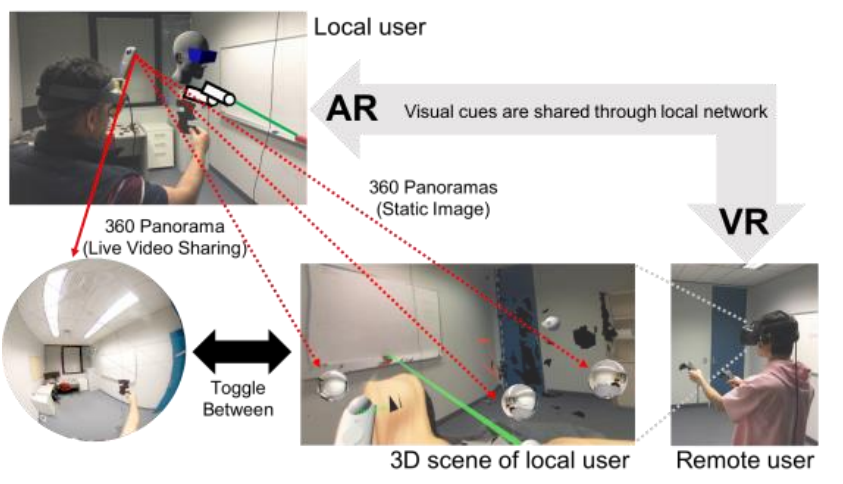

}Remote Collaboration using Virtual Reality (VR) and Augmented Reality (AR) has recently become a popular way for people from different places to work together. Local workers can collaborate with remote helpers by sharing 360-degree live video or 3D virtual reconstruction of their surroundings. However, each of these techniques has benefits and drawbacks. In this paper we explore mixing 360 video and 3D reconstruction together for remote collaboration, by preserving benefits of both systems while reducing drawbacks of each. We developed a hybrid prototype and conducted user study to compare benefits and problems of using 360 or 3D alone to clarify the needs for mixing the two, and also to evaluate the prototype system. We found participants performed significantly better on collaborative search tasks in 360 and felt higher social presence, yet 3D also showed potential to complement. Participant feedback collected after trying our hybrid system provided directions for improvement. -

Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration

Teo, T., Lee, G. A., Billinghurst, M., & Adcock, M.

Teo, T., Lee, G. A., Billinghurst, M., & Adcock, M. (2019, March). Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 1187-1188). IEEE.

@inproceedings{teo2019supporting,

title={Supporting Visual Annotation Cues in a Live 360 Panorama-based Mixed Reality Remote Collaboration},

author={Teo, Theophilus and Lee, Gun A and Billinghurst, Mark and Adcock, Matt},

booktitle={2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={1187--1188},

year={2019},

organization={IEEE}

}

We propose enhancing live 360 panorama-based Mixed Reality (MR) remote collaboration through supporting visual annotation cues. Prior work on live 360 panorama-based collaboration used MR visualization to overlay visual cues, such as view frames and virtual hands, yet they were not registered onto the shared physical workspace, hence had limitations in accuracy for pointing or marking objects. Our prototype system uses spatial mapping and tracking feature of an Augmented Reality head-mounted display to show visual annotation cues accurately registered onto the physical environment. We describe the design and implementation details of our prototype system, and discuss on how such feature could help improve MR remote collaboration.

A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes

Theophilus Teo, Ashkan F. Hayati, Gun A. Lee, Mark Billinghurst, Matt Adcock

@inproceedings{teo2019technique,

title={A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in 3D Reconstructed Scenes},

author={Teo, Theophilus and Hayati, Ashkan F. and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

booktitle={25th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2019}

}

Mixed Reality (MR) remote collaboration provides an enhanced immersive experience where a remote user can provide verbal and nonverbal assistance to a local user to increase the efficiency and performance of the collaboration. This is usually achieved by sharing the local user's environment through live 360 video or a 3D scene, and using visual cues to gesture or point at real objects allowing for better understanding and collaborative task performance. While most of prior work used one of the methods to capture the surrounding environment, there may be situations where users have to choose between using 360 panoramas or 3D scene reconstruction to collaborate, as each have unique benefits and limitations. In this paper we designed a prototype system that combines 360 panoramas into a 3D scene to introduce a novel way for users to interact and collaborate with each other. We evaluated the prototype through a user study which compared the usability and performance of our proposed approach to live 360 video collaborative system, and we found that participants enjoyed using different ways to access the local user's environment although it took them longer time to learn to use our system. We also collected subjective feedback for future improvements and provide directions for future research.

OmniGlobeVR: A Collaborative 360° Communication System for VR

Zhengqing Li, Liwei Chan, Theophilus Teo, Hideki Koike

Zhengqing Li, Liwei Chan, Theophilus Teo, and Hideki Koike. 2020. OmniGlobeVR: A Collaborative 360° Communication System for VR. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–8. DOI: https://doi.org/10.1145/3334480.3382869

@inproceedings{li2020omniglobevr,

title={OmniGlobeVR: A Collaborative 360 Communication System for VR},

author={Li, Zhengqing and Chan, Liwei and Teo, Theophilus and Koike, Hideki},

booktitle={Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems},

pages={1--8},

year={2020}

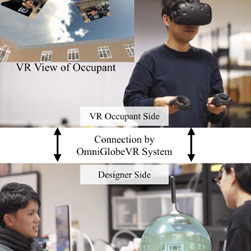

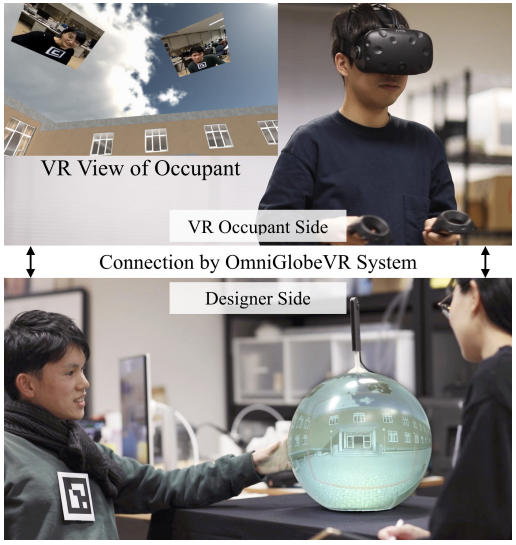

}In this paper, we propose OmniGlobeVR, a novel collaboration tool based on an asymmetric cooperation system that supports communication and cooperation between a VR user (occupant) and multiple non-VR users (designers) across the virtual and physical platform. The OmniGlobeVR allows designer(s) to access the content of a VR space from any point of view using two view modes: 360° first-person mode and third-person mode. Furthermore, a proper interface of a shared gaze awareness cue is designed to enhance communication between the occupant and the designer(s). The system also has a face window feature that allows designer(s) to share their facial expressions and upper body gesture with the occupant in order to exchange and express information in a nonverbal context. Combined together, the OmniGlobeVR allows collaborators between the VR and non-VR platforms to cooperate while allowing designer(s) to easily access physical assets while working synchronously with the occupant in the VR space. -

Exploring interaction techniques for 360 panoramas inside a 3D reconstructed scene for mixed reality remote collaboration

Theophilus Teo, Mitchell Norman, Gun A. Lee, Mark Billinghurst & Matt Adcock

T. Teo, M. Norman, G. A. Lee, M. Billinghurst and M. Adcock. “Exploring interaction techniques for 360 panoramas inside a 3D reconstructed scene for mixed reality remote collaboration.” In: Journal on Multimodal User Interfaces (JMUI), 2020.

@article{teo2020exploring,

title={Exploring interaction techniques for 360 panoramas inside a 3D reconstructed scene for mixed reality remote collaboration},

author={Teo, Theophilus and Norman, Mitchell and Lee, Gun A and Billinghurst, Mark and Adcock, Matt},

journal={Journal on Multimodal User Interfaces},

volume={14},

pages={373--385},

year={2020},

publisher={Springer}

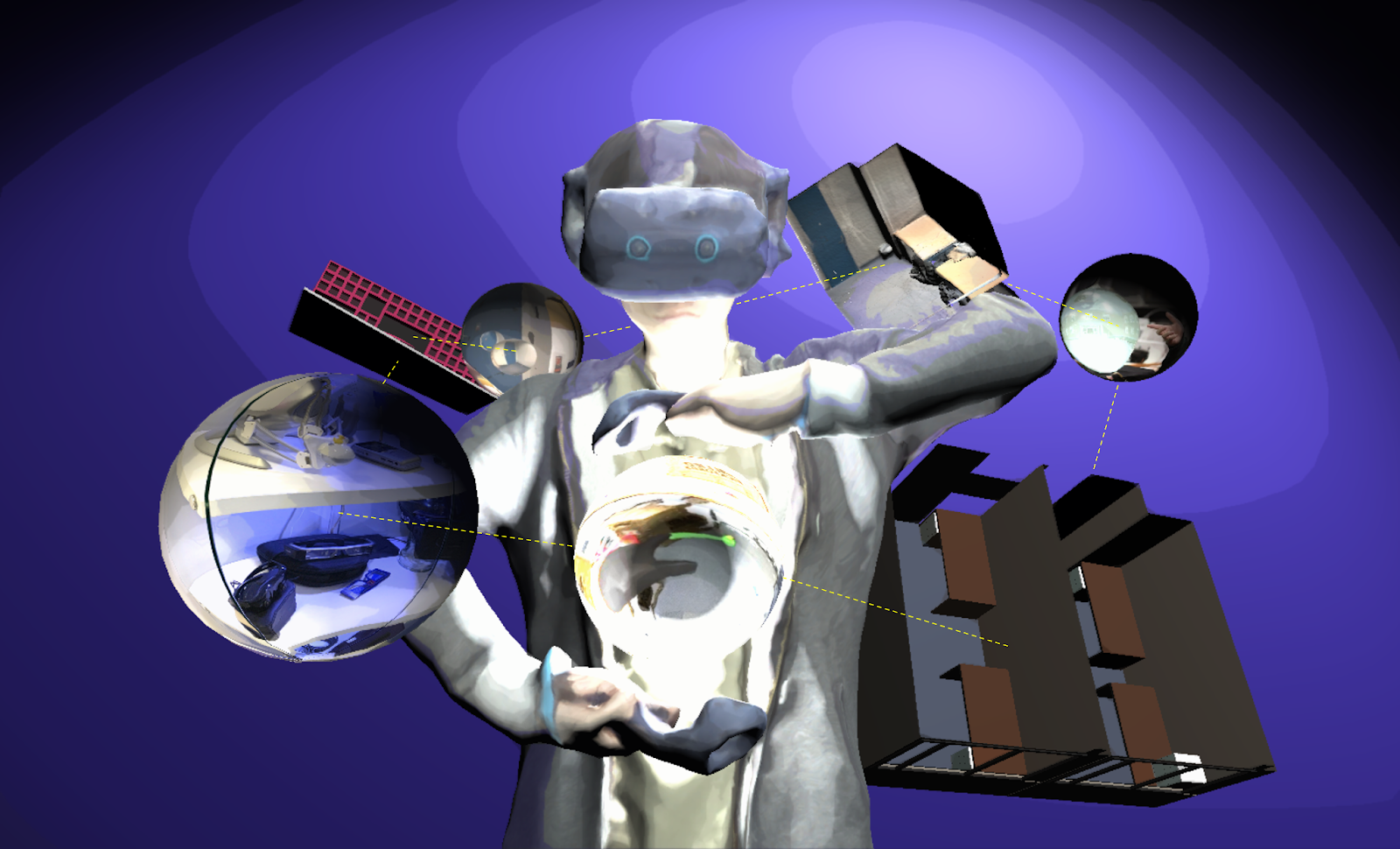

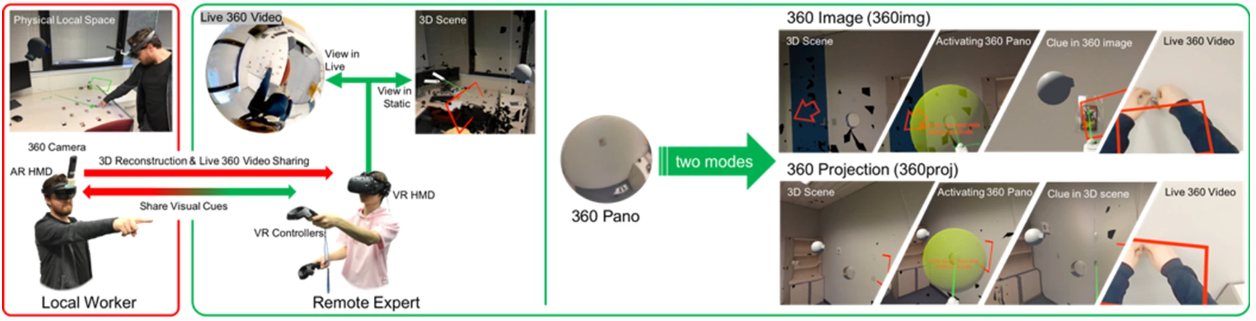

}Remote collaboration using mixed reality (MR) enables two separated workers to collaborate by sharing visual cues. A local worker can share his/her environment to the remote worker for a better contextual understanding. However, prior techniques were using either 360 video sharing or a complicated 3D reconstruction configuration. This limits the interactivity and practicality of the system. In this paper we show an interactive and easy-to-configure MR remote collaboration technique enabling a local worker to easily share his/her environment by integrating 360 panorama images into a low-cost 3D reconstructed scene as photo-bubbles and projective textures. This enables the remote worker to visit past scenes on either an immersive 360 panoramic scenery, or an interactive 3D environment. We developed a prototype and conducted a user study comparing the two modes of how 360 panorama images could be used in a remote collaboration system. Results suggested that both photo-bubbles and projective textures can provide high social presence, co-presence and low cognitive load for solving tasks while each have its advantage and limitations. For example, photo-bubbles are good for a quick navigation inside the 3D environment without depth perception while projective textures are good for spatial understanding but require physical efforts. -

OmniGlobeVR: A Collaborative 360-Degree Communication System for VR

Zhengqing Li, Theophilus Teo, Liwei Chan, Gun Lee, Matt Adcock, Mark Billinghurst, Hideki Koike

Z. Li, T. Teo, L. Chan, G. Lee, M. Adcock, M. Billinghurst, H. Koike. “OmniGlobeVR: A Collaborative 360-Degree Communication System for VR”. In Proceedings of the 2020 ACM Designing Interactive Systems Conference (DIS 2020). ACM, 2020.

@inproceedings{10.1145/3357236.3395429,

author = {Li, Zhengqing and Teo, Theophilus and Chan, Liwei and Lee, Gun and Adcock, Matt and Billinghurst, Mark and Koike, Hideki},

title = {OmniGlobeVR: A Collaborative 360-Degree Communication System for VR},

year = {2020},

isbn = {9781450369749},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3357236.3395429},

doi = {10.1145/3357236.3395429},

abstract = {In this paper, we present a novel collaboration tool, OmniGlobeVR, which is an asymmetric system that supports communication and collaboration between a VR user (occupant) and multiple non-VR users (designers) across the virtual and physical platform. OmniGlobeVR allows designer(s) to explore the VR space from any point of view using two view modes: a 360° first-person mode and a third-person mode. In addition, a shared gaze awareness cue is provided to further enhance communication between the occupant and the designer(s). Finally, the system has a face window feature that allows designer(s) to share their facial expressions and upper body view with the occupant for exchanging and expressing information using nonverbal cues. We conducted a user study to evaluate the OmniGlobeVR, comparing three conditions: (1) first-person mode with the face window, (2) first-person mode with a solid window, and (3) third-person mode with the face window. We found that the first-person mode with the face window required significantly less mental effort, and provided better spatial presence, usability, and understanding of the partner's focus. We discuss the design implications of these results and directions for future research.},

booktitle = {Proceedings of the 2020 ACM Designing Interactive Systems Conference},

pages = {615--625},

numpages = {11},

keywords = {virtual reality, communication, collaboration, mixed reality, spherical display, 360-degree camera},

location = {Eindhoven, Netherlands},

series = {DIS '20}

}

In this paper, we present a novel collaboration tool, OmniGlobeVR, which is an asymmetric system that supports communication and collaboration between a VR user (occupant) and multiple non-VR users (designers) across the virtual and physical platform. OmniGlobeVR allows designer(s) to explore the VR space from any point of view using two view modes: a 360° first-person mode and a third-person mode. In addition, a shared gaze awareness cue is provided to further enhance communication between the occupant and the designer(s). Finally, the system has a face window feature that allows designer(s) to share their facial expressions and upper body view with the occupant for exchanging and expressing information using nonverbal cues. We conducted a user study to evaluate the OmniGlobeVR, comparing three conditions: (1) first-person mode with the face window, (2) first-person mode with a solid window, and (3) third-person mode with the face window. We found that the first-person mode with the face window required significantly less mental effort, and provided better spatial presence, usability, and understanding of the partner's focus. We discuss the design implications of these results and directions for future research.

360Drops: Mixed Reality Remote Collaboration using 360 Panoramas within the 3D Scene

Theophilus Teo, Gun A. Lee, Mark Billinghurst, Matt Adcock

T. Teo, G. A. Lee, M. Billinghurst and M. Adcock. “360Drops: Mixed Reality Remote Collaboration using 360° Panoramas within the 3D Scene.” In: ACM SIGGRAPH Conference and Exhibition on Computer Graphics & Interactive Technologies in Asia (SA 2019), Brisbane, Australia, 2019.

@inproceedings{10.1145/3355049.3360517,

author = {Teo, Theophilus and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

title = {360Drops: Mixed Reality Remote Collaboration Using 360 Panoramas within the 3D Scene},

year = {2019},

isbn = {9781450369428},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3355049.3360517},

doi = {10.1145/3355049.3360517},

abstract = {Mixed Reality (MR) remote guidance has become a practical solution for collaboration that includes nonverbal communication. This research focuses on integrating different types of MR remote collaboration systems together allowing a new variety for remote collaboration to extend its features and user experience. In this demonstration, we present 360Drops, a MR remote collaboration system that uses 360 panorama images within 3D reconstructed scenes. We introduce a new technique to interact with multiple 360 Panorama Spheres in an immersive 3D reconstructed scene. This allows a remote user to switch between multiple 360 scenes “live/static, past/present,” placed in a 3D reconstructed scene to promote a better understanding of space and interactivity through verbal and nonverbal communication. We present the system features and user experience to the attendees of SIGGRAPH Asia 2019 through a live demonstration.},

booktitle = {SIGGRAPH Asia 2019 Emerging Technologies},

pages = {1--2},

numpages = {2},

keywords = {Remote Collaboration, Shared Experience, Mixed Reality},

location = {Brisbane, QLD, Australia},

series = {SA '19}

}

Mixed Reality (MR) remote guidance has become a practical solution for collaboration that includes nonverbal communication. This research focuses on integrating different types of MR remote collaboration systems together allowing a new variety for remote collaboration to extend its features and user experience. In this demonstration, we present 360Drops, a MR remote collaboration system that uses 360 panorama images within 3D reconstructed scenes. We introduce a new technique to interact with multiple 360 Panorama Spheres in an immersive 3D reconstructed scene. This allows a remote user to switch between multiple 360 scenes “live/static, past/present,” placed in a 3D reconstructed scene to promote a better understanding of space and interactivity through verbal and nonverbal communication. We present the system features and user experience to the attendees of SIGGRAPH Asia 2019 through a live demonstration.

A Technique for Mixed Reality Remote Collaboration using 360° Panoramas in 3D Reconstructed Scenes

Theophilus Teo, Ashkan F. Hayati, Gun A. Lee, Mark Billinghurst, Matt Adcock

T. Teo, A. F. Hayati, G. A. Lee, M. Billinghurst and M. Adcock. “A Technique for Mixed Reality Remote Collaboration using 360° Panoramas in 3D Reconstructed Scenes.” In: ACM Symposium on Virtual Reality Software and Technology (VRST), Sydney, Australia, 2019.

@inproceedings{teo2019technique,

title={A Technique for Mixed Reality Remote Collaboration using 360 Panoramas in {3D} Reconstructed Scenes},

author={Teo, Theophilus and Hayati, Ashkan F. and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

booktitle={Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2019}

}

Mixed Reality (MR) remote collaboration provides an enhanced immersive experience where a remote user can provide verbal and nonverbal assistance to a local user to increase the efficiency and performance of the collaboration. This is usually achieved by sharing the local user's environment through live 360 video or a 3D scene, and using visual cues to gesture or point at real objects allowing for better understanding and collaborative task performance. While most of prior work used one of the methods to capture the surrounding environment, there may be situations where users have to choose between using 360 panoramas or 3D scene reconstruction to collaborate, as each have unique benefits and limitations. In this paper we designed a prototype system that combines 360 panoramas into a 3D scene to introduce a novel way for users to interact and collaborate with each other. We evaluated the prototype through a user study which compared the usability and performance of our proposed approach to live 360 video collaborative system, and we found that participants enjoyed using different ways to access the local user's environment although it took them longer time to learn to use our system. We also collected subjective feedback for future improvements and provide directions for future research.

Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction

Theophilus Teo, Louise Lawrence, Gun A. Lee, Mark Billinghurst, Matt Adcock

T. Teo, L. Lawrence, G. A. Lee, M. Billinghurst, and M. Adcock. (2019). “Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction”. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI '19). ACM, New York, NY, USA, Paper 201, 14 pages.

@inproceedings{10.1145/3290605.3300431,

author = {Teo, Theophilus and Lawrence, Louise and Lee, Gun A. and Billinghurst, Mark and Adcock, Matt},

title = {Mixed Reality Remote Collaboration Combining 360 Video and 3D Reconstruction},

year = {2019},

isbn = {9781450359702},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3290605.3300431},

doi = {10.1145/3290605.3300431},

abstract = {Remote Collaboration using Virtual Reality (VR) and Augmented Reality (AR) has recently become a popular way for people from different places to work together. Local workers can collaborate with remote helpers by sharing 360-degree live video or 3D virtual reconstruction of their surroundings. However, each of these techniques has benefits and drawbacks. In this paper we explore mixing 360 video and 3D reconstruction together for remote collaboration, by preserving benefits of both systems while reducing drawbacks of each. We developed a hybrid prototype and conducted user study to compare benefits and problems of using 360 or 3D alone to clarify the needs for mixing the two, and also to evaluate the prototype system. We found participants performed significantly better on collaborative search tasks in 360 and felt higher social presence, yet 3D also showed potential to complement. Participant feedback collected after trying our hybrid system provided directions for improvement.},

booktitle = {Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages = {1--14},

numpages = {14},

keywords = {interaction methods, remote collaboration, 3d scene reconstruction, mixed reality, virtual reality, 360 panorama},

location = {Glasgow, Scotland, UK},

series = {CHI '19}

}

Remote Collaboration using Virtual Reality (VR) and Augmented Reality (AR) has recently become a popular way for people from different places to work together. Local workers can collaborate with remote helpers by sharing 360-degree live video or 3D virtual reconstruction of their surroundings. However, each of these techniques has benefits and drawbacks. In this paper we explore mixing 360 video and 3D reconstruction together for remote collaboration, by preserving benefits of both systems while reducing drawbacks of each. We developed a hybrid prototype and conducted user study to compare benefits and problems of using 360 or 3D alone to clarify the needs for mixing the two, and also to evaluate the prototype system. We found participants performed significantly better on collaborative search tasks in 360 and felt higher social presence, yet 3D also showed potential to complement. Participant feedback collected after trying our hybrid system provided directions for improvement.

Mixed reality collaboration through sharing a live panorama

Gun A. Lee, Theophilus Teo, Seungwon Kim, Mark Billinghurst

G. A. Lee, T. Teo, S. Kim, and M. Billinghurst. (2017). “Mixed reality collaboration through sharing a live panorama”. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (SA 2017). ACM, New York, NY, USA, Article 14, 4 pages.

@inproceedings{10.1145/3132787.3139203,

author = {Lee, Gun A. and Teo, Theophilus and Kim, Seungwon and Billinghurst, Mark},

title = {Mixed Reality Collaboration through Sharing a Live Panorama},

year = {2017},

isbn = {9781450354103},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3132787.3139203},

doi = {10.1145/3132787.3139203},

abstract = {One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates on how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gestures cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator's focus.},

booktitle = {SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

articleno = {14},

numpages = {4},

keywords = {shared experience, panorama, remote collaboration},

location = {Bangkok, Thailand},

series = {SA '17}

}

One of the popular features on modern social networking platforms is sharing live 360 panorama video. This research investigates on how to further improve shared live panorama based collaborative experiences by applying Mixed Reality (MR) technology. SharedSphere is a wearable MR remote collaboration system. In addition to sharing a live captured immersive panorama, SharedSphere enriches the collaboration through overlaying MR visualisation of non-verbal communication cues (e.g., view awareness and gestures cues). User feedback collected through a preliminary user study indicated that sharing of live 360 panorama video was beneficial by providing a more immersive experience and supporting view independence. Users also felt that the view awareness cues were helpful for understanding the remote collaborator's focus.