Youngho Lee

Visiting Researcher

Projects

-

Empathy Glasses

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.

Publications

-

Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst. 2017. Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 197-204. http://dx.doi.org/10.2312/egve.20171359

@inproceedings {egve.20171359,

booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},

title = {{Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze}},

author = {Lee, Gun A. and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

year = {2017},

publisher = {The Eurographics Association},

ISSN = {1727-530X},

ISBN = {978-3-03868-038-3},

DOI = {10.2312/egve.20171359}

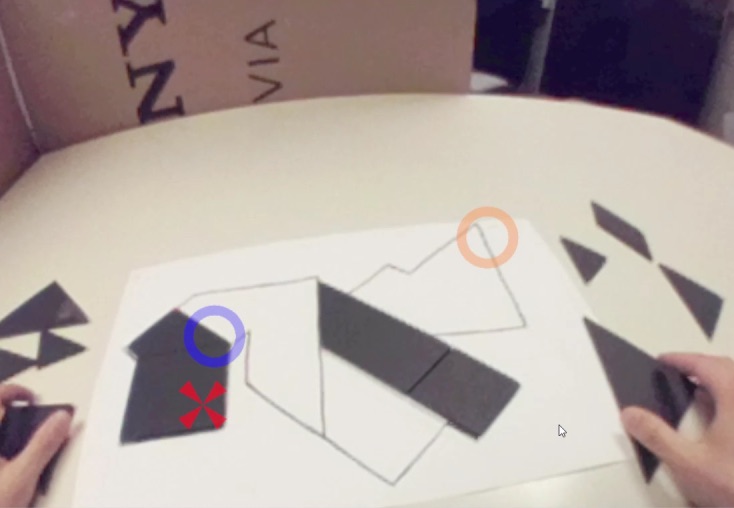

}To improve remote collaboration in video conferencing systems, researchers have been investigating augmenting visual cues onto a shared live video stream. In such systems, a person wearing a head-mounted display (HMD) and camera can share her view of the surrounding real-world with a remote collaborator to receive assistance on a real-world task. While this concept of augmented video conferencing (AVC) has been actively investigated, there has been little research on how sharing gaze cues might affect the collaboration in video conferencing. This paper investigates how sharing gaze in both directions between a local worker and remote helper in an AVC system affects the collaboration and communication. Using a prototype AVC system that shares the eye gaze of both users, we conducted a user study that compares four conditions with different combinations of eye gaze sharing between the two users. The results showed that sharing each other’s gaze significantly improved collaboration and communication. -

A Remote Collaboration System with Empathy Glasses

Y. Lee, K. Masai, K. Kunze, M. Sugimoto and M. Billinghurst. 2016. A Remote Collaboration System with Empathy Glasses. 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct)(ISMARW), Merida, pp. 342-343. http://doi.ieeecomputersociety.org/10.1109/ISMAR-Adjunct.2016.0112

@INPROCEEDINGS{7836533,

author = {Y. Lee and K. Masai and K. Kunze and M. Sugimoto and M. Billinghurst},

booktitle = {2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct)(ISMARW)},

title = {A Remote Collaboration System with Empathy Glasses},

year = {2017},

volume = {00},

number = {},

pages = {342-343},

keywords={Collaboration;Glass;Heart rate;Biomedical monitoring;Cameras;Hardware;Computers},

doi = {10.1109/ISMAR-Adjunct.2016.0112},

url = {doi.ieeecomputersociety.org/10.1109/ISMAR-Adjunct.2016.0112},

ISSN = {},

month={Sept.}

}

View: http://doi.ieeecomputersociety.org/10.1109/ISMAR-Adjunct.2016.0112

Video: https://www.youtube.com/watch?v=CdgWVDbMwp4

In this paper, we describe a demonstration of a remote collaboration system using Empathy Glasses. Using our system, a local worker can share a view of their environment with a remote helper, as well as their gaze, facial expressions, and physiological signals. The remote user can send back visual cues via a see-through head-mounted display to help the local worker perform better on a real-world task. The system also provides some indication of the remote user's facial expression using face tracking technology.

-

A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M. (2017, November). A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos (pp. 1-2). Eurographics Association.

@inproceedings{lee2017gaze,

title={A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs},

author={Lee, Youngho and Piumsomboon, Thammathip and Ens, Barrett and Lee, Gun and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos},

pages={1--2},

year={2017},

organization={Eurographics Association}

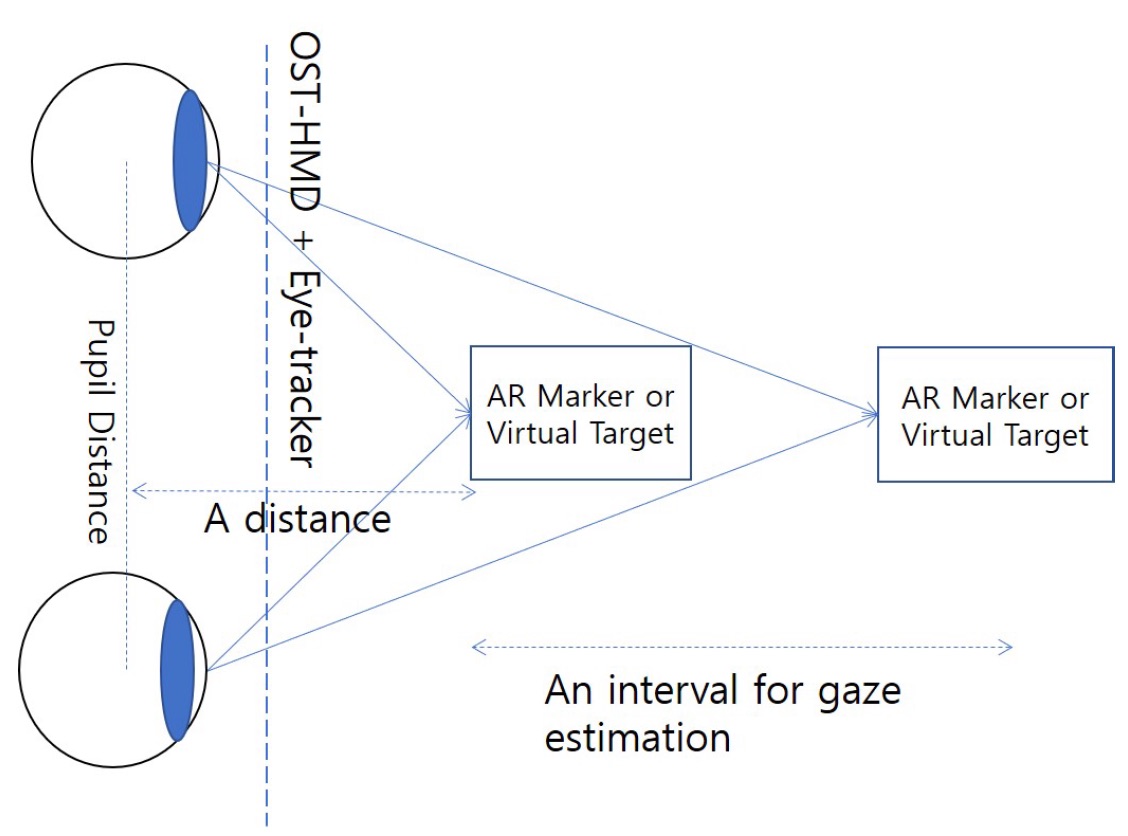

}The rapid developement of machine learning algorithms can be leveraged for potential software solutions in many domains including techniques for depth estimation of human eye gaze. In this paper, we propose an implicit and continuous data acquisition method for 3D gaze depth estimation for an optical see-Through head mounted display (OST-HMD) equipped with an eye tracker. Our method constantly monitoring and generating user gaze data for training our machine learning algorithm. The gaze data acquired through the eye-tracker include the inter-pupillary distance (IPD) and the gaze distance to the real andvirtual target for each eye.

-

Exploring enhancements for remote mixed reality collaboration

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M. (2017, November). Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 16). ACM.

@inproceedings{piumsomboon2017exploring,

title={Exploring enhancements for remote mixed reality collaboration},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Youngho and Lee, Gun and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={16},

year={2017},

organization={ACM}

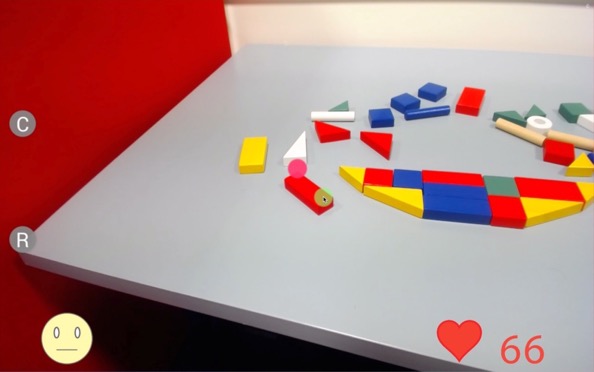

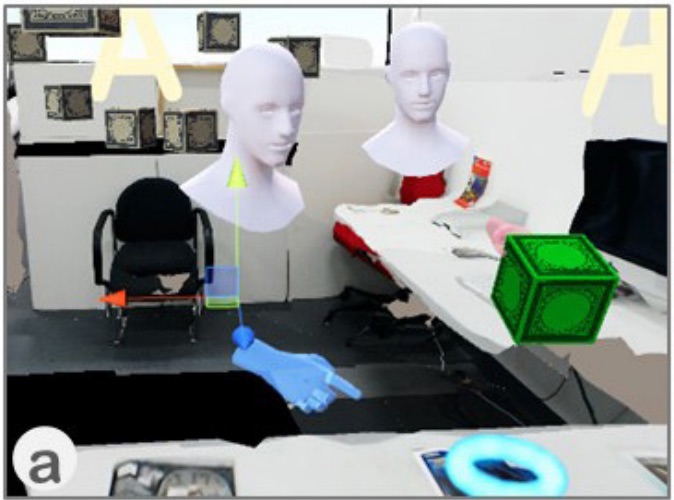

}In this paper, we explore techniques for enhancing remote Mixed Reality (MR) collaboration in terms of communication and interaction. We created CoVAR, a MR system for remote collaboration between an Augmented Reality (AR) and Augmented Virtuality (AV) users. Awareness cues and AV-Snap-to-AR interface were proposed for enhancing communication. Collaborative natural interaction, and AV-User-Body-Scaling were implemented for enhancing interaction. We conducted an exploratory study examining the awareness cues and the collaborative gaze, and the results showed the benefits of the proposed techniques for enhancing communication and interaction.