Nastaran Saffaryazdi

PhD Student

Nastaran is a PhD student in the Empathic Computing Laboratory at the University of Auckland, under the supervision of Prof. Mark Billinghurst. Her research focuses on collecting emotional data and recognizing emotions during conversation using behavioural cues and physiological changes.

She received her master's degree in Computer Architecture from Isfahan University of Technology in Iran, specializing in medical image processing. Before her PhD, she worked as a lecturer at several universities in Iran and as a software developer at the leading provider of banking software solutions in Iran.

Publications

-

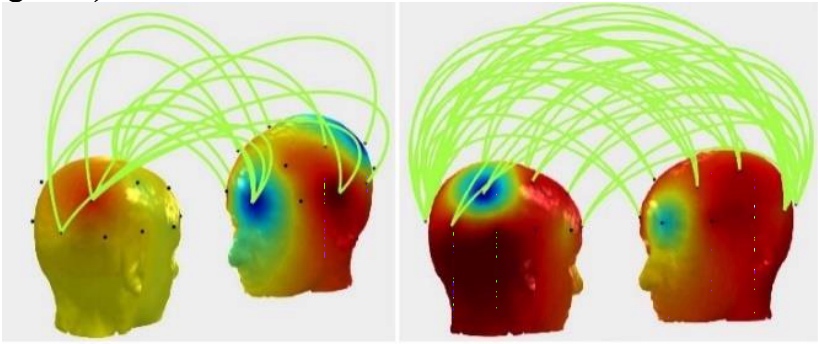

Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning

Amit Barde, Nastaran Saffaryazdi, P. Withana, N. Patel, Prasanth Sasikumar, Mark Billinghurst

Barde, A., Saffaryazdi, N., Withana, P., Patel, N., Sasikumar, P., & Billinghurst, M. (2019, October). Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning. In 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 338-339). IEEE.

@inproceedings{barde2019inter,

title={Inter-brain connectivity: Comparisons between real and virtual environments using hyperscanning},

author={Barde, Amit and Saffaryazdi, Nastaran and Withana, Pawan and Patel, Nakul and Sasikumar, Prasanth and Billinghurst, Mark},

booktitle={2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={338--339},

year={2019},

organization={IEEE}

}

Inter-brain connectivity between pairs of people was explored during a finger tracking task in the real-world and in Virtual Reality (VR). This was facilitated by the use of a dual EEG set-up that allowed us to use hyperscanning to simultaneously record the neural activity of both participants. We found that similar levels of inter-brain synchrony can be elicited in the real-world and VR for the same task. This is the first time that hyperscanning has been used to compare brain activity for the same task performed in real and virtual environments.

-

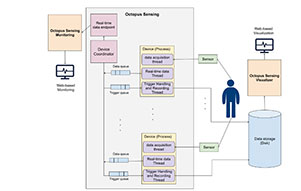

Octopus Sensing: A Python library for human behavior studies

Nastaran Saffaryazdi, Aidin Gharibnavaz, Mark Billinghurst

Saffaryazdi, N., Gharibnavaz, A., & Billinghurst, M. (2022). Octopus Sensing: A Python library for human behavior studies. Journal of Open Source Software, 7(71), 4045.

@article{saffaryazdi2022octopus,

title={Octopus Sensing: A Python library for human behavior studies},

author={Saffaryazdi, Nastaran and Gharibnavaz, Aidin and Billinghurst, Mark},

journal={Journal of Open Source Software},

volume={7},

number={71},

pages={4045},

year={2022}

}

Designing user studies and collecting data is critical to exploring and automatically recognizing human behavior. It is currently possible to use a range of sensors to capture heart rate, brain activity, skin conductance, and a variety of different physiological cues. These data can be combined to provide information about a user’s emotional state, cognitive load, or other factors. However, even when data are collected correctly, synchronizing data from multiple sensors is time-consuming and prone to errors. Failure to record and synchronize data is likely to result in errors in analysis and results, as well as the need to repeat the time-consuming experiments several times. To overcome these challenges, Octopus Sensing facilitates synchronous data acquisition from various sources and provides some utilities for designing user studies, real-time monitoring, and offline data visualization.

The primary aim of Octopus Sensing is to provide a simple scripting interface so that people with basic or no software development skills can define sensor-based experiment scenarios with less effort.

-

Emotion Recognition in Conversations Using Brain and Physiological Signals

Nastaran Saffaryazdi, Yenushka Goonesekera, Nafiseh Saffaryazdi, Nebiyou Daniel Hailemariam, Ebasa Girma Temesgen, Suranga Nanayakkara, Elizabeth Broadbent, Mark Billinghurst

Saffaryazdi, N., Goonesekera, Y., Saffaryazdi, N., Hailemariam, N. D., Temesgen, E. G., Nanayakkara, S., ... & Billinghurst, M. (2022, March). Emotion Recognition in Conversations Using Brain and Physiological Signals. In 27th International Conference on Intelligent User Interfaces (pp. 229-242).

@inproceedings{saffaryazdi2022emotion,

title={Emotion recognition in conversations using brain and physiological signals},

author={Saffaryazdi, Nastaran and Goonesekera, Yenushka and Saffaryazdi, Nafiseh and Hailemariam, Nebiyou Daniel and Temesgen, Ebasa Girma and Nanayakkara, Suranga and Broadbent, Elizabeth and Billinghurst, Mark},

booktitle={27th International Conference on Intelligent User Interfaces},

pages={229--242},

year={2022}

}

Emotions are complicated psycho-physiological processes that are related to numerous external and internal changes in the body. They play an essential role in human-human interaction and can be important for human-machine interfaces. Automatically recognizing emotions in conversation could be applied in many application domains like health-care, education, social interactions, entertainment, and more. Facial expressions, speech, and body gestures are primary cues that have been widely used for recognizing emotions in conversation. However, these cues can be ineffective as they cannot reveal underlying emotions when people involuntarily or deliberately conceal their emotions. Researchers have shown that analyzing brain activity and physiological signals can lead to more reliable emotion recognition since they generally cannot be controlled. However, these body responses in emotional situations have been rarely explored in interactive tasks like conversations. This paper explores and discusses the performance and challenges of using brain activity and other physiological signals in recognizing emotions in a face-to-face conversation. We present an experimental setup for stimulating spontaneous emotions using a face-to-face conversation and creating a dataset of the brain and physiological activity. We then describe our analysis strategies for recognizing emotions using Electroencephalography (EEG), Photoplethysmography (PPG), and Galvanic Skin Response (GSR) signals in subject-dependent and subject-independent approaches. Finally, we describe new directions for future research in conversational emotion recognition and the limitations and challenges of our approach.