Mahnoor Naeem

Virtual Intern

Mahnoor is currently an intern at the Empathic Computing Lab. She completed her bachelor's degree in Computer Systems Engineering at the University of Engineering and Technology Peshawar (Pakistan) in 2017. She works remotely as an AR/VR Unity developer in the US.

Her research at ECL explores different gaze cues for remote collaboration in AR/VR headsets.

Publications

-

eyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 283, 1–7. https://doi.org/10.1145/3411763.3451844

@inproceedings{10.1145/3411763.3451844,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: Using Bi-Directional Gaze Behavioural Cues to Improve Mixed Reality Remote Collaboration},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451844},

doi = {10.1145/3411763.3451844},

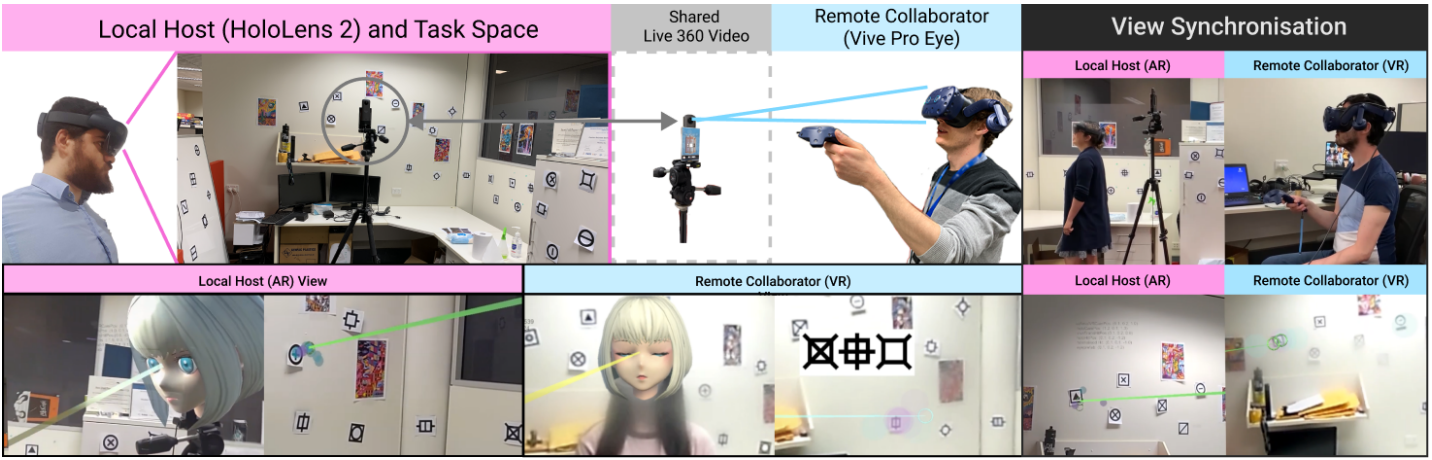

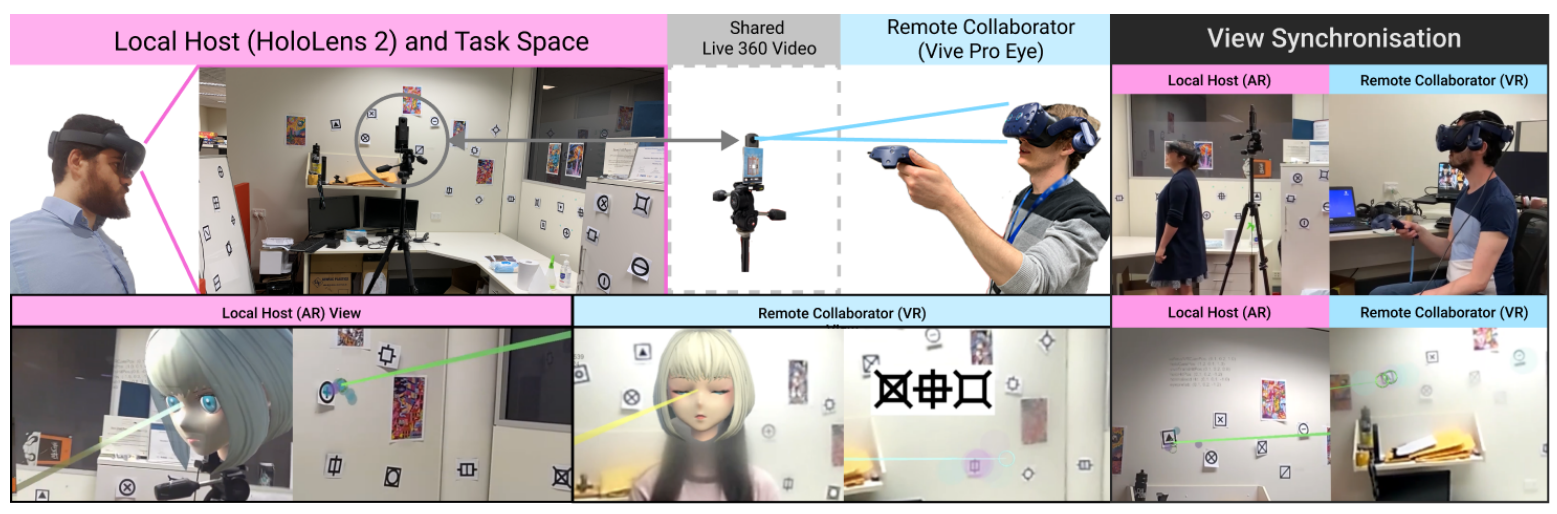

abstract = {Gaze is one of the most important communication cues in face-to-face collaboration. However, in remote collaboration, sharing dynamic gaze information is more difficult. In this research, we investigate how sharing gaze behavioural cues can improve remote collaboration in a Mixed Reality (MR) environment. To do this, we developed eyemR-Vis, a 360 panoramic Mixed Reality remote collaboration system that shows gaze behavioural cues as bi-directional spatial virtual visualisations shared between a local host and a remote collaborator. Preliminary results from an exploratory study indicate that using virtual cues to visualise gaze behaviour has the potential to increase co-presence, improve gaze awareness, encourage collaboration, and is inclined to be less physically demanding or mentally distracting.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {283},

numpages = {7},

keywords = {Human-Computer Interaction, Gaze Visualisation, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}

-

eyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, Mark Billinghurst

Allison Jing, Kieran William May, Mahnoor Naeem, Gun Lee, and Mark Billinghurst. 2021. EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (CHI EA '21). Association for Computing Machinery, New York, NY, USA, Article 188, 1–4. https://doi.org/10.1145/3411763.3451545

@inproceedings{10.1145/3411763.3451545,

author = {Jing, Allison and May, Kieran William and Naeem, Mahnoor and Lee, Gun and Billinghurst, Mark},

title = {EyemR-Vis: A Mixed Reality System to Visualise Bi-Directional Gaze Behavioural Cues Between Remote Collaborators},

year = {2021},

isbn = {9781450380959},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3411763.3451545},

doi = {10.1145/3411763.3451545},

abstract = {This demonstration shows eyemR-Vis, a 360 panoramic Mixed Reality collaboration system that translates gaze behavioural cues to bi-directional visualisations between a local host (AR) and a remote collaborator (VR). The system is designed to share dynamic gaze behavioural cues as bi-directional spatial virtual visualisations between a local host and a remote collaborator. This enables richer communication of gaze through four visualisation techniques: browse, focus, mutual-gaze, and fixated circle-map. Additionally, our system supports simple bi-directional avatar interaction as well as panoramic video zoom. This makes interaction in the normally constrained remote task space more flexible and relatively natural. By showing visual communication cues that are physically inaccessible in the remote task space through reallocating and visualising the existing ones, our system aims to provide a more engaging and effective remote collaboration experience.},

booktitle = {Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

articleno = {188},

numpages = {4},

keywords = {Gaze Visualisation, Human-Computer Interaction, Mixed Reality Remote Collaboration, CSCW},

location = {Yokohama, Japan},

series = {CHI EA '21}

}