Barrett Ens

Visiting Researcher

Dr. Barrett Ens is a Postdoctoral Research Fellow with experience designing interfaces for head-worn displays, aimed at pushing wearable capabilities beyond current micro-interactions. By combining new interactive devices and techniques with Augmented Reality, his previous work explored interfaces that support multi-view, analytic tasks for in-situ mobile workers and other everyday users. Along with additional experience in collaborative and social computing, 3D immersive interfaces, and visual analytics, Barrett brings his skills to the Empathic Computing Lab in the hope of creating rich collaborative experiences that support intuitive interaction with high-level cognitive tasks.

Barrett completed his PhD in Computer Science at the University of Manitoba under the supervision of Pourang Irani. Previously, he received a BSc in Computer Science with first class honours from the University of Manitoba and a BMus from the University of Calgary, where he studied classical guitar and specialized in music theory. In 2015 and 2016, Barrett completed a pair of research internships at Autodesk Research in Toronto under the supervision of Tovi Grossman and Fraser Anderson. In 2012, he joined Michael Haller at the Media Interaction Lab in Hagenberg, Austria, through a summer exchange program. He has contributed to the program committees for the Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI) and the ACM Symposium on Spatial User Interaction (SUI).

Projects

Mini-Me

Mini-Me is an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user’s gaze direction and body gestures while it transforms in size and orientation to stay within the AR user’s field of view. We tested Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

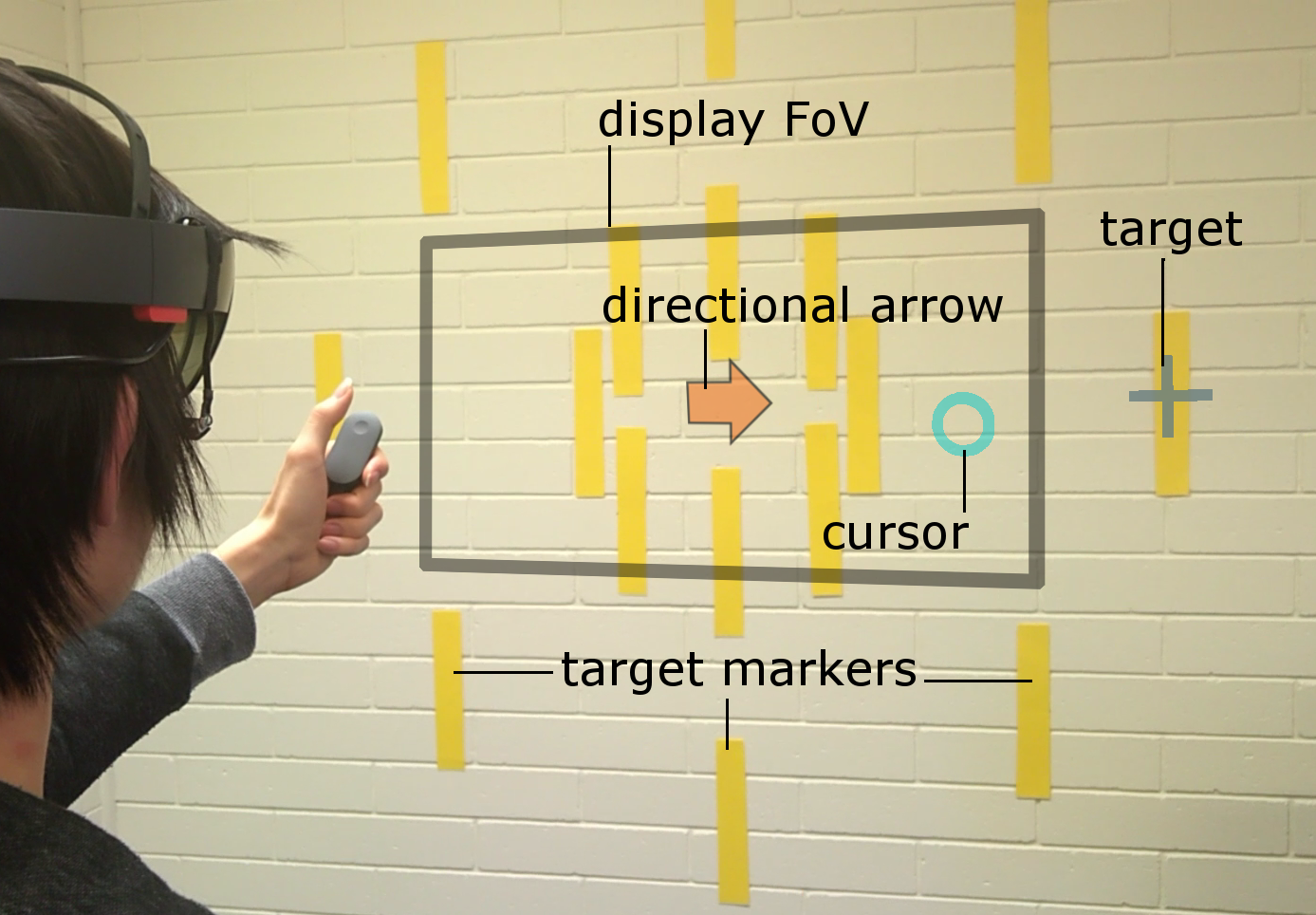

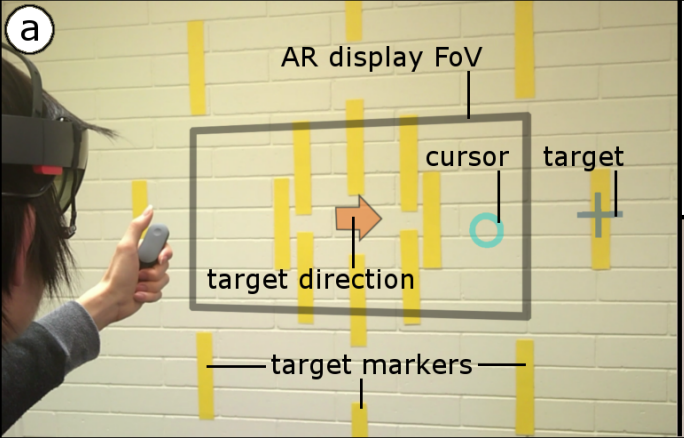

Pinpointing

Head and eye movement can be leveraged to improve the user’s interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

Publications

Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A. Lee, Jonathon D. Hart, Barrett Ens, Robert W. Lindeman, Bruce H. Thomas, and Mark Billinghurst. 2018. Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 46, 13 pages. DOI: https://doi.org/10.1145/3173574.3173620

@inproceedings{Piumsomboon:2018:MAA:3173574.3173620,

author = {Piumsomboon, Thammathip and Lee, Gun A. and Hart, Jonathon D. and Ens, Barrett and Lindeman, Robert W. and Thomas, Bruce H. and Billinghurst, Mark},

title = {Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI '18},

year = {2018},

isbn = {978-1-4503-5620-6},

location = {Montreal QC, Canada},

pages = {46:1--46:13},

articleno = {46},

numpages = {13},

url = {http://doi.acm.org/10.1145/3173574.3173620},

doi = {10.1145/3173574.3173620},

acmid = {3173620},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, avatar, awareness, gaze, gesture, mixed reality, redirected, remote collaboration, remote embodiment, virtual reality},

}

We present Mini-Me, an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user's gaze direction and body gestures while it transforms in size and orientation to stay within the AR user's field of view. A user study was conducted to evaluate Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality

Mikko Kytö, Barrett Ens, Thammathip Piumsomboon, Gun A. Lee, and Mark Billinghurst. 2018. Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 81, 14 pages. DOI: https://doi.org/10.1145/3173574.3173655

@inproceedings{Kyto:2018:PPH:3173574.3173655,

author = {Kyt\"{o}, Mikko and Ens, Barrett and Piumsomboon, Thammathip and Lee, Gun A. and Billinghurst, Mark},

title = {Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality},

booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI '18},

year = {2018},

isbn = {978-1-4503-5620-6},

location = {Montreal QC, Canada},

pages = {81:1--81:14},

articleno = {81},

numpages = {14},

url = {http://doi.acm.org/10.1145/3173574.3173655},

doi = {10.1145/3173574.3173655},

acmid = {3173655},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, eye tracking, gaze interaction, head-worn display, refinement techniques, target selection},

}

Video: https://youtu.be/nCX8zIEmv0s

Head and eye movement can be leveraged to improve the user's interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

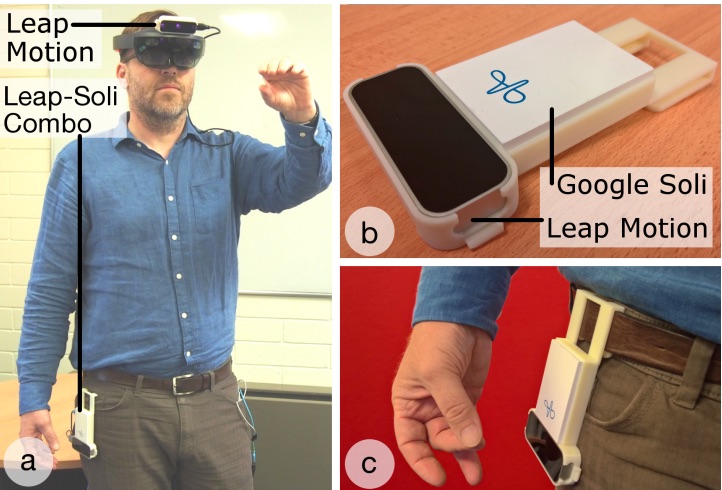

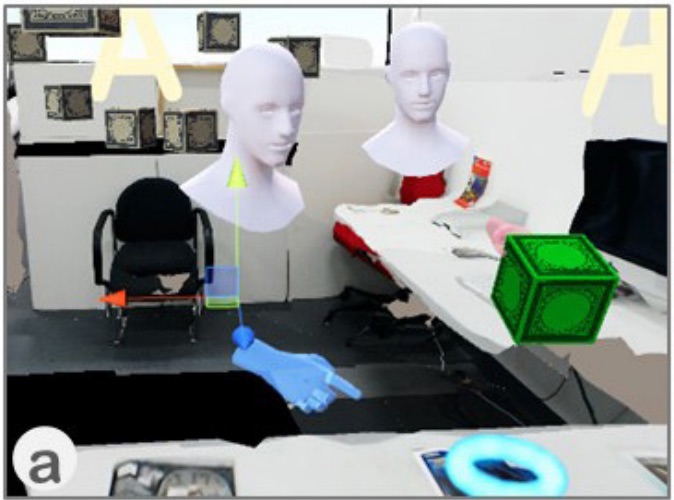

Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications

Barrett Ens, Aaron Quigley, Hui-Shyong Yeo, Pourang Irani, Thammathip Piumsomboon, and Mark Billinghurst. 2018. Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper LBW120, 6 pages. DOI: https://doi.org/10.1145/3170427.3188513

@inproceedings{Ens:2018:CEM:3170427.3188513,

author = {Ens, Barrett and Quigley, Aaron and Yeo, Hui-Shyong and Irani, Pourang and Piumsomboon, Thammathip and Billinghurst, Mark},

title = {Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications},

booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI EA '18},

year = {2018},

isbn = {978-1-4503-5621-3},

location = {Montreal QC, Canada},

pages = {LBW120:1--LBW120:6},

articleno = {LBW120},

numpages = {6},

url = {http://doi.acm.org/10.1145/3170427.3188513},

doi = {10.1145/3170427.3188513},

acmid = {3188513},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, gesture interaction, wearable computing},

}

Video: https://youtu.be/GZzC0Vhte4M

This paper presents ongoing work on a design exploration for mixed-scale gestures, which interleave microgestures with larger gestures for computer interaction. We describe three prototype applications that show various facets of this multi-dimensional design space. These applications portray various tasks on a HoloLens Augmented Reality display, using different combinations of wearable sensors. Future work toward expanding the design space and exploration is discussed, along with plans for evaluating mixed-scale gesture designs.

Levity: A Virtual Reality System that Responds to Cognitive Load

Lynda Gerry, Barrett Ens, Adam Drogemuller, Bruce Thomas, and Mark Billinghurst. 2018. Levity: A Virtual Reality System that Responds to Cognitive Load. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper LBW610, 6 pages. DOI: https://doi.org/10.1145/3170427.3188479

@inproceedings{Gerry:2018:LVR:3170427.3188479,

author = {Gerry, Lynda and Ens, Barrett and Drogemuller, Adam and Thomas, Bruce and Billinghurst, Mark},

title = {Levity: A Virtual Reality System That Responds to Cognitive Load},

booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI EA '18},

year = {2018},

isbn = {978-1-4503-5621-3},

location = {Montreal QC, Canada},

pages = {LBW610:1--LBW610:6},

articleno = {LBW610},

numpages = {6},

url = {http://doi.acm.org/10.1145/3170427.3188479},

doi = {10.1145/3170427.3188479},

acmid = {3188479},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {brain computer interface, cognitive load, virtual reality, visual search task},

}

Video: https://youtu.be/r2csCoMvLeM

This paper presents the ongoing development of a proof-of-concept, adaptive system that uses a neurocognitive signal to facilitate efficient performance in a Virtual Reality visual search task. During the search task, the Levity system measures the user's level of cognitive load with a 16-channel EEG device and interactively adjusts the display of the visual array in response. Future developments will validate the system and evaluate its ability to improve search efficiency by detecting and adapting to a user's cognitive demands.

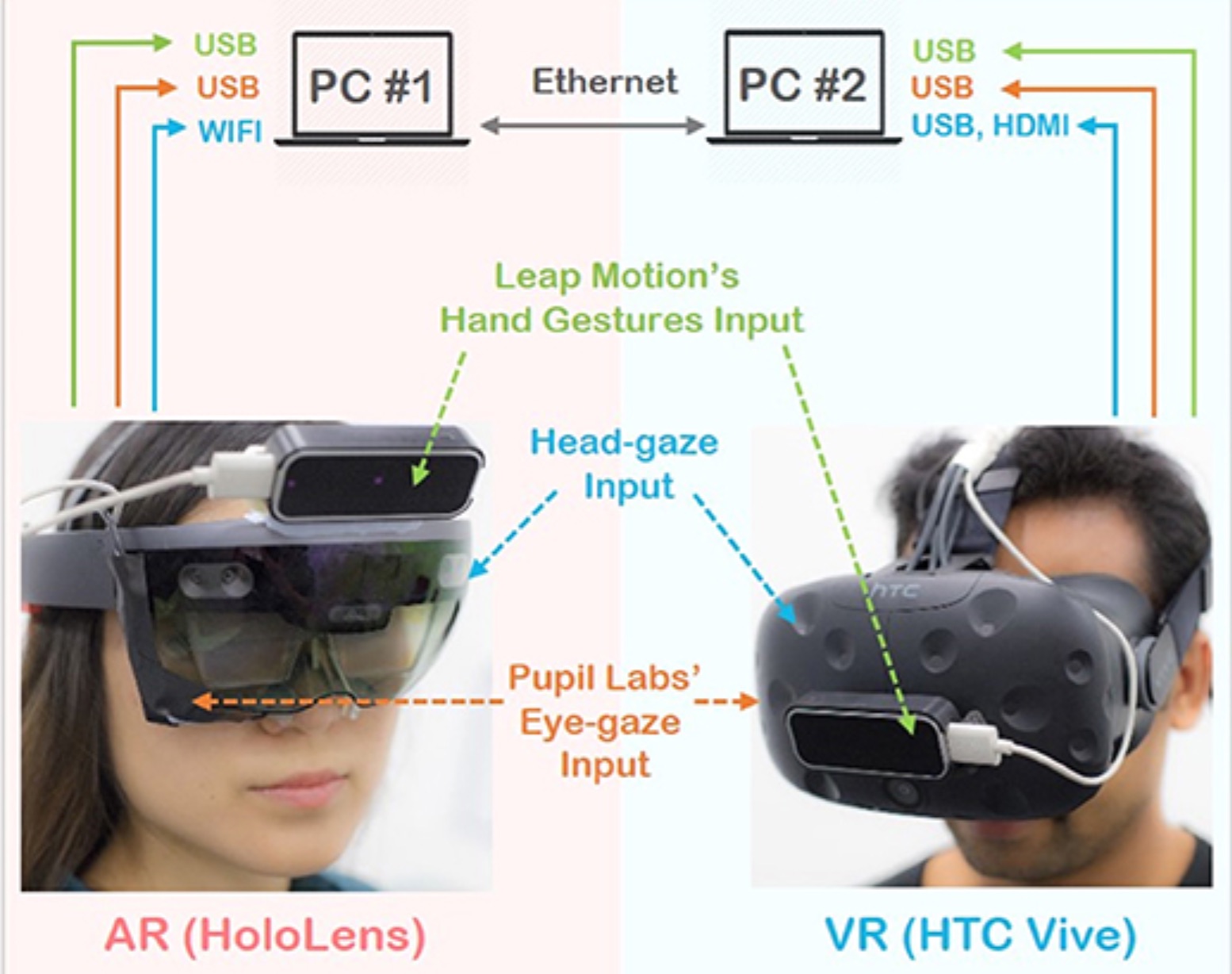

The effects of sharing awareness cues in collaborative mixed reality

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M. (2019). The effects of sharing awareness cues in collaborative mixed reality. Frontiers in Robotics and AI, 6(5).

@article{piumsomboon2019effects,

title={The effects of sharing awareness cues in collaborative mixed reality},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Gun and Billinghurst, Mark},

year={2019}

}

Augmented and Virtual Reality provide unique capabilities for Mixed Reality collaboration. This paper explores how different combinations of virtual awareness cues can provide users with valuable information about their collaborator's attention and actions. In a user study (n = 32, 16 pairs), we compared different combinations of three cues: Field-of-View (FoV) frustum, Eye-gaze ray, and Head-gaze ray against a baseline condition showing only virtual representations of each collaborator's head and hands. Through a collaborative object finding and placing task, the results showed that awareness cues significantly improved user performance, usability, and subjective preferences, with the combination of the FoV frustum and the Head-gaze ray being best. This work establishes the feasibility of room-scale MR collaboration and the utility of providing virtual awareness cues.

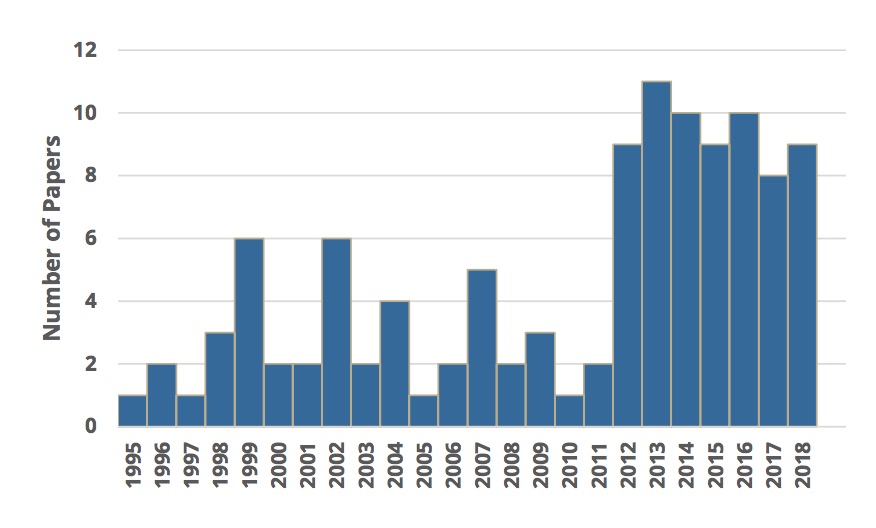

Revisiting collaboration through mixed reality: The evolution of groupware

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., & Billinghurst, M. (2019). Revisiting collaboration through mixed reality: The evolution of groupware. International Journal of Human-Computer Studies.

@article{ens2019revisiting,

title={Revisiting collaboration through mixed reality: The evolution of groupware},

author={Ens, Barrett and Lanir, Joel and Tang, Anthony and Bateman, Scott and Lee, Gun and Piumsomboon, Thammathip and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

year={2019},

publisher={Elsevier}

}

Collaborative Mixed Reality (MR) systems are at a critical point in time, as they are soon to become more commonplace. However, MR technology has only recently matured to the point where researchers can focus deeply on the nuances of supporting collaboration, rather than needing to focus on creating the enabling technology. In parallel, but largely independently, the field of Computer Supported Cooperative Work (CSCW) has focused on the fundamental concerns that underlie human communication and collaboration over the past 30-plus years. Since MR research is now on the brink of moving into the real world, we reflect on three decades of collaborative MR research and try to reconcile it with existing theory from CSCW, to help position MR researchers to pursue fruitful directions for their work. To do this, we review the history of collaborative MR systems, investigating how the common taxonomies and frameworks in CSCW and MR research can be applied to existing work on collaborative MR systems, exploring where they have fallen behind, and looking for new ways to describe current trends. Through identifying emergent trends, we suggest future directions for MR, and also find where CSCW researchers can explore new theory that more fully represents the future of working, playing and being with others.

On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction

Piumsomboon, T., Lee, G. A., Irlitti, A., Ens, B., Thomas, B. H., & Billinghurst, M. (2019, April). On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 228). ACM.

@inproceedings{piumsomboon2019shoulder,

title={On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction},

author={Piumsomboon, Thammathip and Lee, Gun A and Irlitti, Andrew and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={228},

year={2019},

organization={ACM}

}

We propose a multi-scale Mixed Reality (MR) collaboration between the Giant, a local Augmented Reality user, and the Miniature, a remote Virtual Reality user, in Giant-Miniature Collaboration (GMC). The Miniature is immersed in a 360-video shared by the Giant, who can physically manipulate the Miniature through a tangible interface: a 360-camera combined with a 6-DOF tracker. We implemented a prototype system as a proof of concept and conducted a user study (n=24) comprising four parts comparing: A) two types of virtual representations, B) three levels of Miniature control, C) three levels of 360-video view dependencies, and D) four 360-camera placement positions on the Giant. The results show users prefer a shoulder-mounted camera view, while a view frustum with a complementary avatar is a good visualization for the Miniature virtual representation. From the results, we give design recommendations and demonstrate an example Giant-Miniature Interaction.

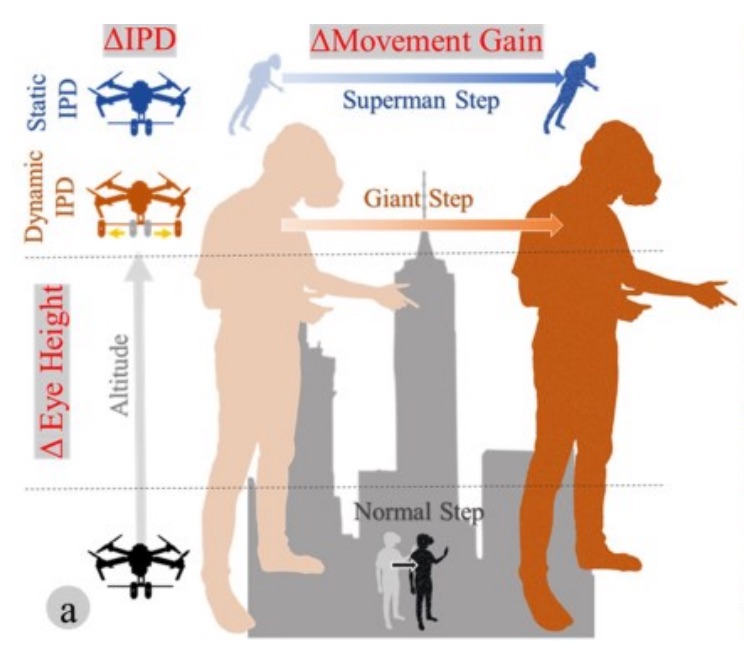

Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface

Piumsomboon, T., Lee, G. A., Ens, B., Thomas, B. H., & Billinghurst, M. (2018). Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface. IEEE Transactions on Visualization and Computer Graphics, 24(11), 2974-2982.

@article{piumsomboon2018superman,

title={Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface},

author={Piumsomboon, Thammathip and Lee, Gun A and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

journal={IEEE transactions on visualization and computer graphics},

volume={24},

number={11},

pages={2974--2982},

year={2018},

publisher={IEEE}

}

Advancements in Mixed Reality (MR), Unmanned Aerial Vehicles, and multi-scale collaborative virtual environments have led to new interface opportunities for remote collaboration. This paper explores a novel concept of flying telepresence for multi-scale mixed reality remote collaboration. This work could enable remote collaboration at larger scales, such as building construction. We conducted a user study with three experiments. The first experiment compared two interfaces, static and dynamic inter-pupillary distance (IPD), in terms of simulator sickness and body size perception. The second experiment tested the user perception of a virtual object size under three levels of IPD and movement gain manipulation with a fixed eye height in a virtual environment having reduced or rich visual cues. Our last experiment investigated the participant's body size perception for two levels of manipulation of the IPDs and heights using stereo video footage to simulate a flying telepresence experience. The studies found that manipulating IPDs and eye height influenced the user's size perception. We present our findings and share recommendations for designing a multi-scale MR flying telepresence interface.

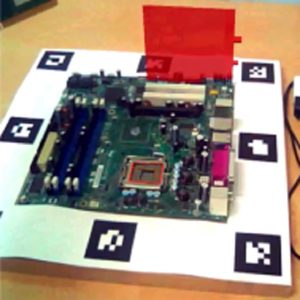

Design considerations for combining augmented reality with intelligent tutors

Herbert, B., Ens, B., Weerasinghe, A., Billinghurst, M., & Wigley, G. (2018). Design considerations for combining augmented reality with intelligent tutors. Computers & Graphics, 77, 166-182.

@article{herbert2018design,

title={Design considerations for combining augmented reality with intelligent tutors},

author={Herbert, Bradley and Ens, Barrett and Weerasinghe, Amali and Billinghurst, Mark and Wigley, Grant},

journal={Computers \& Graphics},

volume={77},

pages={166--182},

year={2018},

publisher={Elsevier}

}

Augmented Reality overlays virtual objects on the real world in real time and has the potential to enhance education; however, few AR training systems provide personalised learning support. Combining AR with intelligent tutoring systems (ITSs) has the potential to improve training outcomes by providing personalised learner support, such as feedback on the AR environment. This paper reviews the current state of AR training systems combined with ITSs and proposes a series of requirements for combining the two paradigms. In addition, this paper identifies a growing need for more research on the design and implementation of adaptive augmented reality tutors (ARATs). This includes ways of evaluating the user interfaces of ARATs and potential domains where an ARAT might be effective.

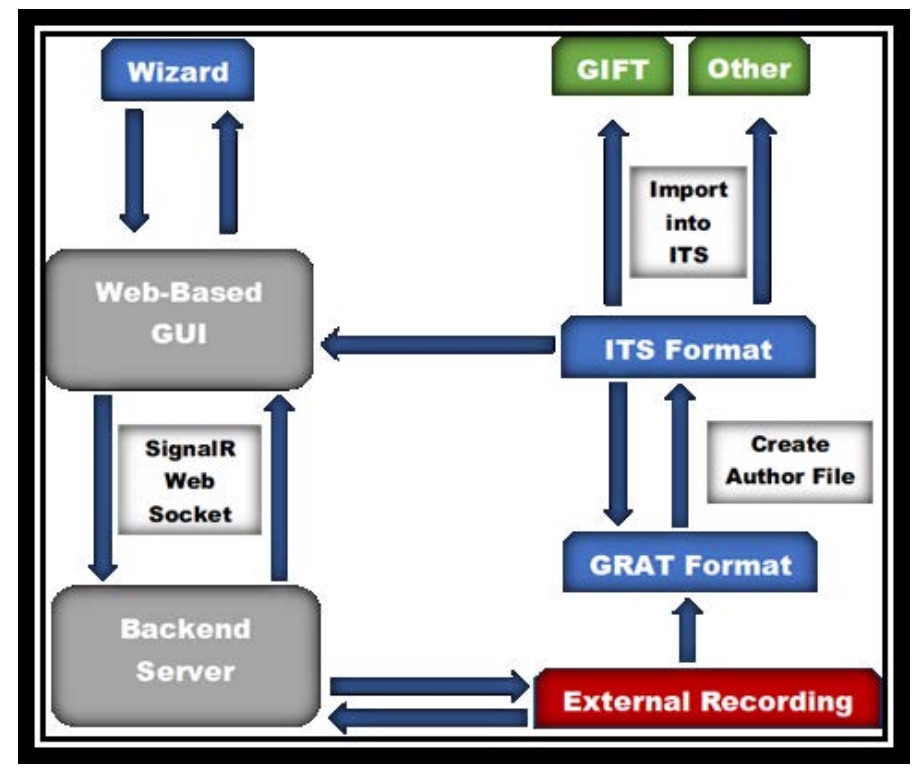

A generalized, rapid authoring tool for intelligent tutoring systems

Herbert, B., Billinghurst, M., Weerasinghe, A., Ens, B., & Wigley, G. (2018, December). A generalized, rapid authoring tool for intelligent tutoring systems. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (pp. 368-373). ACM.

@inproceedings{herbert2018generalized,

title={A generalized, rapid authoring tool for intelligent tutoring systems},

author={Herbert, Bradley and Billinghurst, Mark and Weerasinghe, Amali and Ens, Barrett and Wigley, Grant},

booktitle={Proceedings of the 30th Australian Conference on Computer-Human Interaction},

pages={368--373},

year={2018},

organization={ACM}

}

As computer-based training systems become increasingly integrated into real-world training, tools that rapidly author courses for such systems are emerging. However, inconsistent user interface design and limited support for a variety of domains make them time-consuming and difficult to use. We present a Generalized, Rapid Authoring Tool (GRAT), which simplifies the creation of Intelligent Tutoring Systems (ITSs) using a unified web-based, wizard-style graphical user interface and programming-by-demonstration approaches to reduce the technical knowledge needed to author ITS logic. To demonstrate the tool's potential, we implemented a prototype that authors courses for two kinds of tasks: a network cabling task and a console device configuration task. We describe the limitations of our prototype and present opportunities for evaluating the tool's usability and perceived effectiveness.

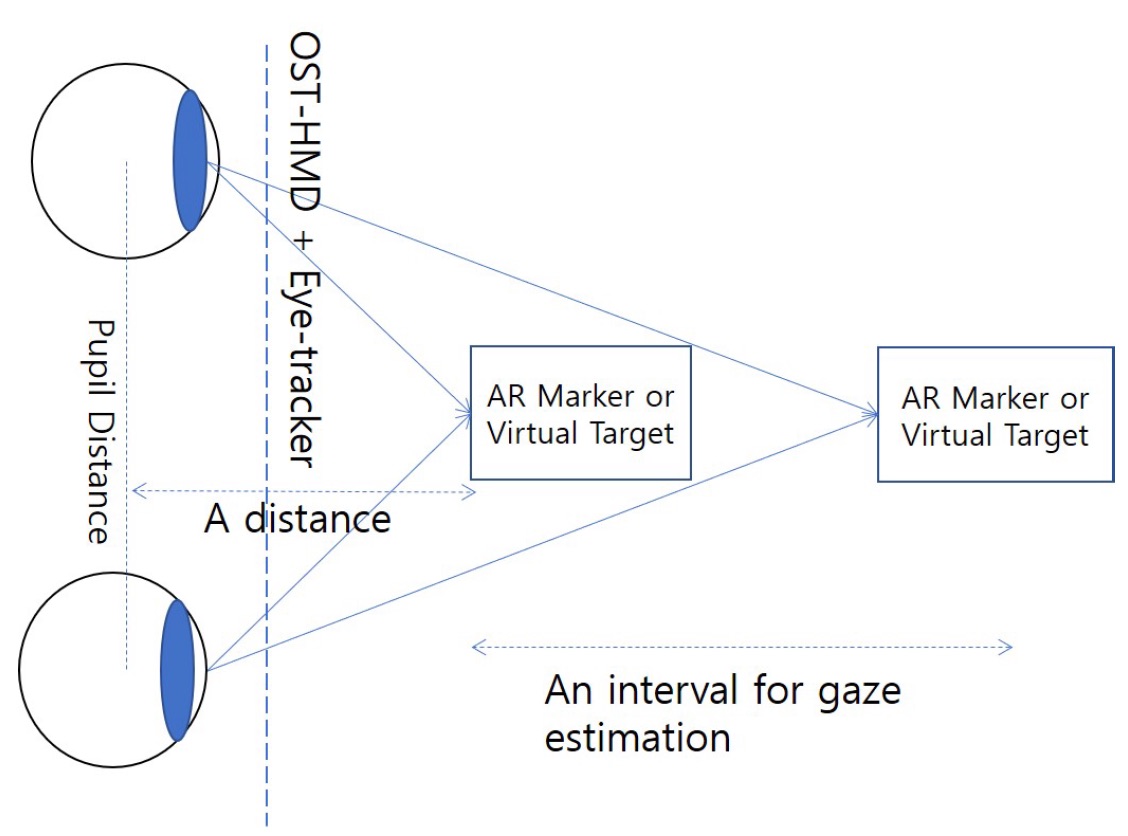

A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M. (2017, November). A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos (pp. 1-2). Eurographics Association.

@inproceedings{lee2017gaze,

title={A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs},

author={Lee, Youngho and Piumsomboon, Thammathip and Ens, Barrett and Lee, Gun and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos},

pages={1--2},

year={2017},

organization={Eurographics Association}

}

The rapid development of machine learning algorithms can be leveraged for software solutions in many domains, including techniques for depth estimation of human eye gaze. In this paper, we propose an implicit and continuous data acquisition method for 3D gaze depth estimation for an optical see-through head-mounted display (OST-HMD) equipped with an eye tracker. Our method constantly monitors and generates user gaze data for training our machine learning algorithm. The gaze data acquired through the eye tracker include the inter-pupillary distance (IPD) and the gaze distance to the real and virtual target for each eye.

Exploring Mixed-Scale Gesture Interaction

Ens, B., Quigley, A. J., Yeo, H. S., Irani, P., Piumsomboon, T., & Billinghurst, M. (2017). Exploring mixed-scale gesture interaction. SA'17 SIGGRAPH Asia 2017 Posters.

@article{ens2017exploring,

title={Exploring mixed-scale gesture interaction},

author={Ens, Barrett and Quigley, Aaron John and Yeo, Hui Shyong and Irani, Pourang and Piumsomboon, Thammathip and Billinghurst, Mark},

journal={SA'17 SIGGRAPH Asia 2017 Posters},

year={2017},

publisher={ACM}

}

This paper presents ongoing work toward a design exploration for combining microgestures with other types of gestures within the greater lexicon of gestures for computer interaction. We describe three prototype applications that show various facets of this multi-dimensional design space. These applications portray various tasks on a HoloLens Augmented Reality display, using different combinations of wearable sensors.

Multi-Scale Gestural Interaction for Augmented Reality

Ens, B., Quigley, A., Yeo, H. S., Irani, P., & Billinghurst, M. (2017, November). Multi-scale gestural interaction for augmented reality. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 11). ACM.

@inproceedings{ens2017multi,

title={Multi-scale gestural interaction for augmented reality},

author={Ens, Barrett and Quigley, Aaron and Yeo, Hui-Shyong and Irani, Pourang and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={11},

year={2017},

organization={ACM}

}

We present a multi-scale gestural interface for augmented reality applications. With virtual objects, gestural interactions such as pointing and grasping can be convenient and intuitive; however, they are imprecise, socially awkward, and susceptible to fatigue. Our prototype application uses multiple sensors to detect gestures from both arm and hand motions (macro-scale) and finger gestures (micro-scale). Micro-gestures can provide precise input through a belt-worn sensor configuration, with the hand in a relaxed posture. We present an application that combines direct manipulation with microgestures for precise interaction, beyond the capabilities of direct manipulation alone.

Exploring enhancements for remote mixed reality collaboration

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M. (2017, November). Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 16). ACM.

@inproceedings{piumsomboon2017exploring,

title={Exploring enhancements for remote mixed reality collaboration},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Youngho and Lee, Gun and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={16},

year={2017},

organization={ACM}

}

In this paper, we explore techniques for enhancing remote Mixed Reality (MR) collaboration in terms of communication and interaction. We created CoVAR, an MR system for remote collaboration between Augmented Reality (AR) and Augmented Virtuality (AV) users. Awareness cues and an AV-Snap-to-AR interface were proposed for enhancing communication, while collaborative natural interaction and AV-User-Body-Scaling were implemented for enhancing interaction. We conducted an exploratory study examining the awareness cues and the collaborative gaze, and the results showed the benefits of the proposed techniques for enhancing communication and interaction.