Arindam Dey

Research Fellow

Dr. Arindam Dey is a Research Fellow at the Empathic Computing Laboratory. He completed his PhD at the University of South Australia in 2013 (supervised by Prof. Christian Sandor and Prof. Bruce Thomas). After completing his PhD and before rejoining UniSA in 2016, Arindam held three postdoctoral positions, at James Cook University (Australia), Worcester Polytechnic Institute (USA), and the University of Tasmania (Australia). He also visited the Technical University of Munich (Germany) for a research internship and the Indian Institute of Technology, Kharagpur (India) for a summer internship during his B.Tech.

His primary research areas are Mixed Reality and Human-Computer Interaction. He has more than 20 publications (Google Scholar). He is a peer reviewer for many journals and conferences and has served on the organizing committees of the following conferences:

- OzCHI (2016): Research Demonstration Chair

- IEEE Symposium on 3D User Interfaces (2016): Poster Chair

- Australasian Conference on Artificial Life and Computational Intelligence (2015): International Program Committee Member

- Asia Pacific Conference on Computer Human Interaction (2013): E-Publications Chair and Program Committee Member

- IEEE International Symposium on Mixed and Augmented Reality (2013): Online Communications Chair

- IEEE International Symposium on Mixed and Augmented Reality (2012): Student Volunteer Co-Chair

For more details about Arindam please visit his personal website.

Projects

-

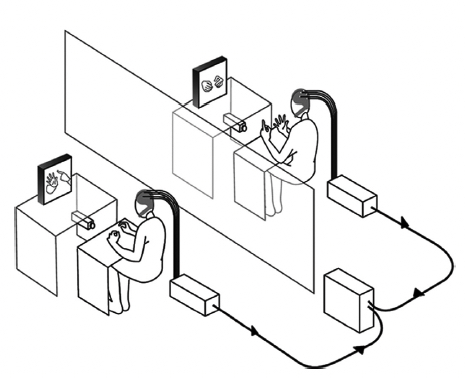

Empathy Glasses

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.

-

Empathy in Virtual Reality

Virtual reality (VR) interfaces are an influential medium for triggering emotional changes in humans. However, there is little research on making users of VR interfaces aware of their own emotional state and, in collaborative interfaces, of one another's. In this project, through a series of system developments and user evaluations, we are investigating how physiological data such as heart rate, galvanic skin response, pupil dilation, and EEG can be used as a medium to communicate emotional states either to oneself (single-user interfaces) or to a collaborator (collaborative interfaces). The overarching goal is to make VR environments more empathetic and collaborators more aware of each other's emotional state.

-

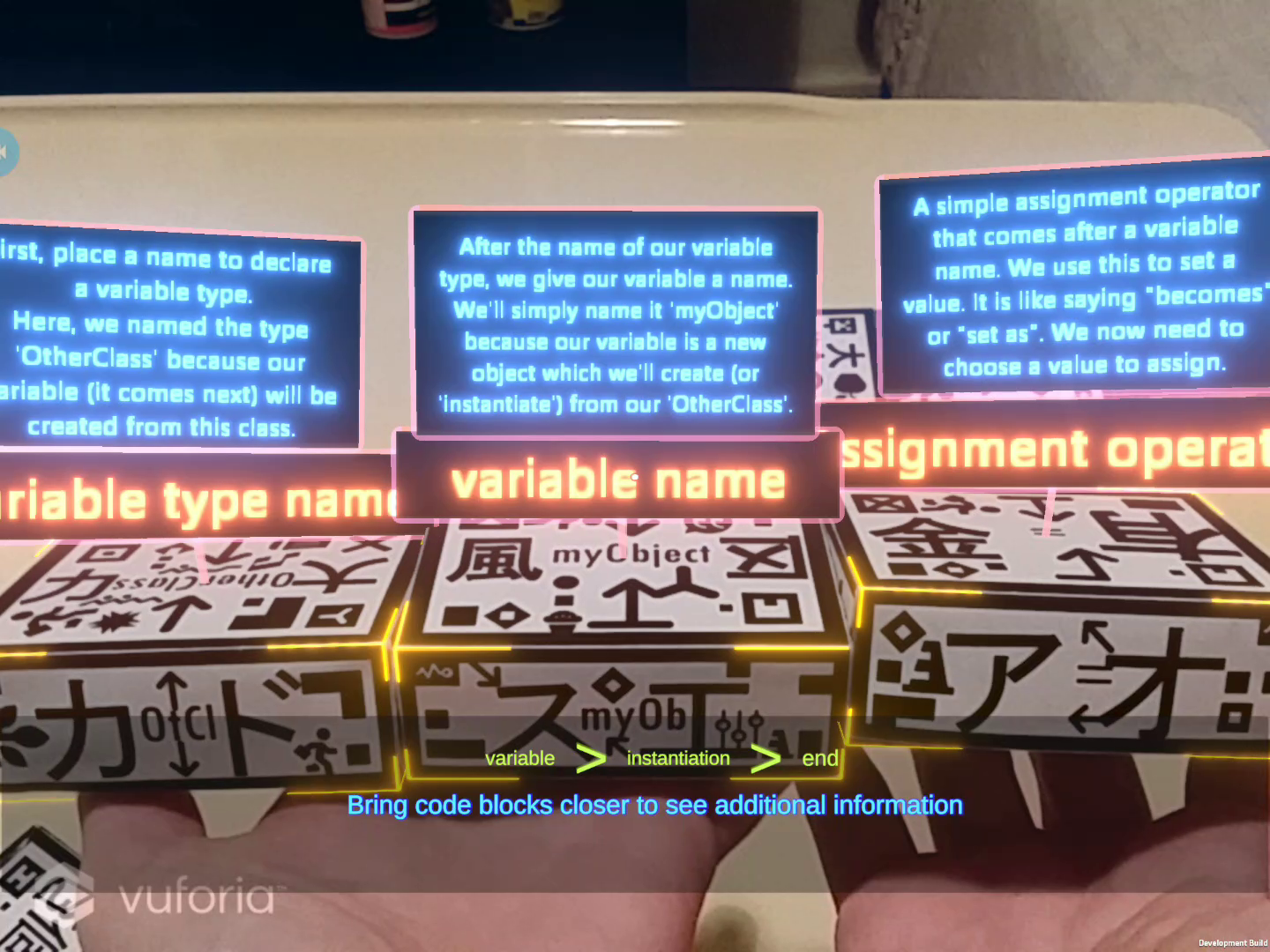

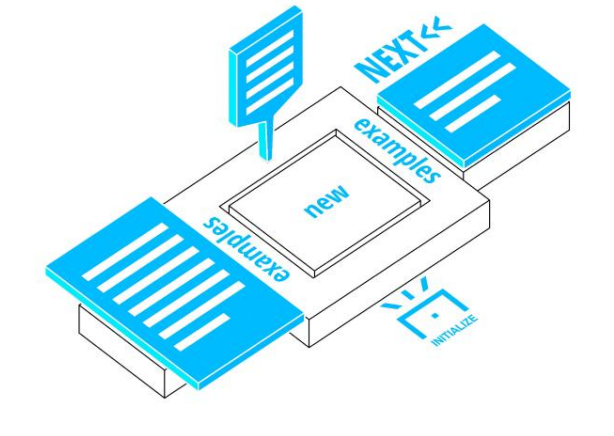

Tangible Augmented Reality for Programming Learning

This project explores how tangible Augmented Reality (AR) can be used to teach computer programming. We have developed TARPLE, a Tangible Augmented Reality Programming Learning Environment, and are studying its efficacy for teaching text-based programming languages to novice learners. TARPLE uses physical blocks to represent programming functions and overlays virtual imagery on the blocks to show the programming code. Users can arrange the blocks by moving them with their hands, and see the AR content either through the Microsoft HoloLens 2 AR display or a handheld tablet. This research project expands upon the broader question of educational AR as well as on the questions of tangible programming languages and tangible learning mediums. When supported by the embodied learning and natural interaction affordances of AR, physical objects may hold the key to developing fundamental knowledge of abstract, complex subjects, for younger learners in particular. They may also serve as a powerful future tool in advancing early computational thinking skills in novices. Evaluation of such learning environments addresses the hypothesis that hybrid tangible AR mediums are able to support an extended learning taxonomy both within the classroom and without.

Publications

-

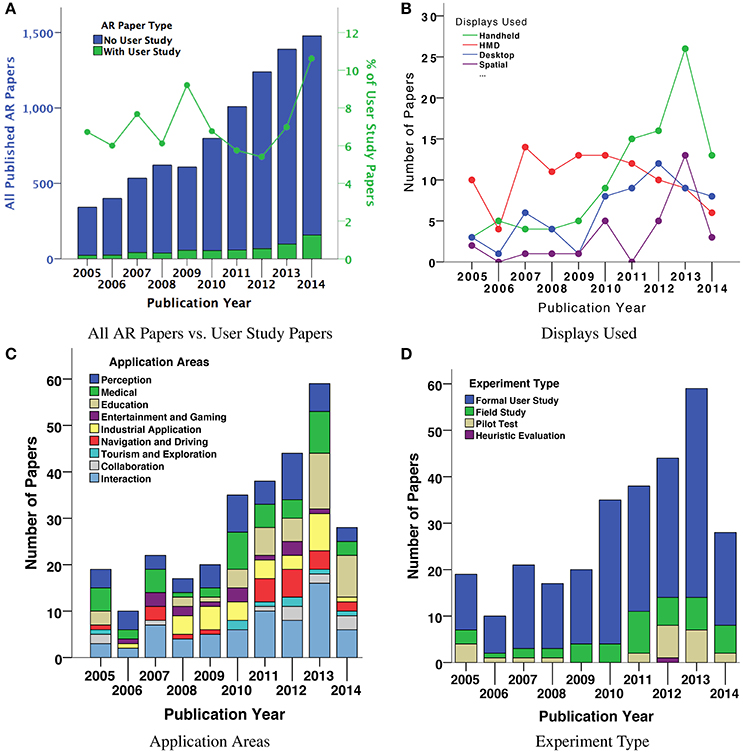

A Systematic Review of 10 Years of Augmented Reality Usability Studies: 2005 to 2014

Arindam Dey, Mark Billinghurst, Robert W Lindeman, J Swan

Dey A, Billinghurst M, Lindeman RW and Swan JE II (2018) A Systematic Review of 10 Years of Augmented Reality Usability Studies: 2005 to 2014. Front. Robot. AI 5:37. doi: 10.3389/frobt.2018.00037

@ARTICLE{10.3389/frobt.2018.00037,

AUTHOR={Dey, Arindam and Billinghurst, Mark and Lindeman, Robert W. and Swan, J. Edward},

TITLE={A Systematic Review of 10 Years of Augmented Reality Usability Studies: 2005 to 2014},

JOURNAL={Frontiers in Robotics and AI},

VOLUME={5},

PAGES={37},

YEAR={2018},

URL={https://www.frontiersin.org/article/10.3389/frobt.2018.00037},

DOI={10.3389/frobt.2018.00037},

ISSN={2296-9144},

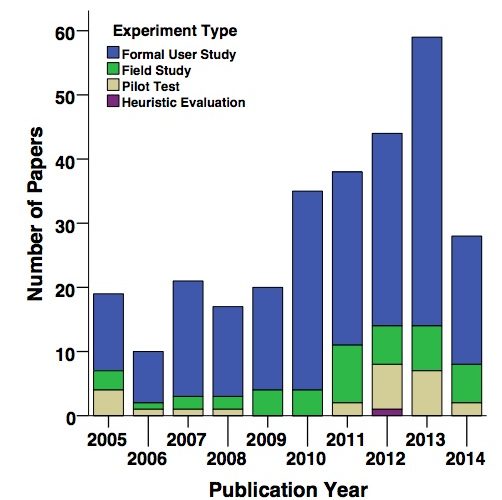

}

Augmented Reality (AR) interfaces have been studied extensively over the last few decades, with a growing number of user-based experiments. In this paper, we systematically review 10 years of the most influential AR user studies, from 2005 to 2014. A total of 291 papers with 369 individual user studies have been reviewed and classified based on their application areas. The primary contribution of the review is to present the broad landscape of user-based AR research, and to provide a high-level view of how that landscape has changed. We summarize the high-level contributions from each category of papers, and present examples of the most influential user studies. We also identify areas where there have been few user studies, and opportunities for future research. Among other things, we find that there is a growing trend toward handheld AR user studies, and that most studies are conducted in laboratory settings and do not involve pilot testing. This research will be useful for AR researchers who want to follow best practices in designing their own AR user studies.

-

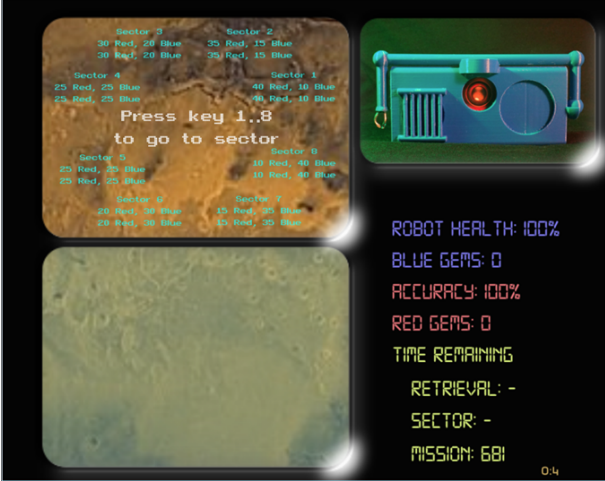

He who hesitates is lost (... in thoughts over a robot)

James Wen, Amanda Stewart, Mark Billinghurst, Arindam Dey, Chad Tossell, Victor Finomore

James Wen, Amanda Stewart, Mark Billinghurst, Arindam Dey, Chad Tossell, and Victor Finomore. 2018. He who hesitates is lost (...in thoughts over a robot). In Proceedings of the Technology, Mind, and Society (TechMindSociety '18). ACM, New York, NY, USA, Article 43, 6 pages. DOI: https://doi.org/10.1145/3183654.3183703

@inproceedings{Wen:2018:HHL:3183654.3183703,

author = {Wen, James and Stewart, Amanda and Billinghurst, Mark and Dey, Arindam and Tossell, Chad and Finomore, Victor},

title = {He Who Hesitates is Lost (...In Thoughts over a Robot)},

booktitle = {Proceedings of the Technology, Mind, and Society},

series = {TechMindSociety '18},

year = {2018},

isbn = {978-1-4503-5420-2},

location = {Washington, DC, USA},

pages = {43:1--43:6},

articleno = {43},

numpages = {6},

url = {http://doi.acm.org/10.1145/3183654.3183703},

doi = {10.1145/3183654.3183703},

acmid = {3183703},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Anthropomorphism, Empathy, Human Machine Team, Robotics, User Study},

}

In a team, the strong bonds that can form between teammates are often seen as critical for reaching peak performance. This perspective may need to be reconsidered, however, if some team members are autonomous robots since establishing bonds with fundamentally inanimate and expendable objects may prove counterproductive. Previous work has measured empathic responses towards robots as singular events at the conclusion of experimental sessions. As relationships extend over long periods of time, sustained empathic behavior towards robots would be of interest. In order to measure user actions that may vary over time and are affected by empathy towards a robot teammate, we created the TEAMMATE simulation system. Our findings suggest that inducing empathy through a back story narrative can significantly change participant decisions in actions that may have consequences for a robot companion over time. The results of our study can have strong implications for the overall performance of human machine teams.

-

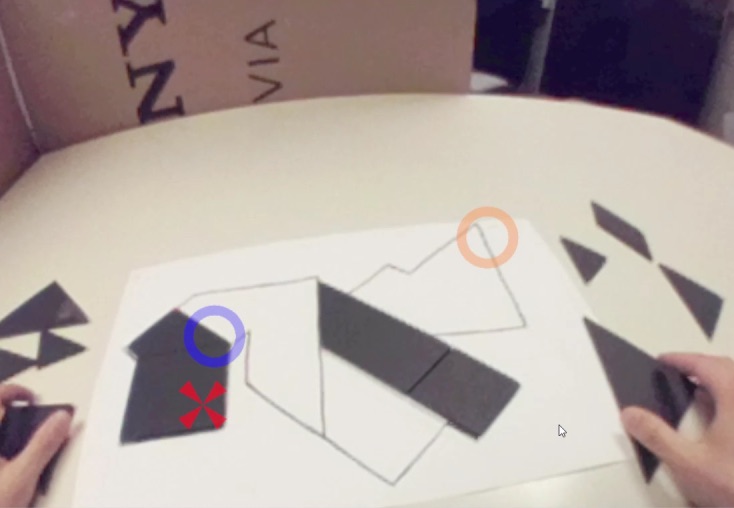

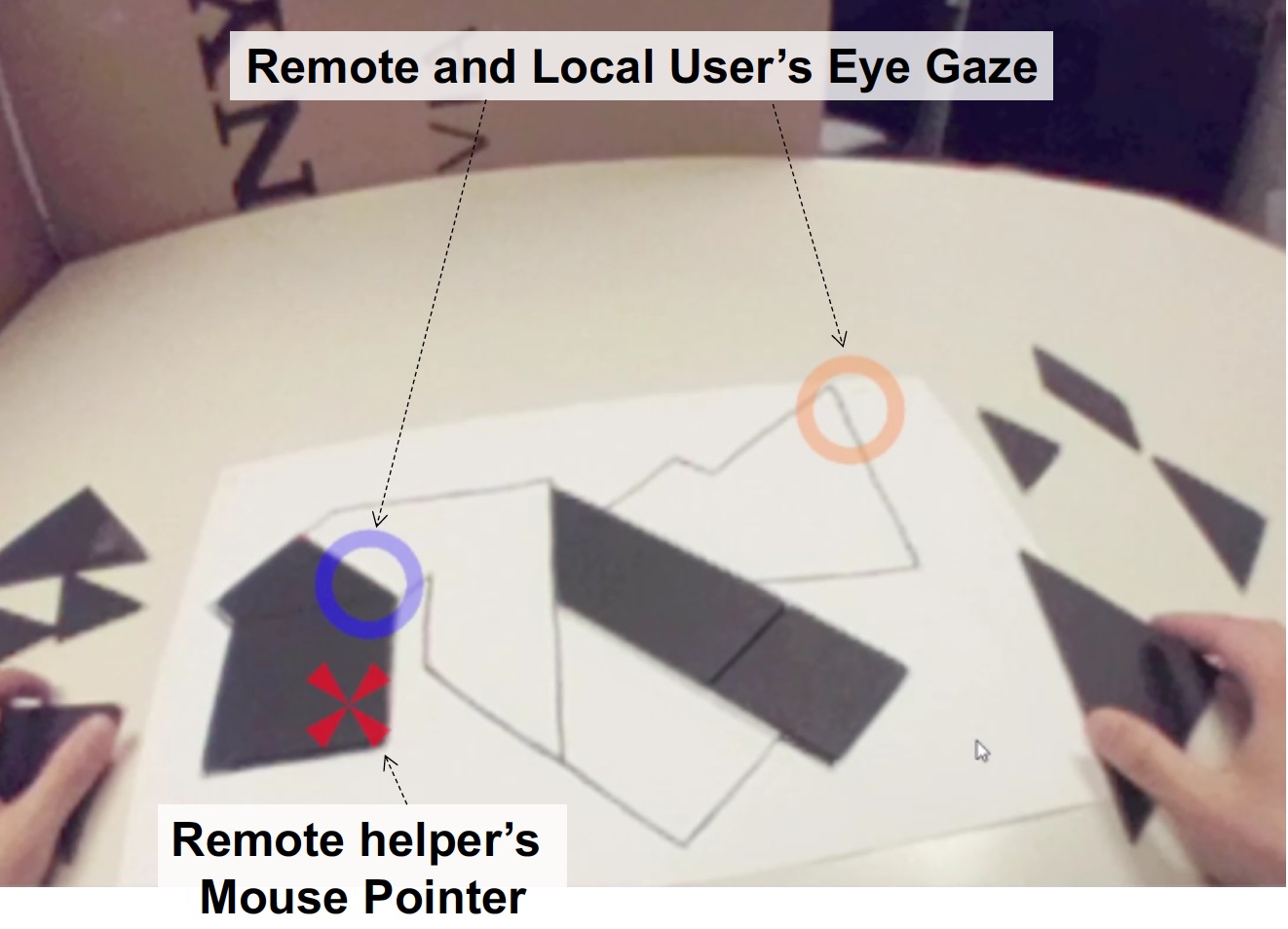

Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst. 2017. Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 197-204. http://dx.doi.org/10.2312/egve.20171359

@inproceedings {egve.20171359,

booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},

title = {{Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze}},

author = {Lee, Gun A. and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

year = {2017},

publisher = {The Eurographics Association},

ISSN = {1727-530X},

ISBN = {978-3-03868-038-3},

DOI = {10.2312/egve.20171359}

}

To improve remote collaboration in video conferencing systems, researchers have been investigating augmenting visual cues onto a shared live video stream. In such systems, a person wearing a head-mounted display (HMD) and camera can share her view of the surrounding real-world with a remote collaborator to receive assistance on a real-world task. While this concept of augmented video conferencing (AVC) has been actively investigated, there has been little research on how sharing gaze cues might affect the collaboration in video conferencing. This paper investigates how sharing gaze in both directions between a local worker and remote helper in an AVC system affects the collaboration and communication. Using a prototype AVC system that shares the eye gaze of both users, we conducted a user study that compares four conditions with different combinations of eye gaze sharing between the two users. The results showed that sharing each other’s gaze significantly improved collaboration and communication.

-

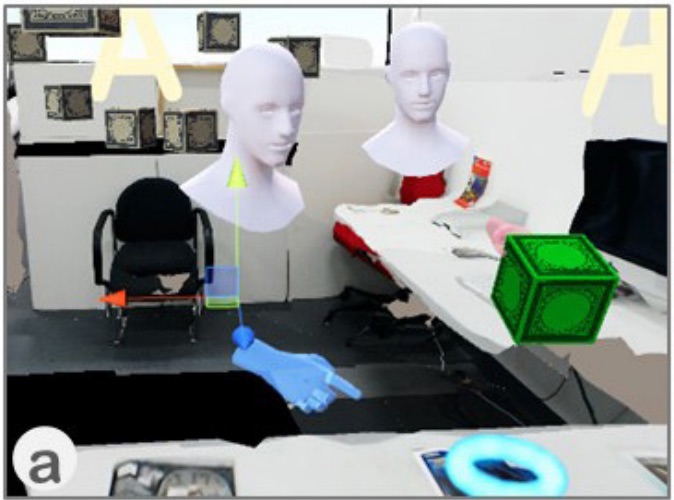

The effects of sharing awareness cues in collaborative mixed reality

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M.

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M. (2019). The effects of sharing awareness cues in collaborative mixed reality. Front. Rob, 6(5).

@article{piumsomboon2019effects,

title={The effects of sharing awareness cues in collaborative mixed reality},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Gun and Billinghurst, Mark},

year={2019}

}

Augmented and Virtual Reality provide unique capabilities for Mixed Reality collaboration. This paper explores how different combinations of virtual awareness cues can provide users with valuable information about their collaborator's attention and actions. In a user study (n = 32, 16 pairs), we compared different combinations of three cues: Field-of-View (FoV) frustum, Eye-gaze ray, and Head-gaze ray against a baseline condition showing only virtual representations of each collaborator's head and hands. Through a collaborative object finding and placing task, the results showed that awareness cues significantly improved user performance, usability, and subjective preferences, with the combination of the FoV frustum and the Head-gaze ray being best. This work establishes the feasibility of room-scale MR collaboration and the utility of providing virtual awareness cues.

-

Using Augmented Reality with Speech Input for Non-Native Children's Language Learning

Dalim, C. S. C., Sunar, M. S., Dey, A., & Billinghurst, M.

Dalim, C. S. C., Sunar, M. S., Dey, A., & Billinghurst, M. (2019). Using Augmented Reality with Speech Input for Non-Native Children's Language Learning. International Journal of Human-Computer Studies.

@article{dalim2019using,

title={Using Augmented Reality with Speech Input for Non-Native Children's Language Learning},

author={Dalim, Che Samihah Che and Sunar, Mohd Shahrizal and Dey, Arindam and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

year={2019},

publisher={Elsevier}

}

Augmented Reality (AR) offers an enhanced learning environment which could potentially influence children's experience and knowledge gain during the language learning process. Teaching English or other foreign languages to children with different native language can be difficult and requires an effective strategy to avoid boredom and detachment from the learning activities. With the growing numbers of AR education applications and the increasing pervasiveness of speech recognition, we are keen to understand how these technologies benefit non-native young children in learning English. In this paper, we explore children's experience in terms of knowledge gain and enjoyment when learning through a combination of AR and speech recognition technologies. We developed a prototype AR interface called TeachAR, and ran two experiments to investigate how effective the combination of AR and speech recognition was towards the learning of 1) English terms for color and shapes, and 2) English words for spatial relationships. We found encouraging results by creating a novel teaching strategy using these two technologies, not only in terms of increase in knowledge gain and enjoyment when compared with traditional strategy but also enables young children to finish the certain task faster and easier.

-

Exploration of an EEG-Based Cognitively Adaptive Training System in Virtual Reality

Dey, A., Chatburn, A., & Billinghurst, M.

Dey, A., Chatburn, A., & Billinghurst, M. (2019, March). Exploration of an EEG-Based Cognitively Adaptive Training System in Virtual Reality. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 220-226). IEEE.

@inproceedings{dey2019exploration,

title={Exploration of an EEG-Based Cognitively Adaptive Training System in Virtual Reality},

author={Dey, Arindam and Chatburn, Alex and Billinghurst, Mark},

booktitle={2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={220--226},

year={2019},

organization={IEEE}

}

Virtual Reality (VR) is effective in various training scenarios across multiple domains, such as education, health and defense. However, most of those applications are not adaptive to the real-time cognitive or subjectively experienced load placed on the trainee. In this paper, we explore a cognitively adaptive training system based on real-time measurement of task related alpha activity in the brain. This measurement was made by a 32-channel mobile Electroencephalography (EEG) system, and was used to adapt the task difficulty to an ideal level which challenged our participants, and thus theoretically induces the best level of performance gains as a result of training. Our system required participants to select target objects in VR and the complexity of the task adapted to the alpha activity in the brain. A total of 14 participants undertook our training and completed 20 levels of increasing complexity. Our study identified significant differences in brain activity in response to increasing levels of task complexity, but response time did not alter as a function of task difficulty. Collectively, we interpret this to indicate the brain's ability to compensate for higher task load without affecting behaviourally measured visuomotor performance.

-

Effects of Manipulating Physiological Feedback in Immersive Virtual Environments

Dey, A., Chen, H., Billinghurst, M., & Lindeman, R. W.

Dey, A., Chen, H., Billinghurst, M., & Lindeman, R. W. (2018, October). Effects of Manipulating Physiological Feedback in Immersive Virtual Environments. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play (pp. 101-111). ACM.

@inproceedings{dey2018effects,

title={Effects of Manipulating Physiological Feedback in Immersive Virtual Environments},

author={Dey, Arindam and Chen, Hao and Billinghurst, Mark and Lindeman, Robert W},

booktitle={Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play},

pages={101--111},

year={2018},

organization={ACM}

}

Virtual environments have been proven to be effective in evoking emotions. Earlier research has found that physiological data is a valid measurement of the emotional state of the user. Being able to see one’s physiological feedback in a virtual environment has proven to make the application more enjoyable. In this paper, we have investigated the effects of manipulating heart rate feedback provided to the participants in a single user immersive virtual environment. Our results show that providing slightly faster or slower real-time heart rate feedback can alter participants’ emotions more than providing unmodified feedback. However, altering the feedback does not alter real physiological signals.

-

The effect of video placement in AR conferencing applications

Lawrence, L., Dey, A., & Billinghurst, M.

Lawrence, L., Dey, A., & Billinghurst, M. (2018, December). The effect of video placement in AR conferencing applications. In Proceedings of the 30th Australian Conference on Computer-Human Interaction (pp. 453-457). ACM.

@inproceedings{lawrence2018effect,

title={The effect of video placement in AR conferencing applications},

author={Lawrence, Louise and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 30th Australian Conference on Computer-Human Interaction},

pages={453--457},

year={2018},

organization={ACM}

}

We ran a pilot study to investigate the impact of video placement in augmented reality conferencing on communication, social presence and user preference. In addition, we explored the influence of different tasks, assembly and negotiation. We discovered a correlation between video placement and the type of the tasks, with some significant results in social presence indicators.

-

Effects of Sharing Real-Time Multi-Sensory Heart Rate Feedback in Different Immersive Collaborative Virtual Environments

Dey, A., Chen, H., Zhuang, C., Billinghurst, M., & Lindeman, R. W.

Dey, A., Chen, H., Zhuang, C., Billinghurst, M., & Lindeman, R. W. (2018, October). Effects of Sharing Real-Time Multi-Sensory Heart Rate Feedback in Different Immersive Collaborative Virtual Environments. In 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 165-173). IEEE.

@inproceedings{dey2018effects,

title={Effects of Sharing Real-Time Multi-Sensory Heart Rate Feedback in Different Immersive Collaborative Virtual Environments},

author={Dey, Arindam and Chen, Hao and Zhuang, Chang and Billinghurst, Mark and Lindeman, Robert W},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages={165--173},

year={2018},

organization={IEEE}

}

Collaboration is an important application area for virtual reality (VR). However, unlike in the real world, collaboration in VR misses important empathetic cues that can make collaborators aware of each other's emotional states. Providing physiological feedback, such as heart rate or respiration rate, to users in VR has been shown to create a positive impact in single user environments. In this paper, through a rigorous mixed-factorial user experiment, we evaluated how providing heart rate feedback to collaborators influences their collaboration in three different environments requiring different kinds of collaboration. We have found that when provided with real-time heart rate feedback participants felt the presence of the collaborator more and felt that they understood their collaborator's emotional state more. Heart rate feedback also made participants feel more dominant when performing the task. We discuss the implication of this research for collaborative VR environments, provide design guidelines, and directions for future research.

-

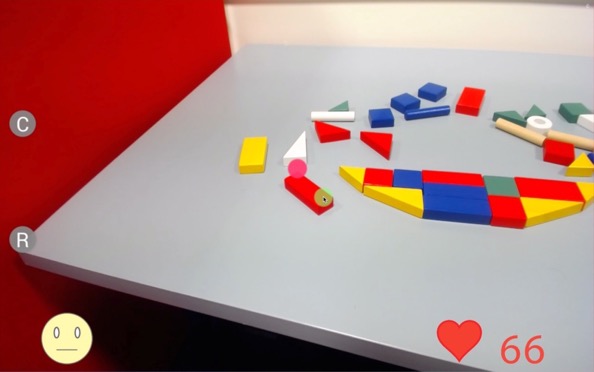

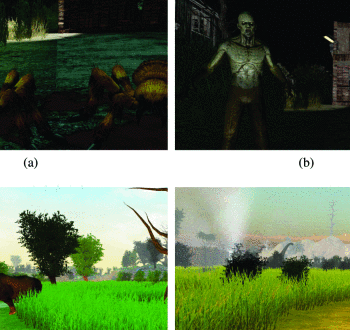

Effects of sharing physiological states of players in a collaborative virtual reality gameplay

Dey, A., Piumsomboon, T., Lee, Y., & Billinghurst, M.

Dey, A., Piumsomboon, T., Lee, Y., & Billinghurst, M. (2017, May). Effects of sharing physiological states of players in a collaborative virtual reality gameplay. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 4045-4056). ACM.

@inproceedings{dey2017effects,

title={Effects of sharing physiological states of players in a collaborative virtual reality gameplay},

author={Dey, Arindam and Piumsomboon, Thammathip and Lee, Youngho and Billinghurst, Mark},

booktitle={Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems},

pages={4045--4056},

year={2017},

organization={ACM}

}

Interfaces for collaborative tasks, such as multiplayer games can enable more effective and enjoyable collaboration. However, in these systems, the emotional states of the users are often not communicated properly due to their remoteness from one another. In this paper, we investigate the effects of showing emotional states of one collaborator to the other during an immersive Virtual Reality (VR) gameplay experience. We created two collaborative immersive VR games that display the real-time heart-rate of one player to the other. The two different games elicited different emotions, one joyous and the other scary. We tested the effects of visualizing heart-rate feedback in comparison with conditions where such a feedback was absent. The games had significant main effects on the overall emotional experience.

-

User evaluation of hand gestures for designing an intelligent in-vehicle interface

Jahani, H., Alyamani, H. J., Kavakli, M., Dey, A., & Billinghurst, M.

Jahani, H., Alyamani, H. J., Kavakli, M., Dey, A., & Billinghurst, M. (2017, May). User evaluation of hand gestures for designing an intelligent in-vehicle interface. In International Conference on Design Science Research in Information System and Technology (pp. 104-121). Springer, Cham.

@inproceedings{jahani2017user,

title={User evaluation of hand gestures for designing an intelligent in-vehicle interface},

author={Jahani, Hessam and Alyamani, Hasan J and Kavakli, Manolya and Dey, Arindam and Billinghurst, Mark},

booktitle={International Conference on Design Science Research in Information System and Technology},

pages={104--121},

year={2017},

organization={Springer}

}

Driving a car is a high cognitive-load task requiring full attention behind the wheel. Intelligent navigation, transportation, and in-vehicle interfaces have introduced a safer and less demanding driving experience. However, there is still a gap for the existing interaction systems to satisfy the requirements of actual user experience. Hand gesture as an interaction medium, is natural and less visually demanding while driving. This paper aims to conduct a user-study with 79 participants to validate mid-air gestures for 18 major in-vehicle secondary tasks. We have demonstrated a detailed analysis on 900 mid-air gestures investigating preferences of gestures for in-vehicle tasks, their physical affordance, and driving errors. The outcomes demonstrate that employment of mid-air gestures reduces driving errors by up to 50% compared to traditional air-conditioning control. Results can be used for the development of vision-based in-vehicle gestural interfaces.

-

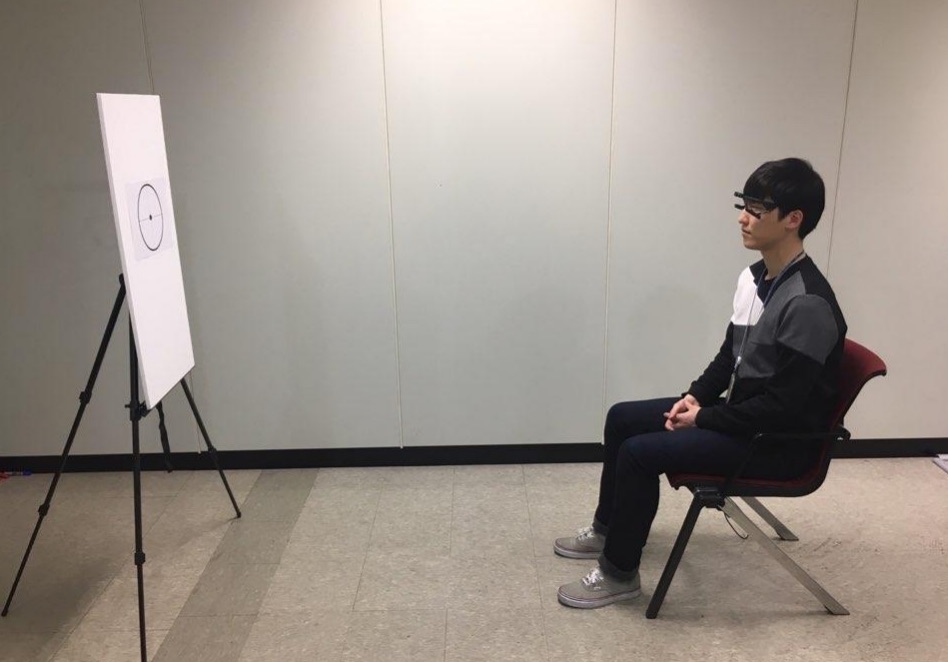

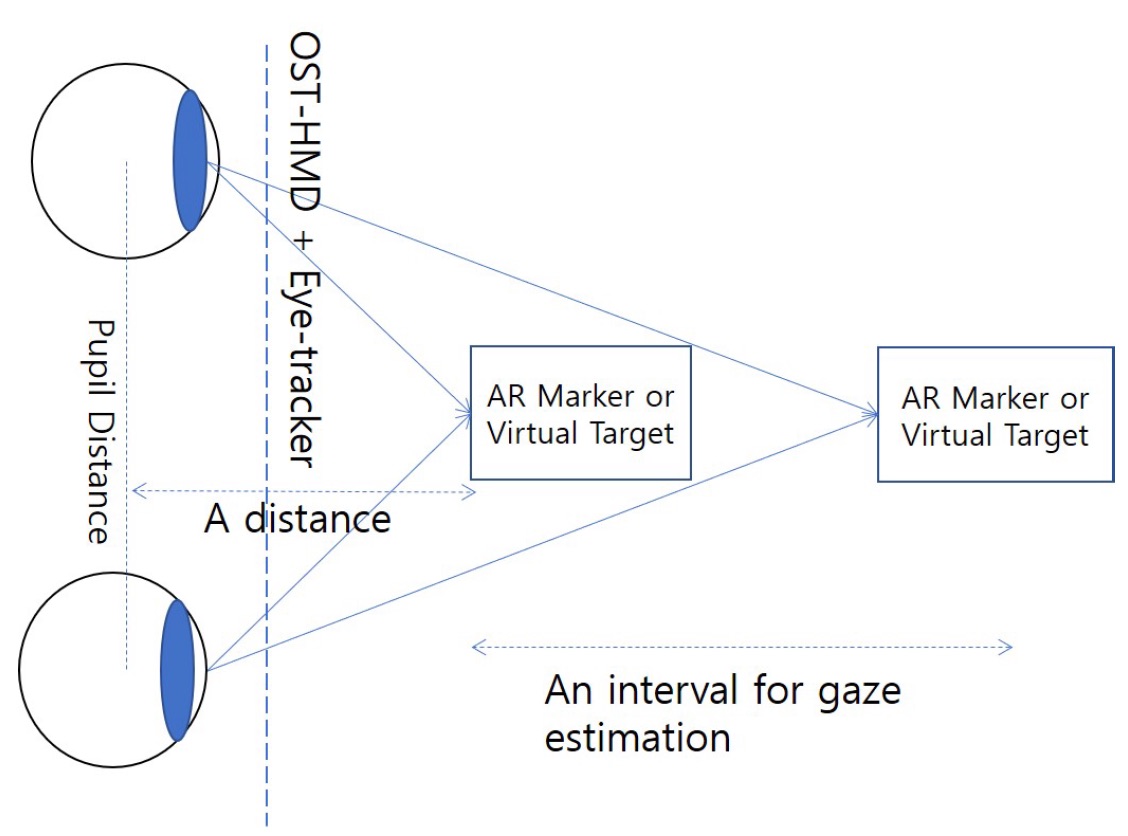

Estimating Gaze Depth Using Multi-Layer Perceptron

Lee, Y., Shin, C., Plopski, A., Itoh, Y., Piumsomboon, T., Dey, A., ... & Billinghurst, M. (2017, June). Estimating Gaze Depth Using Multi-Layer Perceptron. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 26-29). IEEE.

@inproceedings{lee2017estimating,

title={Estimating Gaze Depth Using Multi-Layer Perceptron},

author={Lee, Youngho and Shin, Choonsung and Plopski, Alexander and Itoh, Yuta and Piumsomboon, Thammathip and Dey, Arindam and Lee, Gun and Kim, Seungwon and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={26--29},

year={2017},

organization={IEEE}

}

In this paper we describe a new method for determining gaze depth in a head mounted eye-tracker. Eyetrackers are being incorporated into head mounted displays (HMDs), and eye-gaze is being used for interaction in Virtual and Augmented Reality. For some interaction methods, it is important to accurately measure the x- and y-direction of the eye-gaze and especially the focal depth information. Generally, eye tracking technology has a high accuracy in x- and y-directions, but not in depth. We used a binocular gaze tracker with two eye cameras, and the gaze vector was input to an MLP neural network for training and estimation. For the performance evaluation, data was obtained from 13 people gazing at fixed points at distances from 1m to 5m. The gaze classification into fixed distances produced an average classification error of nearly 10%, and an average error distance of 0.42m. This is sufficient for some Augmented Reality applications, but more research is needed to provide an estimate of a user’s gaze moving in continuous space.

-

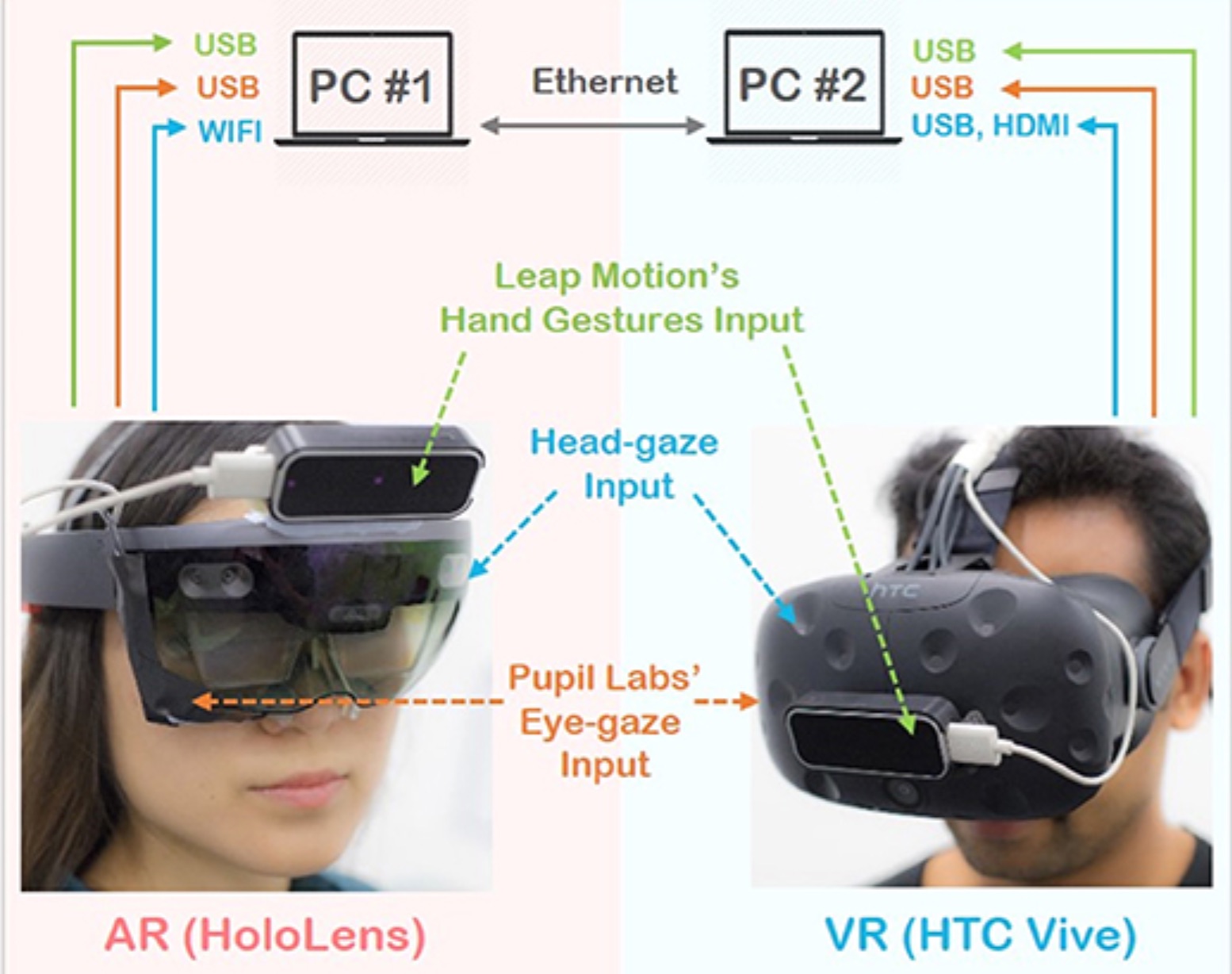

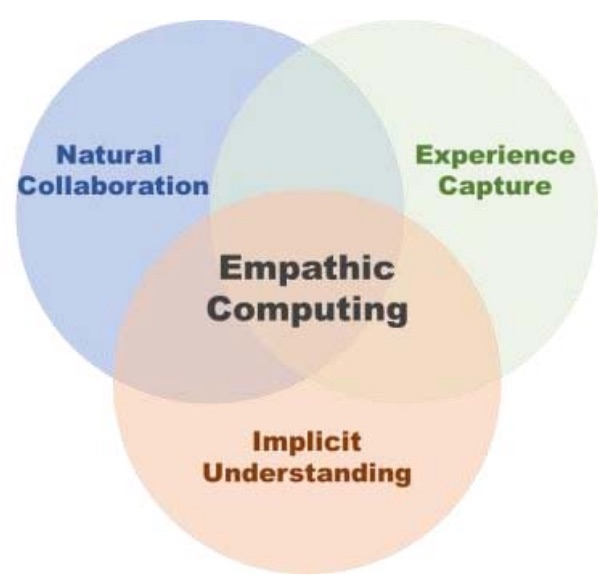

Empathic mixed reality: Sharing what you feel and interacting with what you see

Piumsomboon, T., Lee, Y., Lee, G. A., Dey, A., & Billinghurst, M.

Piumsomboon, T., Lee, Y., Lee, G. A., Dey, A., & Billinghurst, M. (2017, June). Empathic mixed reality: Sharing what you feel and interacting with what you see. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 38-41). IEEE.

@inproceedings{piumsomboon2017empathic,

title={Empathic mixed reality: Sharing what you feel and interacting with what you see},

author={Piumsomboon, Thammathip and Lee, Youngho and Lee, Gun A and Dey, Arindam and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={38--41},

year={2017},

organization={IEEE}

}

Empathic Computing is a research field that aims to use technology to create deeper shared understanding or empathy between people. At the same time, Mixed Reality (MR) technology provides an immersive experience that can make an ideal interface for collaboration. In this paper, we present some of our research into how MR technology can be applied to creating Empathic Computing experiences. This includes exploring how to share gaze in a remote collaboration between Augmented Reality (AR) and Virtual Reality (VR) environments, using physiological signals to enhance collaborative VR, and supporting interaction through eye-gaze in VR. Early outcomes indicate that as we design collaborative interfaces to enhance empathy between people, this could also benefit the personal experience of the individual interacting with the interface.

-

Mutually Shared Gaze in Augmented Video Conference

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M.

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M. (2017, October). Mutually Shared Gaze in Augmented Video Conference. In Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017 (pp. 79-80). Institute of Electrical and Electronics Engineers Inc.

@inproceedings{lee2017mutually,

title={Mutually Shared Gaze in Augmented Video Conference},

author={Lee, Gun and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

booktitle={Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017},

pages={79--80},

year={2017},

organization={Institute of Electrical and Electronics Engineers Inc.}

}

Augmenting video conference with additional visual cues has been studied to improve remote collaboration. A common setup is a person wearing a head-mounted display (HMD) and camera sharing her view of the workspace with a remote collaborator and getting assistance on a real-world task. While this configuration has been extensively studied, there has been little research on how sharing gaze cues might affect the collaboration. This research investigates how sharing gaze in both directions between a local worker and remote helper affects the collaboration and communication. We developed a prototype system that shares the eye gaze of both users, and conducted a user study. Preliminary results showed that sharing gaze significantly improves the awareness of each other's focus, hence improving collaboration.

-

A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M.

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M. (2017, November). A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos (pp. 1-2). Eurographics Association.

@inproceedings{lee2017gaze,

title={A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs},

author={Lee, Youngho and Piumsomboon, Thammathip and Ens, Barrett and Lee, Gun and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos},

pages={1--2},

year={2017},

organization={Eurographics Association}

}

The rapid development of machine learning algorithms can be leveraged for potential software solutions in many domains including techniques for depth estimation of human eye gaze. In this paper, we propose an implicit and continuous data acquisition method for 3D gaze depth estimation for an optical see-through head mounted display (OST-HMD) equipped with an eye tracker. Our method constantly monitors and generates user gaze data for training our machine learning algorithm. The gaze data acquired through the eye-tracker include the inter-pupillary distance (IPD) and the gaze distance to the real and virtual target for each eye.

-

Exploring pupil dilation in emotional virtual reality environments.

Chen, H., Dey, A., Billinghurst, M., & Lindeman, R. W.

Chen, H., Dey, A., Billinghurst, M., & Lindeman, R. W. (2017, November). Exploring pupil dilation in emotional virtual reality environments. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments (pp. 169-176). Eurographics Association.

@inproceedings{chen2017exploring,

title={Exploring pupil dilation in emotional virtual reality environments},

author={Chen, Hao and Dey, Arindam and Billinghurst, Mark and Lindeman, Robert W},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments},

pages={169--176},

year={2017},

organization={Eurographics Association}

}

Previous investigations have shown that pupil dilation can be affected by emotive pictures, audio clips, and videos. In this paper, we explore how emotive Virtual Reality (VR) content can also cause pupil dilation. VR has been shown to be able to evoke negative and positive arousal in users when they are immersed in different virtual scenes. In our research, VR scenes were used as emotional triggers. Five emotional VR scenes were designed in our study and each scene had five emotion segments; happiness, fear, anxiety, sadness, and disgust. When participants experienced the VR scenes, their pupil dilation and the brightness in the headset were captured. We found that both the negative and positive emotion segments produced pupil dilation in the VR environments. We also explored the effect of showing heart beat cues to the users, and if this could cause difference in pupil dilation. In our study, three different heart beat cues were shown to users using a combination of three channels; haptic, audio, and visual. The results showed that the haptic-visual cue caused the most significant pupil dilation change from the baseline.

-

Exploring enhancements for remote mixed reality collaboration

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M.

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M. (2017, November). Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 16). ACM.

@inproceedings{piumsomboon2017exploring,

title={Exploring enhancements for remote mixed reality collaboration},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Youngho and Lee, Gun and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={16},

year={2017},

organization={ACM}

}

In this paper, we explore techniques for enhancing remote Mixed Reality (MR) collaboration in terms of communication and interaction. We created CoVAR, an MR system for remote collaboration between Augmented Reality (AR) and Augmented Virtuality (AV) users. Awareness cues and an AV-Snap-to-AR interface were proposed for enhancing communication. Collaborative natural interaction and AV-User-Body-Scaling were implemented for enhancing interaction. We conducted an exploratory study examining the awareness cues and the collaborative gaze, and the results showed the benefits of the proposed techniques for enhancing communication and interaction.

-

A Systematic Review of Usability Studies in Augmented Reality between 2005 and 2014

Dey, A., Billinghurst, M., Lindeman, R. W., & Swan II, J. E.

Dey, A., Billinghurst, M., Lindeman, R. W., & Swan II, J. E. (2016, September). A systematic review of usability studies in augmented reality between 2005 and 2014. In 2016 IEEE international symposium on mixed and augmented reality (ISMAR-Adjunct) (pp. 49-50). IEEE.

@inproceedings{dey2016systematic,

title={A systematic review of usability studies in augmented reality between 2005 and 2014},

author={Dey, Arindam and Billinghurst, Mark and Lindeman, Robert W and Swan II, J Edward},

booktitle={2016 IEEE international symposium on mixed and augmented reality (ISMAR-Adjunct)},

pages={49--50},

year={2016},

organization={IEEE}

}

Augmented Reality (AR) interfaces have been studied extensively over the last few decades, with a growing number of user-based experiments. In this paper, we systematically review most AR papers published between 2005 and 2014 that include user studies. A total of 291 papers have been reviewed and classified based on their application areas. The primary contribution of the review is to present the broad landscape of user-based AR research, and to provide a high-level view of how that landscape has changed. We also identify areas where there have been few user studies, and opportunities for future research. This poster describes the methodology of the review and the classifications of AR research that have emerged.

-

Sharing Manipulated Heart Rate Feedback in Collaborative Virtual Environments

Arindam Dey; Hao Chen; Ashkan Hayati; Mark Billinghurst; Robert W. Lindeman

@inproceedings{dey2019sharing,

title={Sharing Manipulated Heart Rate Feedback in Collaborative Virtual Environments},

author={Dey, Arindam and Chen, Hao and Hayati, Ashkan and Billinghurst, Mark and Lindeman, Robert W},

booktitle={2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages={248--257},

year={2019},

organization={IEEE}

}

We have explored the effects of sharing manipulated heart rate feedback in collaborative virtual environments. In our study, we created two different types of virtual environments (active and passive) with different levels of interaction and provided three levels of manipulated heart rate feedback (decreased, unchanged, and increased). We measured the effects of manipulated feedback on social presence, affect, physical heart rate, and overall experience. We noticed a significant effect of the manipulated heart rate feedback on scariness and nervousness. The perception of the collaborator's valence and arousal was also affected: increased heart rate feedback was perceived as higher valence and lower arousal. Increased heart rate feedback decreased the real heart rate. The type of virtual environment had a significant effect on social presence, heart rate, and affect, with the active environment performing better across these measurements. We discuss the implications of this and directions for future research.

-

Neurophysiological Effects of Presence in Calm Virtual Environments

Arindam Dey; Jane Phoon; Shuvodeep Saha; Chelsea Dobbins; Mark Billinghurst

A. Dey, J. Phoon, S. Saha, C. Dobbins and M. Billinghurst, "Neurophysiological Effects of Presence in Calm Virtual Environments," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 2020, pp. 745-746, doi: 10.1109/VRW50115.2020.00223.

@inproceedings{dey2020neurophysiological,

title={Neurophysiological Effects of Presence in Calm Virtual Environments},

author={Dey, Arindam and Phoon, Jane and Saha, Shuvodeep and Dobbins, Chelsea and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={745--746},

year={2020},

organization={IEEE}

}

Presence, the feeling of being there, is an important factor that affects the overall experience of virtual reality. Presence is measured through post-experience subjective questionnaires. While questionnaires are a widely used method in human-based research, they suffer from participant biases, dishonest answers, and fatigue. In this paper, we measured the effects of different levels of presence (high and low) in virtual environments using physiological and neurological signals as an alternative method. Results indicated a significant effect of presence on both physiological and neurological signals.

-

Using augmented reality with speech input for non-native children's language learning

Che Samihah Che Dalim, Mohd Shahrizal Sunar, Arindam Dey, Mark Billinghurst

Dalim, Che Samihah Che, et al. "Using augmented reality with speech input for non-native children's language learning." International Journal of Human-Computer Studies 134 (2020): 44-64.

@article{dalim2020using,

title={Using augmented reality with speech input for non-native children's language learning},

author={Dalim, Che Samihah Che and Sunar, Mohd Shahrizal and Dey, Arindam and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

volume={134},

pages={44--64},

year={2020},

publisher={Elsevier}

}

Augmented Reality (AR) offers an enhanced learning environment which could potentially influence children's experience and knowledge gain during the language learning process. Teaching English or other foreign languages to children with a different native language can be difficult and requires an effective strategy to avoid boredom and detachment from the learning activities. With the growing number of AR education applications and the increasing pervasiveness of speech recognition, we are keen to understand how these technologies benefit non-native young children in learning English. In this paper, we explore children's experience in terms of knowledge gain and enjoyment when learning through a combination of AR and speech recognition technologies. We developed a prototype AR interface called TeachAR, and ran two experiments to investigate how effective the combination of AR and speech recognition was for learning 1) English terms for colors and shapes, and 2) English words for spatial relationships. We found encouraging results from a novel teaching strategy using these two technologies: it not only increased knowledge gain and enjoyment compared with a traditional strategy, but also enabled young children to finish certain tasks faster and more easily.

-

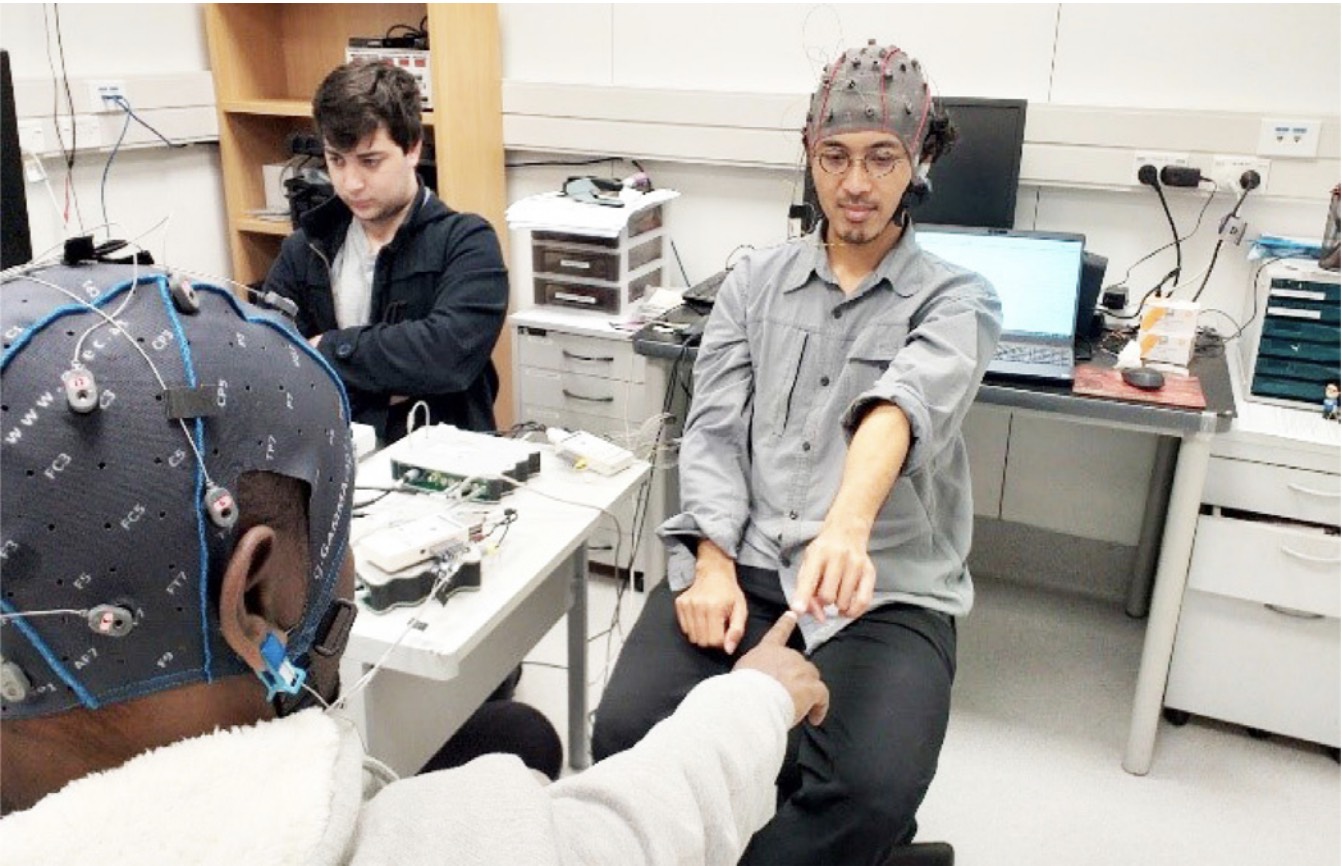

A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning

Ihshan Gumilar, Ekansh Sareen, Reed Bell, Augustus Stone, Ashkan Hayati, Jingwen Mao, Amit Barde, Anubha Gupta, Arindam Dey, Gun Lee, Mark Billinghurst

Gumilar, I., Sareen, E., Bell, R., Stone, A., Hayati, A., Mao, J., ... & Billinghurst, M. (2021). A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning. Computers & Graphics, 94, 62-75.

@article{gumilar2021comparative,

title={A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning},

author={Gumilar, Ihshan and Sareen, Ekansh and Bell, Reed and Stone, Augustus and Hayati, Ashkan and Mao, Jingwen and Barde, Amit and Gupta, Anubha and Dey, Arindam and Lee, Gun and others},

journal={Computers \& Graphics},

volume={94},

pages={62--75},

year={2021},

publisher={Elsevier}

}

Researchers have employed hyperscanning, a technique used to simultaneously record neural activity from multiple participants, in real-world collaborations. However, to the best of our knowledge, there is no study that has used hyperscanning in Virtual Reality (VR). The aims of this study were, firstly, to replicate results of inter-brain synchrony reported in existing literature for a real-world task and, secondly, to explore whether inter-brain synchrony could be elicited in a Virtual Environment (VE). This paper reports on three pilot studies in two different settings (real-world and VR). Paired participants performed two sessions of a finger-pointing exercise separated by a finger-tracking exercise during which their neural activity was simultaneously recorded by electroencephalography (EEG) hardware. By using Phase Locking Value (PLV) analysis, VR was found to induce similar inter-brain synchrony as seen in the real world. Further, it was observed that the finger-pointing exercise shared the same neurally activated area in both the real world and VR. Based on these results, we infer that VR can be used to enhance inter-brain synchrony in collaborative tasks carried out in a VE. In particular, we have been able to demonstrate that changing visual perspective in VR is capable of eliciting inter-brain synchrony. This demonstrates that VR could be an exciting platform to explore the phenomenon of inter-brain synchrony further and provide a deeper understanding of the neuroscience of human communication.

-

Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR

Ihshan Gumilar, Amit Barde, Ashkan Hayati, Mark Billinghurst, Gun Lee, Abdul Momin, Charles Averill, Arindam Dey

Gumilar, I., Barde, A., Hayati, A. F., Billinghurst, M., Lee, G., Momin, A., ... & Dey, A. (2021, May). Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gumilar2021connecting,

title={Connecting the Brains via Virtual Eyes: Eye-Gaze Directions and Inter-brain Synchrony in VR},

author={Gumilar, Ihshan and Barde, Amit and Hayati, Ashkan F and Billinghurst, Mark and Lee, Gun and Momin, Abdul and Averill, Charles and Dey, Arindam},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Hyperscanning is an emerging method for measuring two or more brains simultaneously. This method allows researchers to simultaneously record neural activity from two or more people. While this method has been extensively implemented over the last five years in the real world to study inter-brain synchrony, little work has been undertaken on the use of hyperscanning in virtual environments. Preliminary research in the area demonstrates that inter-brain synchrony in virtual environments can be achieved in a manner similar to that seen in the real world. The study described in this paper proposes to further research in the area by studying how non-verbal communication cues in social interactions in virtual environments can affect inter-brain synchrony. In particular, we concentrate on the role eye gaze plays in inter-brain synchrony. The aim of this research is to explore how eye gaze affects inter-brain synchrony between users in a collaborative virtual environment.

-

A Review of Hyperscanning and Its Use in Virtual Environments

Amit Barde, Ihshan Gumilar, Ashkan F. Hayati, Arindam Dey, Gun Lee, Mark Billinghurst

Barde, A., Gumilar, I., Hayati, A. F., Dey, A., Lee, G., & Billinghurst, M. (2020, December). A Review of Hyperscanning and Its Use in Virtual Environments. In Informatics (Vol. 7, No. 4, p. 55). Multidisciplinary Digital Publishing Institute.

@inproceedings{barde2020review,

title={A Review of Hyperscanning and Its Use in Virtual Environments},

author={Barde, Amit and Gumilar, Ihshan and Hayati, Ashkan F and Dey, Arindam and Lee, Gun and Billinghurst, Mark},

booktitle={Informatics},

volume={7},

number={4},

pages={55},

year={2020},

organization={Multidisciplinary Digital Publishing Institute}

}

-

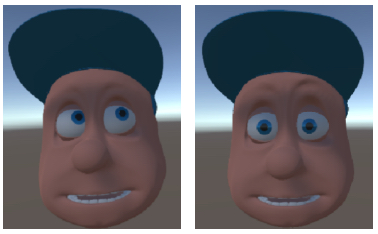

Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals

Arindam Dey, Amit Barde, Bowen Yuan, Ekansh Sareen, Chelsea Dobbins, Aaron Goh, Gaurav Gupta, Anubha Gupta, Mark Billinghurst

Dey, A., Barde, A., Yuan, B., Sareen, E., Dobbins, C., Goh, A., ... & Billinghurst, M. (2022). Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals. International Journal of Human-Computer Studies, 160, 102762.

@article{dey2022effects,

title={Effects of interacting with facial expressions and controllers in different virtual environments on presence, usability, affect, and neurophysiological signals},

author={Dey, Arindam and Barde, Amit and Yuan, Bowen and Sareen, Ekansh and Dobbins, Chelsea and Goh, Aaron and Gupta, Gaurav and Gupta, Anubha and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

volume={160},

pages={102762},

year={2022},

publisher={Elsevier}

}

Virtual Reality (VR) interfaces provide an immersive medium to interact with the digital world. Most VR interfaces require physical interactions using handheld controllers, but there are alternative interaction methods that can support different use cases and users. Interaction methods in VR are primarily evaluated based on their usability; however, their differences in neurological and physiological effects remain less investigated. In this paper, along with other traditional qualitative metrics such as presence, affect, and system usability, we explore the neurophysiological effects (brain signals and electrodermal activity) of using an alternative facial expression interaction method to interact with VR interfaces. This form of interaction was also compared with traditional handheld controllers. Three different environments, each offering a different experience, were used: happy (butterfly catching), neutral (object picking), and scary (zombie shooting). Overall, we noticed an effect of interaction methods on the gamma activities in the brain and on skin conductance. For some aspects of presence, facial expressions outperformed controllers, but controllers were found to be better than facial expressions in terms of usability.

-

An AR/TUI-supported Debugging Teaching Environment

Dmitry Resnyansky, Mark Billinghurst, Arindam Dey

Resnyansky, D., Billinghurst, M., & Dey, A. (2019, December). An AR/TUI-supported debugging teaching environment. In Proceedings of the 31st Australian Conference on Human-Computer-Interaction (pp. 590-594).

@inproceedings{10.1145/3369457.3369538,

author = {Resnyansky, Dmitry and Billinghurst, Mark and Dey, Arindam},

title = {An AR/TUI-Supported Debugging Teaching Environment},

year = {2020},

isbn = {9781450376969},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3369457.3369538},

doi = {10.1145/3369457.3369538},

abstract = {This paper presents research on the potential application of Tangible and Augmented Reality (AR) technology to computer science education and the teaching of programming in tertiary settings. An approach to an AR-supported debugging-teaching prototype is outlined, focusing on the design of an AR workspace that uses physical markers to interact with content (code). We describe a prototype which has been designed to actively scaffold the student's development of the two primary abilities necessary for effective debugging: (1) the ability to read not just the code syntax, but to understand the overall program structure behind the code; and (2) the ability to independently recall and apply the new knowledge to produce new, working code structures.},

booktitle = {Proceedings of the 31st Australian Conference on Human-Computer-Interaction},

pages = {590--594},

numpages = {5},

keywords = {tangible user interface, tertiary education, debugging, Human-computer interaction, augmented reality},

location = {Fremantle, WA, Australia},

series = {OzCHI '19}

}

This paper presents research on the potential application of Tangible and Augmented Reality (AR) technology to computer science education and the teaching of programming in tertiary settings. An approach to an AR-supported debugging-teaching prototype is outlined, focusing on the design of an AR workspace that uses physical markers to interact with content (code). We describe a prototype which has been designed to actively scaffold the student's development of the two primary abilities necessary for effective debugging: (1) the ability to read not just the code syntax, but to understand the overall program structure behind the code; and (2) the ability to independently recall and apply the new knowledge to produce new, working code structures.

-

Deep Learning-based Simulator Sickness Estimation from 3D Motion

Junhong Zhao, Kien TP Tran, Andrew Chalmers, Weng Khuan Hoh, Richard Yao, Arindam Dey, James Wilmott, James Lin, Mark Billinghurst, Robert W. Lindeman, Taehyun Rhee

Zhao, J., Tran, K. T., Chalmers, A., Hoh, W. K., Yao, R., Dey, A., ... & Rhee, T. (2023, October). Deep learning-based simulator sickness estimation from 3D motion. In 2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 39-48). IEEE.

@inproceedings{zhao2023deep,

title={Deep learning-based simulator sickness estimation from 3D motion},

author={Zhao, Junhong and Tran, Kien TP and Chalmers, Andrew and Hoh, Weng Khuan and Yao, Richard and Dey, Arindam and Wilmott, James and Lin, James and Billinghurst, Mark and Lindeman, Robert W and others},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

pages={39--48},

year={2023},

organization={IEEE}

}

This paper presents a novel solution for estimating simulator sickness in HMDs using machine learning and 3D motion data, informed by user-labeled simulator sickness data and user analysis. We conducted a novel VR user study, which decomposed motion data and used an instant dial-based sickness scoring mechanism. We were able to emulate typical VR usage and collect user simulator sickness scores. Our user analysis shows that translation and rotation differently impact user simulator sickness in HMDs. In addition, users’ demographic information and self-assessed simulator sickness susceptibility data are collected and show some indication of potential simulator sickness. Guided by the findings from the user study, we developed a novel deep learning-based solution to better estimate simulator sickness with decomposed 3D motion features and user profile information. The model was trained and tested using the 3D motion dataset with user-labeled simulator sickness and profiles collected from the user study. The results show higher estimation accuracy when using the 3D motion data compared with methods based on optical flow extracted from the recorded video, as well as improved accuracy when decomposing the motion data and incorporating user profile information.

-

Towards an Inclusive and Accessible Metaverse

Callum Parker, Soojeong Yoo, Youngho Lee, Joel Fredericks, Arindam Dey, Youngjun Cho, and Mark Billinghurst

Parker, C., Yoo, S., Lee, Y., Fredericks, J., Dey, A., Cho, Y., & Billinghurst, M. (2023, April). Towards an inclusive and accessible metaverse. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems (pp. 1-5).

@inproceedings{parker2023towards,

title={Towards an inclusive and accessible metaverse},

author={Parker, Callum and Yoo, Soojeong and Lee, Youngho and Fredericks, Joel and Dey, Arindam and Cho, Youngjun and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems},

pages={1--5},

year={2023}

}

The push towards a Metaverse is growing, with companies such as Meta developing their own interpretation of what it should look like. The Metaverse at its conceptual core promises to remove boundaries and borders, becoming a decentralised entity for everyone to use - forming a digital virtual layer over our own “real” world. However, creation of a Metaverse or “new world” presents the opportunity to create one which is inclusive and accessible to all. This challenge is explored and discussed in this workshop, with an aim of understanding how to create a Metaverse which is open and inclusive to people with physical and intellectual disabilities, and how interactions can be designed in a way to minimise disadvantage. The key outcomes of this workshop outline new opportunities for improving accessibility in the Metaverse, methodologies for designing and evaluating accessibility, and key considerations for designing accessible Metaverse environments and interactions.