Tamil Selvan


Research Fellow

Tamil Selvan is a master’s student at the Empathic Computing Laboratory at the University of Auckland, New Zealand, under the supervision of Prof. Mark Billinghurst. His research involves creating an interactive system using multiple radar systems. Prior to this, he worked as a research intern at the Augmented Human Lab at the University of Auckland.

He obtained a bachelor’s degree in Electronics and Communication Engineering from Vellore Institute of Technology, India. For more information, please visit www.tamilselvan.info

Projects


Mind Reader

This project explores how brain activity can be used as computer input. The innovative MindReader game uses EEG (electroencephalogram)-based Brain-Computer Interface (BCI) technology to display the player’s brain waves in real time. Colourful, creative visuals show the raw brain activity from a number of EEG electrodes worn on the head. The player can also play a version of the Angry Birds game in which their concentration level determines how far the birds are shot. In this cheerful and engaging demo, friends and family can challenge each other to see who has the strongest neural connections!
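The page does not say how concentration is turned into shot distance, so the following is only a minimal sketch under stated assumptions: a hypothetical concentration score on a 0 to 100 scale is clamped, mapped linearly onto a launch speed between two placeholder limits, and fed into the ideal (drag-free) projectile range formula v² sin(2θ)/g. The names `launch_speed` and `shot_distance` and all constants are illustrative, not taken from the game.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def launch_speed(concentration: float, v_min: float = 5.0, v_max: float = 20.0) -> float:
    """Map a hypothetical 0-100 concentration score linearly onto a launch speed (m/s).

    The 0-100 scale and the v_min/v_max limits are placeholder assumptions.
    """
    c = min(max(concentration, 0.0), 100.0)  # clamp noisy readings into range
    return v_min + (v_max - v_min) * c / 100.0


def shot_distance(concentration: float, angle_deg: float = 45.0) -> float:
    """Ideal projectile range on flat ground, ignoring drag: v^2 * sin(2*theta) / g."""
    v = launch_speed(concentration)
    return v * v * math.sin(math.radians(2.0 * angle_deg)) / G


for score in (20, 50, 90):
    print(score, round(shot_distance(score), 1))
```

A linear map keeps the feedback easy for players to read (more focus means a proportionally faster bird); a real game would likely also smooth the EEG-derived score over time before using it.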


RadarHand

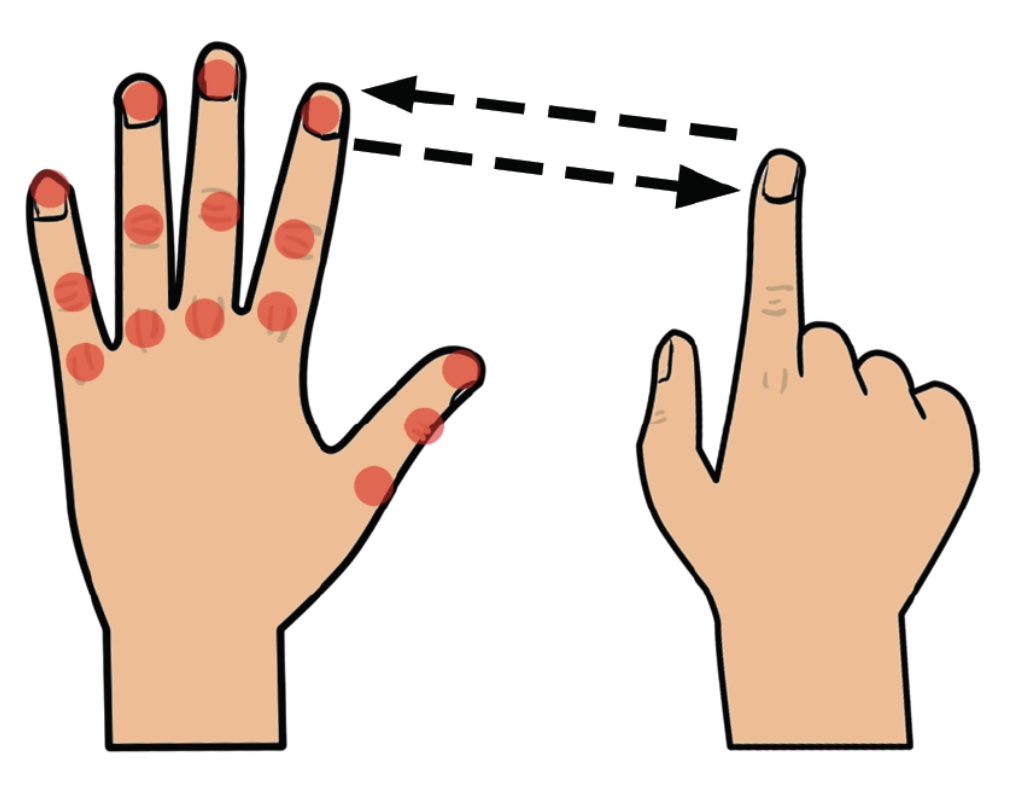

RadarHand is a wrist-worn wearable system that uses radar sensing to detect on-skin proprioceptive hand gestures, letting users interact through simple finger motions. Radar is robust, preserves privacy, is small, can penetrate materials, and requires little computation. In this project, we first evaluated the proprioceptive nature of the back of the hand and found that the thumb is the most proprioceptive of all the finger joints, followed by the index, middle, ring and pinky fingers. This helped determine which types of gestures suit the system best. Next, we trained deep-learning models for gesture classification. Out of 27 gesture group possibilities, we achieved 92% accuracy for a generic set of seven gestures and 93% accuracy for a proprioceptive set of eight gestures. We also evaluated RadarHand’s real-time performance and achieved accuracies between 74% and 91%, depending on whether the system or the user initiates the interaction. This research could contribute to a new generation of radar-based interfaces that allow people to interact with computers more naturally.

Publications


Adapting Fitts’ Law and N-Back to Assess Hand Proprioception

Tamil Gunasekaran, Ryo Hajika, Chloe Dolma Si Ying Haigh, Yun Suen Pai, Danielle Lottridge, Mark Billinghurst.

Gunasekaran, T. S., Hajika, R., Haigh, C. D. S. Y., Pai, Y. S., Lottridge, D., & Billinghurst, M. (2021, May). Adapting Fitts’ Law and N-Back to Assess Hand Proprioception. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7).

@inproceedings{gunasekaran2021adapting,

title={Adapting Fitts’ Law and N-Back to Assess Hand Proprioception},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Haigh, Chloe Dolma Si Ying and Pai, Yun Suen and Lottridge, Danielle and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--7},

year={2021}

}

Proprioception is the body’s ability to sense the position and movement of each limb, as well as the amount of effort exerted onto or by them. Methods to assess proprioception have been introduced before, yet there is little to no research on assessing the degree of proprioception of individual body parts for use cases such as gesture recognition in wearable computing. We propose using Fitts’ law coupled with the N-Back task to evaluate proprioception of the hand. We evaluate 15 distinct points on the back of the hand and assess them using an extended 3D Fitts’ law. Our results show that the index of difficulty of the tapping points increases gradually from the thumb to the pinky, with a linear regression factor of 0.1144. Additionally, participants perform the tap before performing the N-Back task. From these results, we discuss the fundamental limitations and suggest how Fitts’ law can be further extended to assess proprioception.
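The abstract does not give the exact extended 3D formulation, so this sketch only illustrates the general idea under stated assumptions: it uses the standard Shannon formulation of Fitts’ index of difficulty, ID = log2(D/W + 1), takes D as a 3D Euclidean distance between hypothetical start and target points, and fits an ordinary least-squares line of ID against finger order (thumb = 0 to pinky = 4). The slope of that line is one way a "linear regression factor" like the one reported could be computed. All coordinates and widths below are invented placeholders, not data from the study.

```python
import math
from typing import Sequence, Tuple

Point3D = Tuple[float, float, float]


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)


def index_of_difficulty_3d(start: Point3D, target: Point3D, width: float) -> float:
    """ID with D taken as the straight-line 3D distance between start and target."""
    return index_of_difficulty(math.dist(start, target), width)


def ols_slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Slope b of the ordinary least-squares line y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Hypothetical setup: one tapping target per finger, ordered thumb (0) to pinky (4).
# Coordinates (mm) and target widths (mm) are placeholders, NOT values from the paper.
home: Point3D = (0.0, 0.0, 0.0)
targets = [(40.0, 0.0, 5.0), (55.0, 5.0, 5.0), (60.0, 10.0, 5.0), (65.0, 15.0, 5.0), (70.0, 20.0, 5.0)]
widths = [12.0, 11.0, 10.0, 9.0, 8.0]

fingers = list(range(len(targets)))
ids = [index_of_difficulty_3d(home, t, w) for t, w in zip(targets, widths)]
print("slope of ID vs finger order:", ols_slope(fingers, ids))
```

A positive slope means targets become harder to hit (in Fitts’ terms) as one moves from the thumb towards the pinky; the paper’s extended 3D model may weight terms differently.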

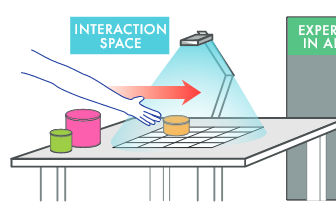

RaITIn: Radar-Based Identification for Tangible Interactions

Tamil Selvan Gunasekaran, Ryo Hajika, Yun Suen Pai, Eiji Hayashi, Mark Billinghurst.

Gunasekaran, T. S., Hajika, R., Pai, Y. S., Hayashi, E., & Billinghurst, M. (2022, April). RaITIn: Radar-Based Identification for Tangible Interactions. In CHI Conference on Human Factors in Computing Systems Extended Abstracts (pp. 1-7).

@inproceedings{gunasekaran2022raitin,

title={RaITIn: Radar-Based Identification for Tangible Interactions},

author={Gunasekaran, Tamil Selvan and Hajika, Ryo and Pai, Yun Suen and Hayashi, Eiji and Billinghurst, Mark},

booktitle={CHI Conference on Human Factors in Computing Systems Extended Abstracts},

pages={1--7},

year={2022}

}

Radar is primarily used for applications such as tracking and large-scale ranging; its use for object identification has rarely been explored. This paper introduces RaITIn, a radar-based identification (ID) method for tangible interactions. Unlike conventional radar solutions, RaITIn can track and identify objects on a tabletop scale. We use frequency-modulated continuous-wave (FMCW) radar sensors to classify different objects embedded with low-cost radar reflectors of varying sizes in a tabletop setup. We also introduce Stackable IDs, where different objects can be stacked and combined to produce unique IDs. As a result, RaITIn can accurately identify visually identical objects embedded with different low-cost reflector configurations. Combined with radar’s ability to track, this creates novel tabletop interaction modalities. We discuss possible applications and areas for future work.
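As background on the FMCW sensing mentioned above (not RaITIn’s identification pipeline, which the abstract does not describe), the sketch below shows the basic ranging principle: mixing the received chirp with the transmitted one yields a beat frequency f_b = S · 2R/c, where S is the chirp slope, so locating the peak of the beat signal’s spectrum gives the range R = c · f_b / (2S). The chirp parameters are hypothetical, the spectrum is a plain DFT for self-containment, and real pipelines add windowing, multiple chirps (for Doppler) and multiple antennas (for angle).

```python
import cmath
import math
from typing import List

C = 299_792_458.0  # speed of light in m/s

# Hypothetical chirp: 4 GHz swept in 100 us, sampled at 2.56 MHz for 256 samples.
BANDWIDTH = 4e9
CHIRP_TIME = 100e-6
SLOPE = BANDWIDTH / CHIRP_TIME  # chirp slope S in Hz/s
FS = 2.56e6
N = 256  # N / FS equals CHIRP_TIME


def beat_frequency(range_m: float, slope_hz_per_s: float) -> float:
    """Beat frequency of a static target: chirp slope times round-trip delay 2R/c."""
    return slope_hz_per_s * 2.0 * range_m / C


def simulate_beat(range_m: float, slope: float, fs_hz: float, n: int) -> List[float]:
    """Ideal, noise-free beat signal for a single static reflector."""
    f_b = beat_frequency(range_m, slope)
    return [math.cos(2.0 * math.pi * f_b * t / fs_hz) for t in range(n)]


def estimate_range(samples: List[float], fs_hz: float, slope: float) -> float:
    """Pick the strongest positive-frequency DFT bin and convert it to range."""
    n = len(samples)
    best_bin, best_mag = 1, -1.0
    for k in range(1, n // 2):  # skip DC, keep positive frequencies only
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    f_beat = best_bin * fs_hz / n
    return C * f_beat / (2.0 * slope)


resolution = C / (2.0 * BANDWIDTH)  # range resolution c / (2B), about 3.7 cm here
estimate = estimate_range(simulate_beat(0.375, SLOPE, FS, N), FS, SLOPE)
print(f"estimated range {estimate:.4f} m, resolution {resolution:.4f} m")
```

The estimate should land within one range bin of the true 0.375 m; the wide sweep bandwidth is what gives centimetre-scale resolution, which is why FMCW radar can work at tabletop distances.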

RadarHand: a Wrist-Worn Radar for On-Skin Touch-based Proprioceptive Gestures

Ryo Hajika, Tamil Selvan Gunasekaran, Chloe Dolma Si Ying Haigh, Yun Suen Pai, Eiji Hayashi, Jaime Lien, Danielle Lottridge, and Mark Billinghurst.

Hajika, R., Gunasekaran, T. S., Haigh, C. D. S. Y., Pai, Y. S., Hayashi, E., Lien, J., ... & Billinghurst, M. (2024). RadarHand: A Wrist-Worn Radar for On-Skin Touch-Based Proprioceptive Gestures. ACM Transactions on Computer-Human Interaction, 31(2), 1-36.

@article{hajika2024radarhand,

title={RadarHand: A Wrist-Worn Radar for On-Skin Touch-Based Proprioceptive Gestures},

author={Hajika, Ryo and Gunasekaran, Tamil Selvan and Haigh, Chloe Dolma Si Ying and Pai, Yun Suen and Hayashi, Eiji and Lien, Jaime and Lottridge, Danielle and Billinghurst, Mark},

journal={ACM Transactions on Computer-Human Interaction},

volume={31},

number={2},

pages={1--36},

year={2024},

publisher={ACM New York, NY, USA}

}

We introduce RadarHand, a wrist-worn wearable with millimetre-wave radar that detects on-skin touch-based proprioceptive hand gestures. Radars are robust, private and small, can penetrate materials, and require little computation. We first evaluated the proprioceptive and tactile perception of the back of the hand and found that, in eyes-free and high-cognitive-load situations, tapping on the thumb produces the least proprioceptive error of all the finger joints, followed by the index, middle, ring, and pinky fingers. Next, we trained deep-learning models for gesture classification. We introduce two types of gestures based on locations on the back of the hand: generic gestures and discrete gestures. Discrete gestures start and end at specific locations on the back of the hand, whereas generic gestures can start and end anywhere on the back of the hand. Out of 27 gesture group possibilities, we achieved 92% accuracy for a set of seven generic gestures and 93% accuracy for a set of eight discrete gestures. Finally, we evaluated RadarHand’s real-time performance under two interaction modes: active interaction, where the user initiates input to achieve the desired output, and reactive interaction, where the device initiates the interaction and requires the user to react. We obtained accuracies of 87% and 74% for active generic and discrete gestures, respectively, and 91% and 81.7% for reactive generic and discrete gestures, respectively. We discuss the implications of RadarHand for gesture recognition and directions for future work.
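To make the generic/discrete distinction concrete, here is a minimal sketch of a gesture template as a data structure, assuming a gesture can be summarised by a motion label plus optional start and end locations on the back of the hand. This is not how RadarHand recognises gestures (it classifies radar signals with deep-learning models); the `GestureSpec` and `matches` names and all motion and location labels are hypothetical, and the code only encodes the definition: a discrete gesture pins both endpoints, a generic one pins neither.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GestureSpec:
    """Hypothetical gesture template; start/end of None means 'anywhere on the back of the hand'."""
    name: str
    motion: str                  # hypothetical motion label, e.g. "swipe_down"
    start: Optional[str] = None  # hypothetical location label, e.g. "thumb"
    end: Optional[str] = None

    @property
    def is_discrete(self) -> bool:
        # Discrete gestures fix both where they start and where they end.
        return self.start is not None and self.end is not None


def matches(spec: GestureSpec, motion: str, start: str, end: str) -> bool:
    """Check an observed (motion, start, end) triple against a template."""
    if spec.motion != motion:
        return False
    if spec.start is not None and spec.start != start:
        return False
    if spec.end is not None and spec.end != end:
        return False
    return True


generic_swipe = GestureSpec("swipe down", "swipe_down")
discrete_swipe = GestureSpec("thumb to pinky", "swipe_across", start="thumb", end="pinky")

print(matches(generic_swipe, "swipe_down", "middle", "wrist"))    # endpoints ignored
print(matches(discrete_swipe, "swipe_across", "index", "pinky"))  # wrong start location
```

A structure like this could, for example, be used to label recorded sessions consistently before training, since a discrete gesture label only applies when both endpoints agree.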

Virtual Journalist: Measuring and Inducing Cultural Empathy by Visualizing Empathic Perspectives in VR

Ana Alipass Fernandez, Christopher Changmok Kim, Tamil Selvan Gunasekaran, Yun Suen Pai, and Kouta Minamizawa.

Fernandez, A. A., Kim, C. C., Gunasekaran, T. S., Pai, Y. S., & Minamizawa, K. (2023, October). Virtual journalist: measuring and inducing cultural empathy by visualizing empathic perspectives in VR. In 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 667-672). IEEE.

@inproceedings{fernandez2023virtual,

title={Virtual journalist: measuring and inducing cultural empathy by visualizing empathic perspectives in VR},

author={Fernandez, Ana Alipass and Kim, Christopher Changmok and Gunasekaran, Tamil Selvan and Pai, Yun Suen and Minamizawa, Kouta},

booktitle={2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={667--672},

year={2023},

organization={IEEE}

}

Cultural empathy, the ability to empathize with individuals from diverse cultural backgrounds, has garnered attention across multiple disciplines. However, little research has focused on using immersive experiences to deliver journalistic encounters that foster cultural empathy. To address this, we propose Virtual Journalist, a virtual reality (VR) system that immerses users in the role of a journalist, allowing them to watch 360° video documentaries and capture moments that evoke cultural empathy through photography. The system integrates both the cognitive and affective dimensions of empathy by linking physiological data, namely electrodermal activity (EDA) and heart rate variability (HRV), to the captured photographs. Our findings suggest that the impact of Virtual Journalist varies with the user’s cultural background, and that empathic accuracy can be achieved by combining user-generated photographs with the corresponding physiological data.
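The abstract does not specify which HRV metric or time window is used to link physiology to each photograph, so this is only a minimal sketch under stated assumptions: each photo timestamp is tagged with the mean EDA and the RMSSD (root mean square of successive RR-interval differences, a common time-domain HRV measure) computed over a symmetric window around the shutter press. The function names, the ±5 s window and the units (RR in ms, EDA in µS, timestamps in seconds) are hypothetical; very short windows make HRV estimates noisy, so a real study would choose the window carefully.

```python
import math
from bisect import bisect_left, bisect_right
from typing import Dict, List, Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    if not diffs:
        raise ValueError("need at least two RR intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def window(ts: List[float], values: List[float], center: float, half_width: float) -> List[float]:
    """Values whose timestamps lie in [center - half_width, center + half_width]; ts must be sorted."""
    lo = bisect_left(ts, center - half_width)
    hi = bisect_right(ts, center + half_width)
    return values[lo:hi]


def tag_photo(photo_t: float,
              rr_t: List[float], rr_ms: List[float],
              eda_t: List[float], eda_us: List[float],
              half_width: float = 5.0) -> Dict[str, float]:
    """Attach windowed HRV (RMSSD) and mean EDA to one hypothetical photo timestamp."""
    rr = window(rr_t, rr_ms, photo_t, half_width)
    eda = window(eda_t, eda_us, photo_t, half_width)
    if not eda:
        raise ValueError("no EDA samples in window")
    return {"rmssd_ms": rmssd(rr), "mean_eda_us": sum(eda) / len(eda)}


# Toy usage with made-up sensor streams.
tags = tag_photo(3.0,
                 rr_t=[1.0, 2.0, 3.0, 4.0, 20.0], rr_ms=[800, 810, 790, 805, 900],
                 eda_t=[0.0, 2.0, 4.0, 30.0], eda_us=[1.0, 2.0, 3.0, 10.0])
print(tags)
```

Keeping the per-photo features in a small dictionary makes it straightforward to join them with the photographs later when comparing users from different cultural backgrounds.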