Li Zhang

PhD Student

Li Zhang is a Ph.D. candidate at the joint Cyber-Physical Laboratory at Northwestern Polytechnical University, Xi’an, China, under the supervision of Prof. Weiping He. He is currently a visiting student at the Empathic Computing Laboratory at the University of Auckland, New Zealand, under the supervision of Prof. Mark Billinghurst. His research focuses on interactions between humans, computers, and smart objects in AR/VR-based intelligent manufacturing.

He received a bachelor’s degree in advanced manufacturing engineering from Northwestern Polytechnical University in 2015 and has been active in the AR/VR community for five years since then. He is dedicated to combining the latest information technology with industry to make advanced manufacturing systems more intelligent and to improve the harmony between humans and machines.

Projects

-

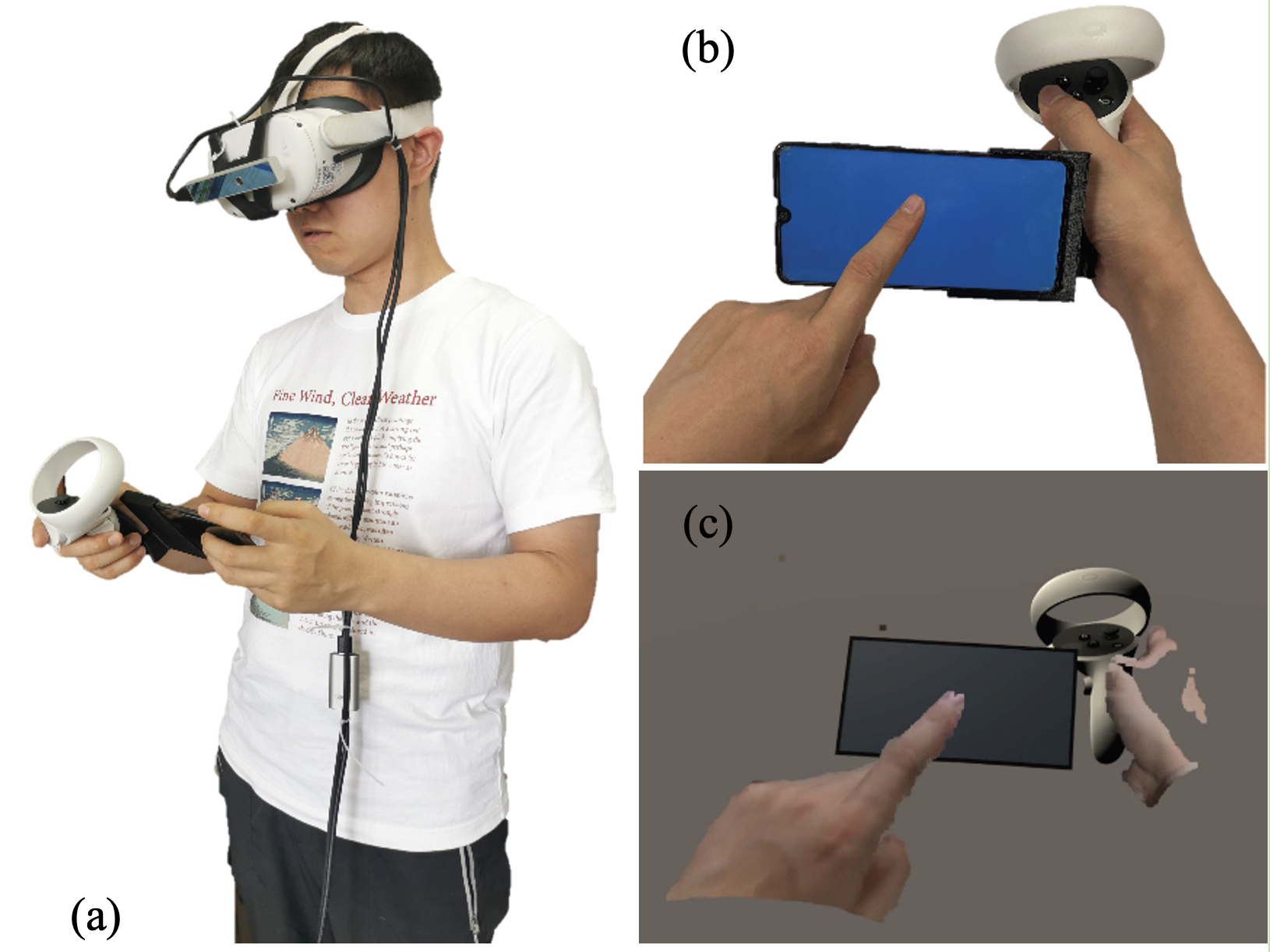

Using a Mobile Phone in VR

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer generated virtual environment. However, a VR HMD also blocks the users’ view of their physical surroundings, and so prevents them from using their mobile phones in a natural manner. In this project, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing his/her 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone.

Publications

-

Bringing full-featured mobile phone interaction into virtual reality

H Bai, L Zhang, J Yang, M Billinghurst

Bai, H., Zhang, L., Yang, J., & Billinghurst, M. (2021). Bringing full-featured mobile phone interaction into virtual reality. Computers & Graphics, 97, 42-53.

@article{bai2021bringing,

title={Bringing full-featured mobile phone interaction into virtual reality},

author={Bai, Huidong and Zhang, Li and Yang, Jing and Billinghurst, Mark},

journal={Computers \& Graphics},

volume={97},

pages={42--53},

year={2021},

publisher={Elsevier}

}

Virtual Reality (VR) Head-Mounted Display (HMD) technology immerses a user in a computer generated virtual environment. However, a VR HMD also blocks the users’ view of their physical surroundings, and so prevents them from using their mobile phones in a natural manner. In this paper, we present a novel Augmented Virtuality (AV) interface that enables people to naturally interact with a mobile phone in real time in a virtual environment. The system allows the user to wear a VR HMD while seeing his/her 3D hands captured by a depth sensor and rendered in different styles, and enables the user to operate a virtual mobile phone aligned with their real phone. We conducted a formal user study to compare the AV interface with physical touch interaction on user experience in five mobile applications. Participants reported that our system brought the real mobile phone into the virtual world. Unfortunately, the experiment results indicated that using a phone with our AV interfaces in VR was more difficult than the regular smartphone touch interaction, with increased workload and lower system usability, especially for a typing task. We ran a follow-up study to compare different hand visualizations for text typing using the AV interface. Participants felt that a skin-colored hand visualization method provided better usability and immersiveness than other hand rendering styles.

-

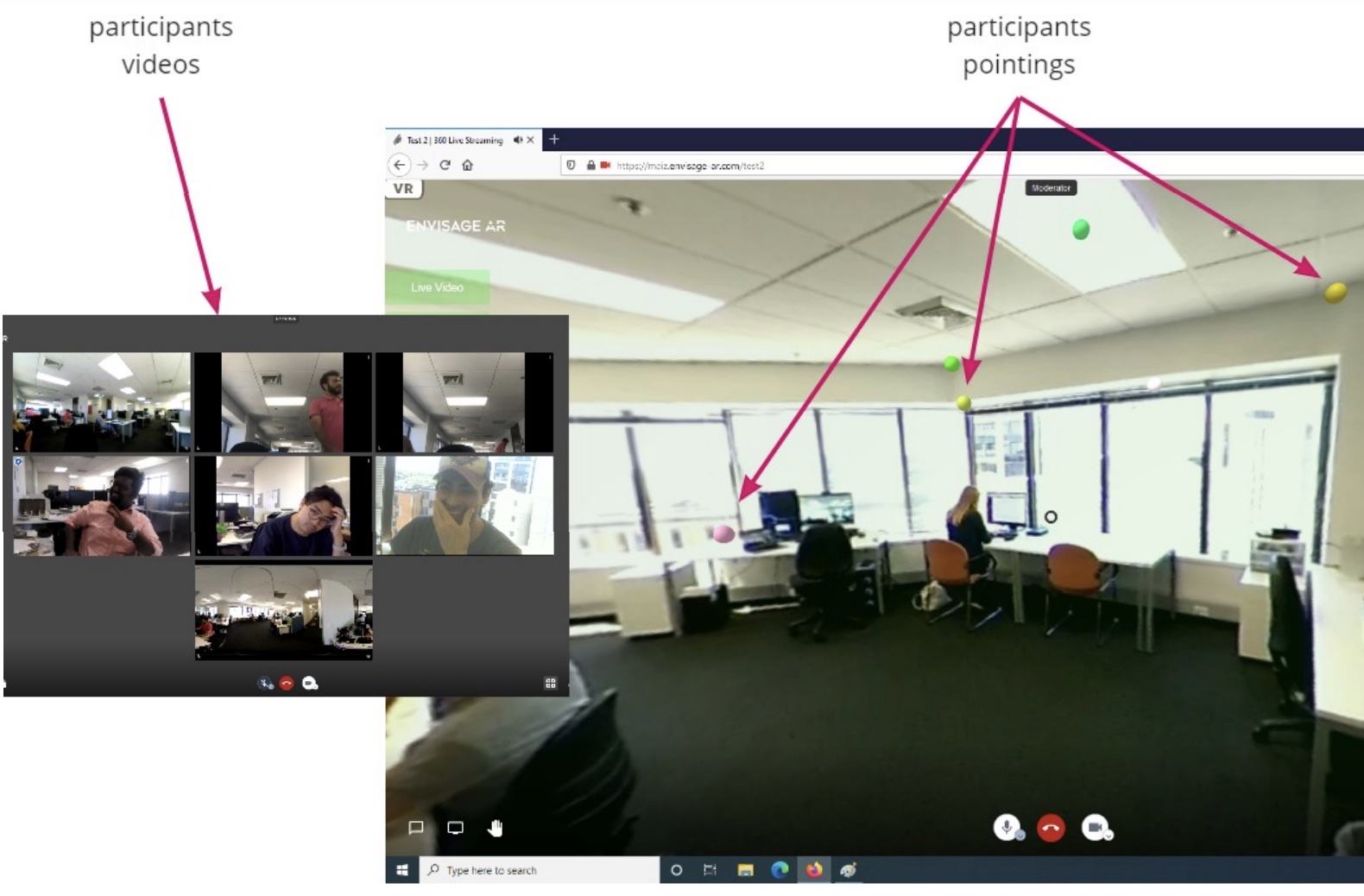

ShowMeAround: Giving Virtual Tours Using Live 360 Video

Alaeddin Nassani, Li Zhang, Huidong Bai, Mark Billinghurst

Nassani, A., Zhang, L., Bai, H., & Billinghurst, M. (2021, May). ShowMeAround: Giving Virtual Tours Using Live 360 Video. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-4).

@inproceedings{nassani2021showmearound,

title={ShowMeAround: Giving Virtual Tours Using Live 360 Video},

author={Nassani, Alaeddin and Zhang, Li and Bai, Huidong and Billinghurst, Mark},

booktitle={Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems},

pages={1--4},

year={2021}

}

This demonstration presents ShowMeAround, a video conferencing system designed to allow people to give virtual tours over live 360-video. Using ShowMeAround a host presenter walks through a real space and can live stream a 360-video view to a small group of remote viewers. The ShowMeAround interface has features such as remote pointing and viewpoint awareness to support natural collaboration between the viewers and host presenter. The system also enables sharing of pre-recorded high resolution 360 video and still images to further enhance the virtual tour experience.

-

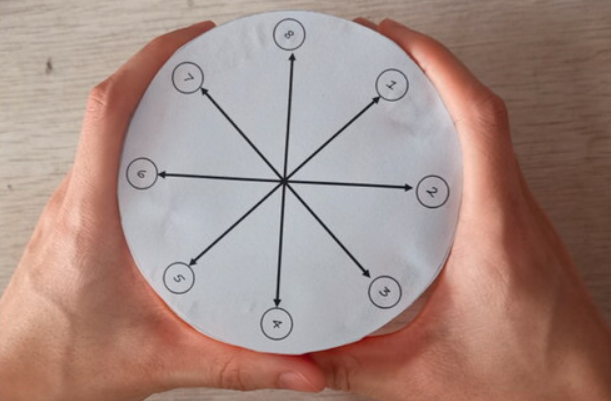

HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality

Zhang, L., He, W., Cao, Z., Wang, S., Bai, H., & Billinghurst, M.

Zhang, L., He, W., Cao, Z., Wang, S., Bai, H., & Billinghurst, M. (2022). HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality. International Journal of Human–Computer Interaction, 1-15.

@article{zhang2022hapticproxy,

title={HapticProxy: Providing Positional Vibrotactile Feedback on a Physical Proxy for Virtual-Real Interaction in Augmented Reality},

author={Zhang, Li and He, Weiping and Cao, Zhiwei and Wang, Shuxia and Bai, Huidong and Billinghurst, Mark},

journal={International Journal of Human--Computer Interaction},

pages={1--15},

year={2022},

publisher={Taylor \& Francis}

}

Consistent visual and haptic feedback is an important way to improve the user experience when interacting with virtual objects. However, the perception provided in Augmented Reality (AR) mainly comes from visual cues and amorphous tactile feedback. This work explores how to simulate positional vibrotactile feedback (PVF) with multiple vibration motors when colliding with virtual objects in AR. By attaching spatially distributed vibration motors on a physical haptic proxy, users can obtain an augmented collision experience with positional vibration sensations from the contact point with virtual objects. We first developed a prototype system and conducted a user study to optimize the design parameters. Then we investigated the effect of PVF on user performance and experience in a virtual and real object alignment task in the AR environment. We found that this approach could significantly reduce the alignment offset between virtual and physical objects with tolerable task completion time increments. With the PVF cue, participants obtained a more comprehensive perception of the offset direction, more useful information, and a more authentic AR experience.