Kunal Gupta

PhD Student

Kunal Gupta is a PhD candidate at the Empathic Computing Laboratory at the University of Auckland, New Zealand, under the supervision of Prof. Mark Billinghurst. His research focuses on emotion recognition and representation in VR/AR using physiological sensing and contextual information.

Prior to this, he worked as a User Experience Researcher at Google and as an Interaction Designer at a startup in India, gaining around five years of industry experience in total. He obtained a master’s degree in Human Interface Technology (MHIT) from the HITLab NZ at the University of Canterbury under the supervision of Prof. Mark Billinghurst in 2015. As part of his master’s degree, he conducted some of the first research into the use of eye-gaze to enhance remote collaboration in wearable AR systems.

For more details about his research work, check out his website: kunalgupta.in

Projects

-

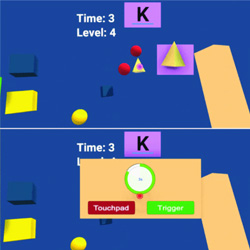

Mind Reader

This project explores how brain activity can be used for computer input. The innovative MindReader game uses EEG (electroencephalogram) based Brain-Computer Interface (BCI) technology to showcase the player’s real-time brain waves. It uses colourful and creative visuals to show the raw brain activity from a number of EEG electrodes worn on the head. The player can also play a version of the Angry Birds game where their concentration level determines how far the birds can be shot. In this cheerful and engaging demo, friends and family can challenge each other to see who has the strongest neural connections!

Publications

-

Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality

Kunal Gupta, Ryo Hajika, Yun Suen Pai, Andreas Duenser, Martin Lochner, Mark Billinghurst

K. Gupta, R. Hajika, Y. S. Pai, A. Duenser, M. Lochner and M. Billinghurst, "Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality," 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 2020, pp. 756-765, doi: 10.1109/VR46266.2020.1581313729558.

@inproceedings{gupta2020measuring,

title={Measuring Human Trust in a Virtual Assistant using Physiological Sensing in Virtual Reality},

author={Gupta, Kunal and Hajika, Ryo and Pai, Yun Suen and Duenser, Andreas and Lochner, Martin and Billinghurst, Mark},

booktitle={2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR)},

pages={756--765},

year={2020},

organization={IEEE}

}

With the advancement of Artificial Intelligence technology to make smart devices, understanding how humans develop trust in virtual agents is emerging as a critical research field. Through our research, we report on a novel methodology to investigate users’ trust in auditory assistance in a Virtual Reality (VR) based search task, under both high and low cognitive load and under varying levels of agent accuracy. We collected physiological sensor data such as electroencephalography (EEG), galvanic skin response (GSR), and heart-rate variability (HRV), and subjective data through questionnaires such as the System Trust Scale (STS), Subjective Mental Effort Questionnaire (SMEQ) and NASA-TLX. We also collected a behavioral measure of trust (congruency of users’ head motion in response to valid/invalid verbal advice from the agent). Our results indicate that our custom VR environment enables researchers to measure and understand human trust in virtual agents using these metrics, and that both cognitive load and agent accuracy play an important role in trust formation. We discuss the implications of the research and directions for future work.

-

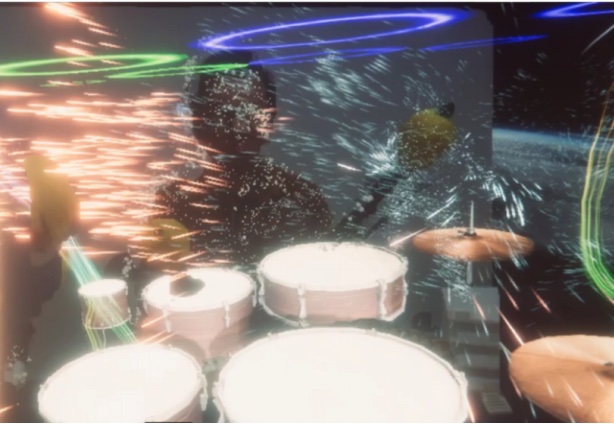

NeuralDrum: Perceiving Brain Synchronicity in XR Drumming

Y. S. Pai, Ryo Hajika, Kunal Gupta, Prasanth Sasikumar, Mark Billinghurst

Pai, Y. S., Hajika, R., Gupta, K., Sasikumar, P., & Billinghurst, M. (2020). NeuralDrum: Perceiving Brain Synchronicity in XR Drumming. In SIGGRAPH Asia 2020 Technical Communications (pp. 1-4).

@incollection{pai2020neuraldrum,

title={NeuralDrum: Perceiving Brain Synchronicity in XR Drumming},

author={Pai, Yun Suen and Hajika, Ryo and Gupta, Kunal and Sasikumar, Prasanth and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2020 Technical Communications},

pages={1--4},

year={2020}

}

Brain synchronicity is a neurological phenomenon where two or more individuals have their brain activation in phase when performing a shared activity. We present NeuralDrum, an extended reality (XR) drumming experience that allows two players to drum together while their brain signals are simultaneously measured. We calculate the Phase Locking Value (PLV) to determine their brain synchronicity and use this to directly affect their visual and auditory experience in the game, creating a closed feedback loop. In a pilot study, we logged and analysed the users’ brain signals and had them answer a subjective questionnaire regarding their perception of synchronicity with their partner and the overall experience. From the results, we discuss design implications to further improve NeuralDrum and propose methods to integrate brain synchronicity into interactive experiences.

-

Jamming in MR: Towards Real-Time Music Collaboration in Mixed Reality

Ruben Schlagowski; Kunal Gupta; Silvan Mertes; Mark Billinghurst; Susanne Metzner; Elisabeth André

Schlagowski, R., Gupta, K., Mertes, S., Billinghurst, M., Metzner, S., & André, E. (2022, March). Jamming in MR: Towards Real-Time Music Collaboration in Mixed Reality. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW) (pp. 854-855). IEEE.

@inproceedings{schlagowski2022jamming,

title={Jamming in MR: towards real-time music collaboration in mixed reality},

author={Schlagowski, Ruben and Gupta, Kunal and Mertes, Silvan and Billinghurst, Mark and Metzner, Susanne and Andr{\'e}, Elisabeth},

booktitle={2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

pages={854--855},

year={2022},

organization={IEEE}

}

Recent pandemic-related contact restrictions have made it difficult for musicians to meet in person to make music. As a result, there has been an increased demand for applications that enable remote and real-time music collaboration. One desirable goal here is to give musicians a sense of social presence, to make them feel that they are “on site” with their musical partners. We conducted a focus group study to investigate the impact of remote jamming on users' affect. Further, we gathered user requirements for a Mixed Reality system that enables real-time jamming and developed a prototype based on these findings.

-

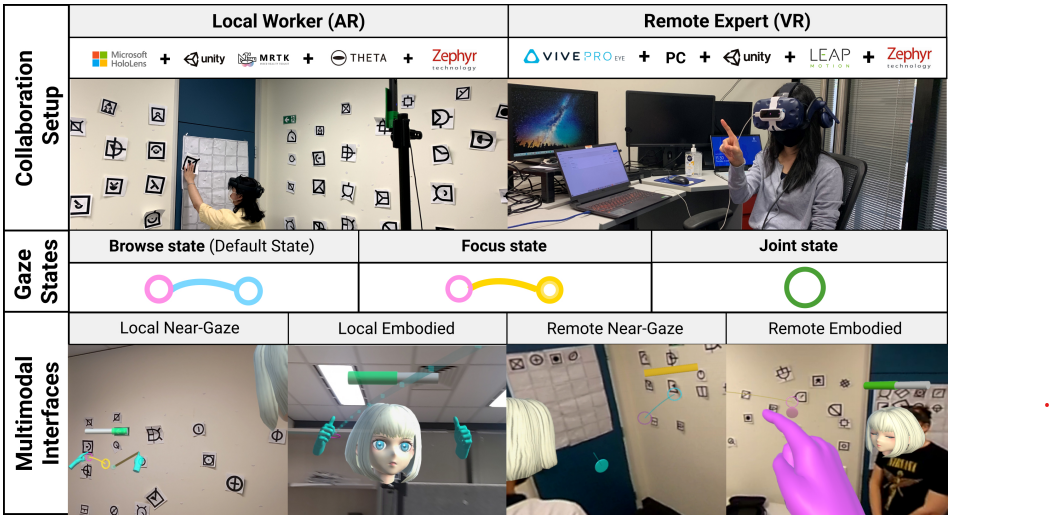

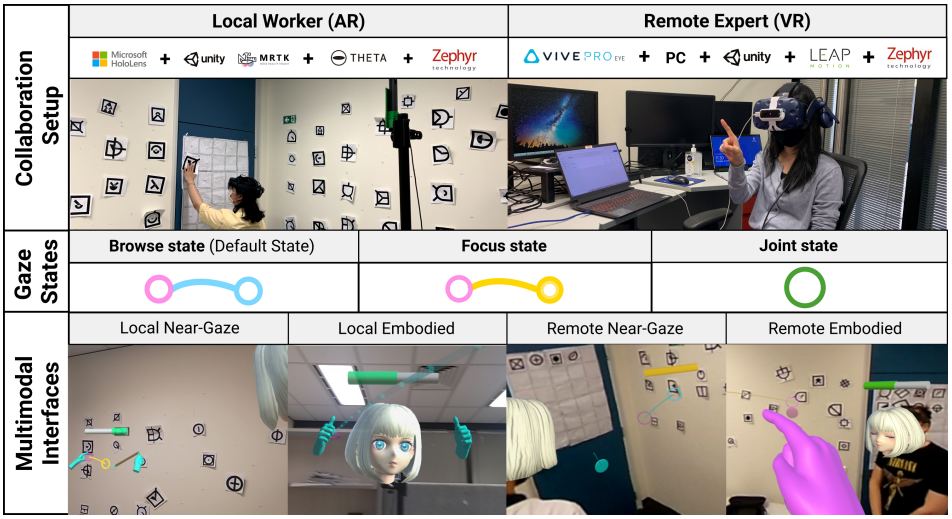

Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration

Allison Jing; Kunal Gupta; Jeremy McDade; Gun A. Lee; Mark Billinghurst

A. Jing, K. Gupta, J. McDade, G. A. Lee and M. Billinghurst, "Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration," 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, Singapore, 2022, pp. 837-846, doi: 10.1109/ISMAR55827.2022.00102.

@INPROCEEDINGS{9995367,

author={Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun A. and Billinghurst, Mark},

booktitle={2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

title={Comparing Gaze-Supported Modalities with Empathic Mixed Reality Interfaces in Remote Collaboration},

year={2022},

volume={},

number={},

pages={837-846},

doi={10.1109/ISMAR55827.2022.00102}}

In this paper, we share real-time collaborative gaze behaviours, hand pointing, gesturing, and heart rate visualisations between remote collaborators using a live 360° panoramic-video based Mixed Reality (MR) system. We first ran a pilot study to explore visual designs to combine communication cues with biofeedback (heart rate), aiming to understand user perceptions of empathic collaboration. We then conducted a formal study to investigate the effect of modality (Gaze+Hand, Hand-only) and interface (Near-Gaze, Embodied). The results show that the Gaze+Hand modality in a Near-Gaze interface is significantly better at reducing task load, improving co-presence, enhancing understanding and tightening collaborative behaviours compared to the conventional Embodied hand-only experience. Ranked as the most preferred condition, the Gaze+Hand in Near-Gaze condition is perceived to reduce the need for dividing attention to the collaborator’s physical location, although it feels slightly less natural compared to the embodied visualisations. In addition, the Gaze+Hand conditions also led to more joint attention and less hand pointing to align mutual understanding. Lastly, we provide a design guideline to summarize what we have learned from the studies on the representation between modality, interface, and biofeedback.

-

Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration

Allison Jing; Kunal Gupta; Jeremy McDade; Gun A. Lee; Mark Billinghurst

Allison Jing, Kunal Gupta, Jeremy McDade, Gun Lee, and Mark Billinghurst. 2022. Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration. In ACM SIGGRAPH 2022 Posters (SIGGRAPH '22). Association for Computing Machinery, New York, NY, USA, Article 29, 1–2. https://doi.org/10.1145/3532719.3543213

@inproceedings{10.1145/3532719.3543213,

author = {Jing, Allison and Gupta, Kunal and McDade, Jeremy and Lee, Gun and Billinghurst, Mark},

title = {Near-Gaze Visualisations of Empathic Communication Cues in Mixed Reality Collaboration},

year = {2022},

isbn = {9781450393614},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3532719.3543213},

doi = {10.1145/3532719.3543213},

abstract = {In this poster, we present a live 360° panoramic-video based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues including gaze, hand pointing, gesturing, and heart rate visualisations in real-time. The preliminary results indicate that the interface with the partner’s communication cues visualised close to the gaze point allows users to focus without dividing attention to the collaborator’s physical body movements yet still effectively communicate. Shared gaze visualisations coupled with deictic languages are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing are used as secondary. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities which covers different task properties and spatial setups.},

booktitle = {ACM SIGGRAPH 2022 Posters},

articleno = {29},

numpages = {2},

location = {Vancouver, BC, Canada},

series = {SIGGRAPH '22}

}

In this poster, we present a live 360° panoramic-video based empathic Mixed Reality (MR) collaboration system that shares various Near-Gaze non-verbal communication cues including gaze, hand pointing, gesturing, and heart rate visualisations in real-time. The preliminary results indicate that the interface with the partner’s communication cues visualised close to the gaze point allows users to focus without dividing attention to the collaborator’s physical body movements yet still effectively communicate. Shared gaze visualisations coupled with deictic languages are primarily used to affirm joint attention and mutual understanding, while hand pointing and gesturing are used as secondary. Our approach provides a new way to help enable effective remote collaboration through varied empathic communication visualisations and modalities which covers different task properties and spatial setups.