Thammathip Piumsomboon

Research Fellow

Dr. Thammathip Piumsomboon is a Research Fellow at the Empathic Computing Laboratory (ECL) exploring research in Empathic Mixed Reality for remote collaboration. Prior to his return to academia, he was a Unity Director at an Augmented Reality (AR) start-up, QuiverVision, developing mobile AR applications. He received a PhD in Computer Science from the University of Canterbury in 2015, supervised by Prof. Mark Billinghurst, Prof. Andy Cockburn, and Dr. Adrian Clark. During his PhD, he was a research assistant at the Human Interface Technology Laboratory New Zealand (HIT Lab NZ). His research focused on developing novel AR interfaces and exploring natural interaction for AR using advanced interface technology.

For more information, please visit his personal website.

Projects

-

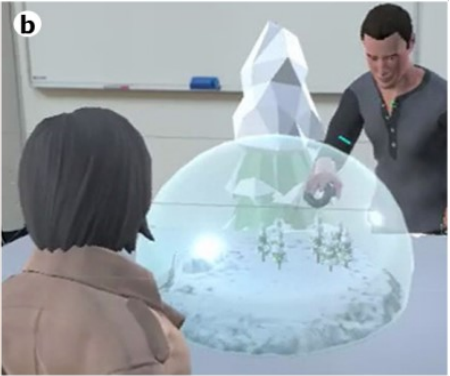

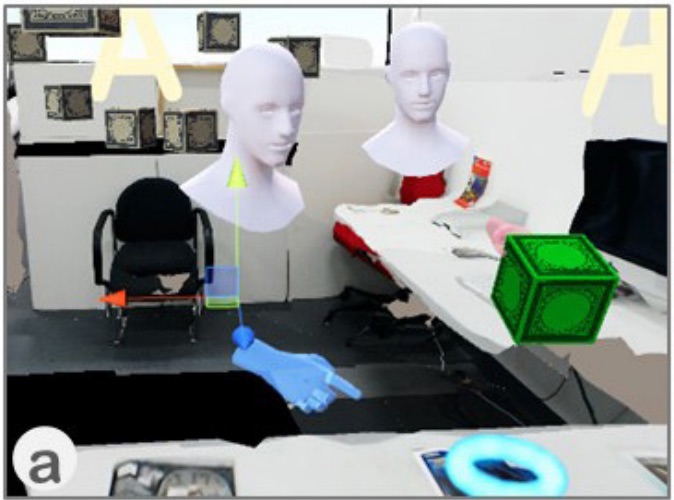

Mini-Me

Mini-Me is an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user’s gaze direction and body gestures while it transforms in size and orientation to stay within the AR user’s field of view. We tested Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

-

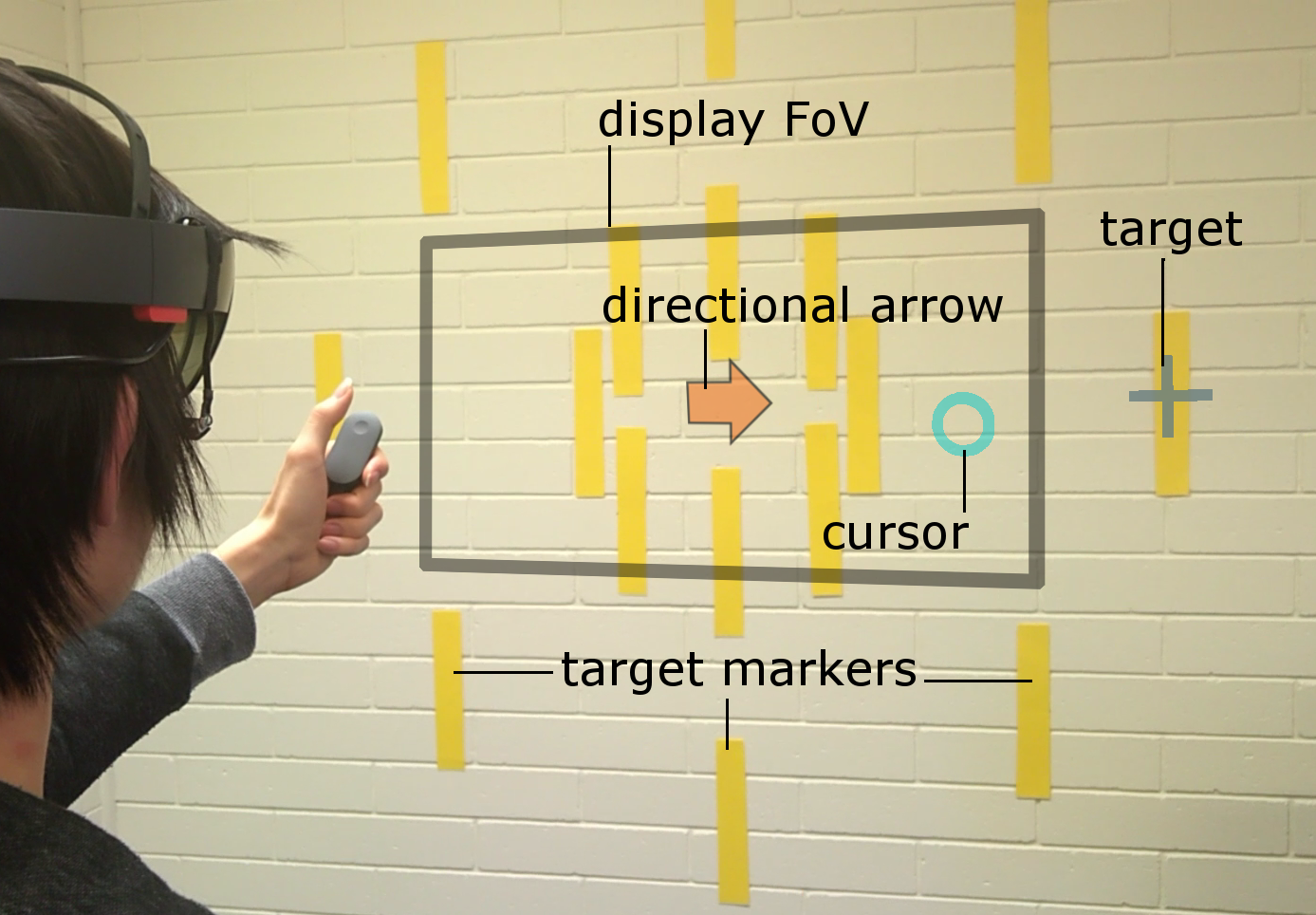

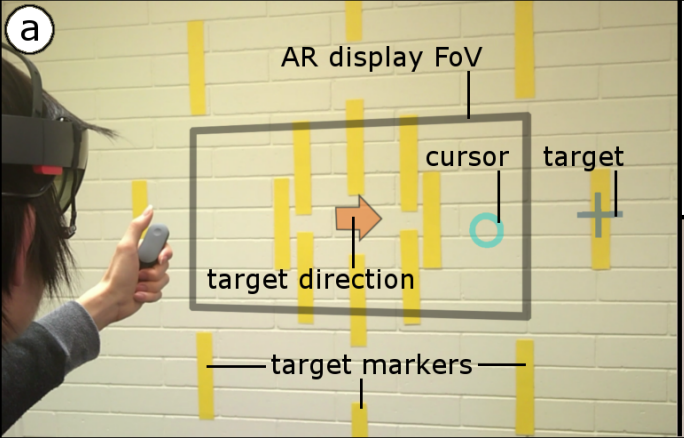

Pinpointing

Head and eye movement can be leveraged to improve the user’s interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

-

Empathy Glasses

We have been developing a remote collaboration system with Empathy Glasses, a head-worn display designed to create a stronger feeling of empathy between remote collaborators. To do this, we combined a head-mounted see-through display with a facial expression recognition system, a heart rate sensor, and an eye tracker. The goal is to enable a remote person to see and hear from another person's perspective and to understand how they are feeling. In this way, the system shares non-verbal cues that could help increase empathy between remote collaborators.

-

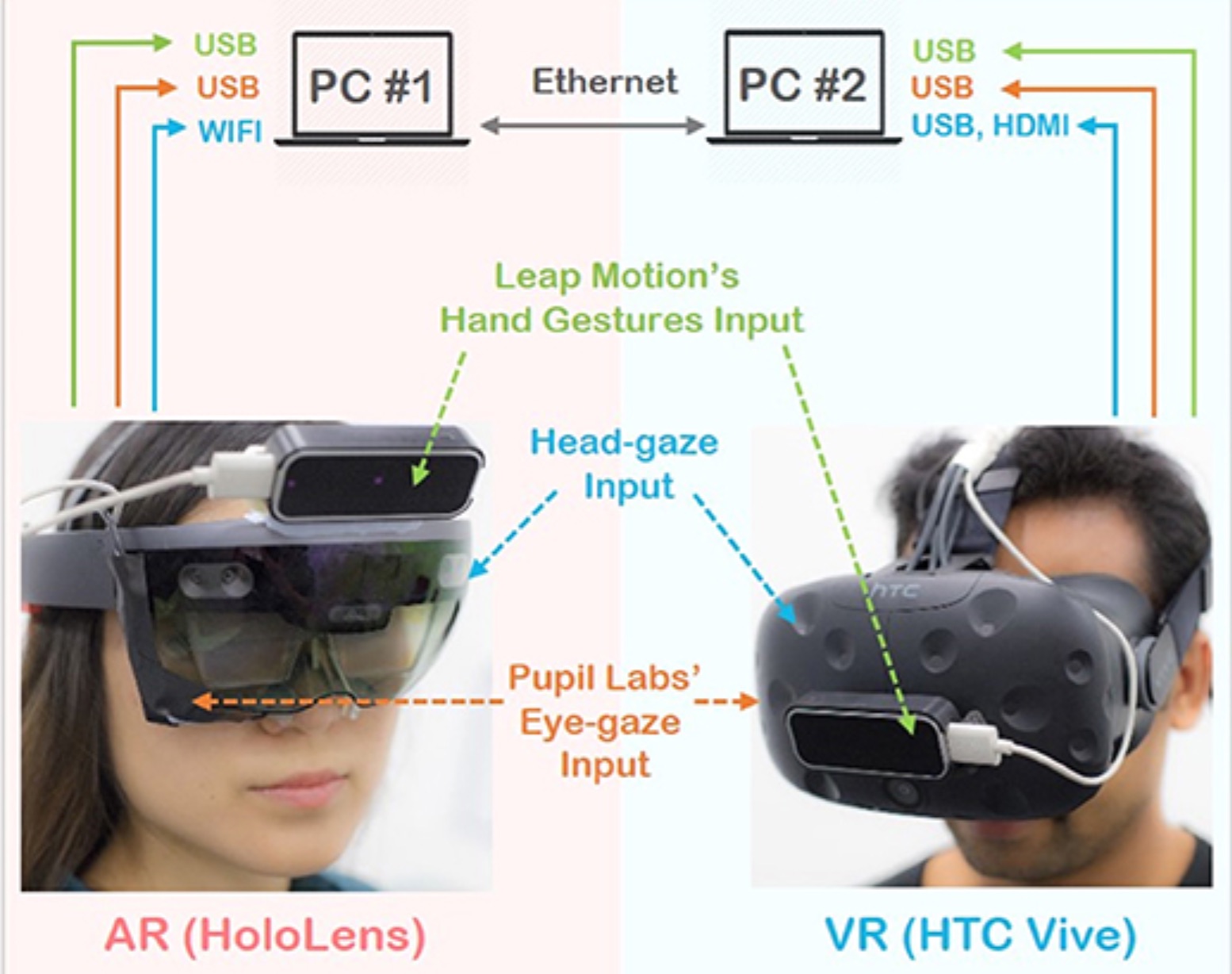

Sharing Gesture and Gaze Cues for Enhancing MR Collaboration

This research focuses on visualizing shared gaze cues, designing interfaces for collaborative experience, and incorporating multimodal interaction techniques and physiological cues to support empathic Mixed Reality (MR) remote collaboration using HoloLens 2, Vive Pro Eye, Meta Pro, HP Omnicept, Theta V 360 camera, Windows Speech Recognition, Leap Motion hand tracking, and Zephyr/Shimmer Sensing technologies.

-

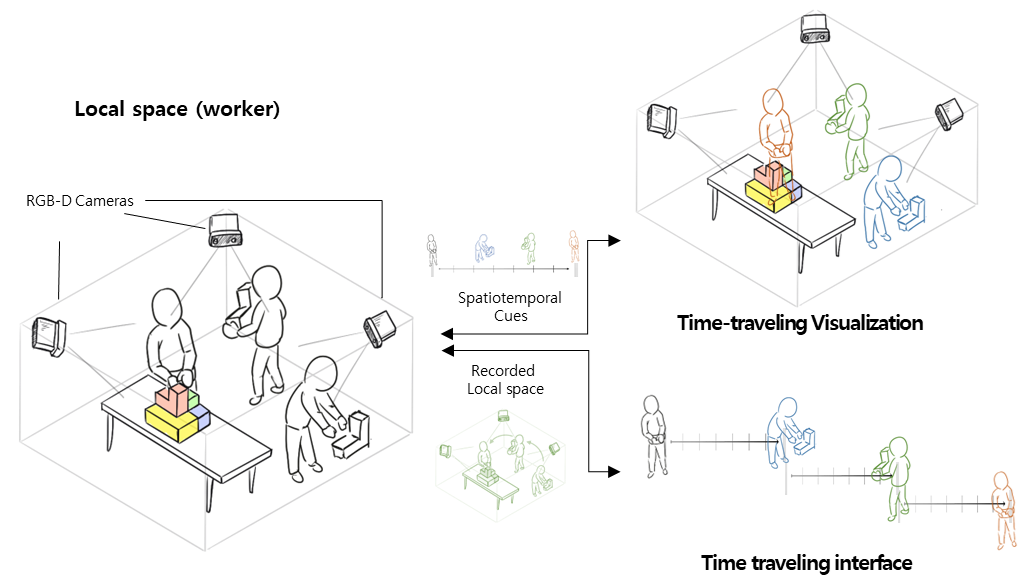

AR-based spatiotemporal interface and visualization for the physical task

The proposed study aims to assist in solving physical tasks such as mechanical assembly or collaborative design efficiently by using augmented reality-based space-time visualization techniques. In particular, when disassembling/reassembling is required, 3D recording of past actions and playback visualization are used to help memorize the exact assembly order and position of objects in the task. This study proposes a novel method that employs 3D-based spatial information recording and augmented reality-based playback to effectively support these types of physical tasks.

Publications

-

Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A Lee, Jonathon D Hart, Barrett Ens, Robert W Lindeman, Bruce H Thomas, Mark Billinghurst

Thammathip Piumsomboon, Gun A. Lee, Jonathon D. Hart, Barrett Ens, Robert W. Lindeman, Bruce H. Thomas, and Mark Billinghurst. 2018. Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 46, 13 pages. DOI: https://doi.org/10.1145/3173574.3173620

@inproceedings{Piumsomboon:2018:MAA:3173574.3173620,

author = {Piumsomboon, Thammathip and Lee, Gun A. and Hart, Jonathon D. and Ens, Barrett and Lindeman, Robert W. and Thomas, Bruce H. and Billinghurst, Mark},

title = {Mini-Me: An Adaptive Avatar for Mixed Reality Remote Collaboration},

booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI '18},

year = {2018},

isbn = {978-1-4503-5620-6},

location = {Montreal QC, Canada},

pages = {46:1--46:13},

articleno = {46},

numpages = {13},

url = {http://doi.acm.org/10.1145/3173574.3173620},

doi = {10.1145/3173574.3173620},

acmid = {3173620},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, avatar, awareness, gaze, gesture, mixed reality, redirected, remote collaboration, remote embodiment, virtual reality},

}

We present Mini-Me, an adaptive avatar for enhancing Mixed Reality (MR) remote collaboration between a local Augmented Reality (AR) user and a remote Virtual Reality (VR) user. The Mini-Me avatar represents the VR user's gaze direction and body gestures while it transforms in size and orientation to stay within the AR user's field of view. A user study was conducted to evaluate Mini-Me in two collaborative scenarios: an asymmetric remote expert in VR assisting a local worker in AR, and a symmetric collaboration in urban planning. We found that the presence of the Mini-Me significantly improved Social Presence and the overall experience of MR collaboration.

-

Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality

Mikko Kytö, Barrett Ens, Thammathip Piumsomboon, Gun A Lee, Mark Billinghurst

Mikko Kytö, Barrett Ens, Thammathip Piumsomboon, Gun A. Lee, and Mark Billinghurst. 2018. Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). ACM, New York, NY, USA, Paper 81, 14 pages. DOI: https://doi.org/10.1145/3173574.3173655

@inproceedings{Kyto:2018:PPH:3173574.3173655,

author = {Kyt\"{o}, Mikko and Ens, Barrett and Piumsomboon, Thammathip and Lee, Gun A. and Billinghurst, Mark},

title = {Pinpointing: Precise Head- and Eye-Based Target Selection for Augmented Reality},

booktitle = {Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI '18},

year = {2018},

isbn = {978-1-4503-5620-6},

location = {Montreal QC, Canada},

pages = {81:1--81:14},

articleno = {81},

numpages = {14},

url = {http://doi.acm.org/10.1145/3173574.3173655},

doi = {10.1145/3173574.3173655},

acmid = {3173655},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, eye tracking, gaze interaction, head-worn display, refinement techniques, target selection},

}

View: https://dl.acm.org/ft_gateway.cfm?id=3173655&ftid=1958752&dwn=1&CFID=51906271&CFTOKEN=b63dc7f7afbcc656-4D4F3907-C934-F85B-2D539C0F52E3652A

Video: https://youtu.be/nCX8zIEmv0s

Head and eye movement can be leveraged to improve the user's interaction repertoire for wearable displays. Head movements are deliberate and accurate, and provide the current state-of-the-art pointing technique. Eye gaze can potentially be faster and more ergonomic, but suffers from low accuracy due to calibration errors and drift of wearable eye-tracking sensors. This work investigates precise, multimodal selection techniques using head motion and eye gaze. A comparison of speed and pointing accuracy reveals the relative merits of each method, including the achievable target size for robust selection. We demonstrate and discuss example applications for augmented reality, including compact menus with deep structure, and a proof-of-concept method for on-line correction of calibration drift.

-

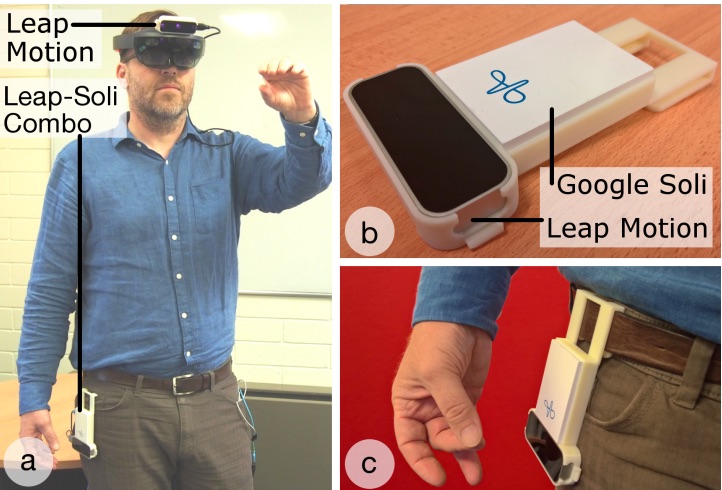

Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications

Barrett Ens, Aaron Quigley, Hui-Shyong Yeo, Pourang Irani, Thammathip Piumsomboon, Mark Billinghurst

Barrett Ens, Aaron Quigley, Hui-Shyong Yeo, Pourang Irani, Thammathip Piumsomboon, and Mark Billinghurst. 2018. Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper LBW120, 6 pages. DOI: https://doi.org/10.1145/3170427.3188513

@inproceedings{Ens:2018:CEM:3170427.3188513,

author = {Ens, Barrett and Quigley, Aaron and Yeo, Hui-Shyong and Irani, Pourang and Piumsomboon, Thammathip and Billinghurst, Mark},

title = {Counterpoint: Exploring Mixed-Scale Gesture Interaction for AR Applications},

booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI EA '18},

year = {2018},

isbn = {978-1-4503-5621-3},

location = {Montreal QC, Canada},

pages = {LBW120:1--LBW120:6},

articleno = {LBW120},

numpages = {6},

url = {http://doi.acm.org/10.1145/3170427.3188513},

doi = {10.1145/3170427.3188513},

acmid = {3188513},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, gesture interaction, wearable computing},

}

View: http://delivery.acm.org/10.1145/3190000/3188513/LBW120.pdf?ip=130.220.8.189&id=3188513&acc=ACTIVE%20SERVICE&key=65D80644F295BC0D%2E66BF2BADDFDC7DE0%2EF0418AF7A4636953%2E4D4702B0C3E38B35&__acm__=1531307952_cec04550a31b41dfba9a6865be86b8ac

Video: https://youtu.be/GZzC0Vhte4M

This paper presents ongoing work on a design exploration for mixed-scale gestures, which interleave microgestures with larger gestures for computer interaction. We describe three prototype applications that show various facets of this multi-dimensional design space. These applications portray various tasks on a HoloLens Augmented Reality display, using different combinations of wearable sensors. Future work toward expanding the design space and exploration is discussed, along with plans toward evaluation of mixed-scale gesture design.

-

Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration

Thammathip Piumsomboon, Gun A Lee, Mark Billinghurst

Thammathip Piumsomboon, Gun A. Lee, and Mark Billinghurst. 2018. Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems (CHI EA '18). ACM, New York, NY, USA, Paper D115, 4 pages. DOI: https://doi.org/10.1145/3170427.3186495

@inproceedings{Piumsomboon:2018:SDM:3170427.3186495,

author = {Piumsomboon, Thammathip and Lee, Gun A. and Billinghurst, Mark},

title = {Snow Dome: A Multi-Scale Interaction in Mixed Reality Remote Collaboration},

booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},

series = {CHI EA '18},

year = {2018},

isbn = {978-1-4503-5621-3},

location = {Montreal QC, Canada},

pages = {D115:1--D115:4},

articleno = {D115},

numpages = {4},

url = {http://doi.acm.org/10.1145/3170427.3186495},

doi = {10.1145/3170427.3186495},

acmid = {3186495},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {augmented reality, avatar, mixed reality, multiple, remote collaboration, remote embodiment, scale, virtual reality},

}

View: http://delivery.acm.org/10.1145/3190000/3186495/D115.pdf?ip=130.220.8.189&id=3186495&acc=ACTIVE%20SERVICE&key=65D80644F295BC0D%2E66BF2BADDFDC7DE0%2EF0418AF7A4636953%2E4D4702B0C3E38B35&__acm__=1531308619_3eface84d74bae70fd47b11af3589b10

Video: https://youtu.be/nm8A9wzobIE

We present Snow Dome, a Mixed Reality (MR) remote collaboration application that supports a multi-scale interaction for a Virtual Reality (VR) user. We share a local Augmented Reality (AR) user's reconstructed space with a remote VR user who has the ability to scale themselves up into a giant or down into a miniature for different perspectives and interaction at that scale within the shared space.

-

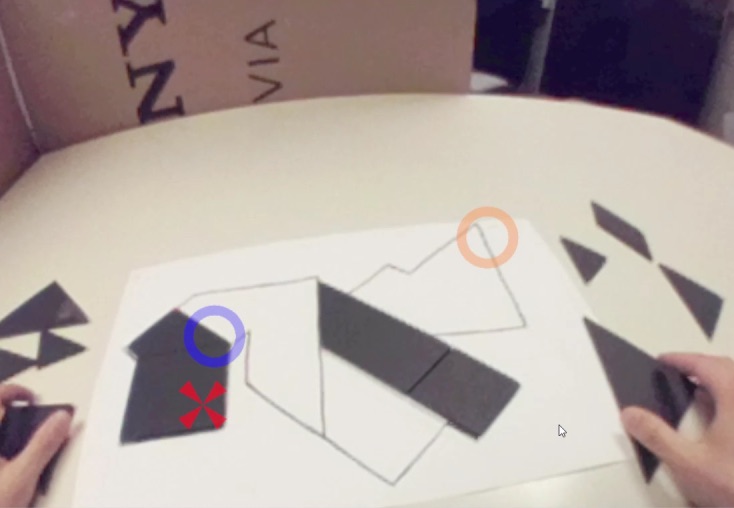

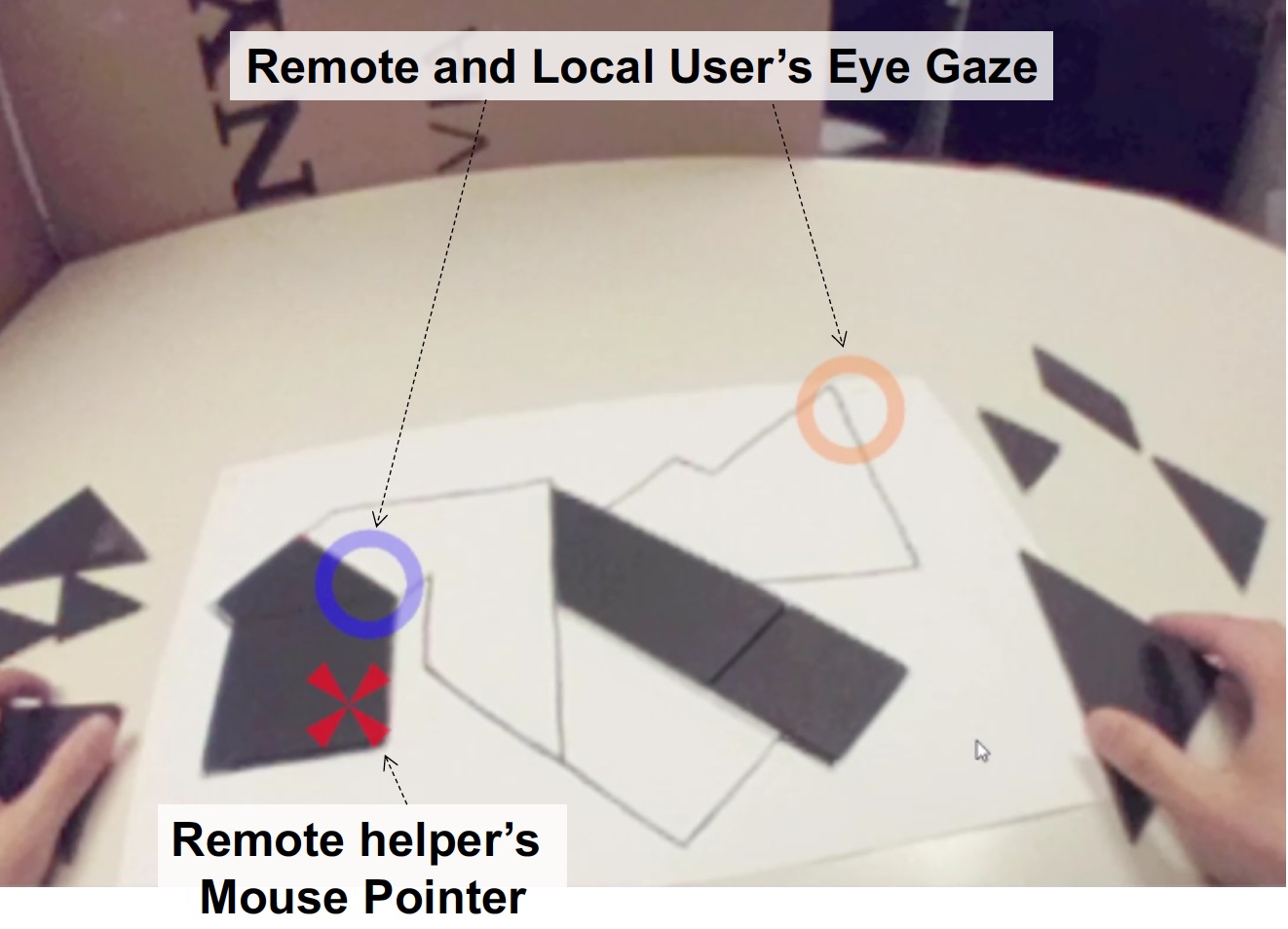

Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst

Gun Lee, Seungwon Kim, Youngho Lee, Arindam Dey, Thammathip Piumsomboon, Mitchell Norman and Mark Billinghurst. 2017. Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze. In Proceedings of ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments, pp. 197-204. http://dx.doi.org/10.2312/egve.20171359

@inproceedings {egve.20171359,

booktitle = {ICAT-EGVE 2017 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

editor = {Robert W. Lindeman and Gerd Bruder and Daisuke Iwai},

title = {{Improving Collaboration in Augmented Video Conference using Mutually Shared Gaze}},

author = {Lee, Gun A. and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

year = {2017},

publisher = {The Eurographics Association},

ISSN = {1727-530X},

ISBN = {978-3-03868-038-3},

DOI = {10.2312/egve.20171359}

}

To improve remote collaboration in video conferencing systems, researchers have been investigating augmenting visual cues onto a shared live video stream. In such systems, a person wearing a head-mounted display (HMD) and camera can share her view of the surrounding real world with a remote collaborator to receive assistance on a real-world task. While this concept of augmented video conferencing (AVC) has been actively investigated, there has been little research on how sharing gaze cues might affect the collaboration in video conferencing. This paper investigates how sharing gaze in both directions between a local worker and remote helper in an AVC system affects the collaboration and communication. Using a prototype AVC system that shares the eye gaze of both users, we conducted a user study that compares four conditions with different combinations of eye gaze sharing between the two users. The results showed that sharing each other’s gaze significantly improved collaboration and communication.

-

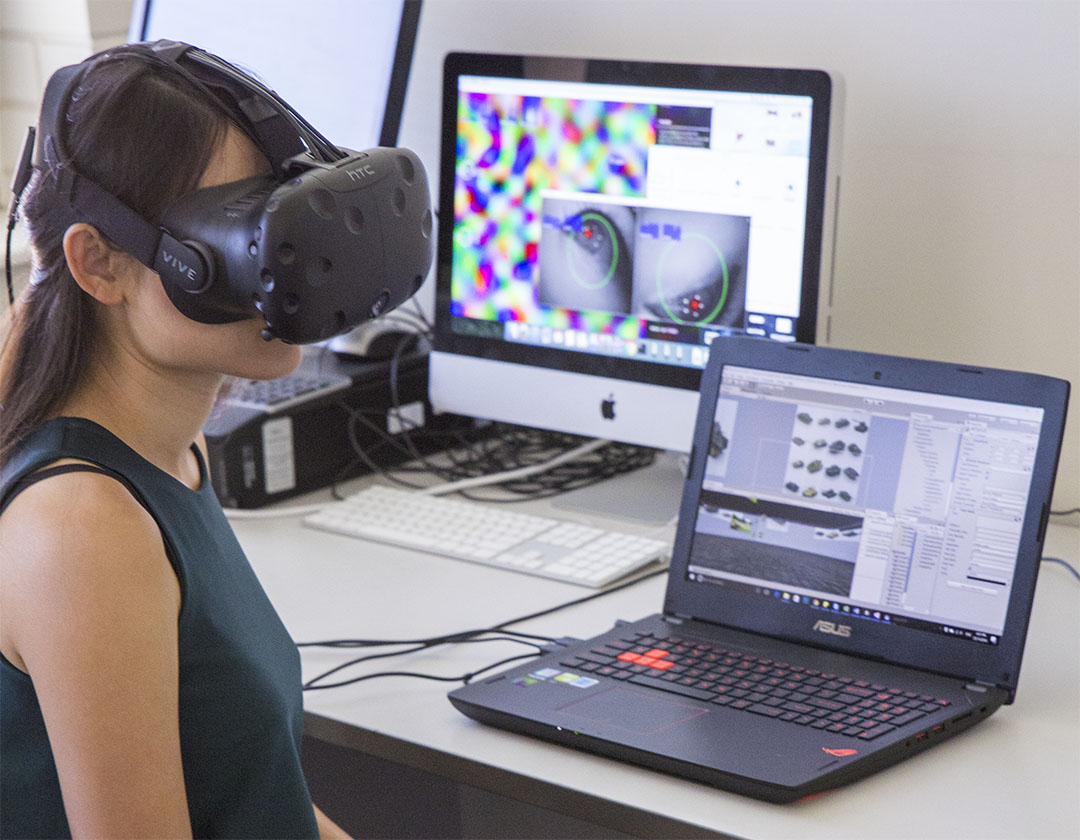

Exploring Natural Eye-Gaze-Based Interaction for Immersive Virtual Reality

Thammathip Piumsomboon, Gun Lee, Robert W. Lindeman and Mark Billinghurst

Thammathip Piumsomboon, Gun Lee, Robert W. Lindeman and Mark Billinghurst. 2017. Exploring Natural Eye-Gaze-Based Interaction for Immersive Virtual Reality. In 2017 IEEE Symposium on 3D User Interfaces (3DUI), pp. 36-39. https://doi.org/10.1109/3DUI.2017.7893315

@INPROCEEDINGS{7893315,

author={T. Piumsomboon and G. Lee and R. W. Lindeman and M. Billinghurst},

booktitle={2017 IEEE Symposium on 3D User Interfaces (3DUI)},

title={Exploring natural eye-gaze-based interaction for immersive virtual reality},

year={2017},

volume={},

number={},

pages={36-39},

keywords={gaze tracking;gesture recognition;helmet mounted displays;virtual reality;Duo-Reticles;Nod and Roll;Radial Pursuit;cluttered-object selection;eye tracking technology;eye-gaze selection;head-gesture-based interaction;head-mounted display;immersive virtual reality;inertial reticles;natural eye movements;natural eye-gaze-based interaction;smooth pursuit;vestibulo-ocular reflex;Electronic mail;Erbium;Gaze tracking;Painting;Portable computers;Resists;Two dimensional displays;H.5.2 [Information Interfaces and Presentation]: User Interfaces—Interaction styles},

doi={10.1109/3DUI.2017.7893315},

ISSN={},

month={March},}

Eye tracking technology in a head-mounted display has undergone rapid advancement in recent years, making it possible for researchers to explore new interaction techniques using natural eye movements. This paper explores three novel eye-gaze-based interaction techniques: (1) Duo-Reticles, eye-gaze selection based on eye-gaze and inertial reticles, (2) Radial Pursuit, cluttered-object selection that takes advantage of smooth pursuit, and (3) Nod and Roll, head-gesture-based interaction based on the vestibulo-ocular reflex. In an initial user study, we compare each technique against a baseline condition in a scenario that demonstrates its strengths and weaknesses.

-

The effects of sharing awareness cues in collaborative mixed reality

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M.

Piumsomboon, T., Dey, A., Ens, B., Lee, G., & Billinghurst, M. (2019). The effects of sharing awareness cues in collaborative mixed reality. Frontiers in Robotics and AI, 6(5).

@article{piumsomboon2019effects,

title={The effects of sharing awareness cues in collaborative mixed reality},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Gun and Billinghurst, Mark},

year={2019}

}

Augmented and Virtual Reality provide unique capabilities for Mixed Reality collaboration. This paper explores how different combinations of virtual awareness cues can provide users with valuable information about their collaborator's attention and actions. In a user study (n = 32, 16 pairs), we compared different combinations of three cues: Field-of-View (FoV) frustum, Eye-gaze ray, and Head-gaze ray against a baseline condition showing only virtual representations of each collaborator's head and hands. Through a collaborative object finding and placing task, the results showed that awareness cues significantly improved user performance, usability, and subjective preferences, with the combination of the FoV frustum and the Head-gaze ray being best. This work establishes the feasibility of room-scale MR collaboration and the utility of providing virtual awareness cues.

-

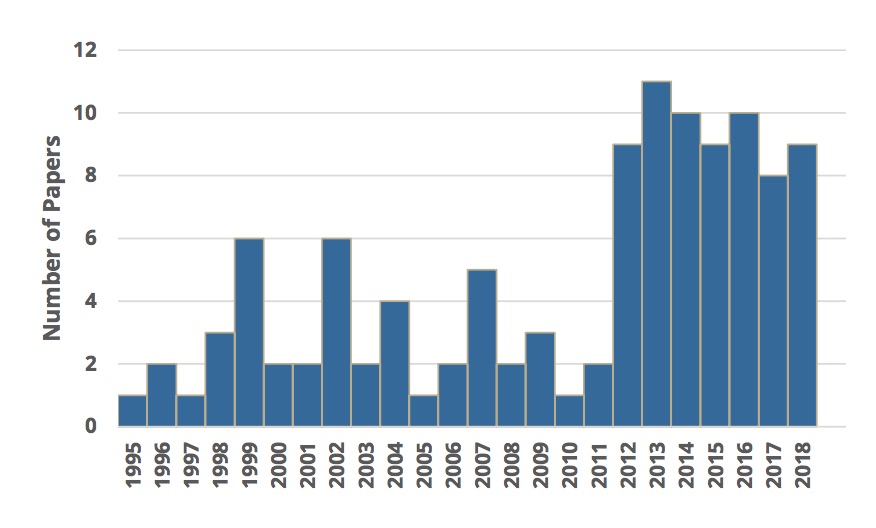

Revisiting collaboration through mixed reality: The evolution of groupware

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., & Billinghurst, M.

Ens, B., Lanir, J., Tang, A., Bateman, S., Lee, G., Piumsomboon, T., & Billinghurst, M. (2019). Revisiting collaboration through mixed reality: The evolution of groupware. International Journal of Human-Computer Studies.

@article{ens2019revisiting,

title={Revisiting collaboration through mixed reality: The evolution of groupware},

author={Ens, Barrett and Lanir, Joel and Tang, Anthony and Bateman, Scott and Lee, Gun and Piumsomboon, Thammathip and Billinghurst, Mark},

journal={International Journal of Human-Computer Studies},

year={2019},

publisher={Elsevier}

}

Collaborative Mixed Reality (MR) systems are at a critical point in time as they are soon to become more commonplace. However, MR technology has only recently matured to the point where researchers can focus deeply on the nuances of supporting collaboration, rather than needing to focus on creating the enabling technology. In parallel, but largely independently, the field of Computer Supported Cooperative Work (CSCW) has focused on the fundamental concerns that underlie human communication and collaboration over the past 30-plus years. Since MR research is now on the brink of moving into the real world, we reflect on three decades of collaborative MR research and try to reconcile it with existing theory from CSCW, to help position MR researchers to pursue fruitful directions for their work. To do this, we review the history of collaborative MR systems, investigating how the common taxonomies and frameworks in CSCW and MR research can be applied to existing work on collaborative MR systems, exploring where they have fallen behind, and looking for new ways to describe current trends. Through identifying emergent trends, we suggest future directions for MR, and also find where CSCW researchers can explore new theory that more fully represents the future of working, playing and being with others.

-

On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction

Piumsomboon, T., Lee, G. A., Irlitti, A., Ens, B., Thomas, B. H., & Billinghurst, M.

Piumsomboon, T., Lee, G. A., Irlitti, A., Ens, B., Thomas, B. H., & Billinghurst, M. (2019, April). On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (p. 228). ACM.

@inproceedings{piumsomboon2019shoulder,

title={On the Shoulder of the Giant: A Multi-Scale Mixed Reality Collaboration with 360 Video Sharing and Tangible Interaction},

author={Piumsomboon, Thammathip and Lee, Gun A and Irlitti, Andrew and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

booktitle={Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems},

pages={228},

year={2019},

organization={ACM}

}

We propose a multi-scale Mixed Reality (MR) collaboration between the Giant, a local Augmented Reality user, and the Miniature, a remote Virtual Reality user, in Giant-Miniature Collaboration (GMC). The Miniature is immersed in a 360-video shared by the Giant, who can physically manipulate the Miniature through a tangible interface, a combined 360-camera with a 6 DOF tracker. We implemented a prototype system as a proof of concept and conducted a user study (n=24) comprising four parts comparing: A) two types of virtual representations, B) three levels of Miniature control, C) three levels of 360-video view dependencies, and D) four 360-camera placement positions on the Giant. The results show users prefer a shoulder-mounted camera view, while a view frustum with a complementary avatar is a good visualization for the Miniature virtual representation. From the results, we give design recommendations and demonstrate an example Giant-Miniature Interaction.

-

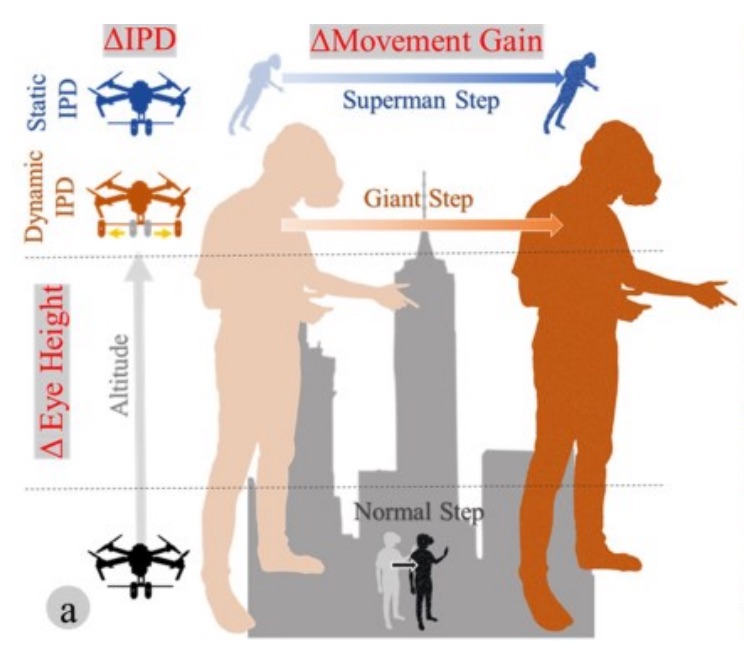

Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface

Piumsomboon, T., Lee, G. A., Ens, B., Thomas, B. H., & Billinghurst, M.

Piumsomboon, T., Lee, G. A., Ens, B., Thomas, B. H., & Billinghurst, M. (2018). Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface. IEEE transactions on visualization and computer graphics, 24(11), 2974-2982.

@article{piumsomboon2018superman,

title={Superman vs giant: a study on spatial perception for a multi-scale mixed reality flying telepresence interface},

author={Piumsomboon, Thammathip and Lee, Gun A and Ens, Barrett and Thomas, Bruce H and Billinghurst, Mark},

journal={IEEE transactions on visualization and computer graphics},

volume={24},

number={11},

pages={2974--2982},

year={2018},

publisher={IEEE}

}

The advancements in Mixed Reality (MR), Unmanned Aerial Vehicle, and multi-scale collaborative virtual environments have led to new interface opportunities for remote collaboration. This paper explores a novel concept of flying telepresence for multi-scale mixed reality remote collaboration. This work could enable remote collaboration at a larger scale such as building construction. We conducted a user study with three experiments. The first experiment compared two interfaces, static and dynamic IPD, on simulator sickness and body size perception. The second experiment tested the user perception of a virtual object size under three levels of IPD and movement gain manipulation with a fixed eye height in a virtual environment having reduced or rich visual cues. Our last experiment investigated the participant’s body size perception for two levels of manipulation of the IPDs and heights using stereo video footage to simulate a flying telepresence experience. The studies found that manipulating IPDs and eye height influenced the user’s size perception. We present our findings and share the recommendations for designing a multi-scale MR flying telepresence interface.

-

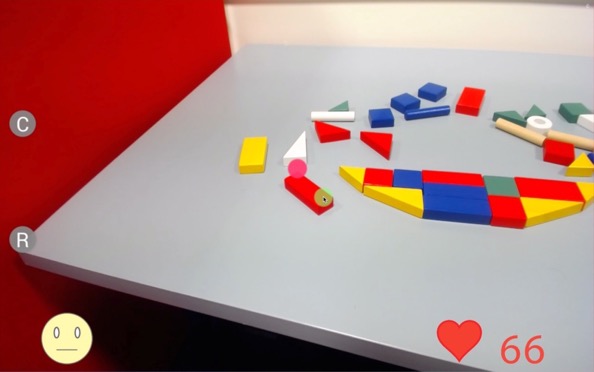

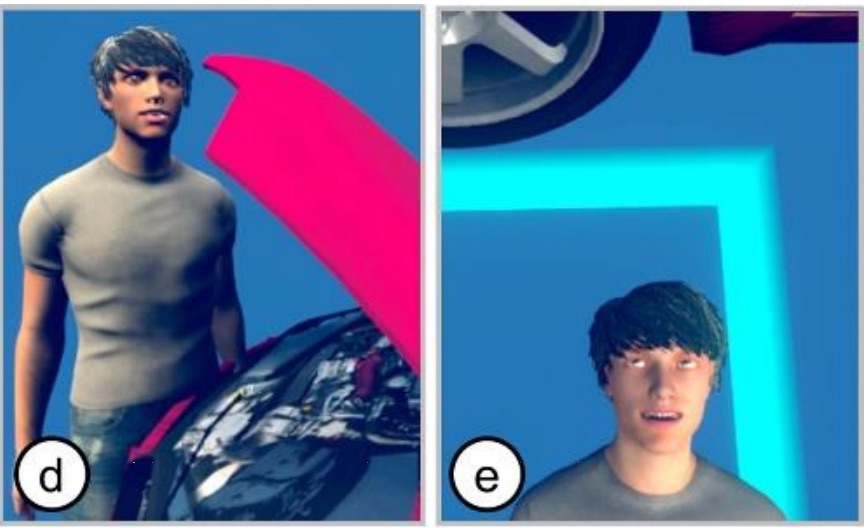

Emotion Sharing and Augmentation in Cooperative Virtual Reality Games

Hart, J. D., Piumsomboon, T., Lawrence, L., Lee, G. A., Smith, R. T., & Billinghurst, M.

Hart, J. D., Piumsomboon, T., Lawrence, L., Lee, G. A., Smith, R. T., & Billinghurst, M. (2018, October). Emotion Sharing and Augmentation in Cooperative Virtual Reality Games. In Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts (pp. 453-460). ACM.

@inproceedings{hart2018emotion,

title={Emotion Sharing and Augmentation in Cooperative Virtual Reality Games},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lawrence, Louise and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={Proceedings of the 2018 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts},

pages={453--460},

year={2018},

organization={ACM}

}

We present preliminary findings from sharing and augmenting facial expression in cooperative social Virtual Reality (VR) games. We implemented a prototype system for capturing and sharing facial expression between VR players through their avatar. We describe our current prototype system and how it could be assimilated into a system for enhancing social VR experience. Two social VR games were created for a preliminary user study. We discuss our findings from the user study, potential games for this system, and future directions for this research.

-

Sharing and Augmenting Emotion in Collaborative Mixed Reality

Hart, J. D., Piumsomboon, T., Lee, G., & Billinghurst, M.

Hart, J. D., Piumsomboon, T., Lee, G., & Billinghurst, M. (2018, October). Sharing and Augmenting Emotion in Collaborative Mixed Reality. In 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 212-213). IEEE.

@inproceedings{hart2018sharing,

title={Sharing and Augmenting Emotion in Collaborative Mixed Reality},

author={Hart, Jonathon D and Piumsomboon, Thammathip and Lee, Gun and Billinghurst, Mark},

booktitle={2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)},

pages={212--213},

year={2018},

organization={IEEE}

}

We present a concept of emotion sharing and augmentation for collaborative mixed reality. To depict the ideal use case of such a system, we give two example scenarios. We describe our prototype system for capturing and augmenting emotion through facial expression, eye-gaze, voice, and physiological data and sharing them through the users' virtual representations, and discuss future research directions with potential applications.

-

Effects of sharing physiological states of players in a collaborative virtual reality gameplay

Dey, A., Piumsomboon, T., Lee, Y., & Billinghurst, M.

Dey, A., Piumsomboon, T., Lee, Y., & Billinghurst, M. (2017, May). Effects of sharing physiological states of players in a collaborative virtual reality gameplay. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 4045-4056). ACM.

@inproceedings{dey2017effects,

title={Effects of sharing physiological states of players in a collaborative virtual reality gameplay},

author={Dey, Arindam and Piumsomboon, Thammathip and Lee, Youngho and Billinghurst, Mark},

booktitle={Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems},

pages={4045--4056},

year={2017},

organization={ACM}

}

Interfaces for collaborative tasks, such as multiplayer games, can enable more effective and enjoyable collaboration. However, in these systems, the emotional states of the users are often not communicated properly due to their remoteness from one another. In this paper, we investigate the effects of showing emotional states of one collaborator to the other during an immersive Virtual Reality (VR) gameplay experience. We created two collaborative immersive VR games that display the real-time heart-rate of one player to the other. The two different games elicited different emotions, one joyous and the other scary. We tested the effects of visualizing heart-rate feedback in comparison with conditions where such feedback was absent. The games had significant main effects on the overall emotional experience.

-

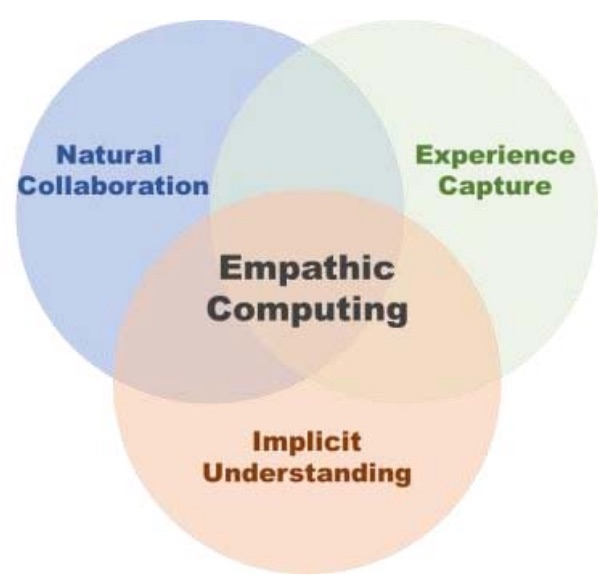

Empathic mixed reality: Sharing what you feel and interacting with what you see

Piumsomboon, T., Lee, Y., Lee, G. A., Dey, A., & Billinghurst, M.

Piumsomboon, T., Lee, Y., Lee, G. A., Dey, A., & Billinghurst, M. (2017, June). Empathic mixed reality: Sharing what you feel and interacting with what you see. In 2017 International Symposium on Ubiquitous Virtual Reality (ISUVR) (pp. 38-41). IEEE.

@inproceedings{piumsomboon2017empathic,

title={Empathic mixed reality: Sharing what you feel and interacting with what you see},

author={Piumsomboon, Thammathip and Lee, Youngho and Lee, Gun A and Dey, Arindam and Billinghurst, Mark},

booktitle={2017 International Symposium on Ubiquitous Virtual Reality (ISUVR)},

pages={38--41},

year={2017},

organization={IEEE}

}

Empathic Computing is a research field that aims to use technology to create deeper shared understanding or empathy between people. At the same time, Mixed Reality (MR) technology provides an immersive experience that can make an ideal interface for collaboration. In this paper, we present some of our research into how MR technology can be applied to creating Empathic Computing experiences. This includes exploring how to share gaze in a remote collaboration between Augmented Reality (AR) and Virtual Reality (VR) environments, using physiological signals to enhance collaborative VR, and supporting interaction through eye-gaze in VR. Early outcomes indicate that as we design collaborative interfaces to enhance empathy between people, this could also benefit the personal experience of the individual interacting with the interface.

-

Mutually Shared Gaze in Augmented Video Conference

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M.

Lee, G., Kim, S., Lee, Y., Dey, A., Piumsomboon, T., Norman, M., & Billinghurst, M. (2017, October). Mutually Shared Gaze in Augmented Video Conference. In Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017 (pp. 79-80). Institute of Electrical and Electronics Engineers Inc.

@inproceedings{lee2017mutually,

title={Mutually Shared Gaze in Augmented Video Conference},

author={Lee, Gun and Kim, Seungwon and Lee, Youngho and Dey, Arindam and Piumsomboon, Thammathip and Norman, Mitchell and Billinghurst, Mark},

booktitle={Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017},

pages={79--80},

year={2017},

organization={Institute of Electrical and Electronics Engineers Inc.}

}

Augmenting video conference with additional visual cues has been studied to improve remote collaboration. A common setup is a person wearing a head-mounted display (HMD) and camera sharing her view of the workspace with a remote collaborator and getting assistance on a real-world task. While this configuration has been extensively studied, there has been little research on how sharing gaze cues might affect the collaboration. This research investigates how sharing gaze in both directions between a local worker and remote helper affects the collaboration and communication. We developed a prototype system that shares the eye gaze of both users, and conducted a user study. Preliminary results showed that sharing gaze significantly improves the awareness of each other's focus, hence improving collaboration.

-

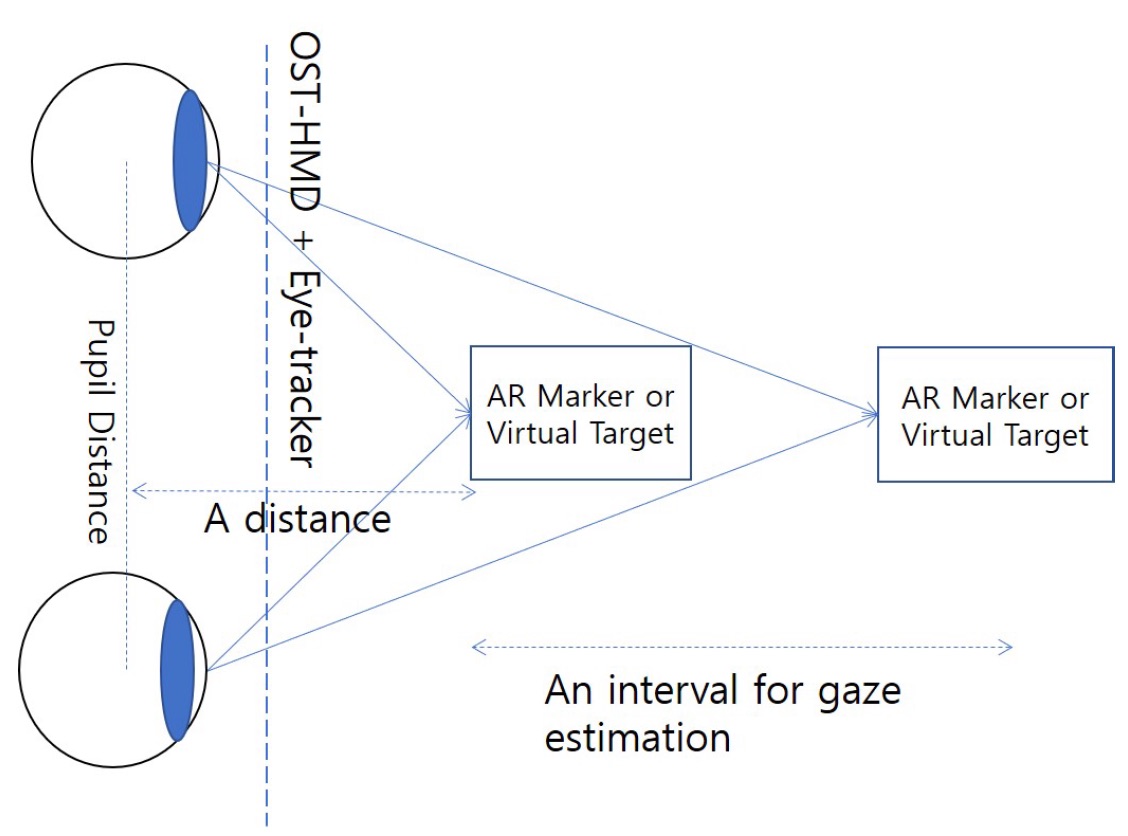

A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M.

Lee, Y., Piumsomboon, T., Ens, B., Lee, G., Dey, A., & Billinghurst, M. (2017, November). A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs. In Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos (pp. 1-2). Eurographics Association.

@inproceedings{lee2017gaze,

title={A gaze-depth estimation technique with an implicit and continuous data acquisition for OST-HMDs},

author={Lee, Youngho and Piumsomboon, Thammathip and Ens, Barrett and Lee, Gun and Dey, Arindam and Billinghurst, Mark},

booktitle={Proceedings of the 27th International Conference on Artificial Reality and Telexistence and 22nd Eurographics Symposium on Virtual Environments: Posters and Demos},

pages={1--2},

year={2017},

organization={Eurographics Association}

}

The rapid development of machine learning algorithms can be leveraged for potential software solutions in many domains, including techniques for depth estimation of human eye gaze. In this paper, we propose an implicit and continuous data acquisition method for 3D gaze depth estimation for an optical see-through head-mounted display (OST-HMD) equipped with an eye tracker. Our method constantly monitors and generates user gaze data for training our machine learning algorithm. The gaze data acquired through the eye tracker include the inter-pupillary distance (IPD) and the gaze distance to the real and virtual target for each eye.

-

Exploring Mixed-Scale Gesture Interaction

Ens, B., Quigley, A. J., Yeo, H. S., Irani, P., Piumsomboon, T., & Billinghurst, M.

Ens, B., Quigley, A. J., Yeo, H. S., Irani, P., Piumsomboon, T., & Billinghurst, M. (2017). Exploring mixed-scale gesture interaction. SA'17 SIGGRAPH Asia 2017 Posters.

@article{ens2017exploring,

title={Exploring mixed-scale gesture interaction},

author={Ens, Barrett and Quigley, Aaron John and Yeo, Hui Shyong and Irani, Pourang and Piumsomboon, Thammathip and Billinghurst, Mark},

journal={SA'17 SIGGRAPH Asia 2017 Posters},

year={2017},

publisher={ACM}

}

This paper presents ongoing work toward a design exploration for combining microgestures with other types of gestures within the greater lexicon of gestures for computer interaction. We describe three prototype applications that show various facets of this multi-dimensional design space. These applications portray various tasks on a HoloLens Augmented Reality display, using different combinations of wearable sensors.

-

Multi-Scale Gestural Interaction for Augmented Reality

Ens, B., Quigley, A., Yeo, H. S., Irani, P., & Billinghurst, M.

Ens, B., Quigley, A., Yeo, H. S., Irani, P., & Billinghurst, M. (2017, November). Multi-scale gestural interaction for augmented reality. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 11). ACM.

@inproceedings{ens2017multi,

title={Multi-scale gestural interaction for augmented reality},

author={Ens, Barrett and Quigley, Aaron and Yeo, Hui-Shyong and Irani, Pourang and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={11},

year={2017},

organization={ACM}

}

We present a multi-scale gestural interface for augmented reality applications. With virtual objects, gestural interactions such as pointing and grasping can be convenient and intuitive; however, they are imprecise, socially awkward, and susceptible to fatigue. Our prototype application uses multiple sensors to detect gestures from both arm and hand motions (macro-scale) and finger gestures (micro-scale). Micro-gestures can provide precise input through a belt-worn sensor configuration, with the hand in a relaxed posture. We present an application that combines direct manipulation with microgestures for precise interaction, beyond the capabilities of direct manipulation alone.

-

Exploring enhancements for remote mixed reality collaboration

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M.

Piumsomboon, T., Dey, A., Ens, B., Lee, Y., Lee, G., & Billinghurst, M. (2017, November). Exploring enhancements for remote mixed reality collaboration. In SIGGRAPH Asia 2017 Mobile Graphics & Interactive Applications (p. 16). ACM.

@inproceedings{piumsomboon2017exploring,

title={Exploring enhancements for remote mixed reality collaboration},

author={Piumsomboon, Thammathip and Dey, Arindam and Ens, Barrett and Lee, Youngho and Lee, Gun and Billinghurst, Mark},

booktitle={SIGGRAPH Asia 2017 Mobile Graphics \& Interactive Applications},

pages={16},

year={2017},

organization={ACM}

}

In this paper, we explore techniques for enhancing remote Mixed Reality (MR) collaboration in terms of communication and interaction. We created CoVAR, an MR system for remote collaboration between Augmented Reality (AR) and Augmented Virtuality (AV) users. Awareness cues and an AV-Snap-to-AR interface were proposed for enhancing communication. Collaborative natural interaction and AV-User-Body-Scaling were implemented for enhancing interaction. We conducted an exploratory study examining the awareness cues and the collaborative gaze, and the results showed the benefits of the proposed techniques for enhancing communication and interaction.

-

Manipulating Avatars for Enhanced Communication in Extended Reality

Jonathon Hart, Thammathip Piumsomboon, Gun A. Lee, Ross T. Smith, Mark Billinghurst.

Hart, J. D., Piumsomboon, T., Lee, G. A., Smith, R. T., & Billinghurst, M. (2021, May). Manipulating Avatars for Enhanced Communication in Extended Reality. In 2021 IEEE International Conference on Intelligent Reality (ICIR) (pp. 9-16). IEEE.

@inproceedings{hart2021manipulating,

title={Manipulating Avatars for Enhanced Communication in Extended Reality},

author={Hart, Jonathon Derek and Piumsomboon, Thammathip and Lee, Gun A and Smith, Ross T and Billinghurst, Mark},

booktitle={2021 IEEE International Conference on Intelligent Reality (ICIR)},

pages={9--16},

year={2021},

organization={IEEE}

}

Avatars are common virtual representations used in Extended Reality (XR) to support interaction and communication between remote collaborators. Recent advancements in wearable displays provide features such as eye and face tracking, enabling avatars to express non-verbal cues in XR. The research in this paper investigates the impact of avatar visualization on Social Presence and users’ preference by simulating face tracking in an asymmetric XR remote collaboration between a desktop user and a Virtual Reality (VR) user. Our study was conducted between pairs of participants, one on a laptop computer supporting face tracking and the other immersed in VR, experiencing different visualization conditions. They worked together to complete an island survival task. We found that the users preferred 3D avatars with facial expressions placed in the scene, compared to 2D screen-attached avatars without facial expressions. Participants felt that the presence of the collaborator’s avatar improved overall communication, yet Social Presence was not significantly different between conditions as they mainly relied on audio for communication.